- 1、[CV] Rethinking “Batch” in BatchNorm

- 2、[AI] Texture Generation with Neural Cellular Automata

- 3、[LG] Evading the Simplicity Bias: Training a Diverse Set of Models Discovers Solutions with Superior OOD Generalization

- 4、[LG] Deep physical neural networks enabled by a backpropagation algorithm for arbitrary physical systems

- 5、[CV] MOS: Towards Scaling Out-of-distribution Detection for Large Semantic Space

- [LG] Physics-informed attention-based neural network for solving non-linear partial differential equations

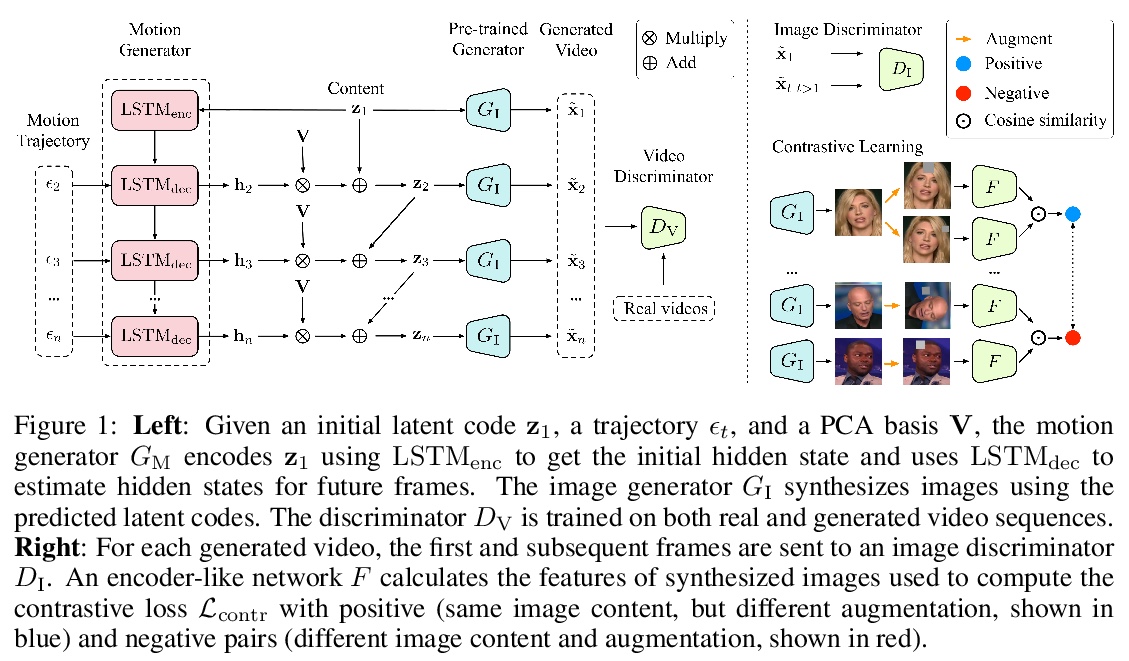

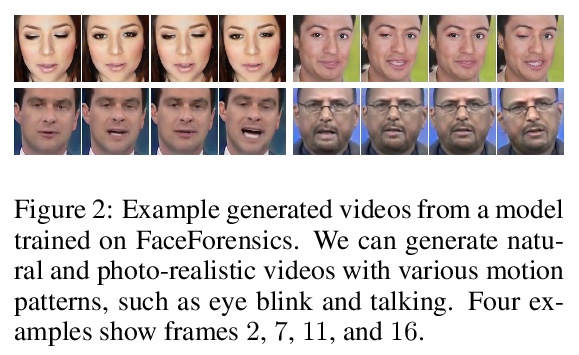

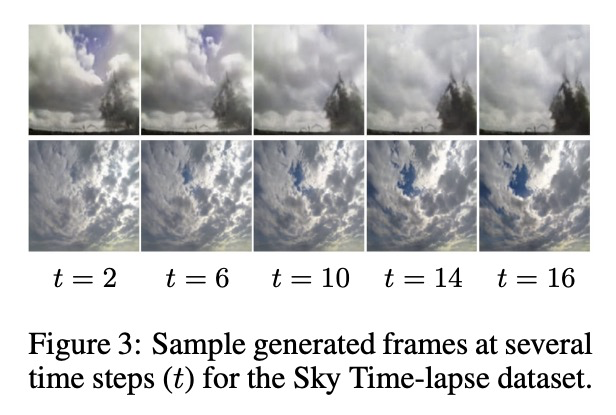

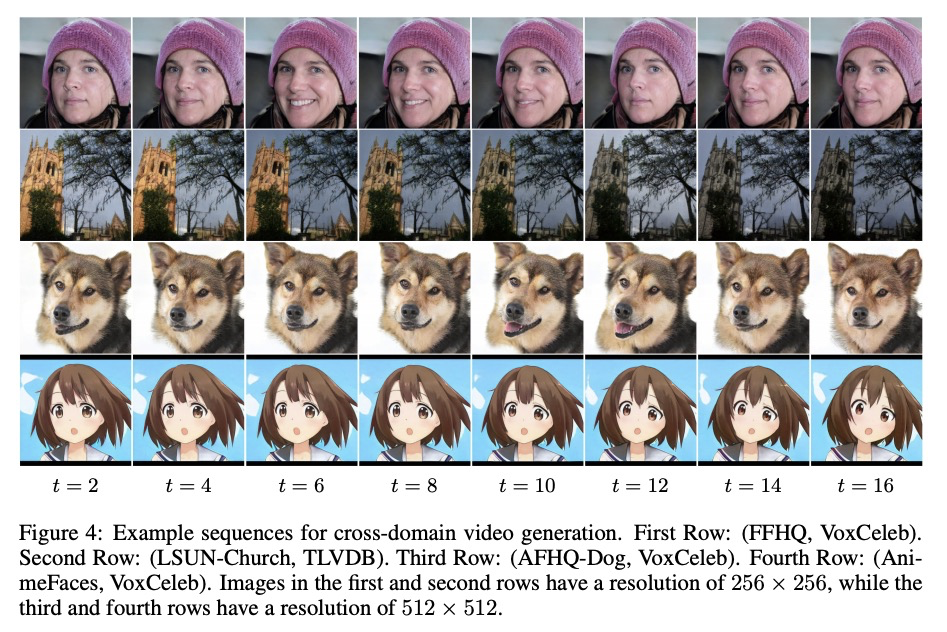

- [CV] A Good Image Generator Is What You Need for High-Resolution Video Synthesis

- [CL] High-performance symbolic-numerics via multiple dispatch

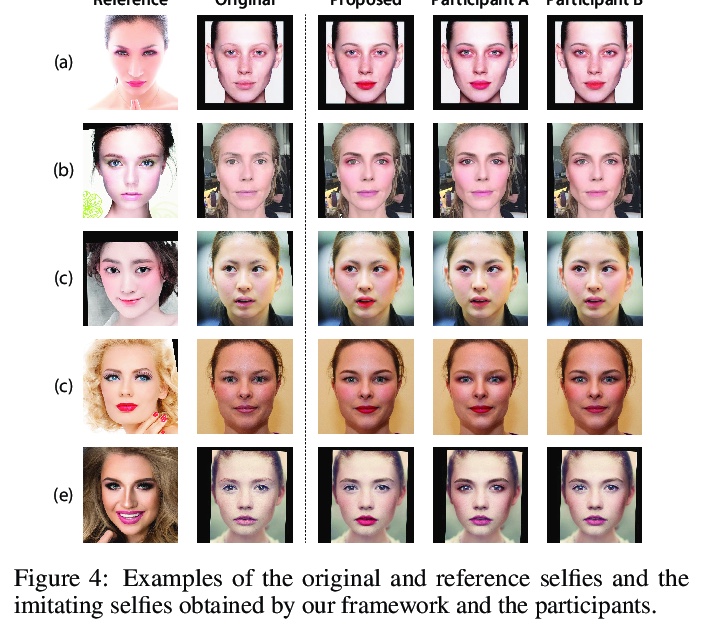

- [LG] Tool- and Domain-Agnostic Parameterization of Style Transfer Effects Leveraging Pretrained Perceptual Metrics

LG - Machine Learning, CV - Computer Vision, CL - Computation and Language, AS - Audio and Speech, RO - Robotics

1、[CV] Rethinking “Batch” in BatchNorm

Y Wu, J Johnson

[Facebook AI Research]

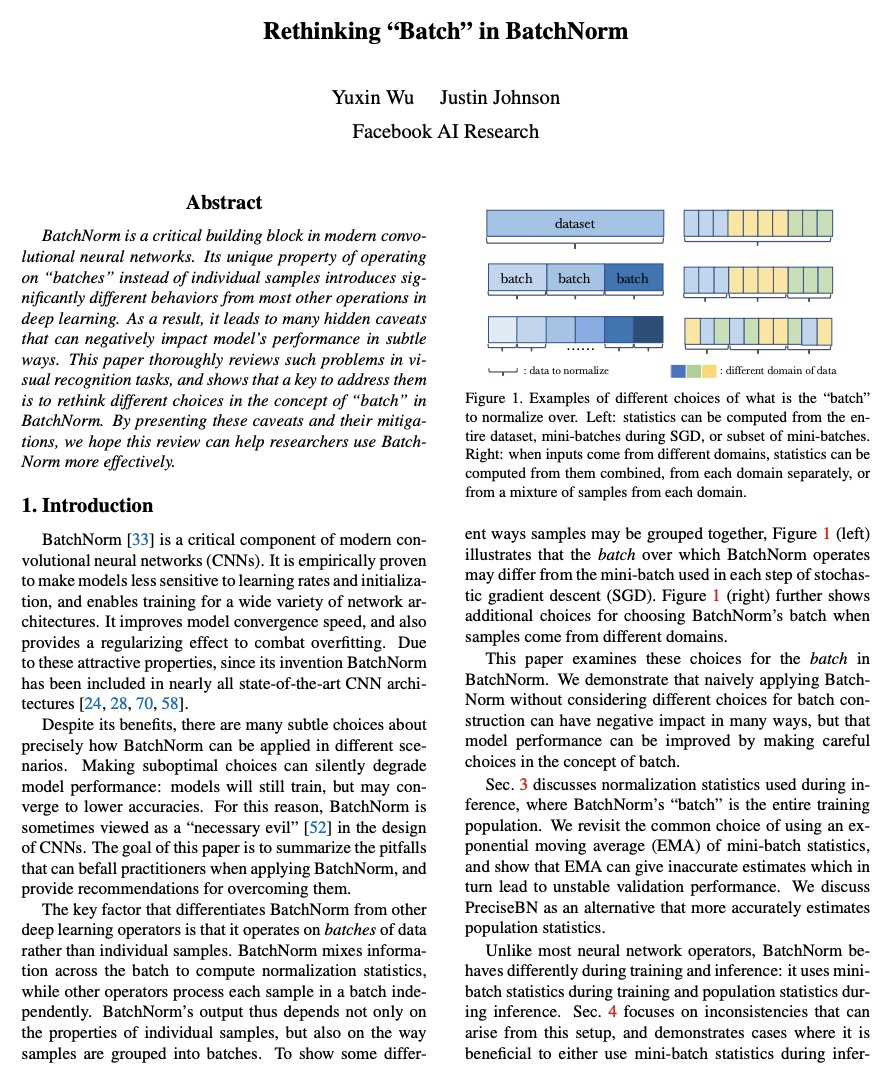

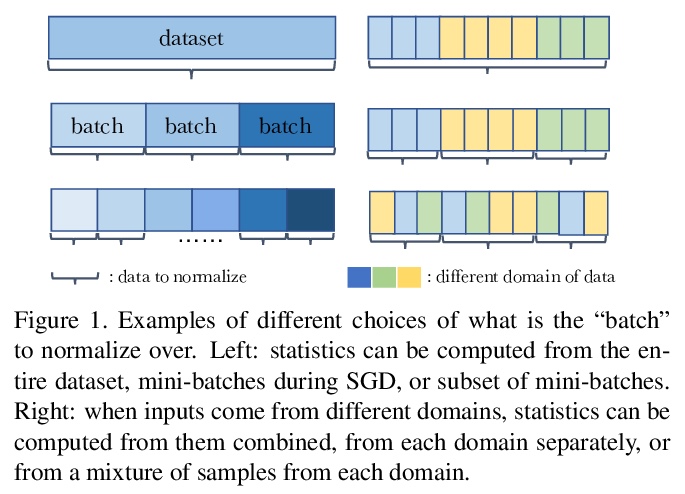

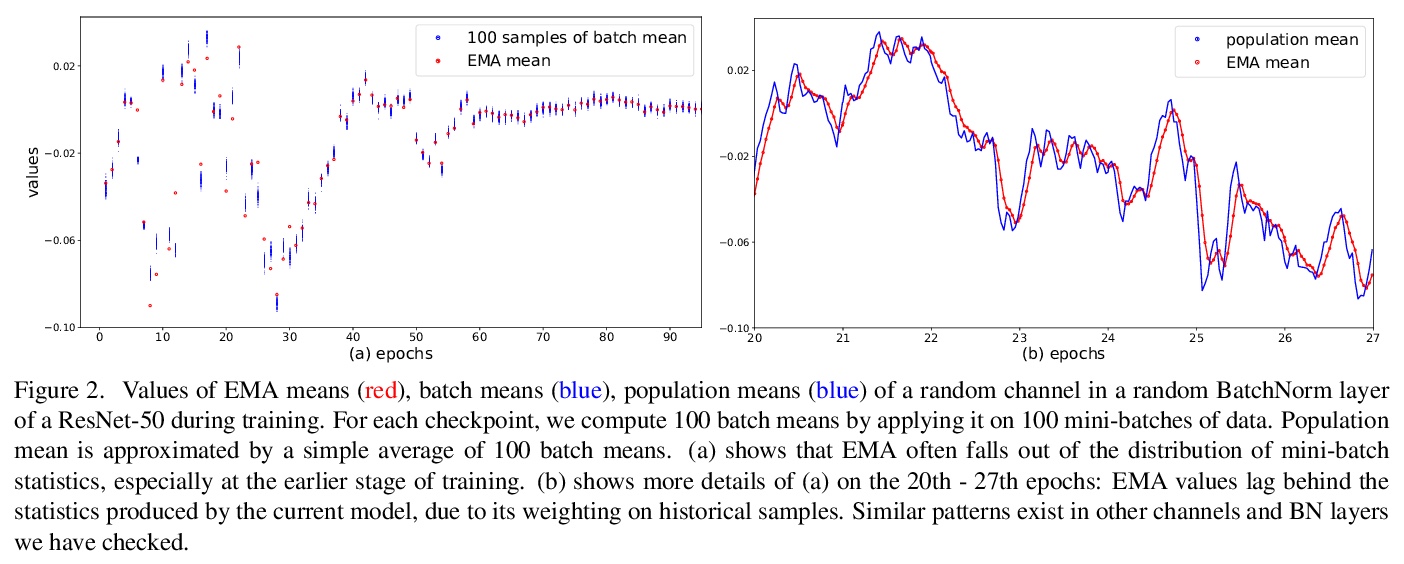

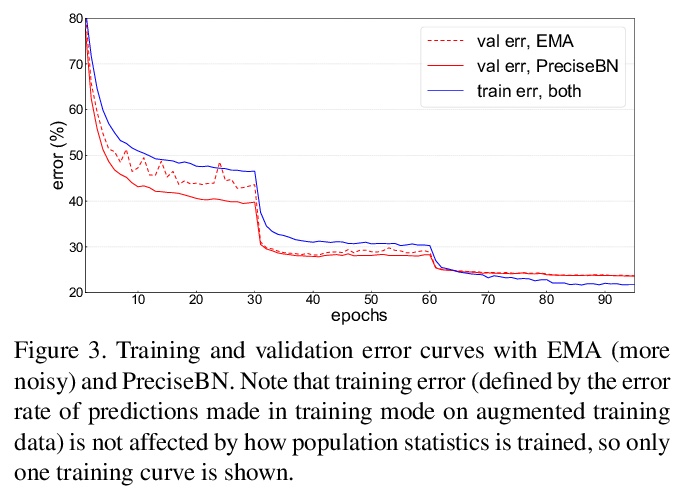

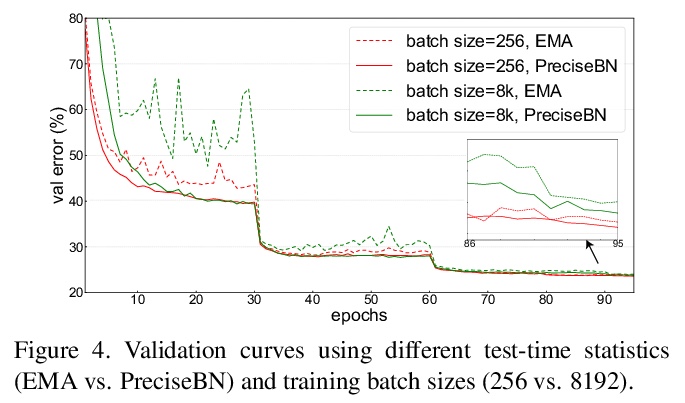

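Rethinking the “batch” in BatchNorm. Batch normalization (BatchNorm) is a key building block of modern convolutional neural networks. Unlike most other operations in deep learning, it operates on batches rather than individual samples, which leads to many non-obvious pitfalls that can subtly hurt model performance. This paper thoroughly reviews these problems in visual recognition tasks and shows that the key to addressing them is to rethink the different choices behind the concept of “batch” in BatchNorm. By presenting these caveats and their mitigations, the authors hope to help researchers use BatchNorm more effectively.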

BatchNorm is a critical building block in modern convolutional neural networks. Its unique property of operating on “batches” instead of individual samples introduces significantly different behaviors from most other operations in deep learning. As a result, it leads to many hidden caveats that can negatively impact model’s performance in subtle ways. This paper thoroughly reviews such problems in visual recognition tasks, and shows that a key to address them is to rethink different choices in the concept of “batch” in BatchNorm. By presenting these caveats and their mitigations, we hope this review can help researchers use BatchNorm more effectively.
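To make the batch dependence concrete, here is a minimal sketch (not code from the paper) of normalization over scalar activations. The function name `batch_norm`, the `stats` argument, and the toy numbers are assumptions chosen for illustration. It shows that the same sample is normalized differently depending on its batch mates, while fixed statistics remove that dependence.

```python
from statistics import fmean, pvariance

def batch_norm(batch, stats=None, eps=1e-5):
    """Normalize a list of scalar activations.

    stats=None    -> use the mean/variance of this batch (training-style).
    stats=(m, v)  -> use fixed statistics (inference-style).
    """
    if stats is None:
        mean = fmean(batch)
        var = pvariance(batch, mu=mean)
    else:
        mean, var = stats
    return [(x - mean) / (var + eps) ** 0.5 for x in batch]

# The same sample (value 1.0) placed in two different batches.
out_a = batch_norm([1.0, 2.0, 3.0])[0]
out_b = batch_norm([1.0, 1.0, 4.0])[0]

# With fixed statistics the output no longer depends on the other samples.
fixed_a = batch_norm([1.0, 2.0, 3.0], stats=(5.0, 4.0))[0]
fixed_b = batch_norm([1.0, 1.0, 4.0], stats=(5.0, 4.0))[0]

print(out_a, out_b)      # differ: batch statistics leak across samples
print(fixed_a, fixed_b)  # identical
```

Which statistics are used, and over which set of samples they are computed, is exactly the kind of choice the review asks readers to make deliberately.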

https://weibo.com/1402400261/KgJBk63Zx

2、[AI] Texture Generation with Neural Cellular Automata

A Mordvintsev, E Niklasson, E Randazzo

[Google Research]

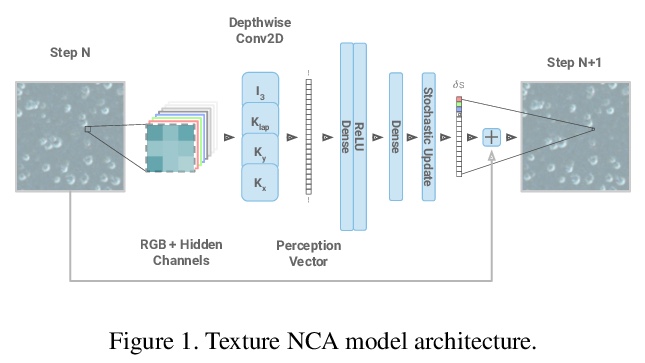

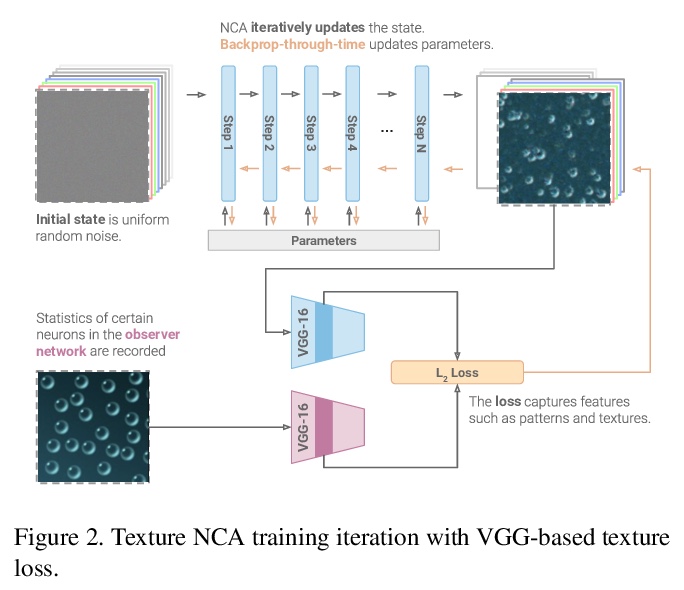

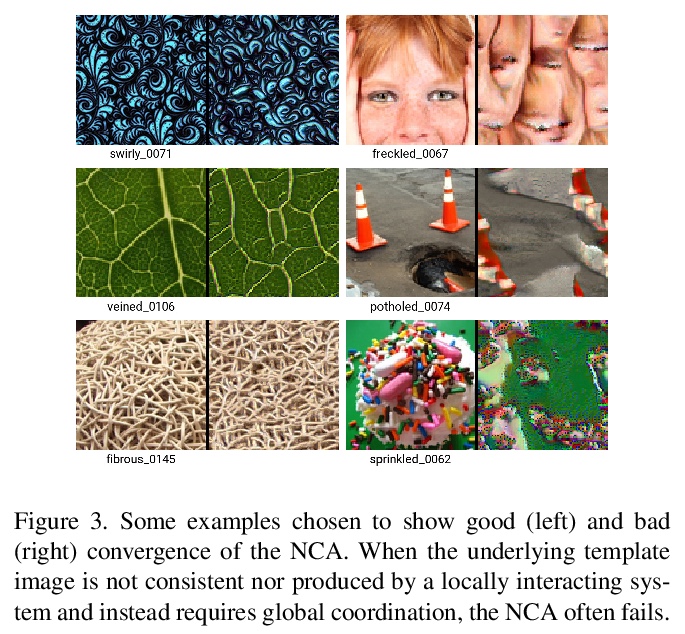

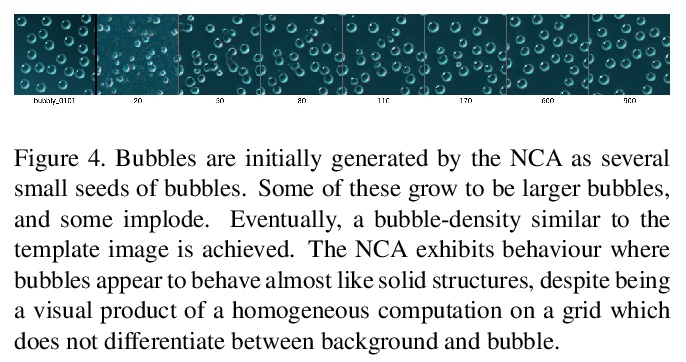

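Texture generation with Neural Cellular Automata. Neural Cellular Automata (NCA) have shown a remarkable ability to learn the rules needed to “grow” images, classify morphologies, segment images, and perform general computation such as path-finding; the inductive prior they introduce lends itself to texture generation. Natural textures are often produced by variants of locally interacting reaction-diffusion systems, and human-made textures are likewise often produced locally (e.g., weaving) or by rules with local dependencies (regular grids or geometric patterns). The paper demonstrates learning a texture generator from a single template image; the generation method is highly parallel, converges quickly, produces high-fidelity output, and requires only minimal assumptions about the underlying state manifold. It also studies useful and interesting properties of the learned models, such as non-stationary dynamics and inherent robustness to damage, and argues that the behavior exhibited by the NCA model is a learned, distributed, local algorithm for generating textures, which sets it apart from existing texture-generation work.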

Neural Cellular Automata (NCA) have shown a remarkable ability to learn the required rules to “grow” images [20], classify morphologies [26] and segment images [28], as well as to do general computation such as path-finding [10]. We believe the inductive prior they introduce lends itself to the generation of textures. Textures in the natural world are often generated by variants of locally interacting reaction-diffusion systems. Human-made textures are likewise often generated in a local manner (textile weaving, for instance) or using rules with local dependencies (regular grids or geometric patterns). We demonstrate learning a texture generator from a single template image, with the generation method being embarrassingly parallel, exhibiting quick convergence and high fidelity of output, and requiring only some minimal assumptions around the underlying state manifold. Furthermore, we investigate properties of the learned models that are both useful and interesting, such as non-stationary dynamics and an inherent robustness to damage. Finally, we make qualitative claims that the behaviour exhibited by the NCA model is a learned, distributed, local algorithm to generate a texture, setting our method apart from existing work on texture generation. We discuss the advantages of such a paradigm.
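The "local, parallel" character of such a model can be sketched as below. This is a toy illustration, not the authors' architecture: the single-channel state, the 3x3 wrap-around neighborhood, the one-unit tanh rule, and the random `weights` standing in for trained parameters are all assumptions, and no training loop is shown.

```python
import math
import random

def nca_step(grid, weights, bias, rate=0.1):
    """One synchronous update: every cell reads only its 3x3 neighborhood."""
    h, w = len(grid), len(grid[0])
    new = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Perception: the 9 neighboring states, with wrap-around borders.
            patch = [grid[(y + dy) % h][(x + dx) % w]
                     for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            # Update rule shared by all cells (here a single tanh unit).
            pre = sum(wt * p for wt, p in zip(weights, patch)) + bias
            new[y][x] = grid[y][x] + rate * math.tanh(pre)
    return new

rng = random.Random(0)
weights = [rng.uniform(-1, 1) for _ in range(9)]  # stand-in for trained parameters
grid = [[rng.random() for _ in range(8)] for _ in range(8)]
for _ in range(10):
    grid = nca_step(grid, weights, bias=0.0)
```

Because each cell's update depends only on its neighbors and the rule is shared, all cells can be updated independently within a step, which is what makes the scheme embarrassingly parallel.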

https://weibo.com/1402400261/KgJGmbIOr

3、[LG] Evading the Simplicity Bias: Training a Diverse Set of Models Discovers Solutions with Superior OOD Generalization

D Teney, E Abbasnejad, S Lucey, A v d Hengel

[Idiap Research Institute & University of Adelaide]

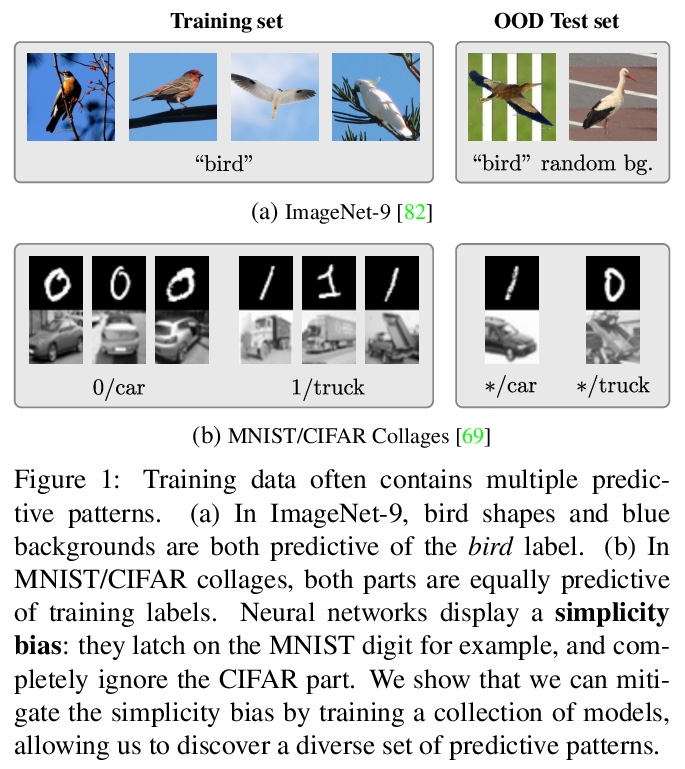

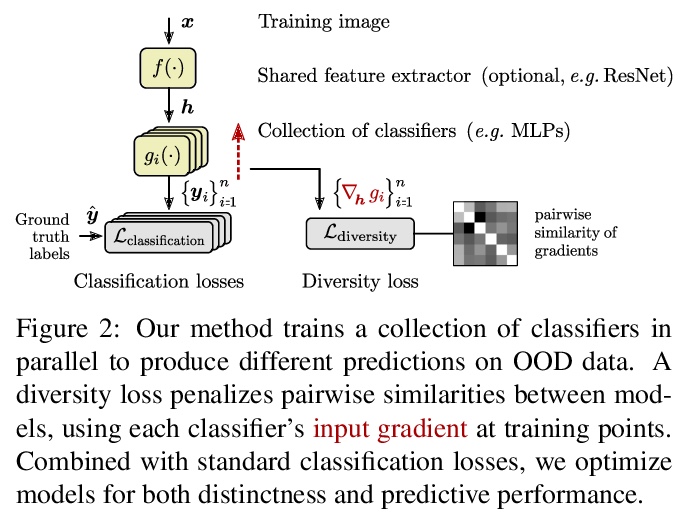

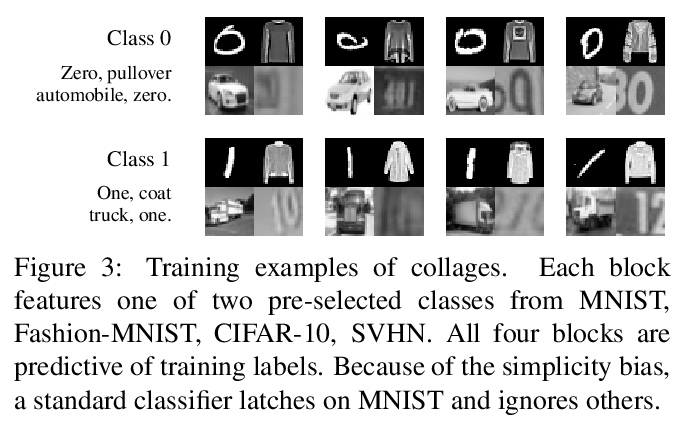

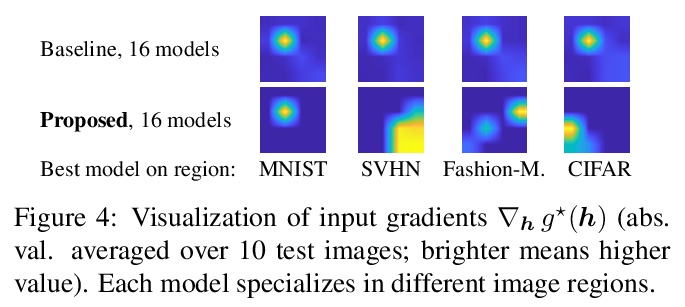

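Evading the simplicity bias: training a diverse set of models discovers solutions with superior OOD generalization. Neural networks trained with SGD were recently shown to rely preferentially on linearly predictive features while ignoring more complex, equally predictive ones. This simplicity bias can explain their lack of robustness out of distribution (OOD). The more complex the task, the more likely it is that statistical artifacts (i.e., selection biases and spurious correlations) are simpler than the mechanisms to be learned. The paper shows that the simplicity bias can be mitigated and OOD generalization improved: a set of similar models is trained to fit the data in different ways by penalizing the alignment of their input gradients. Theory and experiments show that this induces the learning of more complex predictive patterns. OOD generalization fundamentally requires information beyond i.i.d. examples, such as multiple training environments, counterfactual examples, or other side information; the approach shows this requirement can be deferred to an independent model-selection stage. It achieves SOTA results in visual recognition on biased data and in generalization across visual domains, and highlights the need to better understand and control inductive biases in deep learning.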

Neural networks trained with SGD were recently shown to rely preferentially on linearly-predictive features and can ignore complex, equally-predictive ones. This simplicity bias can explain their lack of robustness out of distribution (OOD). The more complex the task to learn, the more likely it is that statistical artifacts (i.e. selection biases, spurious correlations) are simpler than the mechanisms to learn. We demonstrate that the simplicity bias can be mitigated and OOD generalization improved. We train a set of similar models to fit the data in different ways using a penalty on the alignment of their input gradients. We show theoretically and empirically that this induces the learning of more complex predictive patterns. OOD generalization fundamentally requires information beyond i.i.d. examples, such as multiple training environments, counterfactual examples, or other side information. Our approach shows that we can defer this requirement to an independent model selection stage. We obtain SOTA results in visual recognition on biased data and generalization across visual domains. The method – the first to evade the simplicity bias – highlights the need for a better understanding and control of inductive biases in deep learning.
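A rough sketch of what "a penalty on the alignment of input gradients" could look like for a pair of models follows. The one-unit model `f(x) = tanh(w . x)`, the use of squared cosine similarity as the alignment measure, and all weights are assumptions for illustration; the paper's exact regularizer may differ.

```python
import math

def input_gradient(w, x):
    """Gradient of f(x) = tanh(w . x) with respect to the input x."""
    s = math.tanh(sum(wi * xi for wi, xi in zip(w, x)))
    return [(1.0 - s * s) * wi for wi in w]

def alignment_penalty(g1, g2, eps=1e-12):
    """Squared cosine similarity between two input gradients (assumed measure)."""
    dot = sum(a * b for a, b in zip(g1, g2))
    n1 = sum(a * a for a in g1)
    n2 = sum(b * b for b in g2)
    return dot * dot / (n1 * n2 + eps)

x = [0.5, -0.2]
w_a = [1.0, 0.0]  # model A relies on feature 0
w_b = [0.9, 0.1]  # model B relies on nearly the same feature
w_c = [0.0, 1.0]  # model C relies on a different feature

high = alignment_penalty(input_gradient(w_a, x), input_gradient(w_b, x))
low = alignment_penalty(input_gradient(w_a, x), input_gradient(w_c, x))
print(high, low)  # near 1 for similar models, 0 for models using different features
```

Adding such a term to the training loss of each pair pushes the models to be sensitive to different input features, which is the sense in which they fit the data "in different ways".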

https://weibo.com/1402400261/KgJKF1yH8

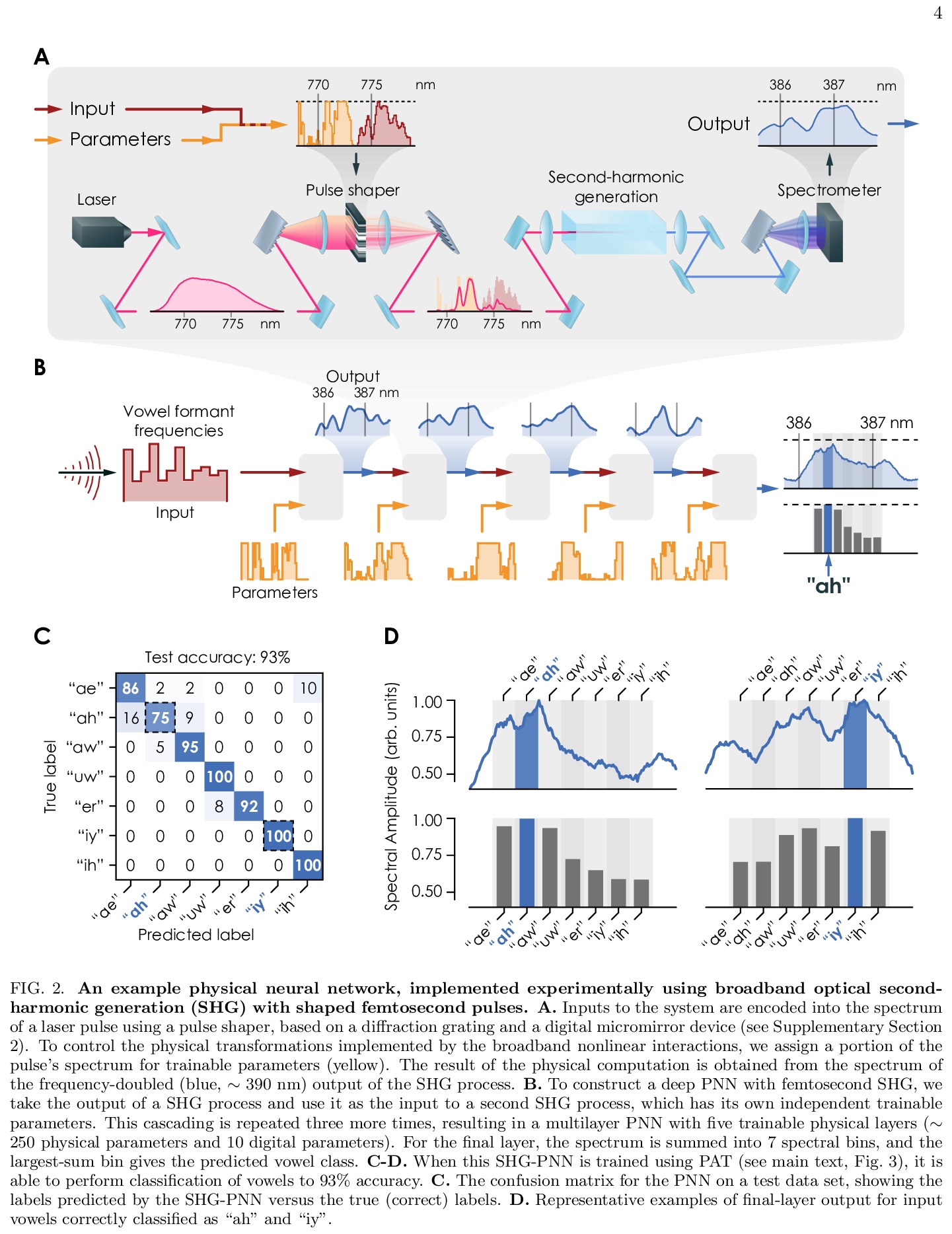

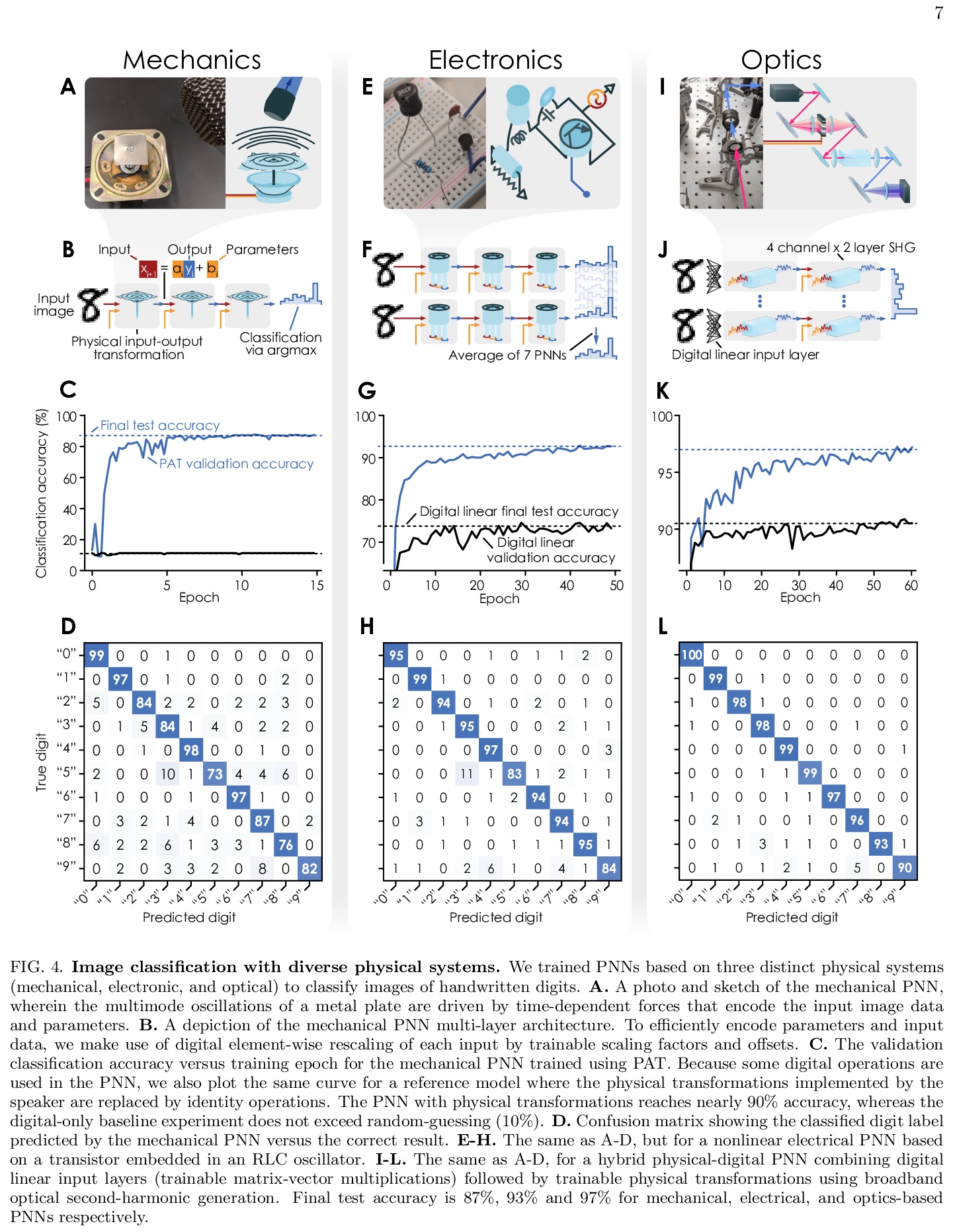

4、[LG] Deep physical neural networks enabled by a backpropagation algorithm for arbitrary physical systems

L G. Wright, T Onodera, M M. Stein, T Wang, D T. Schachter, Z Hu, P L. McMahon

[Cornell University]

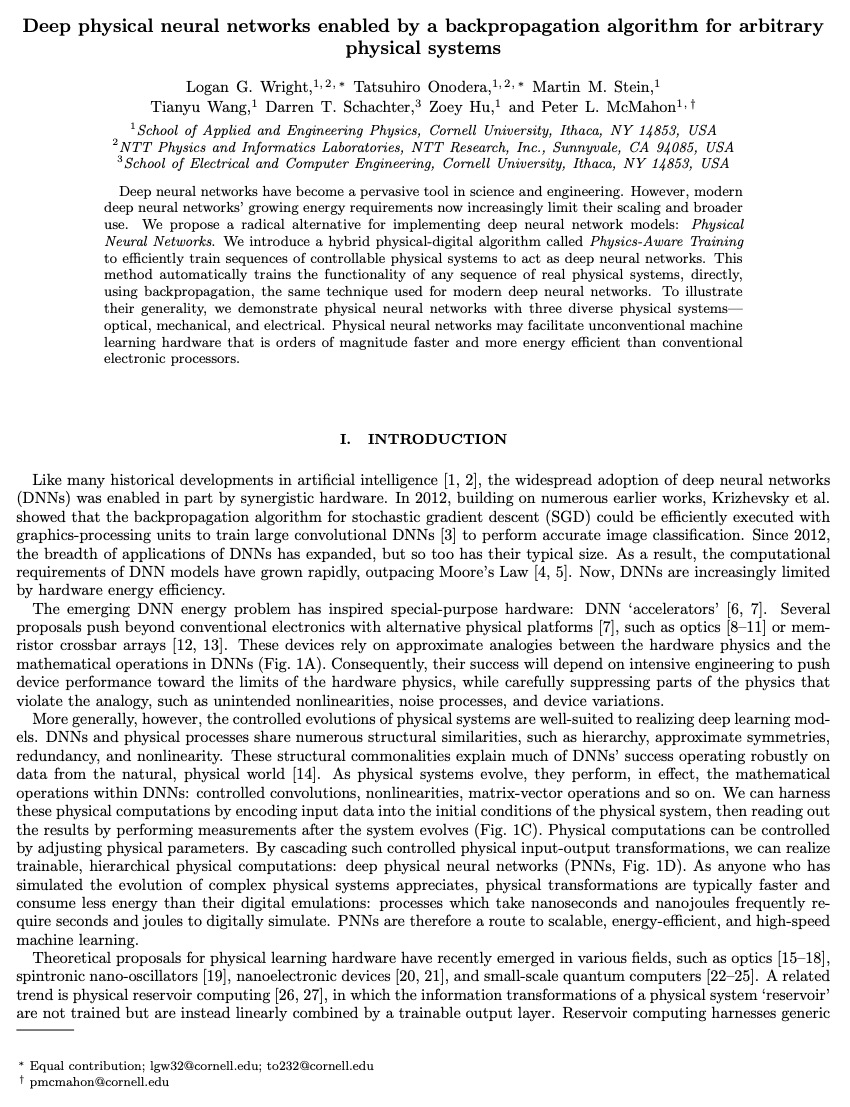

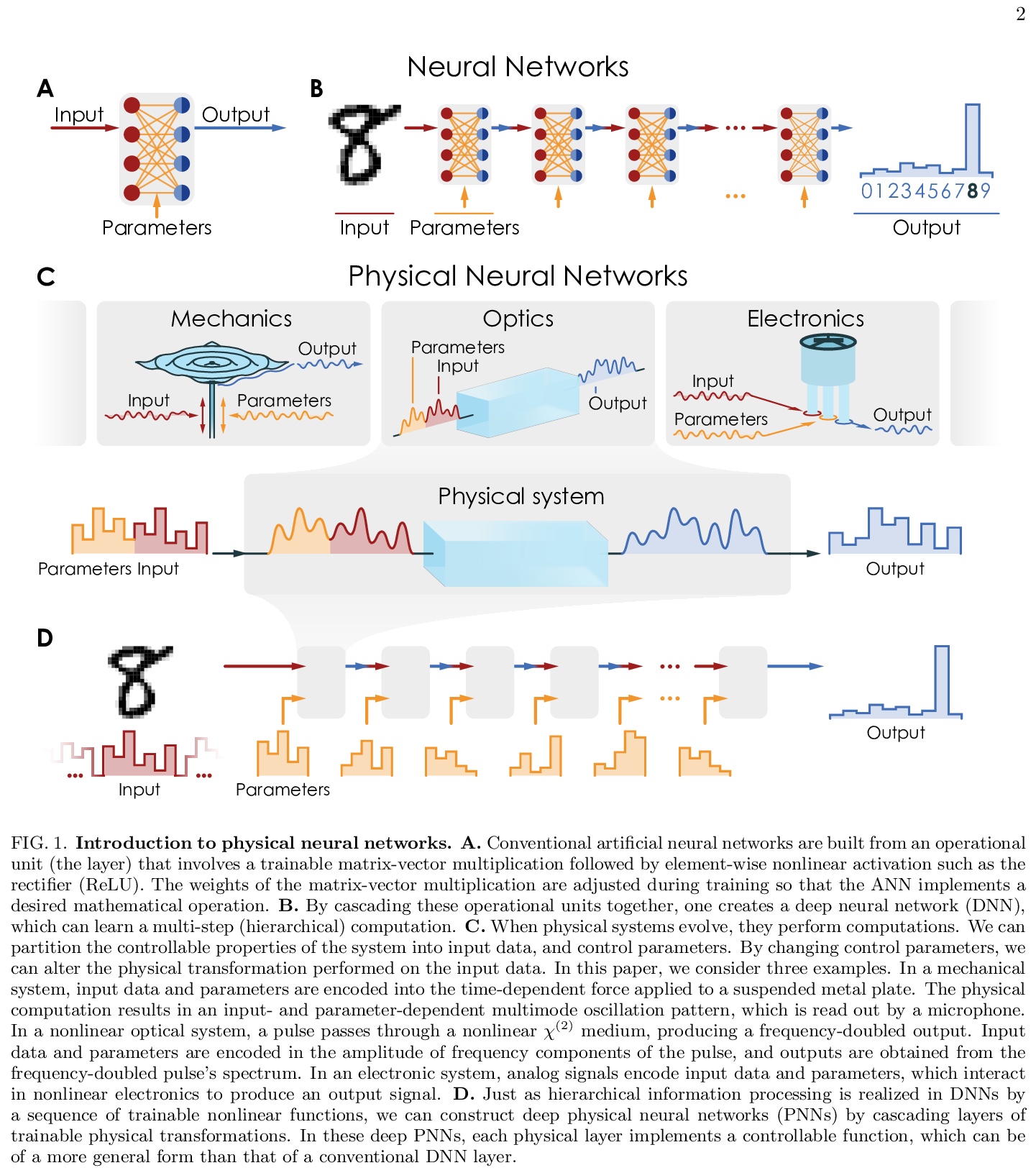

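Deep physical neural networks enabled by a backpropagation algorithm for arbitrary physical systems. Deep neural networks have become a pervasive tool in science and engineering, but their growing energy requirements increasingly limit their scaling and broader use. The paper proposes a radical alternative for implementing deep neural network models: physical neural networks. It introduces a hybrid physical-digital algorithm called Physics-Aware Training to efficiently train sequences of controllable physical systems to act as deep neural networks. The method automatically trains the functionality of any sequence of real physical systems directly with backpropagation, the same technique used for modern deep neural networks. To illustrate its generality, physical neural networks are demonstrated with three different physical systems: optical, mechanical, and electrical. Physical neural networks may enable unconventional machine learning hardware that is orders of magnitude faster and more energy-efficient than conventional electronic processors.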

Deep neural networks have become a pervasive tool in science and engineering. However, modern deep neural networks’ growing energy requirements now increasingly limit their scaling and broader use. We propose a radical alternative for implementing deep neural network models: Physical Neural Networks. We introduce a hybrid physical-digital algorithm called Physics-Aware Training to efficiently train sequences of controllable physical systems to act as deep neural networks. This method automatically trains the functionality of any sequence of real physical systems, directly, using backpropagation, the same technique used for modern deep neural networks. To illustrate their generality, we demonstrate physical neural networks with three diverse physical systems— optical, mechanical, and electrical. Physical neural networks may facilitate unconventional machine learning hardware that is orders of magnitude faster and more energy efficient than conventional electronic processors.
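One way to read "hybrid physical-digital" is that the forward pass is measured on the real system while gradients come from an approximate differentiable model of it. The sketch below illustrates that reading only; the abstract does not spell out this split, so treat it as an assumption. `physical_system` is a made-up stand-in for hardware, and `digital_model_grad` is a deliberately imperfect model of it.

```python
import math

def physical_system(x, theta):
    """Stand-in for a real device, including effects the digital model misses."""
    return 1.05 * math.sin(theta * x) + 0.02

def digital_model_grad(x, theta):
    """d(output)/d(theta) from an imperfect differentiable model y = sin(theta * x)."""
    return x * math.cos(theta * x)

def train(x, target, theta=0.2, lr=0.5, steps=200):
    for _ in range(steps):
        y = physical_system(x, theta)  # forward pass: measured on the "hardware"
        error = y - target             # loss = 0.5 * error ** 2
        # Backward pass: gradient estimated with the digital model.
        theta -= lr * error * digital_model_grad(x, theta)
    return theta

theta = train(x=1.0, target=0.6)
final_error = abs(physical_system(1.0, theta) - 0.6)
print(theta, final_error)
```

In this toy case the loss is computed from the true output, so the controllable parameter still settles where the physical system hits the target even though the model used for gradients is slightly wrong.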

https://weibo.com/1402400261/KgJQE7zSc

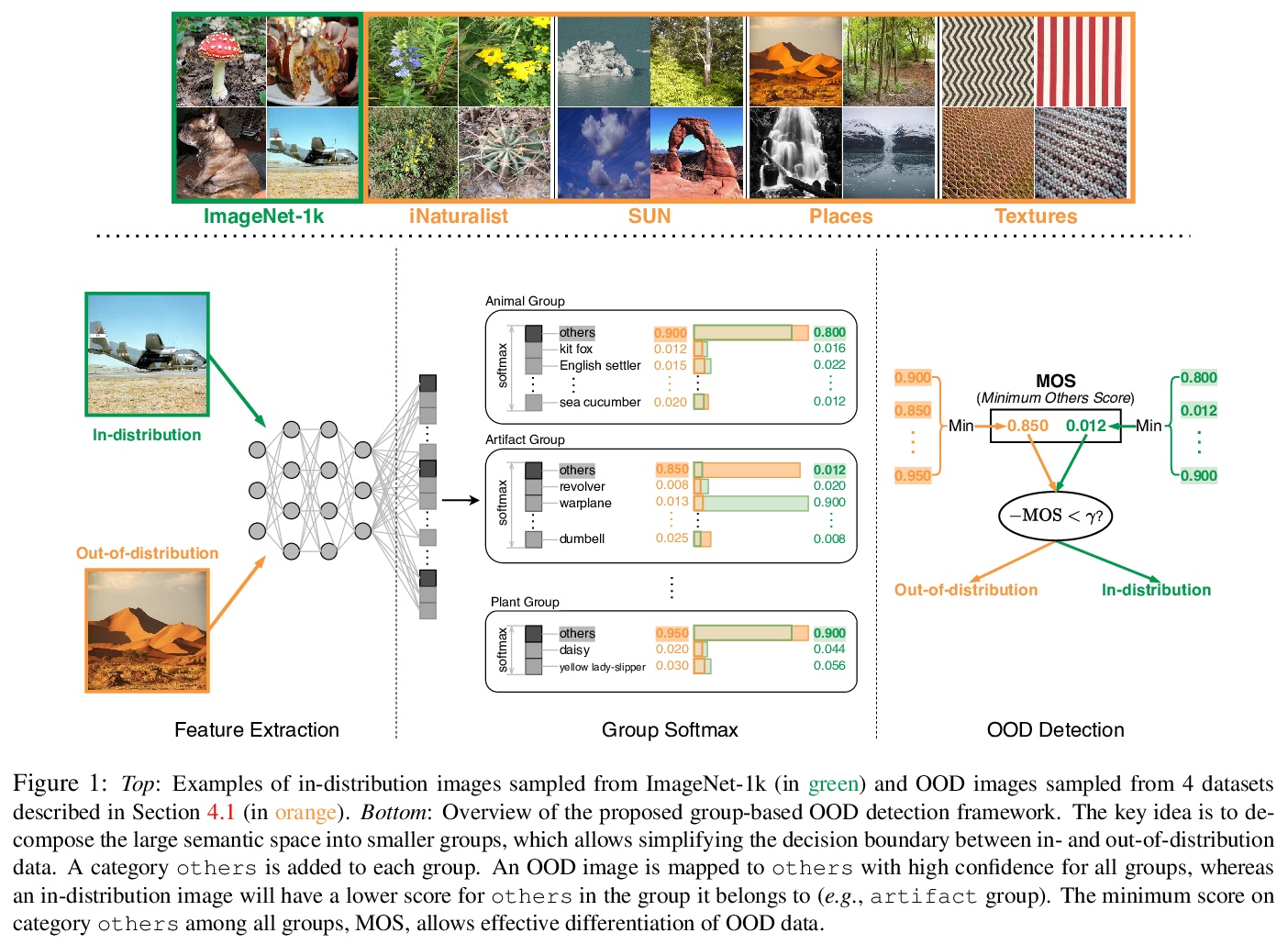

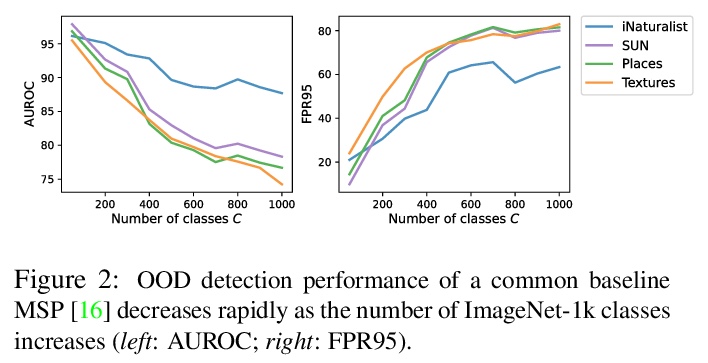

5、[CV] MOS: Towards Scaling Out-of-distribution Detection for Large Semantic Space

R Huang, Y Li

[University of Wisconsin-Madison]

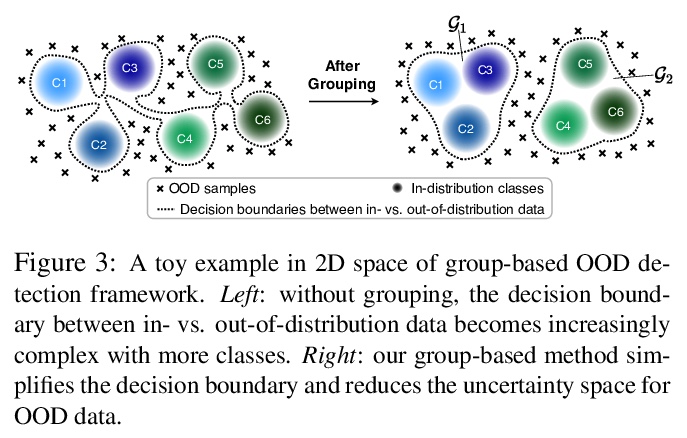

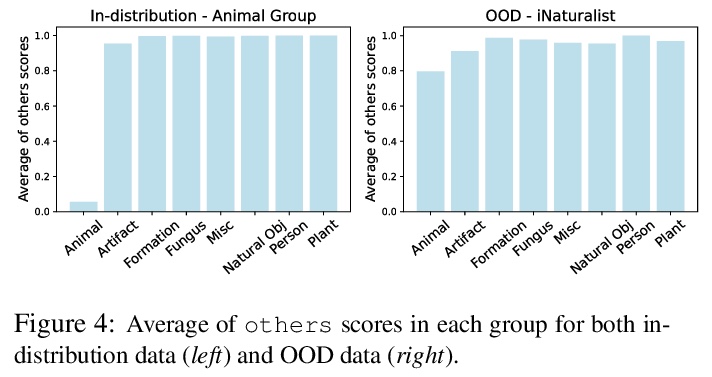

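MOS: towards scaling out-of-distribution detection to large semantic spaces. Detecting out-of-distribution (OOD) inputs is a central challenge for safely deploying machine learning models in the real world. Existing solutions are mainly driven by small, low-resolution datasets with few class labels (e.g., CIFAR), so OOD detection for large-scale image classification remains largely unexplored. The paper bridges this gap with a group-based OOD detection framework and a new OOD scoring function called MOS. The key idea is to decompose the large semantic space into smaller groups of similar concepts, which simplifies the decision boundary between in-distribution and out-of-distribution data and enables effective OOD detection. The method scales to high-dimensional class spaces substantially better than previous approaches. Models trained on ImageNet are evaluated against four carefully curated OOD datasets spanning diverse semantics. MOS establishes state-of-the-art performance, reducing the average FPR95 by 14.33% while achieving a 6x inference speedup over the previous best method.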

Detecting out-of-distribution (OOD) inputs is a central challenge for safely deploying machine learning models in the real world. Existing solutions are mainly driven by small datasets, with low resolution and very few class labels (e.g., CIFAR). As a result, OOD detection for large-scale image classification tasks remains largely unexplored. In this paper, we bridge this critical gap by proposing a group-based OOD detection framework, along with a novel OOD scoring function termed MOS. Our key idea is to decompose the large semantic space into smaller groups with similar concepts, which allows simplifying the decision boundaries between in- vs. out-of-distribution data for effective OOD detection. Our method scales substantially better for high-dimensional class space than previous approaches. We evaluate models trained on ImageNet against four carefully curated OOD datasets, spanning diverse semantics. MOS establishes state-of-the-art performance, reducing the average FPR95 by 14.33% while achieving 6x speedup in inference compared to the previous best method.
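A hedged sketch of group-wise scoring follows. The abstract only states that classes are split into groups; the extra "others" category per group, the layout with "others" at index 0, and the score (negative of the smallest "others" probability) are assumptions based on one reading of the method, and the logits are made up.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def group_ood_score(group_logits):
    """Return a score that is higher for in-distribution inputs.

    group_logits: one logit list per group; index 0 of each list is that
    group's "others" category (an assumed layout for this sketch).
    """
    others = [softmax(logits)[0] for logits in group_logits]
    return -min(others)

# One group confidently claims the input; the other defers to "others".
confident = [[-2.0, 5.0, 0.0], [4.0, 0.0, 0.0]]
# Every group says "others": no group recognizes the input.
unsure = [[3.0, 0.0, 0.0], [3.0, 0.0, 0.0]]

print(group_ood_score(confident) > group_ood_score(unsure))
```

An input would be flagged as OOD when its score falls below a threshold chosen on held-out data; each softmax runs over a small group rather than the full label space.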

https://weibo.com/1402400261/KgJTT6YU7

Other papers worth noting:

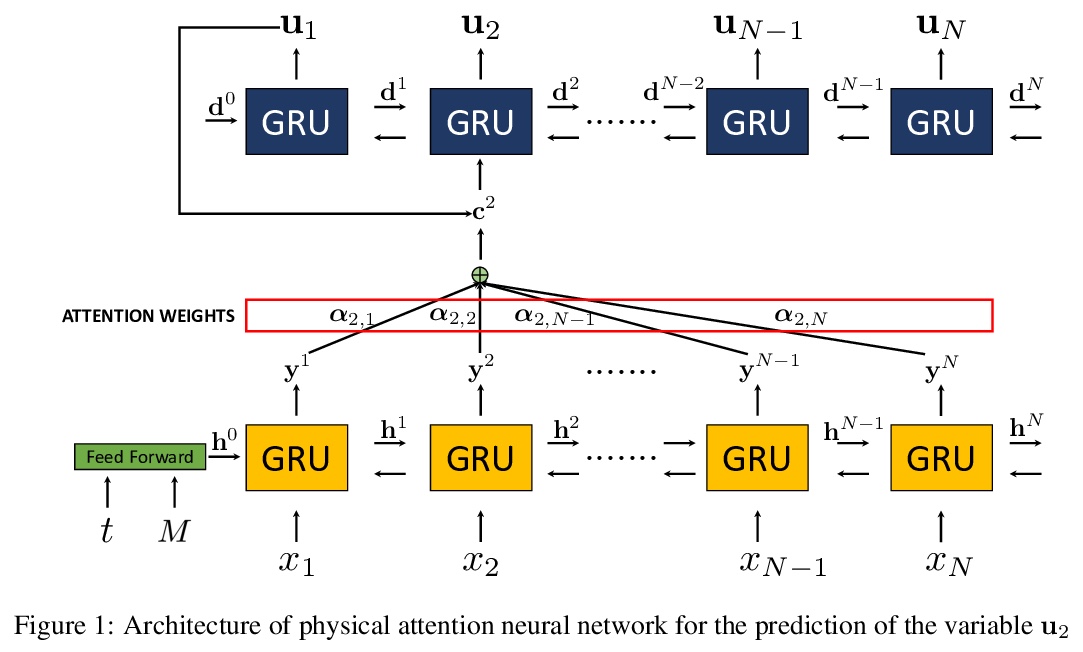

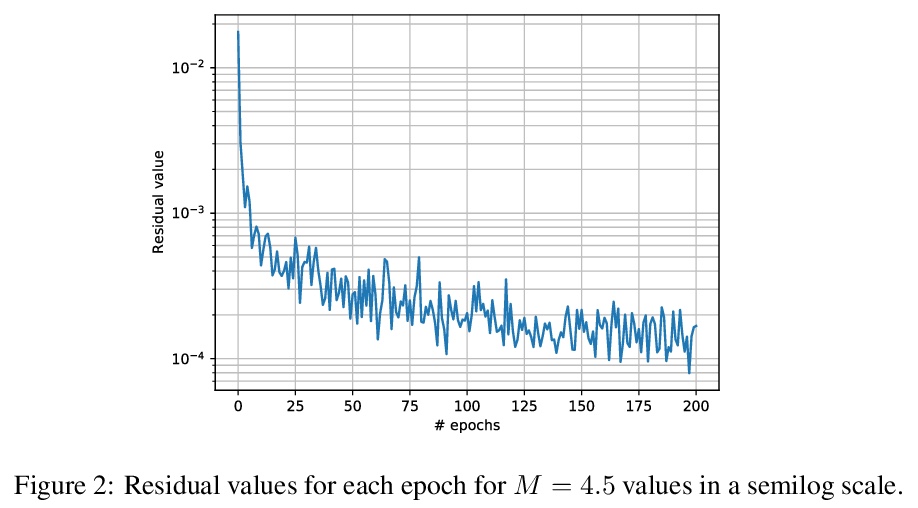

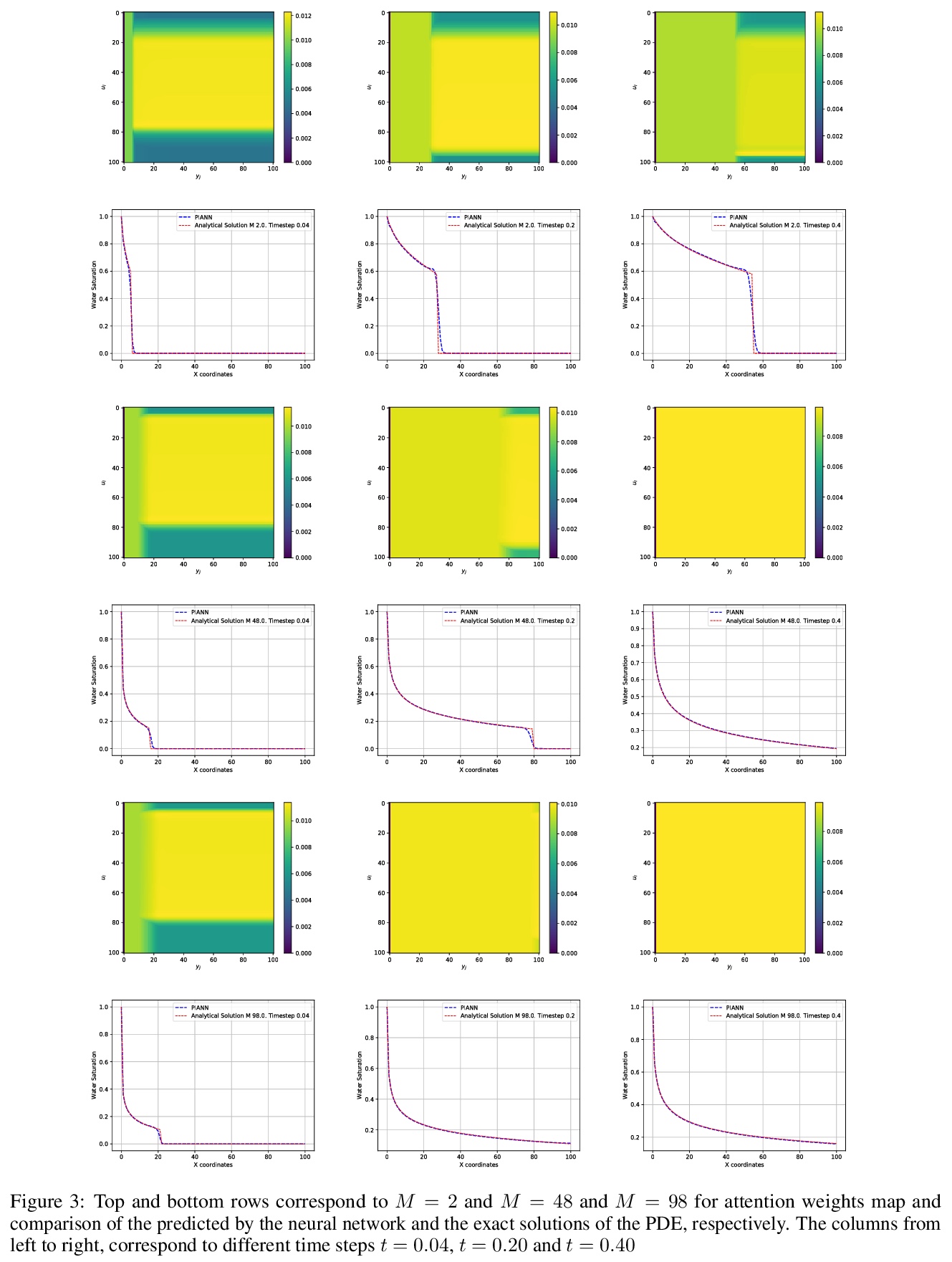

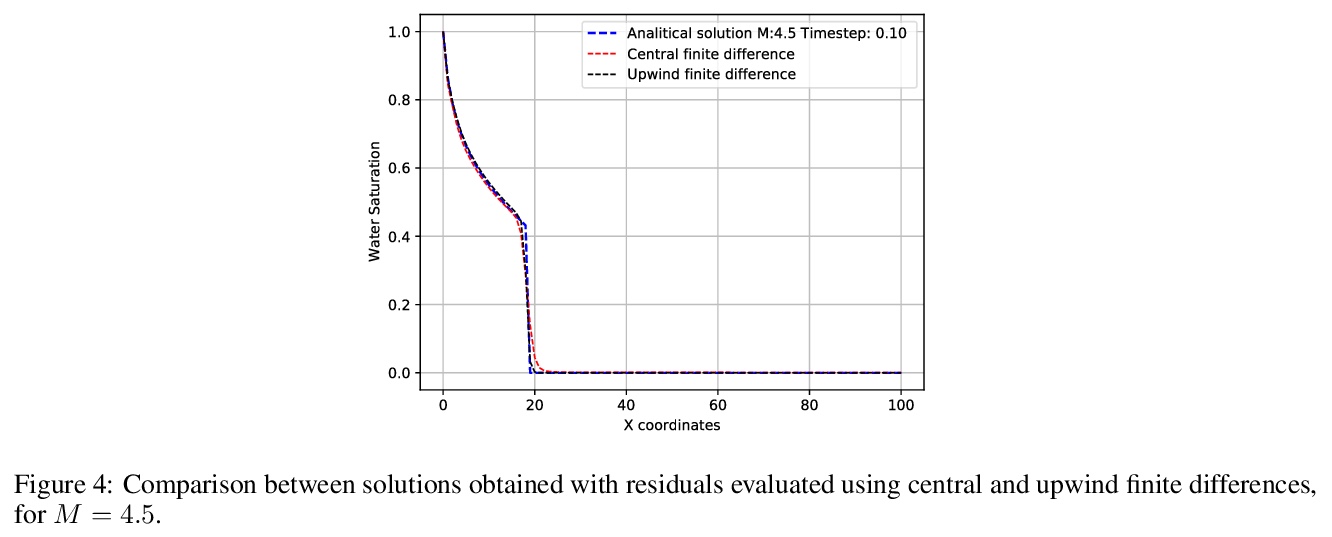

[LG] Physics-informed attention-based neural network for solving non-linear partial differential equations

Solving non-linear partial differential equations with a physics-informed attention-based neural network

R Rodriguez-Torrado, P Ruiz, L Cueto-Felgueroso, M C Green, T Friesen, S Matringe, J Togelius

[OriGen.AI & Universidad Politecnica de Madrid & Hess Corporation]

https://weibo.com/1402400261/KgJXiBLOw

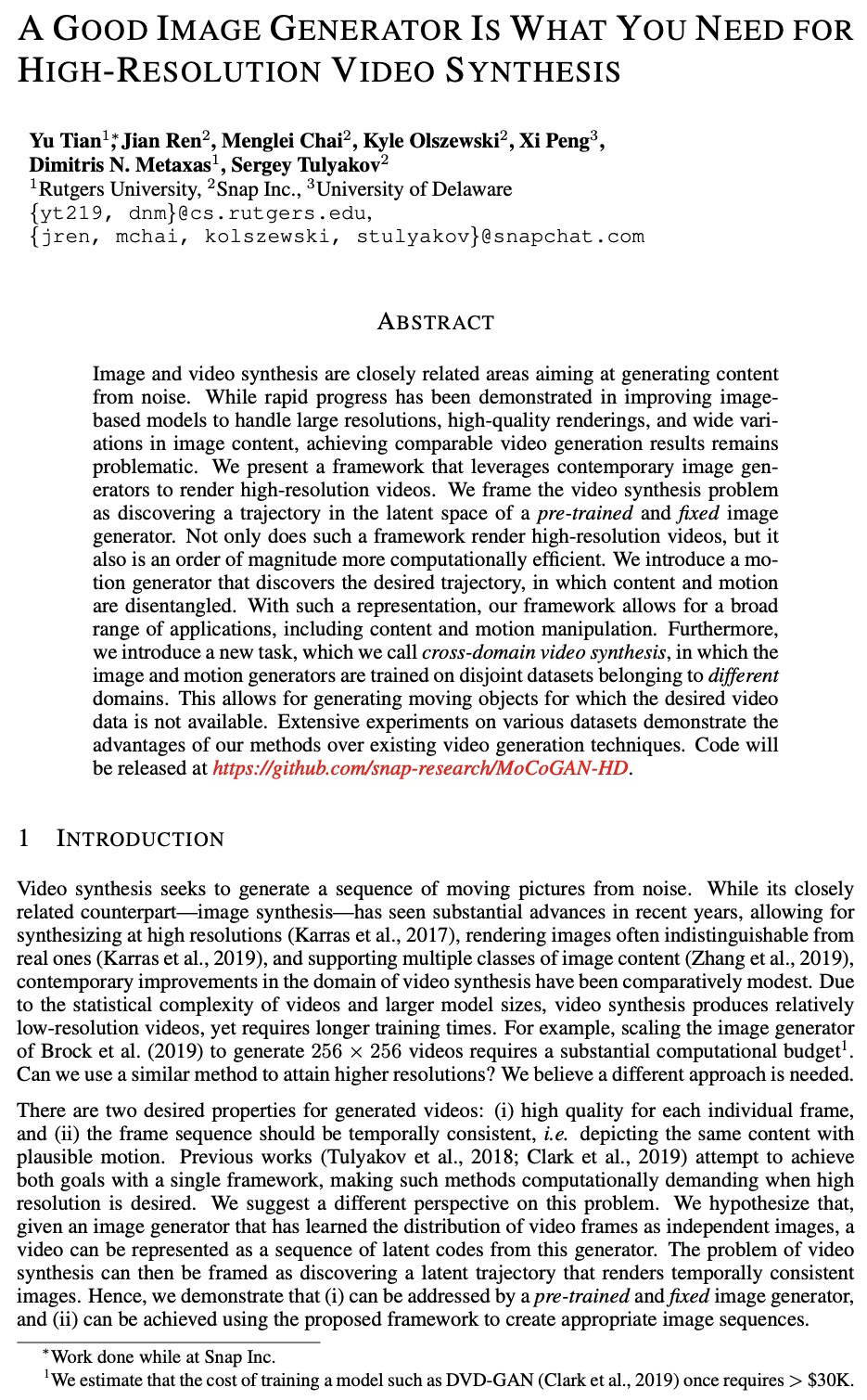

[CV] A Good Image Generator Is What You Need for High-Resolution Video Synthesis

A good image generator is all it takes for high-resolution video synthesis

Y Tian, J Ren, M Chai, K Olszewski, X Peng, D N. Metaxas, S Tulyakov

[Rutgers University & Snap Inc & University of Delaware]

https://weibo.com/1402400261/KgJZQ6Jcj

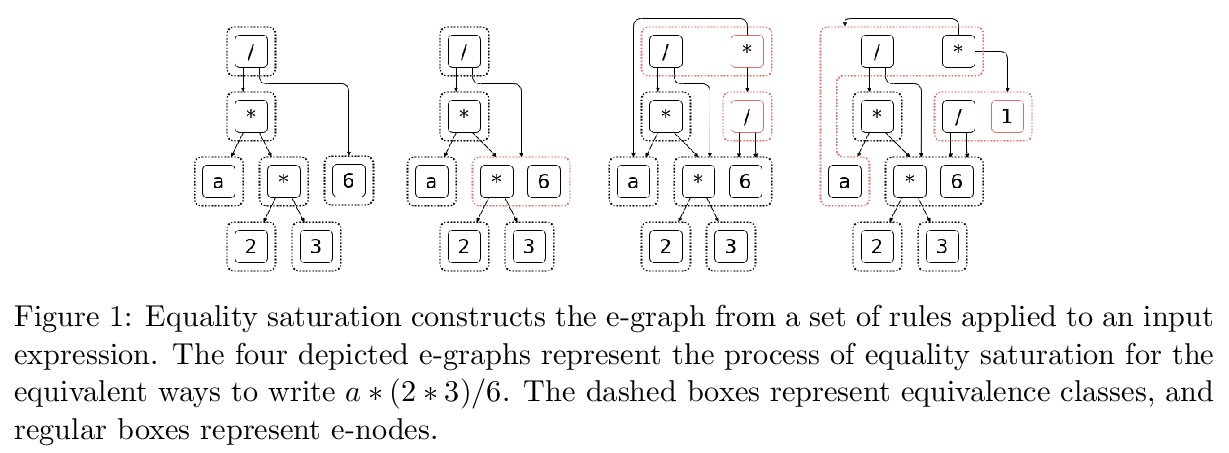

[CL] High-performance symbolic-numerics via multiple dispatch

High-performance symbolic-numeric computing based on multiple dispatch

S Gowda, Y Ma, A Cheli, M Gwozdz, V B. Shah, A Edelman, C Rackauckas

[MIT & Julia Computing & University of Pisa]

https://weibo.com/1402400261/KgK15e4Ld

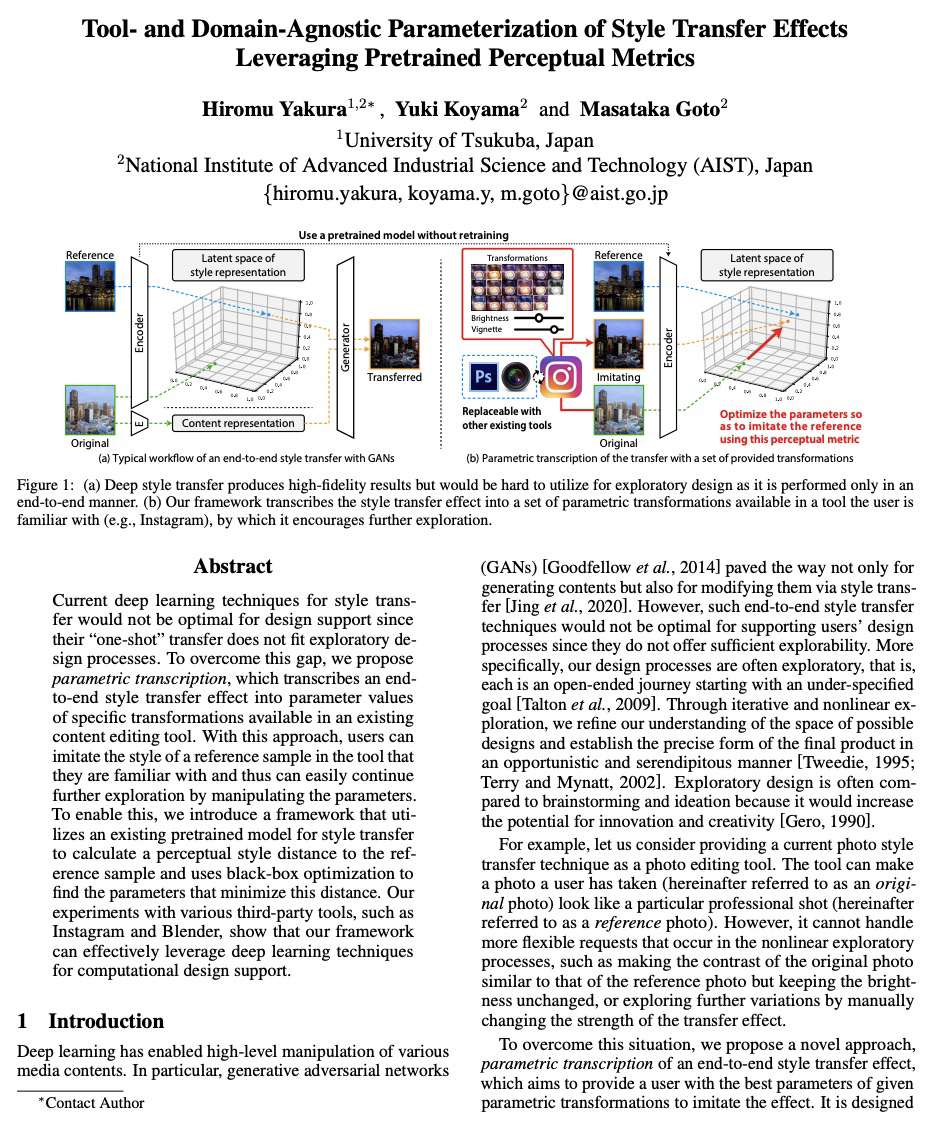

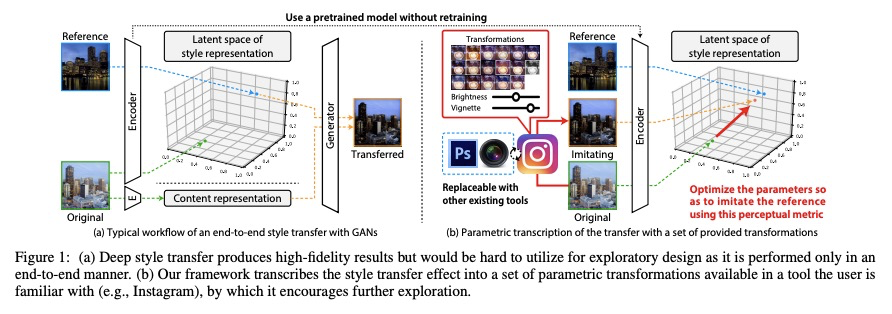

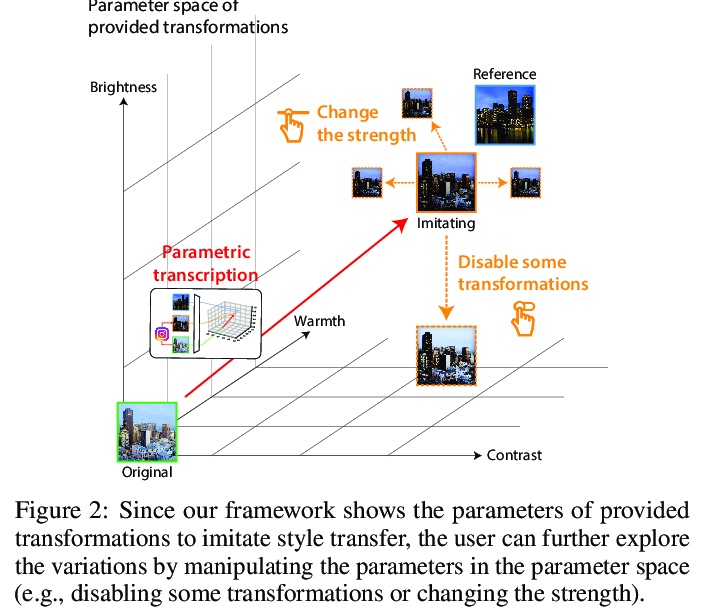

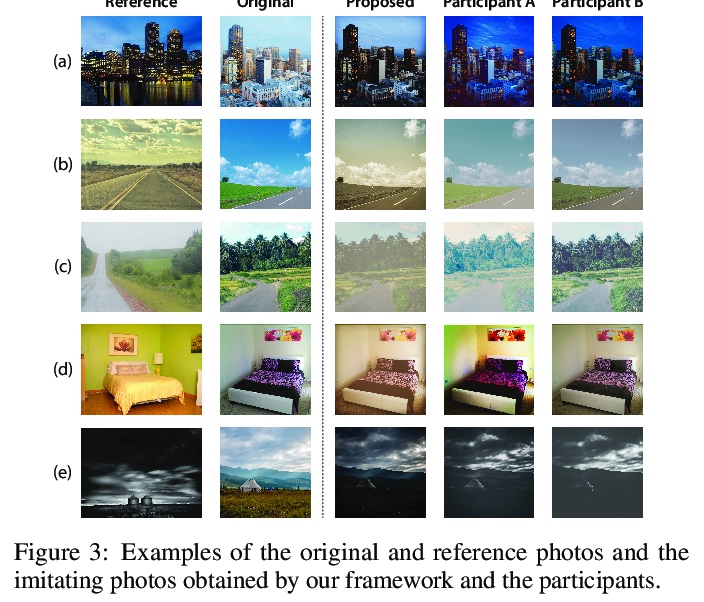

[LG] Tool- and Domain-Agnostic Parameterization of Style Transfer Effects Leveraging Pretrained Perceptual Metrics

Tool- and domain-agnostic parameterization of style transfer effects based on pretrained perceptual metrics

H Yakura, Y Koyama, M Goto

[University of Tsukuba & AIST]

https://weibo.com/1402400261/KgK3Zwh43