LG - Machine Learning  CV - Computer Vision  CL - Computation and Language  RO - Robotics

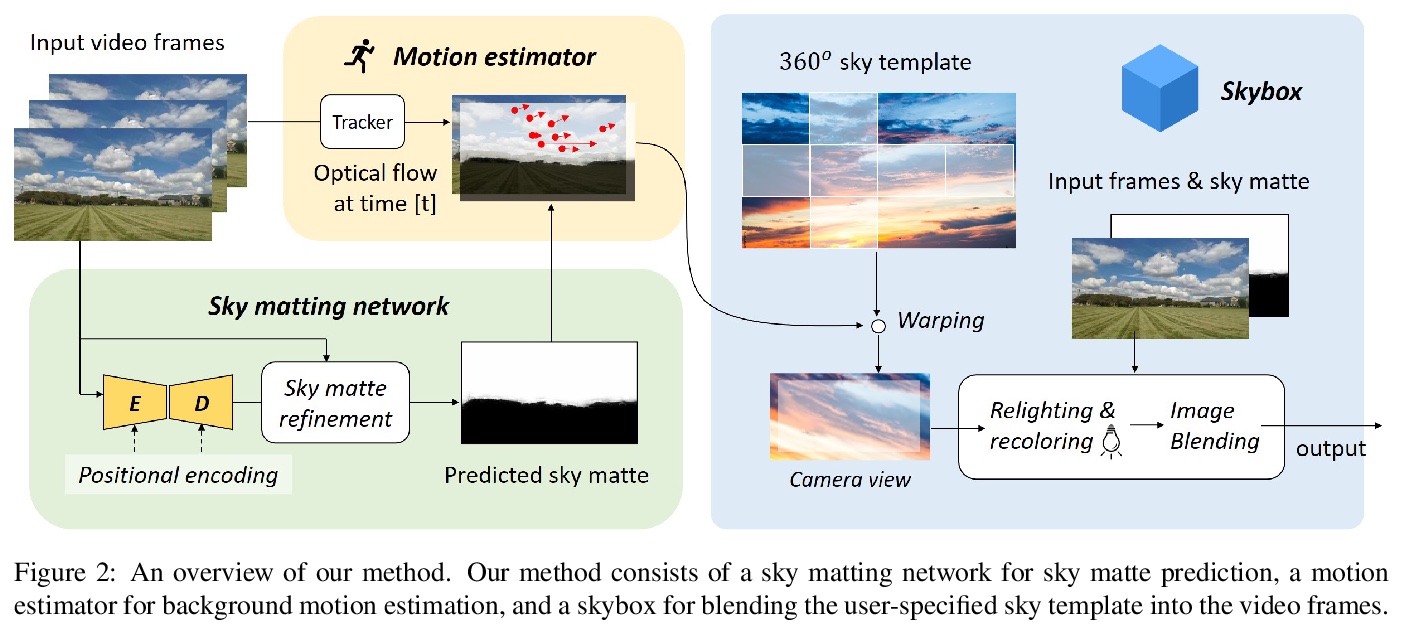

1. [CV] Castle in the Sky: Dynamic Sky Replacement and Harmonization in Videos

Z Zou

[University of Michigan]

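Dynamic sky replacement and harmonization in videos. The work studies video sky augmentation: a vision-based method automatically replaces and harmonizes the sky in a video, producing realistic, dramatic sky backgrounds in a controllable style. The problem is decomposed into three subtasks: soft sky matting, motion estimation, and image blending. Experiments on real videos captured with smartphones and dash cameras show high fidelity and good generalization in both visual quality and lighting/motion dynamics.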

This paper proposes a vision-based method for video sky replacement and harmonization, which can automatically generate realistic and dramatic sky backgrounds in videos with controllable styles. Different from previous sky editing methods that either focus on static photos or require inertial measurement units integrated in smartphones when shooting videos, our method is purely vision-based, without any requirements on the capturing devices, and can be well applied to either online or offline processing scenarios. Our method runs in real-time and is free of user interactions. We decompose this artistic creation process into a couple of proxy tasks including sky matting, motion estimation, and image blending. Experiments are conducted on videos diversely captured in the wild by handheld smartphones and dash cameras, and show high fidelity and good generalization of our method in both visual quality and lighting/motion dynamics. Our code and animated results are available at this https URL.

https://weibo.com/1402400261/JqBYrhNhF
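The image-blending step can be illustrated with plain alpha compositing. This is a minimal sketch, not the paper's code: it assumes a soft sky matte with per-pixel values in [0, 1] (1 = sky) has already been produced by the matting step, and that frames are small nested lists of RGB floats; the function name `blend_sky` is hypothetical. The paper's harmonization of lighting and color is omitted here.

```python
# Minimal sketch (hypothetical helper, not the paper's implementation):
# composite a replacement sky into one frame using a soft sky matte.
# Assumptions: frame and sky are H x W nested lists of (r, g, b) floats in [0, 1],
# and matte is an H x W nested list of sky probabilities in [0, 1].


def blend_sky(frame, sky, matte):
    """Per pixel: out = alpha * sky + (1 - alpha) * frame."""
    out = []
    for f_row, s_row, a_row in zip(frame, sky, matte):
        row = []
        for f_px, s_px, alpha in zip(f_row, s_row, a_row):
            # A soft matte lets boundary pixels (e.g. tree leaves) mix both sources.
            row.append(tuple(alpha * s + (1.0 - alpha) * f for f, s in zip(f_px, s_px)))
        out.append(row)
    return out


frame = [[(0.2, 0.2, 0.2), (0.4, 0.4, 0.4)]]
sky = [[(0.0, 0.5, 1.0), (0.0, 0.5, 1.0)]]
matte = [[1.0, 0.5]]
result = blend_sky(frame, sky, matte)
print(result)
```

The fully-sky pixel takes the new sky color, while the half-sky pixel ends up at roughly (0.2, 0.45, 0.7), halfway between the two sources. A hard 0/1 mask would instead produce visible seams along fine boundaries.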

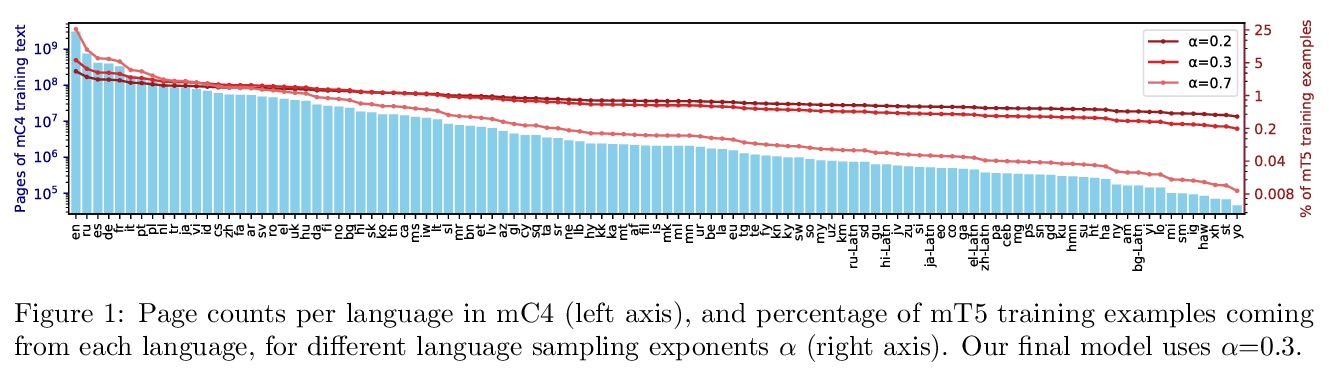

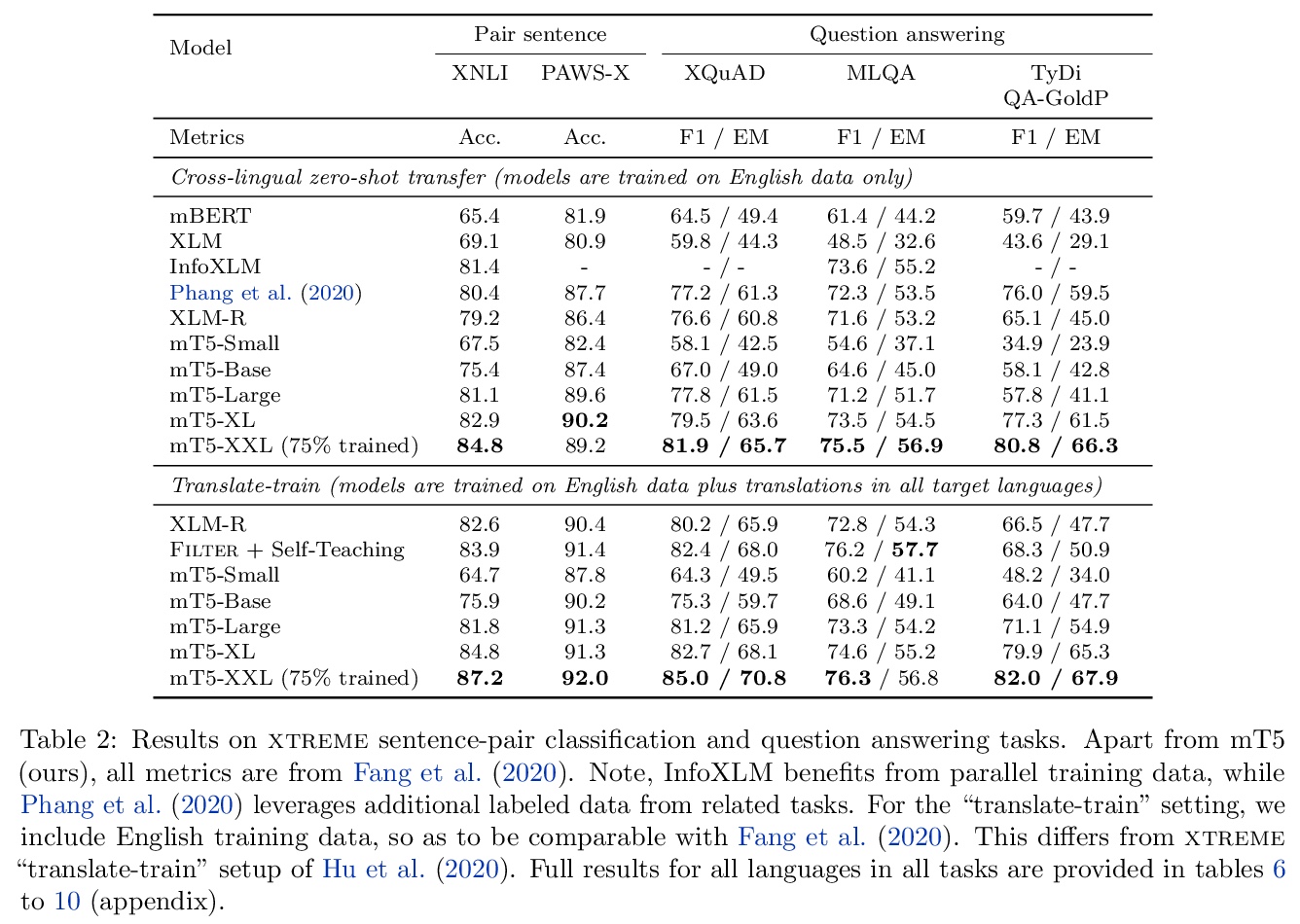

2. [CL] mT5: A massively multilingual pre-trained text-to-text transformer

L Xue, N Constant, A Roberts, M Kale, R Al-Rfou, A Siddhant, A Barua, C Raffel

[Google]

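mT5, a massively multilingual version of the T5 pre-trained language model, is pre-trained on a new Common Crawl-based dataset covering 101 languages. It can be applied directly to multilingual settings and shows strong performance across a variety of benchmarks.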

The recent “Text-to-Text Transfer Transformer” (T5) leveraged a unified text-to-text format and scale to attain state-of-the-art results on a wide variety of English-language NLP tasks. In this paper, we introduce mT5, a multilingual variant of T5 that was pre-trained on a new Common Crawl-based dataset covering 101 languages. We describe the design and modified training of mT5 and demonstrate its state-of-the-art performance on many multilingual benchmarks. All of the code and model checkpoints used in this work are publicly available.

https://weibo.com/1402400261/JqC5HBrta

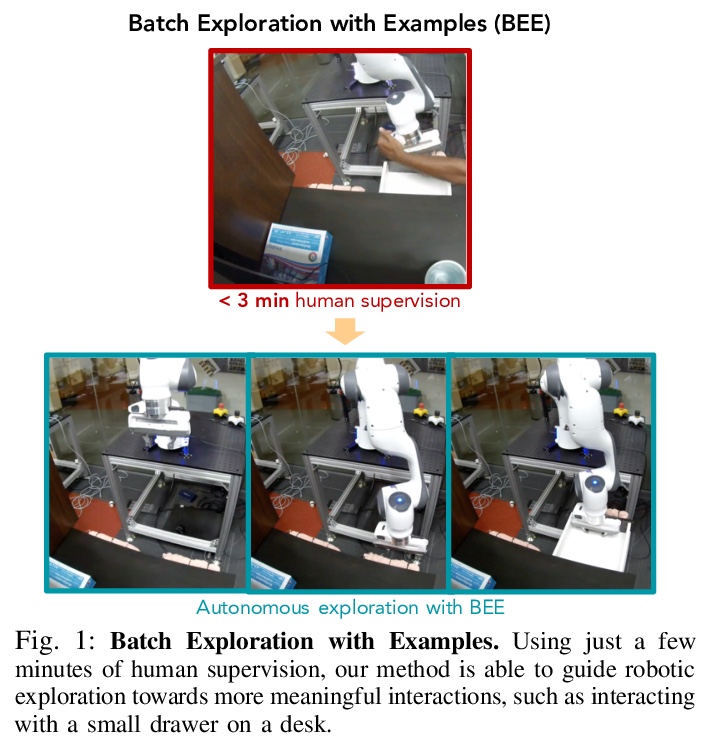

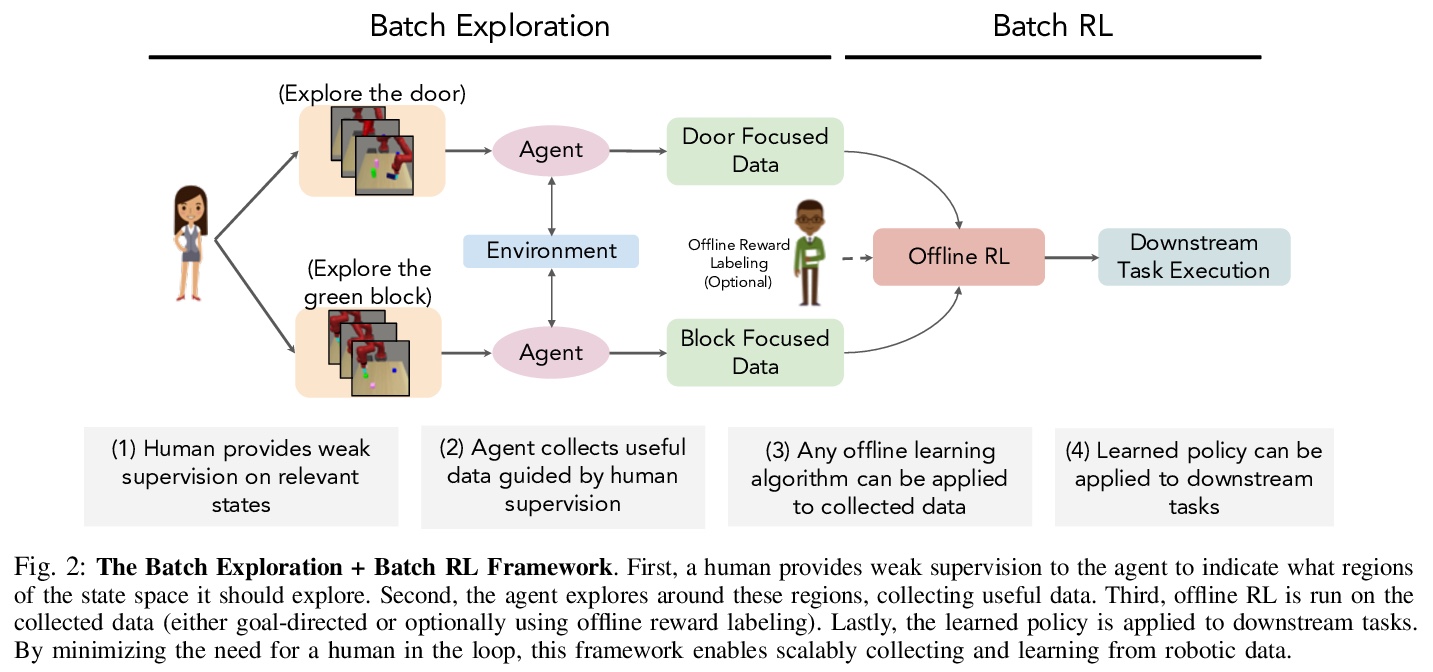

3. [RO] Batch Exploration with Examples for Scalable Robotic Reinforcement Learning

A S. Chen, H Nam, S Nair, C Finn

[Stanford University]

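Batch Exploration with Examples (BEE) for scalable offline robotic reinforcement learning uses weak human supervision to focus exploration on the important parts of the state space and avoid wasted exploration. Guided by a small number of human-provided images of important states, BEE explores the relevant regions of the state space. The guidance images only need to be collected once, at the start of data collection, and take just a few minutes to gather; the resulting data can be combined with any batch RL algorithm.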

Learning from diverse offline datasets is a promising path towards learning general purpose robotic agents. However, a core challenge in this paradigm lies in collecting large amounts of meaningful data, while not depending on a human in the loop for data collection. One way to address this challenge is through task-agnostic exploration, where an agent attempts to explore without a task-specific reward function, and collect data that can be useful for any downstream task. While these approaches have shown some promise in simple domains, they often struggle to explore the relevant regions of the state space in more challenging settings, such as vision-based robotic manipulation. This challenge stems from an objective that encourages exploring everything in a potentially vast state space. To mitigate this challenge, we propose to focus exploration on the important parts of the state space using weak human supervision. Concretely, we propose an exploration technique, Batch Exploration with Examples (BEE), that explores relevant regions of the state space, guided by a modest number of human provided images of important states. These human provided images only need to be collected once at the beginning of data collection and can be collected in a matter of minutes, allowing us to scalably collect diverse datasets, which can then be combined with any batch RL algorithm. We find that BEE is able to tackle challenging vision-based manipulation tasks both in simulation and on a real Franka robot, and observe that compared to task-agnostic and weakly-supervised exploration techniques, it (1) interacts more than twice as often with relevant objects, and (2) improves downstream task performance when used in conjunction with offline RL.

https://weibo.com/1402400261/JqCaToZdj
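The core idea, scoring states by how closely they resemble a handful of human-provided example images and steering exploration toward high-scoring states, can be sketched as follows. This is a simplified stand-in, not BEE's actual algorithm: it assumes states and examples have already been encoded into feature vectors by some hypothetical image encoder, and it uses the maximum cosine similarity to the examples as the relevance score. The names `relevance` and `pick_exploration_target` are illustrative only.

```python
import math

# Simplified stand-in for example-guided exploration (not the BEE algorithm).
# Assumption: states and human-provided examples are already feature vectors.


def cosine(u, v):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def relevance(state, examples):
    """Stand-in relevance score: similarity to the closest human-provided example."""
    return max(cosine(state, e) for e in examples)


def pick_exploration_target(candidates, examples):
    """Greedily choose the candidate state that looks most like an important state."""
    return max(candidates, key=lambda s: relevance(s, examples))


# Hypothetical 2-D features; the examples are collected once, before data collection.
examples = [[1.0, 0.0], [0.9, 0.1]]
candidates = [[0.0, 1.0], [1.0, 0.2], [0.5, 0.5]]
target = pick_exploration_target(candidates, examples)
print(target)  # [1.0, 0.2]
```

Because the examples enter only through the relevance score, they can be gathered once up front, which matches the property the abstract emphasizes; the data collected this way would then be handed to a separate batch RL learner.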

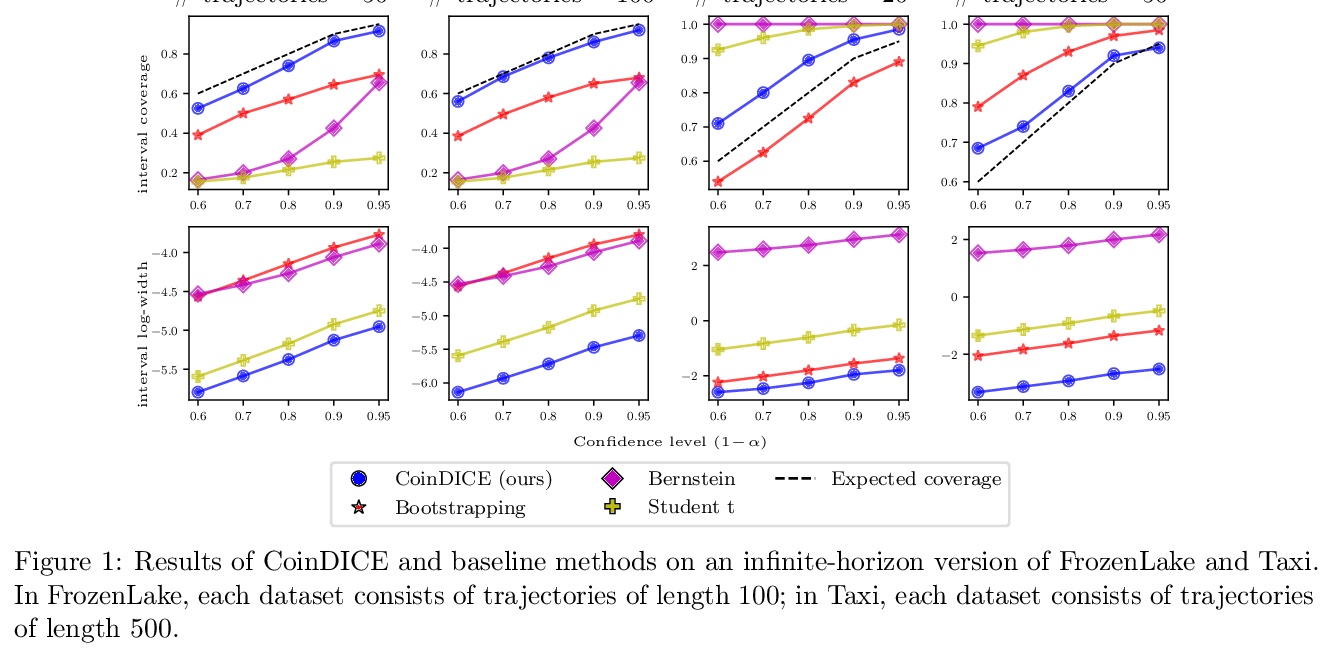

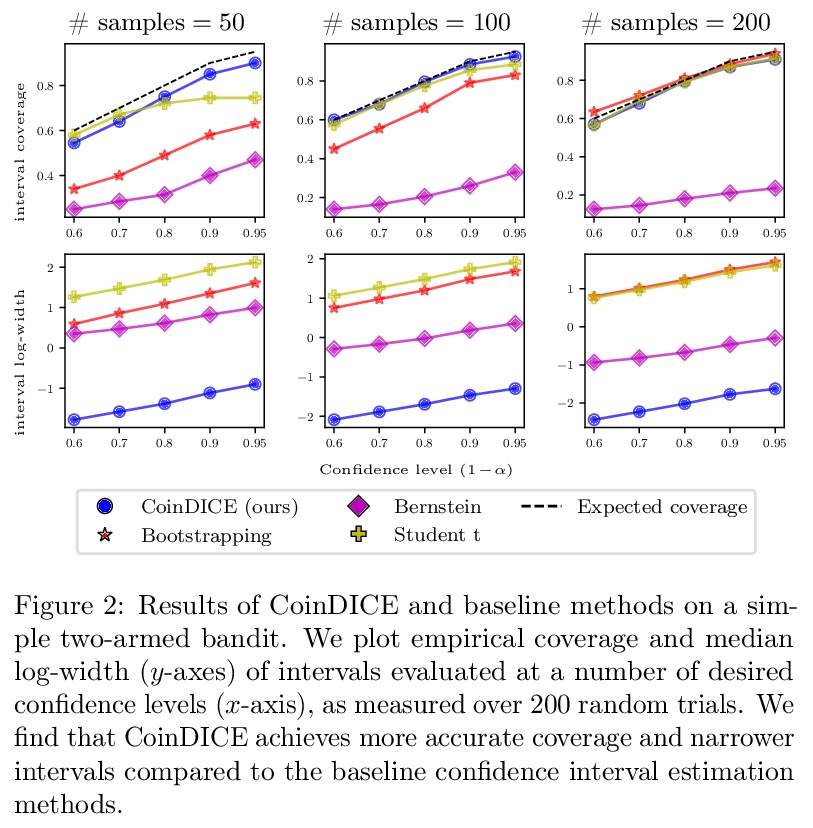

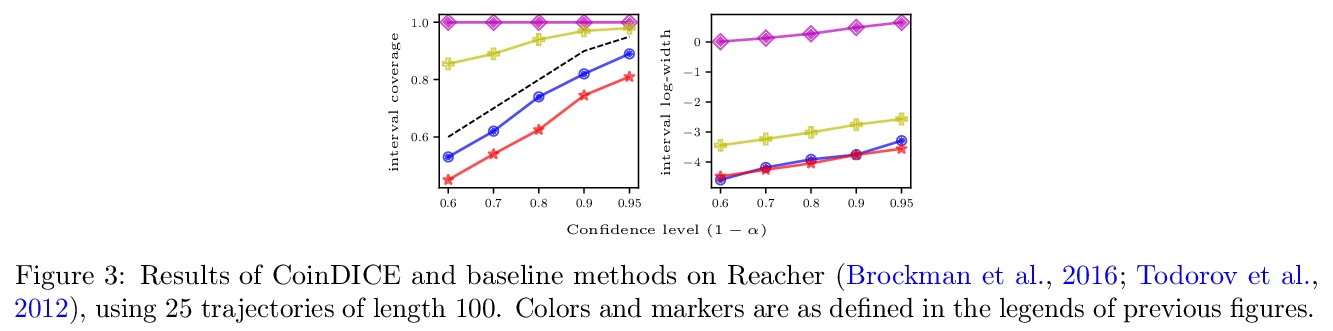

4. [LG] CoinDICE: Off-Policy Confidence Interval Estimation

B Dai, O Nachum, Y Chow, L Li, C Szepesvári, D Schuurmans

[Google Research & DeepMind]

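CoinDICE is an off-policy confidence interval estimation algorithm for the behavior-agnostic offline setting, where the policies that collected the data are unknown. It combines a new function-space embedding of the Q-LP (the linear-program formulation of the Q-function) with a generalized empirical likelihood method for confidence interval estimation. The resulting confidence intervals are proved to be valid in both the asymptotic and finite-sample regimes.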

We study high-confidence behavior-agnostic off-policy evaluation in reinforcement learning, where the goal is to estimate a confidence interval on a target policy’s value, given only access to a static experience dataset collected by unknown behavior policies. Starting from a function space embedding of the linear program formulation of the Q-function, we obtain an optimization problem with generalized estimating equation constraints. By applying the generalized empirical likelihood method to the resulting Lagrangian, we propose CoinDICE, a novel and efficient algorithm for computing confidence intervals. Theoretically, we prove the obtained confidence intervals are valid, in both asymptotic and finite-sample regimes. Empirically, we show in a variety of benchmarks that the confidence interval estimates are tighter and more accurate than existing methods.

https://weibo.com/1402400261/JqCp0BwIz
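CoinDICE builds its intervals with generalized empirical likelihood. The underlying idea is easiest to see in the classic one-dimensional case, Owen's empirical likelihood for a mean: a candidate value mu stays in the interval if the statistic -2 log R(mu) remains below the chi-square critical value with one degree of freedom (about 3.8415 at 95%). The sketch below applies that textbook method to i.i.d. scalar samples, for example per-episode returns. It is not CoinDICE itself, which adds the Q-LP embedding and behavior-agnostic estimating equations, and all function names are hypothetical.

```python
import math

CHI2_1_95 = 3.841458820694124  # 95% quantile of chi-square with 1 degree of freedom


def _solve_lambda(d, iters=100):
    """Find lam with sum(d_i / (1 + lam * d_i)) = 0 by bisection.

    The sum is strictly decreasing in lam on the interval where every
    1 + lam * d_i > 0, i.e. (-1 / max(d), -1 / min(d)).
    """
    lo, hi = -1.0 / max(d), -1.0 / min(d)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        g = sum(di / (1.0 + mid * di) for di in d)
        if g > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def el_statistic(xs, mu):
    """Empirical likelihood ratio statistic -2 log R(mu) for the mean."""
    d = [x - mu for x in xs]
    if max(d) <= 0 or min(d) >= 0:
        return math.inf  # mu lies outside the convex hull of the data
    lam = _solve_lambda(d)
    # Optimal weights are w_i = 1 / (n * (1 + lam * d_i)), so -2 log R = 2 * sum(log(1 + lam * d_i)).
    return 2.0 * sum(math.log(1.0 + lam * di) for di in d)


def el_interval(xs, crit=CHI2_1_95, iters=100):
    """Endpoints where the statistic crosses crit, found by bisection on each side of the mean."""
    mean = sum(xs) / len(xs)

    def boundary(outside, inside):
        for _ in range(iters):
            mid = 0.5 * (outside + inside)
            if el_statistic(xs, mid) > crit:
                outside = mid
            else:
                inside = mid
        return inside

    return boundary(min(xs), mean), boundary(max(xs), mean)


returns = [1.0, 2.0, 3.0, 4.0, 5.0]
low, high = el_interval(returns)
print(low, high)
```

With `crit` at the 95% value the pair is an approximate 95% interval; unlike a normal-approximation interval it need not be symmetric around the sample mean when the data are skewed. CoinDICE's finite-sample guarantees do not carry over to this simplified version.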

5. [CV] Learning Loss for Test-Time Augmentation

I Kim, Y Kim, S Kim

[Kakao Brain & Sungshin Women’s University]

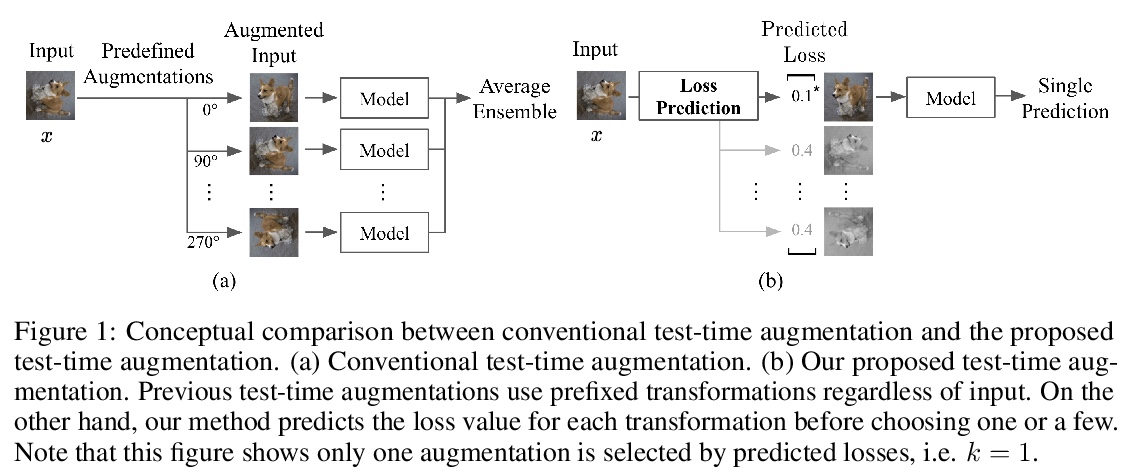

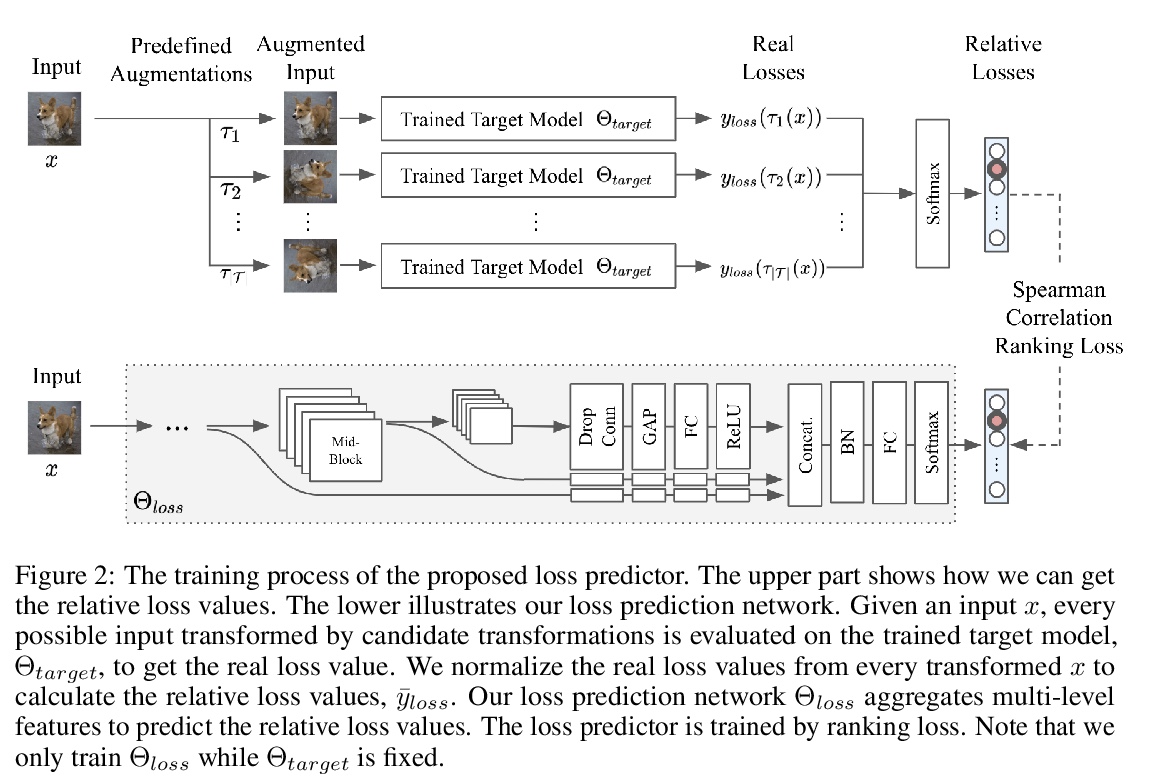

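An instance-aware test-time augmentation method that efficiently selects suitable transformations for each test input. The method includes an auxiliary module that predicts the loss of every candidate transformation for a given input; the transformations with lower predicted loss are then applied to the input. Experiments show that the method improves the model's robustness against various corruptions.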

Data augmentation has been actively studied for robust neural networks. Most of the recent data augmentation methods focus on augmenting datasets during the training phase. At the testing phase, simple transformations are still widely used for test-time augmentation. This paper proposes a novel instance-level test-time augmentation that efficiently selects suitable transformations for a test input. Our proposed method involves an auxiliary module to predict the loss of each possible transformation given the input. Then, the transformations having lower predicted losses are applied to the input. The network obtains the results by averaging the prediction results of augmented inputs. Experimental results on several image classification benchmarks show that the proposed instance-aware test-time augmentation improves the model’s robustness against various corruptions.

https://weibo.com/1402400261/JqCsSlOPa
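The selection rule described in the abstract (predict a loss per candidate transformation, keep the lowest-loss ones, average the model's predictions on the transformed inputs) can be sketched like this. It is an illustration under stated assumptions, not the authors' code: `loss_predictor` stands in for the auxiliary module and is assumed to return one predicted loss per transformation, `model` returns class probabilities, and `k` (how many transformations to keep) is a hypothetical parameter.

```python
# Sketch of instance-aware test-time augmentation (hypothetical names, not the paper's code).


def instance_aware_tta(x, transforms, loss_predictor, model, k=2):
    """Apply the k transforms with the lowest predicted loss and average predictions.

    Returns (averaged class probabilities, indices of the chosen transforms).
    """
    predicted = loss_predictor(x)  # assumed: one predicted loss per transform
    if len(predicted) != len(transforms):
        raise ValueError("loss_predictor must score every transform")
    chosen = sorted(range(len(transforms)), key=lambda i: predicted[i])[:k]
    outputs = [model(transforms[i](x)) for i in chosen]
    num_classes = len(outputs[0])
    averaged = [sum(p[c] for p in outputs) / len(outputs) for c in range(num_classes)]
    return averaged, chosen


# Toy stand-ins (hypothetical): scalar "images", three transforms, a fixed loss predictor.
transforms = [lambda v: v, lambda v: -v, lambda v: 2 * v]


def loss_predictor(v):
    return [0.1, 0.9, 0.3]


def model(v):
    return [0.8, 0.2] if v > 0 else [0.3, 0.7]


probs, chosen = instance_aware_tta(1.0, transforms, loss_predictor, model, k=2)
print(chosen, probs)  # [0, 2] [0.8, 0.2]
```

The sign-flipping transform has the highest predicted loss and is skipped, so it never gets a chance to corrupt the averaged prediction. How the auxiliary module is trained is outside the scope of this sketch.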

Other papers worth noting:

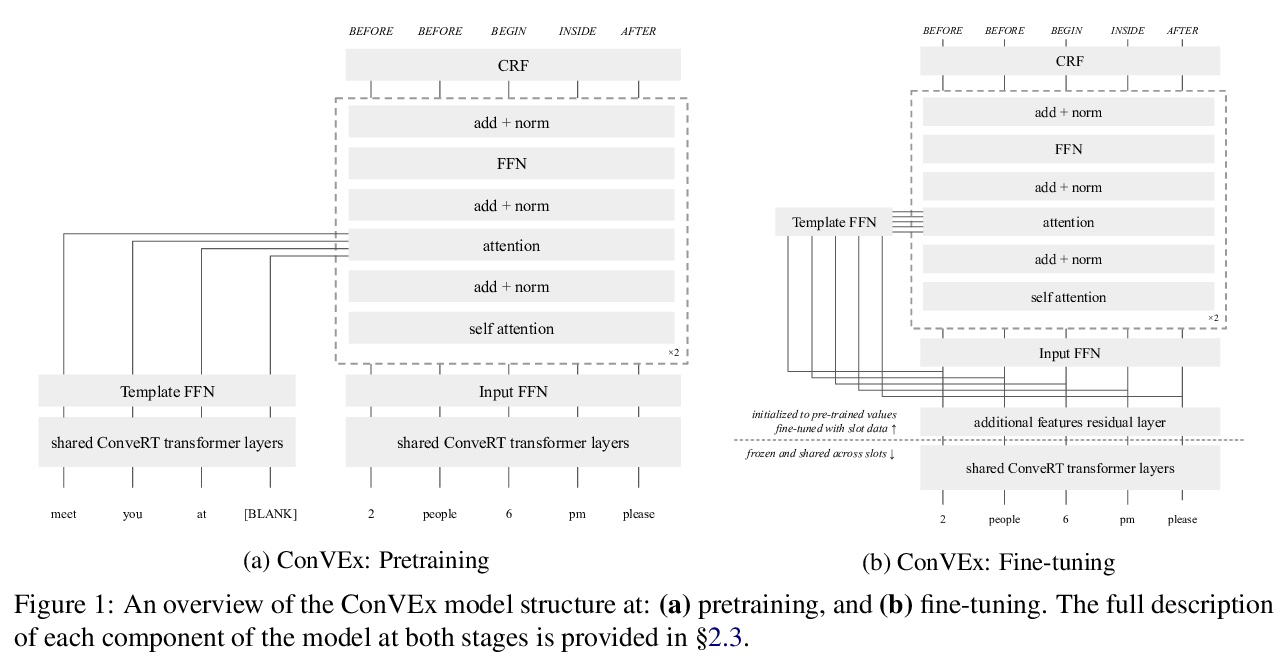

[CL] ConVEx: Data-Efficient and Few-Shot Slot Labeling

ConVEx: data-efficient, few-shot slot labeling

M Henderson, I Vulić

[PolyAI Ltd]

https://weibo.com/1402400261/JqCw0u3XT

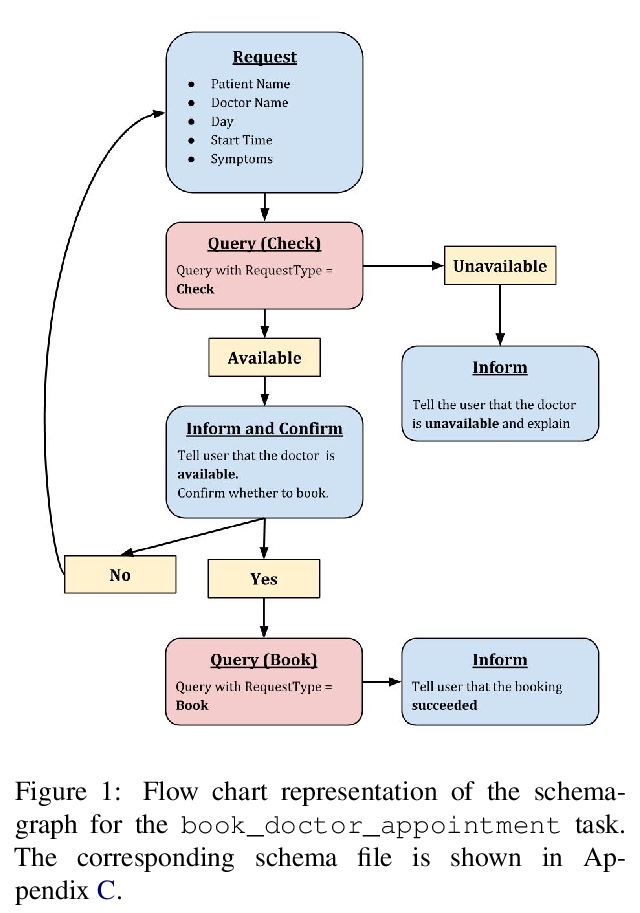

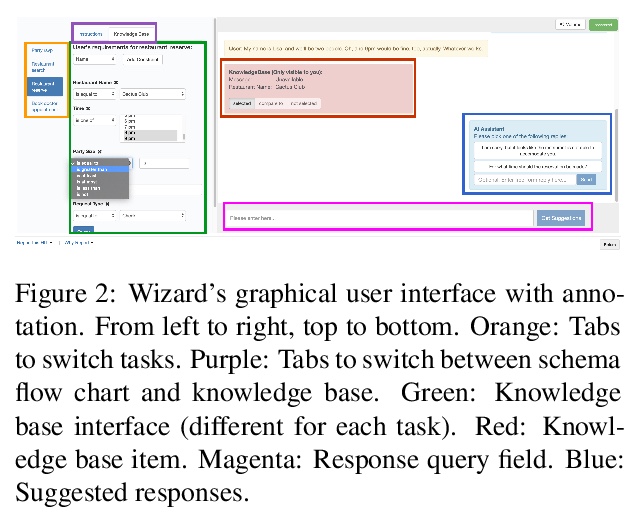

[CL] STAR: A Schema-Guided Dialog Dataset for Transfer Learning

STAR: a schema-guided, task-oriented dialog dataset for transfer learning

J E. M. Mosig, S Mehri, T Kober

[Rasa & CMU]

https://weibo.com/1402400261/JqCyltIT3

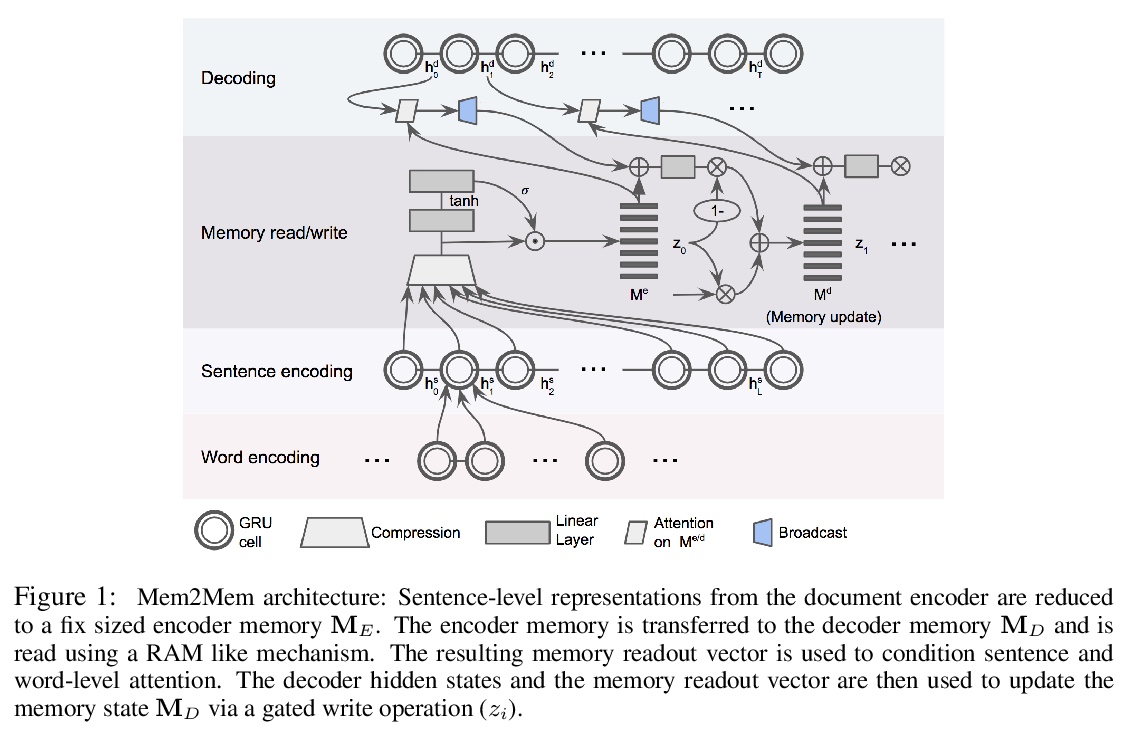

[CL] Learning to Summarize Long Texts with Memory Compression and Transfer

Learning to summarize long texts via memory compression and transfer (Mem2Mem)

J Park, J Pilault, C Pal

[Element AI]

https://weibo.com/1402400261/JqCAr0ZW1

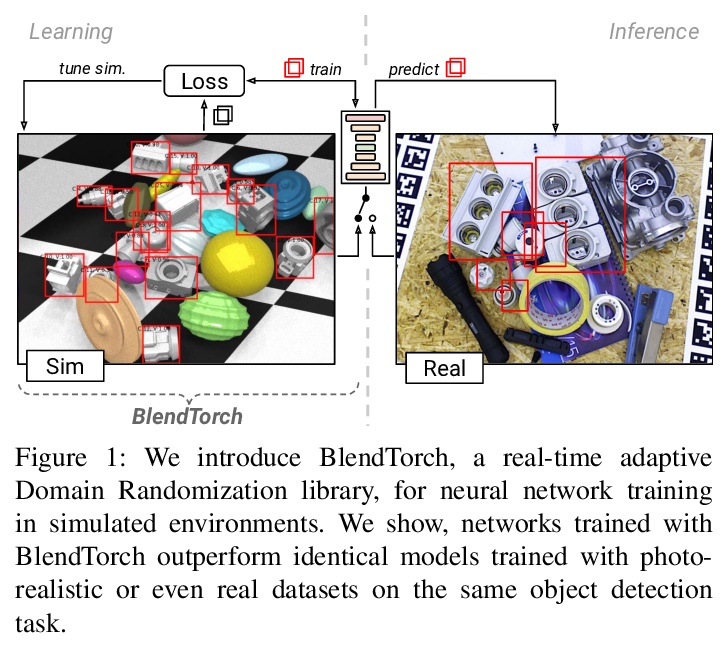

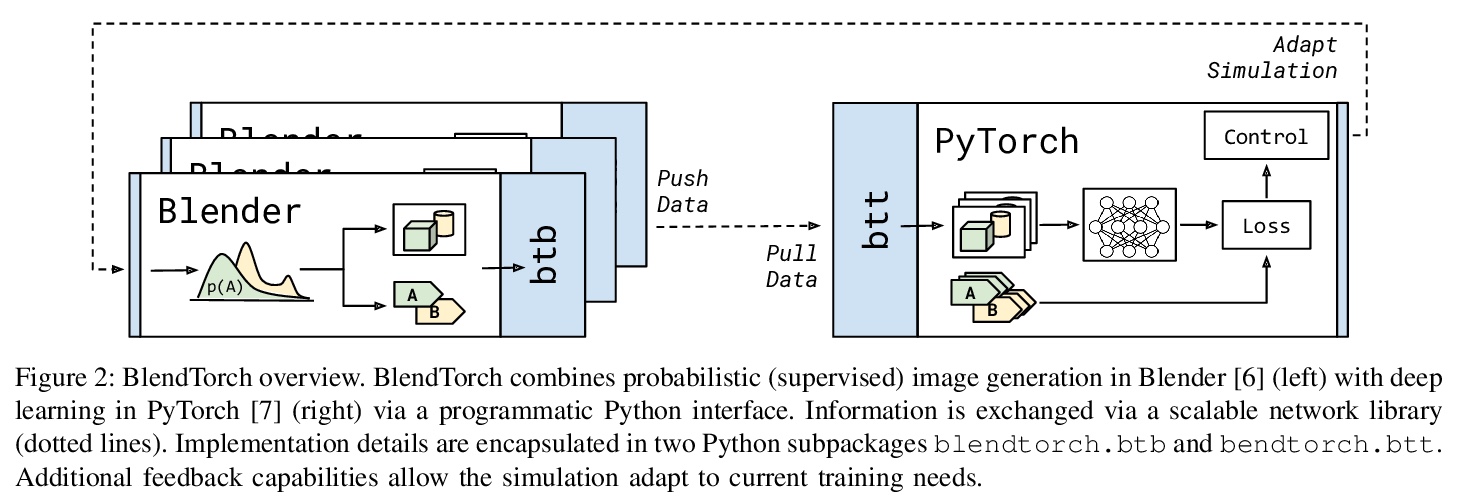

[CV] BlendTorch: A Real-Time, Adaptive Domain Randomization Library

BlendTorch: an adaptive domain randomization (DR) library for creating infinite streams of synthetic training data

C Heindl, L Brunner, S Zambal, J Scharinger

[Profactor GmbH & JKU]

https://weibo.com/1402400261/JqCCgbkKQ

[LG] Rethinking pooling in graph neural networks

Rethinking pooling in graph neural networks

D Mesquita, A H. Souza, S Kaski

[Aalto University & Federal Institute of Ceará]

https://weibo.com/1402400261/JqCEXp22G