LG - Machine Learning CV - Computer Vision CL - Computation and Language

1、[LG] *Learning Invariances in Neural Networks

G Benton, M Finzi, P Izmailov, A G Wilson

[New York University]

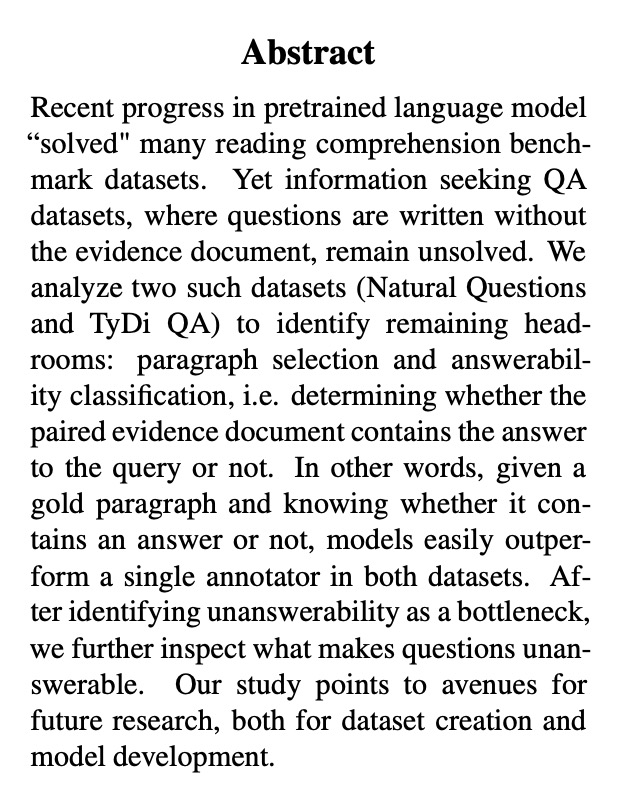

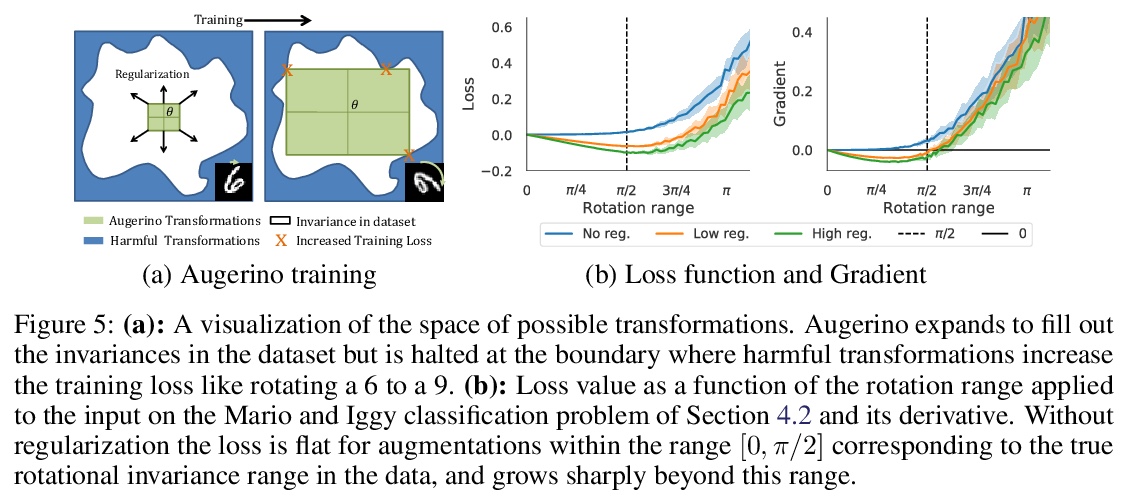

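This work learns symmetries from training data. It proposes Augerino, a framework that can be deployed seamlessly with standard model architectures. By parameterizing a distribution over augmentations and optimizing the training loss jointly over network parameters and augmentation parameters, it learns invariances and equivariances and improves generalization, with good results on regression, classification, segmentation, and molecular property prediction.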

Invariances to translations have imbued convolutional neural networks with powerful generalization properties. However, we often do not know a priori what invariances are present in the data, or to what extent a model should be invariant to a given symmetry group. We show how to *learn* invariances and equivariances by parameterizing a distribution over augmentations and optimizing the training loss simultaneously with respect to the network parameters and augmentation parameters. With this simple procedure we can recover the correct set and extent of invariances on image classification, regression, segmentation, and molecular property prediction from a large space of augmentations, on training data alone.
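The idea of parameterizing a distribution over augmentations can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the choice of 2-D rotations, the uniform distribution with a single `width` parameter, and the names `rotate`, `augmented_predict`, and `toy_model` are all assumptions made for illustration. Only the forward pass is shown; in an autodiff framework `width` would be trained together with the network weights.

```python
import numpy as np

def rotate(points, angles):
    """Rotate each 2-D point by its own angle (in radians)."""
    c, s = np.cos(angles), np.sin(angles)
    x, y = points[:, 0], points[:, 1]
    return np.stack([c * x - s * y, s * x + c * y], axis=1)

def augmented_predict(model, points, width, n_samples=8, rng=None):
    """Average model outputs over rotations drawn from U(-width, width).

    Sampling is reparameterized as angle = width * eps with eps ~ U(-1, 1),
    so the averaged output is a differentiable function of `width`.
    """
    rng = np.random.default_rng(0) if rng is None else rng
    outputs = []
    for _ in range(n_samples):
        eps = rng.uniform(-1.0, 1.0, size=len(points))
        outputs.append(model(rotate(points, width * eps)))
    return np.mean(outputs, axis=0)

def toy_model(points):
    # Hypothetical stand-in for a network: it reads only the x-coordinate,
    # so on its own it is not rotation invariant.
    return points[:, 0]

points = np.array([[1.0, 0.0], [0.0, 2.0]])
# width = 0 means no augmentation: the plain model output is recovered.
no_aug = augmented_predict(toy_model, points, width=0.0)
# width = pi covers all rotations: the averaged output no longer depends on
# the orientation of the input (approximately, because of sampling noise).
full_aug = augmented_predict(toy_model, points, width=np.pi, n_samples=4000)
```

Under these assumptions, a learned `width` between 0 and pi would correspond to partial invariance, which matches the abstract's point about learning the extent of an invariance as well as its presence.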

https://weibo.com/1402400261/JqLqQ2XBs

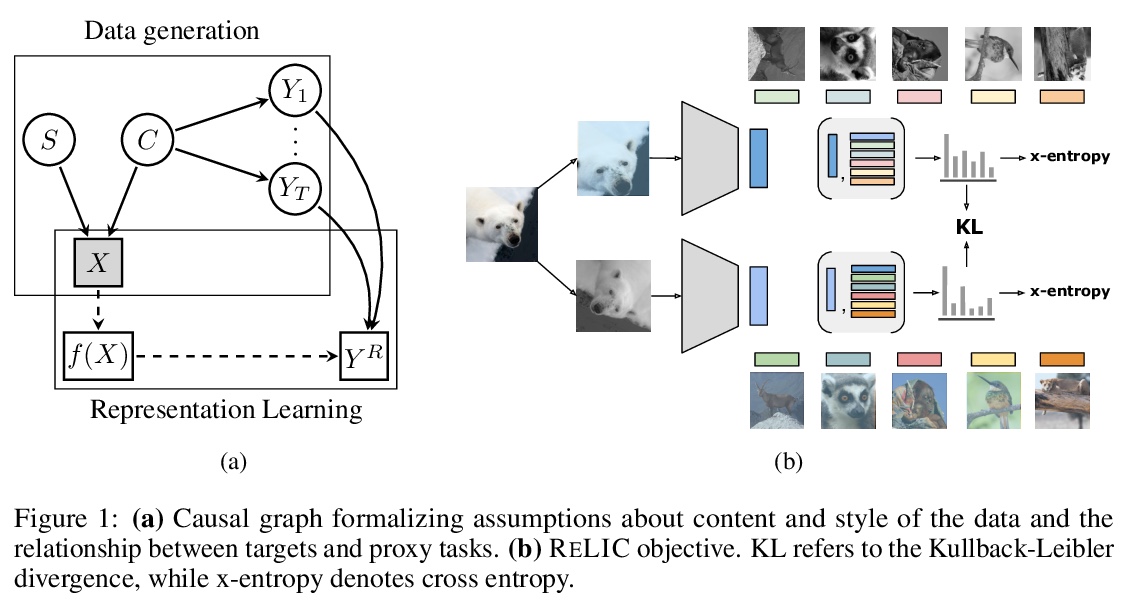

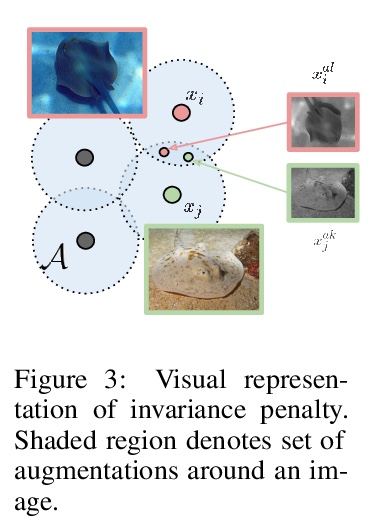

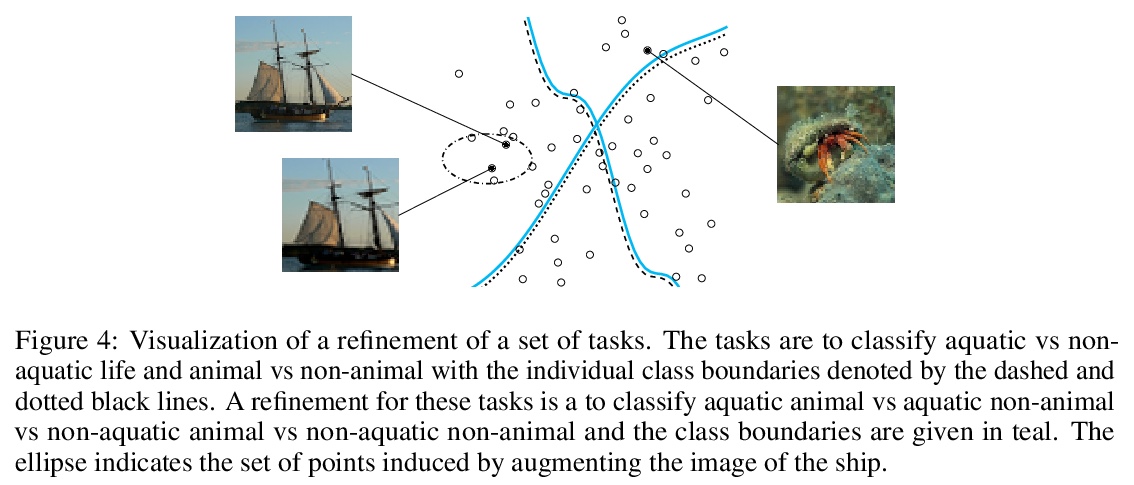

2、[LG] *Representation Learning via Invariant Causal Mechanisms

J Mitrovic, B McWilliams, J Walker, L Buesing, C Blundell

[DeepMind]

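Self-supervised representation learning via invariant causal mechanisms (ReLIC) uses an invariance regularizer to enforce invariant prediction of proxy targets, which yields better generalization. Using causality, the work generalizes contrastive learning, a particular kind of self-supervised method. Using the concept of refinements, it shows that learning representations on refinements under the invariant prediction principle is a sufficient condition for those representations to generalize to downstream tasks. ReLIC significantly outperforms competing methods in robustness and in out-of-distribution generalization of representations learned on ImageNet.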

Self-supervised learning has emerged as a strategy to reduce the reliance on costly supervised signal by pretraining representations only using unlabeled data. These methods combine heuristic proxy classification tasks with data augmentations and have achieved significant success, but our theoretical understanding of this success remains limited. In this paper we analyze self-supervised representation learning using a causal framework. We show how data augmentations can be more effectively utilized through explicit invariance constraints on the proxy classifiers employed during pretraining. Based on this, we propose a novel self-supervised objective, Representation Learning via Invariant Causal Mechanisms (ReLIC), that enforces invariant prediction of proxy targets across augmentations through an invariance regularizer which yields improved generalization guarantees. Further, using causality we generalize contrastive learning, a particular kind of self-supervised method, and provide an alternative theoretical explanation for the success of these methods. Empirically, ReLIC significantly outperforms competing methods in terms of robustness and out-of-distribution generalization on ImageNet, while also significantly outperforming these methods on Atari achieving above human-level performance on 51 out of 57 games.
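A contrastive objective with an added invariance penalty might look roughly like the NumPy sketch below. The abstract does not spell out the regularizer, so the KL-divergence form, the instance-discrimination proxy task, the temperature, and the weight `alpha` are assumptions for illustration, as are all function names.

```python
import numpy as np

def log_softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def normalize(z):
    return z / np.linalg.norm(z, axis=1, keepdims=True)

def proxy_log_probs(anchors, targets, temperature=0.1):
    """Log-probabilities for the proxy task: row i scores anchor i
    against every target embedding in the batch."""
    logits = normalize(anchors) @ normalize(targets).T / temperature
    return log_softmax(logits)

def relic_style_loss(z_a, z_b, alpha=1.0):
    """Contrastive term plus an invariance penalty between two augmented views.

    Assumption: the penalty is the KL divergence between the proxy-task
    predictions obtained under the two augmentations.
    """
    log_p_ab = proxy_log_probs(z_a, z_b)  # view a as anchor, view b as target
    log_p_ba = proxy_log_probs(z_b, z_a)  # roles swapped
    n = len(z_a)
    # Matching pairs sit on the diagonal of each log-probability matrix.
    contrastive = -0.5 * (np.trace(log_p_ab) + np.trace(log_p_ba)) / n
    kl = np.sum(np.exp(log_p_ab) * (log_p_ab - log_p_ba), axis=1).mean()
    return contrastive + alpha * kl, kl

rng = np.random.default_rng(0)
view_a = rng.normal(size=(4, 8))                  # embeddings under augmentation a
view_b = view_a + 0.5 * rng.normal(size=(4, 8))   # embeddings under augmentation b
loss_same, kl_same = relic_style_loss(view_a, view_a)
loss_diff, kl_diff = relic_style_loss(view_a, view_b)
```

The penalty vanishes when both views produce the same proxy predictions and grows as they disagree, which is the sense in which it pushes the encoder toward augmentation-invariant predictions.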

https://weibo.com/1402400261/JqLBLDOkl

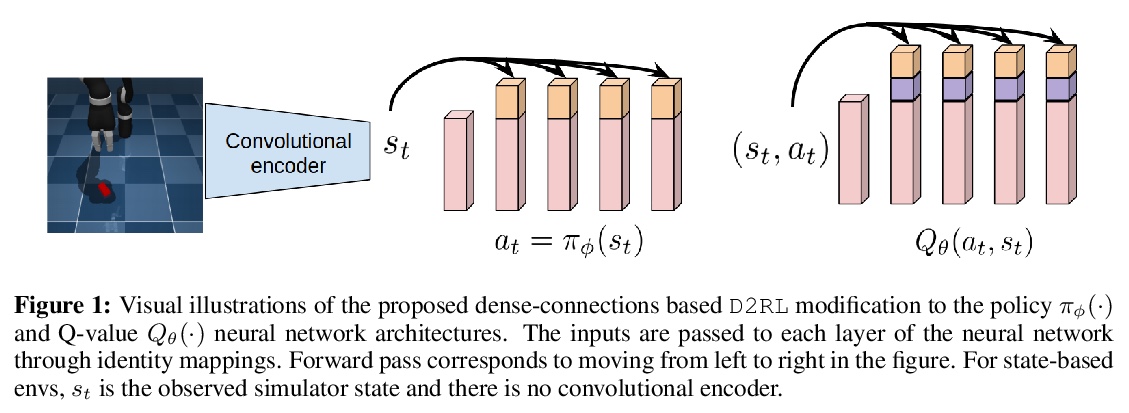

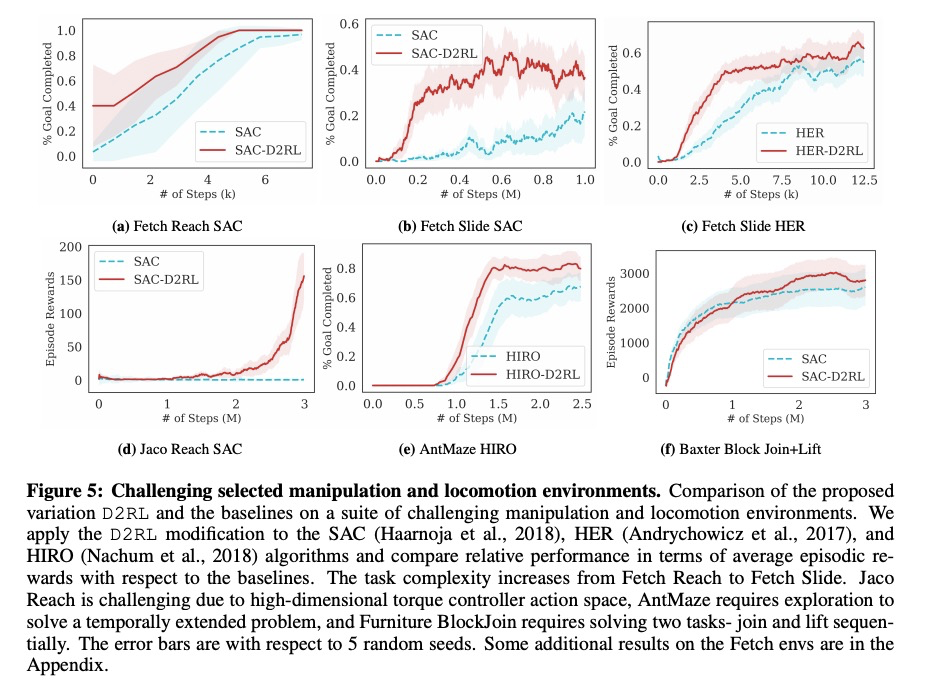

3、[LG] *D2RL: Deep Dense Architectures in Reinforcement Learning

S Sinha, H Bharadhwaj, A Srinivas, A Garg

[University of Toronto & UC Berkeley]

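Deep dense architectures in reinforcement learning: the work studies how changing the number of layers affects the parameterization of policies and value functions in deep reinforcement learning (DRL), including the performance drop that appears as layers are added. It proposes a generally applicable fix that significantly improves the sample efficiency of state-of-the-art DRL baselines across manipulation and locomotion settings, using deeper networks for better feature extraction and learning.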

While improvements in deep learning architectures have played a crucial role in improving the state of supervised and unsupervised learning in computer vision and natural language processing, neural network architecture choices for reinforcement learning remain relatively under-explored. We take inspiration from successful architectural choices in computer vision and generative modelling, and investigate the use of deeper networks and dense connections for reinforcement learning on a variety of simulated robotic learning benchmark environments. Our findings reveal that current methods benefit significantly from dense connections and deeper networks, across a suite of manipulation and locomotion tasks, for both proprioceptive and image-based observations. We hope that our results can serve as a strong baseline and further motivate future research into neural network architectures for reinforcement learning. The authors also provide a project website with code.
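As a rough picture of what dense connections in a policy or value network can mean, the sketch below feeds the raw input back into every hidden layer after the first by concatenation. The abstract does not give the wiring, so this layout, the omission of biases, and the names `init_dense_mlp` and `dense_mlp_forward` are assumptions for illustration.

```python
import numpy as np

def init_dense_mlp(in_dim, hidden_dim, out_dim, n_hidden, rng):
    """Create weights for an MLP whose hidden layers also see the raw input."""
    weights = []
    for i in range(n_hidden):
        # The first layer reads the input only; later layers read the
        # previous hidden features concatenated with the input.
        fan_in = in_dim if i == 0 else hidden_dim + in_dim
        weights.append(rng.normal(0.0, 0.1, size=(fan_in, hidden_dim)))
    head = rng.normal(0.0, 0.1, size=(hidden_dim, out_dim))
    return weights, head

def dense_mlp_forward(params, x):
    """Forward pass with ReLU activations (biases omitted for brevity)."""
    weights, head = params
    h = np.maximum(x @ weights[0], 0.0)
    for w in weights[1:]:
        h = np.maximum(np.concatenate([h, x], axis=1) @ w, 0.0)
    return h @ head

rng = np.random.default_rng(0)
params = init_dense_mlp(in_dim=4, hidden_dim=16, out_dim=2, n_hidden=4, rng=rng)
states = rng.normal(size=(5, 4))   # hypothetical batch of 5 observations
actions = dense_mlp_forward(params, states)
```

The skip path gives every layer direct access to the observation, which is one plausible reason deeper networks stay trainable in this setting.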

https://weibo.com/1402400261/JqLJXfYPH

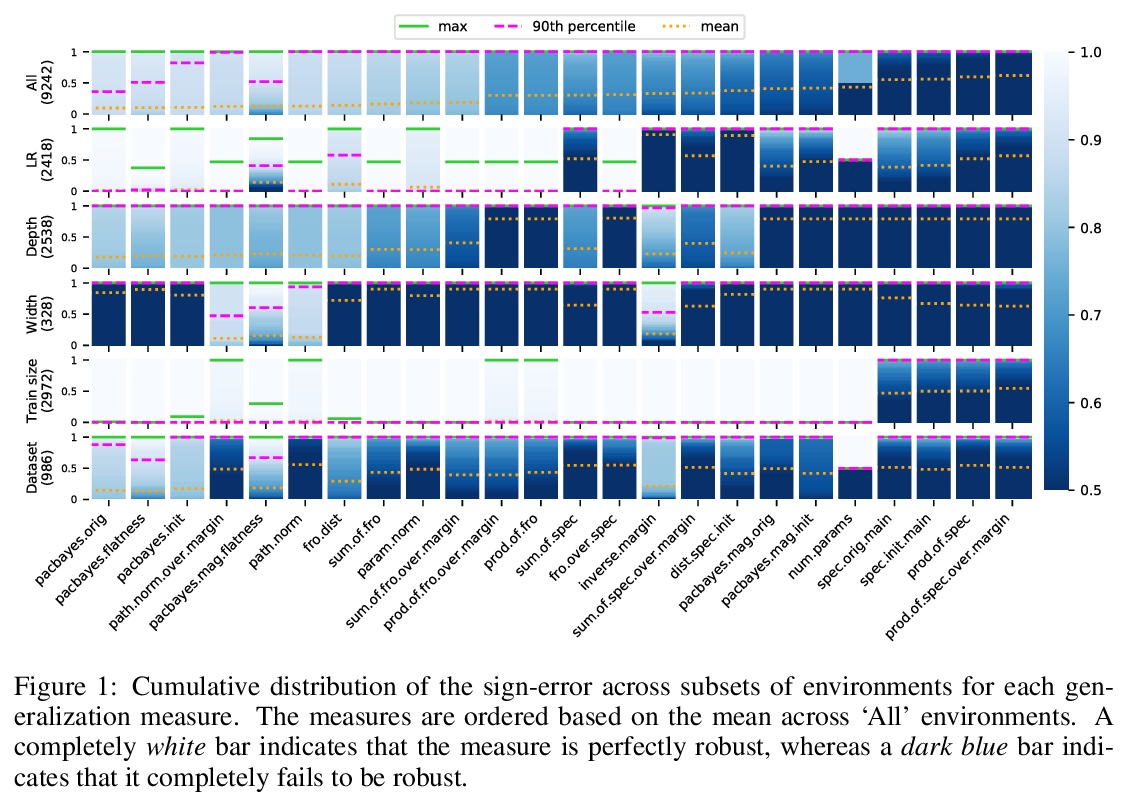

4、[LG] **In Search of Robust Measures of Generalization

G K Dziugaite, A Drouin, B Neal, N Rajkumar, E Caballero, L Wang, I Mitliagkas, D M. Roy

[Element AI & Mila & University of Toronto]

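In search of robust measures of generalization: the work proposes an approach based on distributional robustness, aiming to deepen the understanding of generalization by improving how theories of generalization are evaluated empirically.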

One of the principal scientific challenges in deep learning is explaining generalization, i.e., why the particular way the community now trains networks to achieve small training error also leads to small error on held-out data from the same population. It is widely appreciated that some worst-case theories — such as those based on the VC dimension of the class of predictors induced by modern neural network architectures — are unable to explain empirical performance. A large volume of work aims to close this gap, primarily by developing bounds on generalization error, optimization error, and excess risk. When evaluated empirically, however, most of these bounds are numerically vacuous. Focusing on generalization bounds, this work addresses the question of how to evaluate such bounds empirically. Jiang et al. (2020) recently described a large-scale empirical study aimed at uncovering potential causal relationships between bounds/measures and generalization. Building on their study, we highlight where their proposed methods can obscure failures and successes of generalization measures in explaining generalization. We argue that generalization measures should instead be evaluated within the framework of distributional robustness.
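One way to read the argument is that a summary averaged over experimental settings can hide settings where a measure fails outright, while a worst-case summary exposes them. The sketch below uses made-up numbers. The `sign_error` statistic, the environment names, and the data are all hypothetical, and this is not the paper's evaluation protocol.

```python
import numpy as np

def sign_error(measure_delta, gap_delta):
    """Fraction of paired comparisons in which the measure moves in the
    opposite direction to the generalization gap."""
    return float(np.mean(np.sign(measure_delta) != np.sign(gap_delta)))

# Hypothetical toy data: for each environment, changes in a generalization
# measure and in the observed generalization gap across paired runs.
environments = {
    "env_a": (np.array([0.2, 0.1, 0.3, 0.4]), np.array([0.05, 0.02, 0.04, 0.01])),
    "env_b": (np.array([0.2, 0.1, 0.3, 0.4]), np.array([0.05, 0.02, 0.04, 0.03])),
    "env_c": (np.array([0.2, -0.1, 0.3, -0.4]), np.array([-0.05, 0.02, -0.04, 0.01])),
}

errors = {name: sign_error(m, g) for name, (m, g) in environments.items()}
average_error = float(np.mean(list(errors.values())))  # looks moderate
worst_case_error = max(errors.values())                # reveals the failing environment
```

In this toy setup the measure is always wrong in `env_c`, which the average dilutes and the worst case reports directly.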

https://weibo.com/1402400261/JqLRetYcn

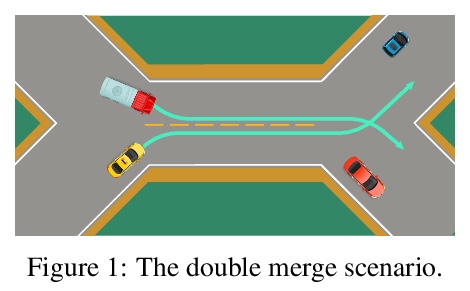

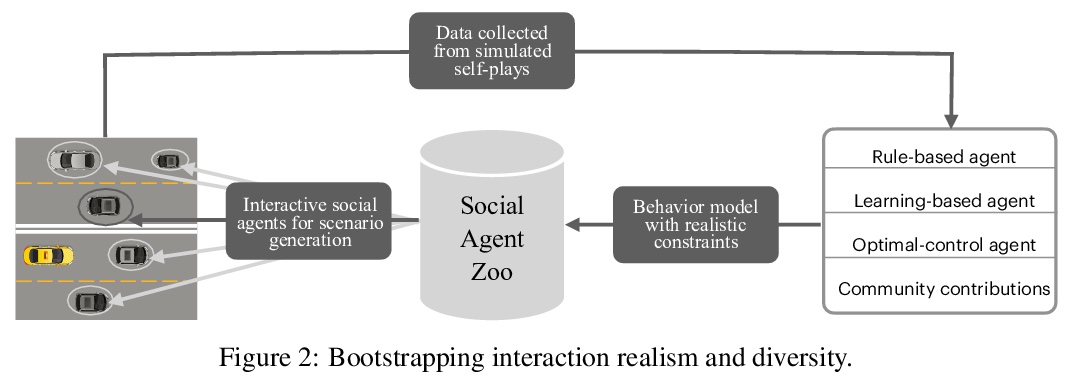

5、[LG] **SMARTS: Scalable Multi-Agent Reinforcement Learning Training School for Autonomous Driving

M Zhou, J Luo, J Villela, Y Yang…

[Noah’s Ark Lab & Shanghai Jiao Tong University]

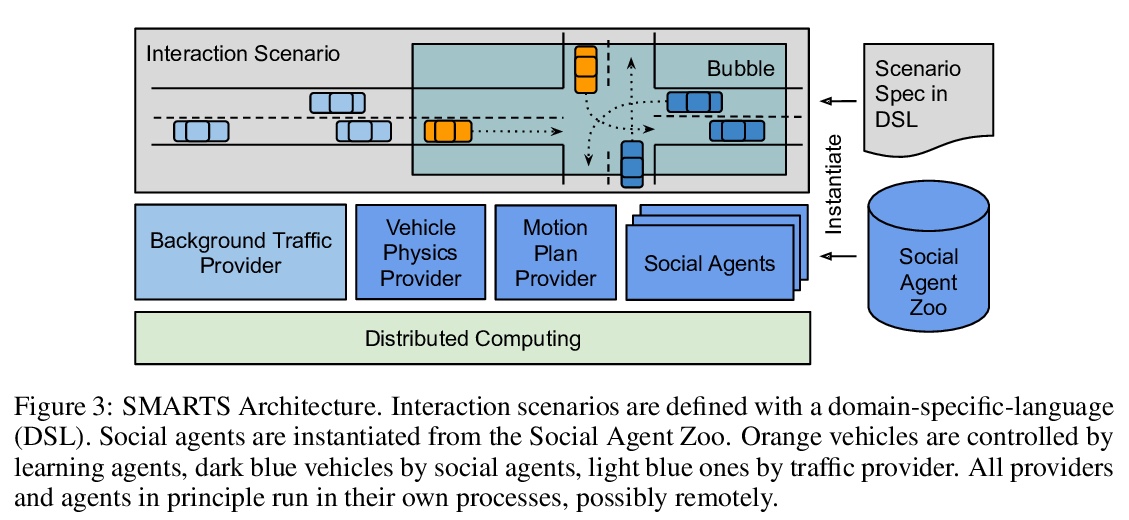

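SMARTS is a scalable multi-agent reinforcement learning training and simulation platform for autonomous driving. It aims to bring together scalable multi-agent learning and scalable, realistic simulation of driving interactions. It supports training, accumulating, and using diverse behavior models of road users, which in turn can be used to create increasingly realistic and diverse interactions, enabling deeper and broader research on multi-agent interaction.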

Multi-agent interaction is a fundamental aspect of autonomous driving in the real world. Despite more than a decade of research and development, the problem of how to competently interact with diverse road users in diverse scenarios remains largely unsolved. Learning methods have much to offer towards solving this problem. But they require a realistic multi-agent simulator that generates diverse and competent driving interactions. To meet this need, we develop a dedicated simulation platform called SMARTS (Scalable Multi-Agent RL Training School). SMARTS supports the training, accumulation, and use of diverse behavior models of road users. These are in turn used to create increasingly more realistic and diverse interactions that enable deeper and broader research on multi-agent interaction. In this paper, we describe the design goals of SMARTS, explain its basic architecture and its key features, and illustrate its use through concrete multi-agent experiments on interactive scenarios. We open-source the SMARTS platform and the associated benchmark tasks and evaluation metrics to encourage and empower research on multi-agent learning for autonomous driving.

https://weibo.com/1402400261/JqLXouUAC

Other papers worth noting:

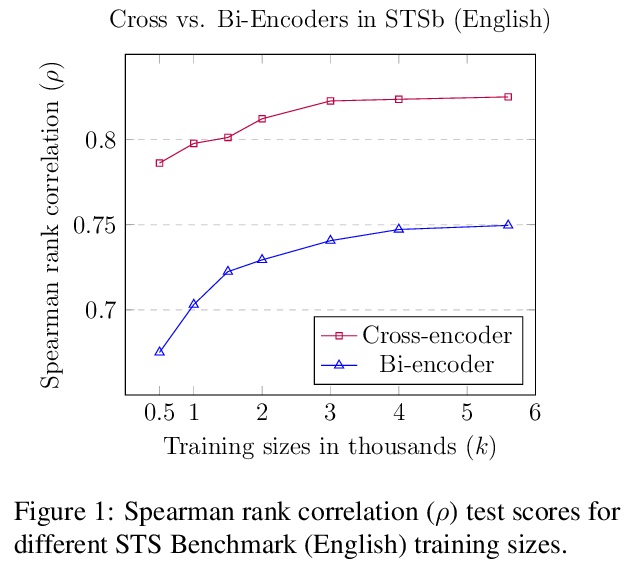

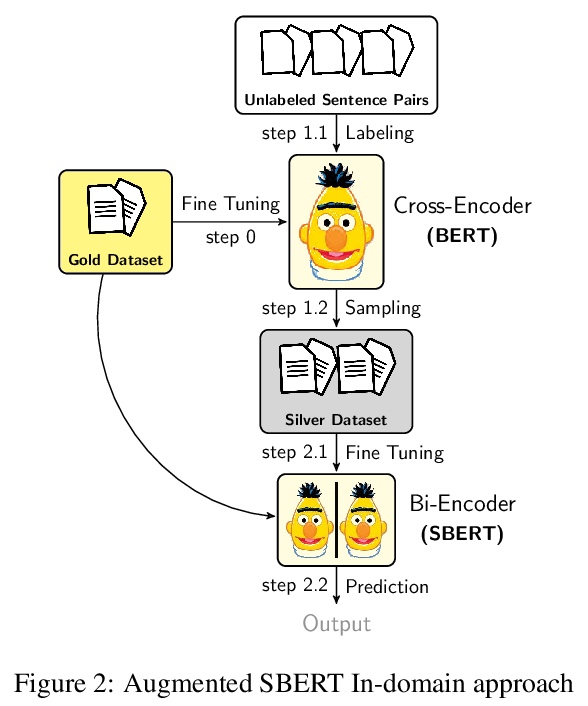

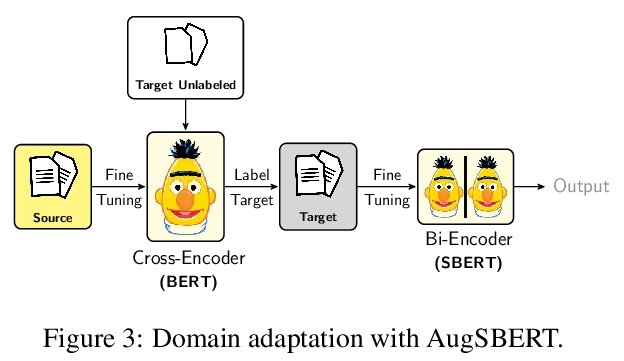

[CL] Augmented SBERT: Data Augmentation Method for Improving Bi-Encoders for Pairwise Sentence Scoring Tasks

Augmented SBERT: a data augmentation method that improves bi-encoders for pairwise sentence scoring tasks

N Thakur, N Reimers, J Daxenberger, I Gurevych

[Technische Universität Darmstadt]

https://weibo.com/1402400261/JqM1HCm2Z

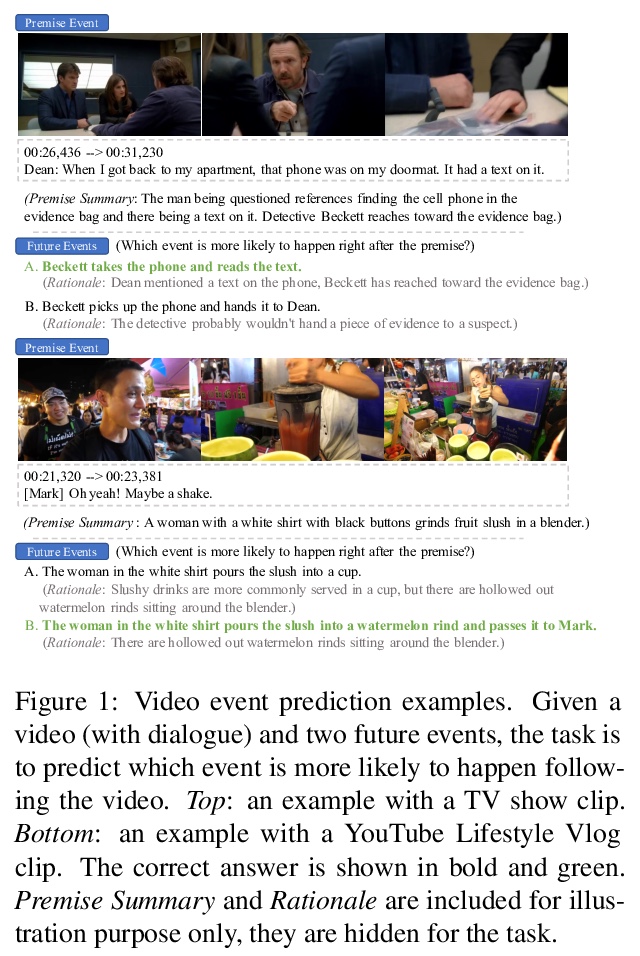

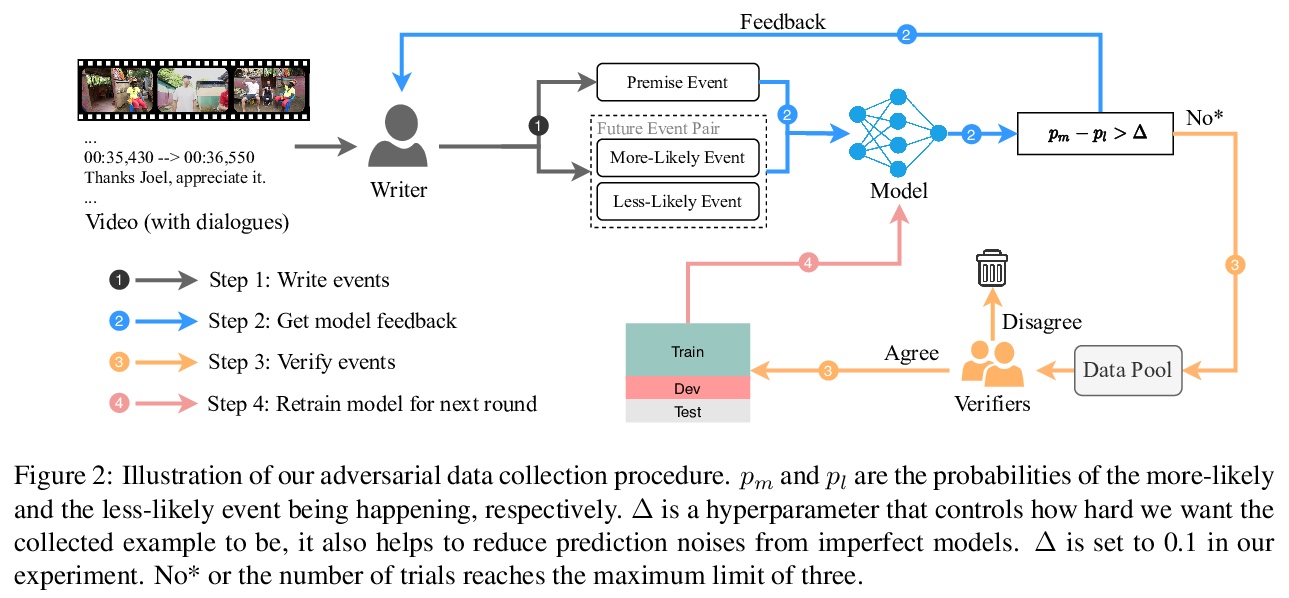

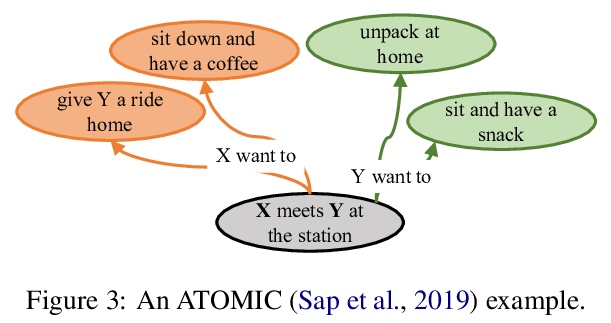

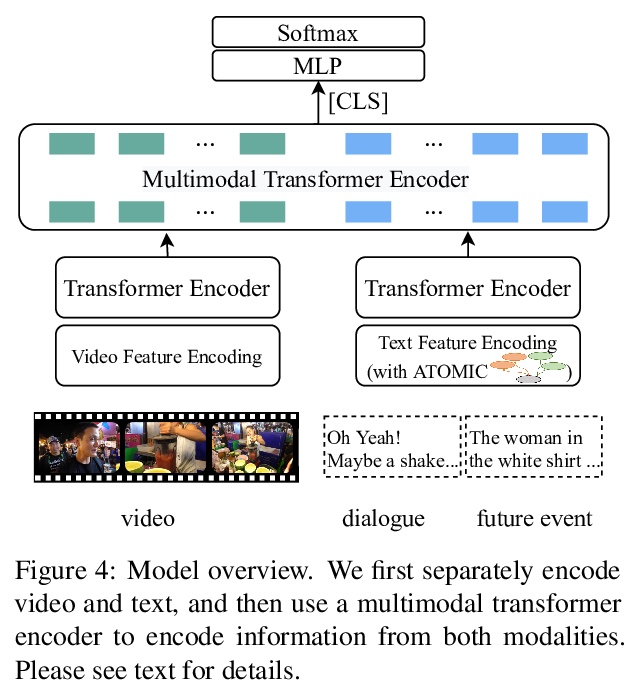

[CL] What is More Likely to Happen Next? Video-and-Language Future Event Prediction

A video-and-language future event prediction task

J Lei, L Yu, T L. Berg, M Bansal

[University of North Carolina at Chapel Hill]

https://weibo.com/1402400261/JqM5AeMXH

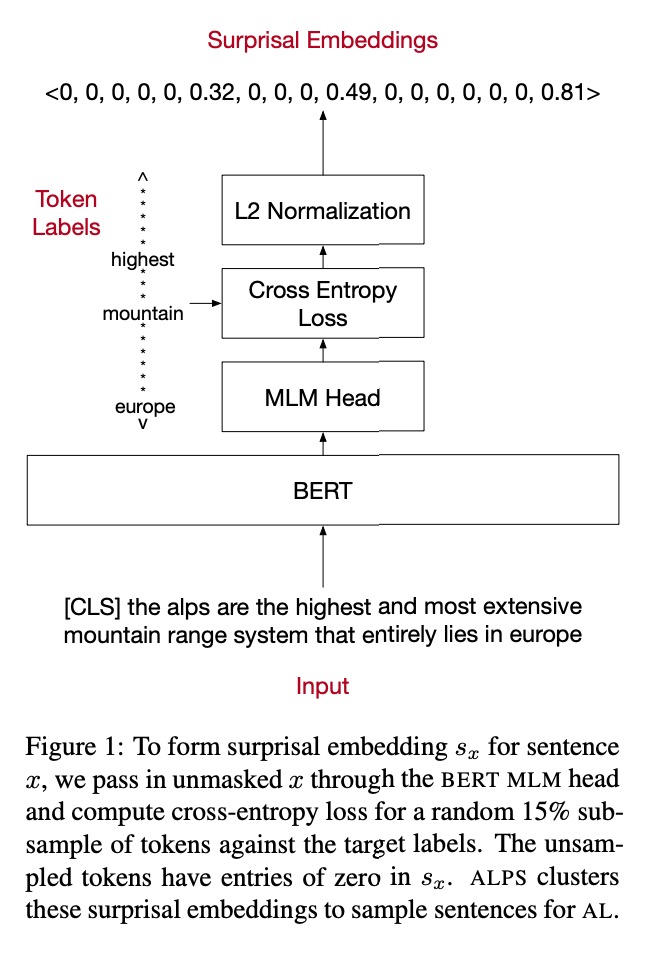

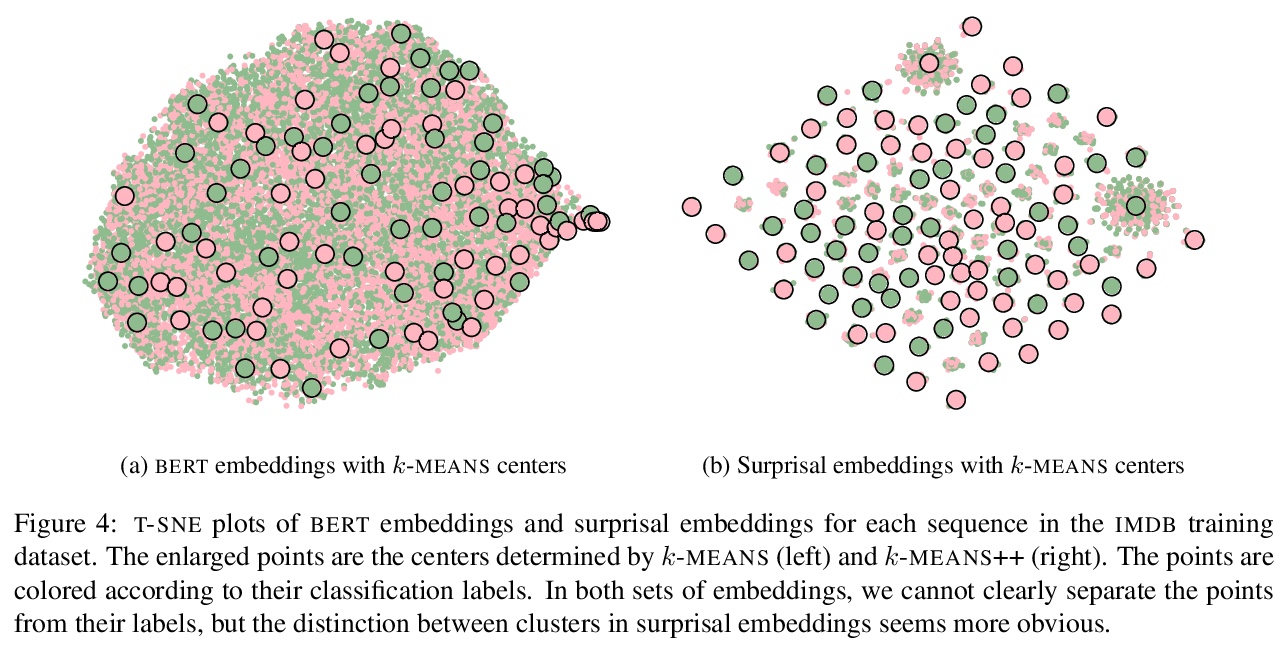

[CL] Cold-start Active Learning through Self-supervised Language Modeling

Cold-starting active learning with self-supervised language modeling

M Yuan, H Lin, J Boyd-Graber

[University of Maryland]

https://weibo.com/1402400261/JqM7yeREj

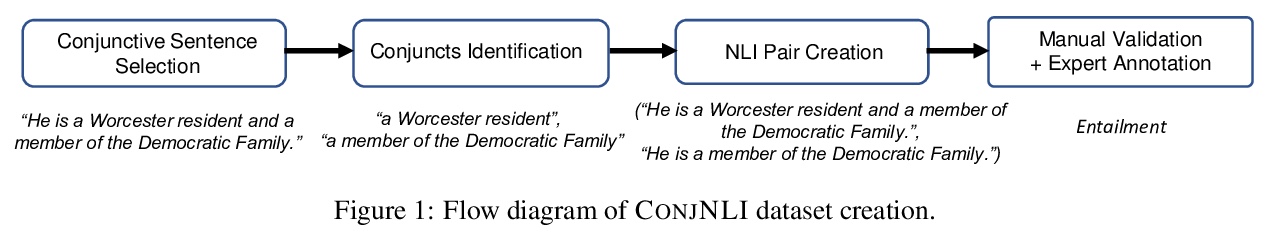

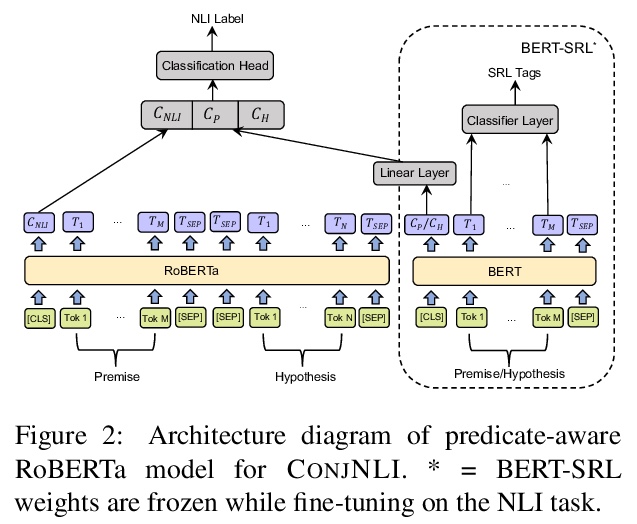

[CL] ConjNLI: Natural Language Inference Over Conjunctive Sentences

ConjNLI: natural language inference over conjunctive sentences

S Saha, Y Nie, M Bansal

[UNC Chapel Hill]

https://weibo.com/1402400261/JqM9o1VVu

[LG] A Wigner-Eckart Theorem for Group Equivariant Convolution Kernels

A Wigner-Eckart theorem for group-equivariant convolution kernels

L Lang, M Weiler

[University of Amsterdam]

https://weibo.com/1402400261/JqMbe7HqT

[LG] Exchanging Lessons Between Algorithmic Fairness and Domain Generalization

Mutual lessons between algorithmic fairness and domain generalization

E Creager, J Jacobsen, R Zemel

[Vector Institute & University of Toronto]

https://weibo.com/1402400261/JqMdV4Uhl

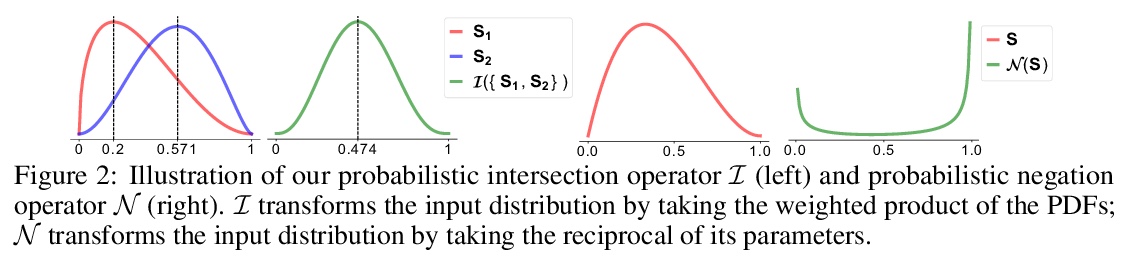

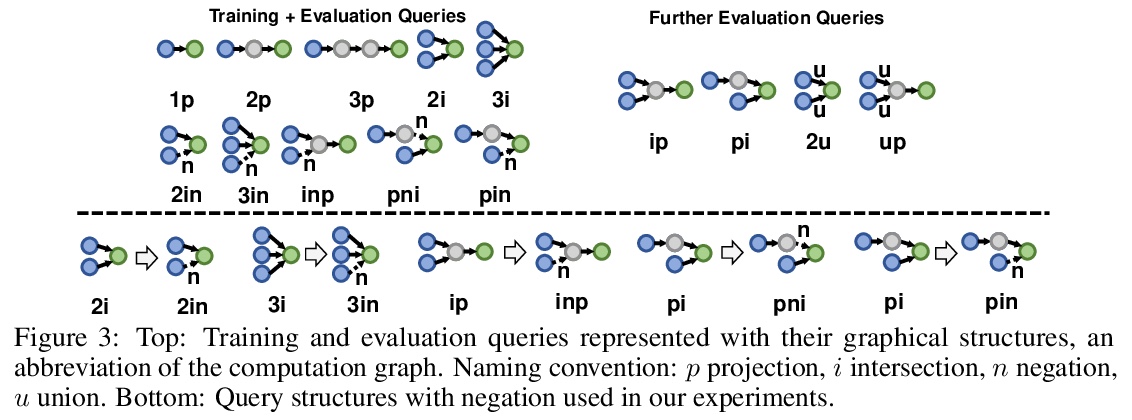

[LG] Beta Embeddings for Multi-Hop Logical Reasoning in Knowledge Graphs

Beta embeddings for multi-hop logical reasoning over knowledge graphs

H Ren, J Leskovec

[Stanford University]

https://weibo.com/1402400261/JqMh3c1ZD

[CL] Challenges in Information Seeking QA: Unanswerable Questions and Paragraph Retrieval

Challenges in information-seeking QA: unanswerable questions and paragraph retrieval

A Asai, E Choi

[University of Washington & The University of Texas at Austin]

https://weibo.com/1402400261/JqMjDlmN2