LG - Machine Learning, CV - Computer Vision, CL - Computation and Language, AS - Audio and Speech, RO - Robotics

1、[AS] *Contrastive Learning of General-Purpose Audio Representations

A Saeed, D Grangier, N Zeghidour

[Eindhoven University of Technology & Google Research]

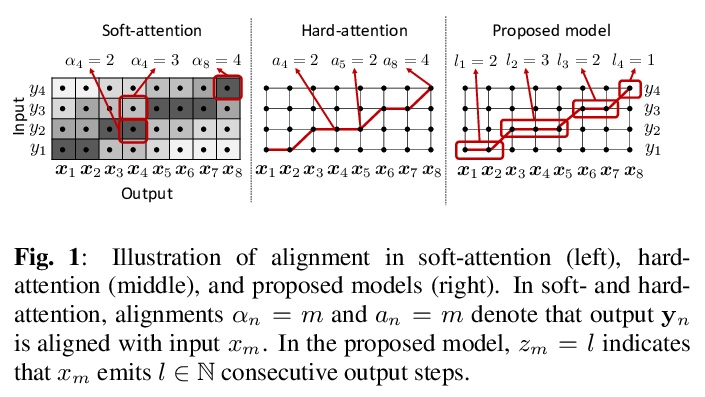

通用音频对比自监督表示学习,提出COLA,一种基于对比学习用于学习通用音频表示的自监督预训练方法。COLA用于学习一种表示,该表示对从同一录音中提取的音频段赋予高度相似度,而对不同录音中的音频段赋予较低的相似度。基于计算机视觉的对比学习和强化学习的最新进展,设计了一个轻量级、易于实现的自监督音频模型。在一系列具有挑战性的下游任务上取得了较早期无监督方法显著的性能改进,通过微调显著提高了有监督方法基线的结果。**

https://weibo.com/1402400261/JqULC0NrF

We introduce COLA, a self-supervised pre-training approach for learning a general-purpose representation of audio. Our approach is based on contrastive learning: it learns a representation which assigns high similarity to audio segments extracted from the same recording while assigning lower similarity to segments from different recordings. We build on top of recent advances in contrastive learning for computer vision and reinforcement learning to design a lightweight, easy-to-implement self-supervised model of audio. We pre-train embeddings on the large-scale Audioset database and transfer these representations to 9 diverse classification tasks, including speech, music, animal sounds, and acoustic scenes. We show that despite its simplicity, our method significantly outperforms previous self-supervised systems. We furthermore conduct ablation studies to identify key design choices and release a library to pre-train and fine-tune COLA models.
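The objective described above (high similarity for segments of the same recording, lower similarity for segments of different recordings) can be sketched as a cross-entropy over in-batch similarities. This is a minimal illustration, not the released COLA library: the function names and the plain dot-product similarity are assumptions, since the abstract does not state which similarity function is used.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors (assumed similarity measure)."""
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(anchors, positives):
    """Mean cross-entropy where the correct 'class' for row i is column i.

    anchors[i] and positives[i] are embeddings of two segments from the
    same recording; positives[j] with j != i serve as negatives.
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        logits = [dot(anchors[i], positives[j]) for j in range(n)]
        # Numerically stable log-sum-exp over the row of similarities.
        m = max(logits)
        log_norm = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_norm - logits[i]
    return total / n


if __name__ == "__main__":
    segs = [[1.0, 0.0], [0.0, 1.0]]
    swapped = [[0.0, 1.0], [1.0, 0.0]]
    # Matching pairs should give a lower loss than mismatched pairs.
    print(contrastive_loss(segs, segs), contrastive_loss(segs, swapped))
```

Using the other items in the batch as negatives keeps the sketch small; no separate negative sampling step is needed.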

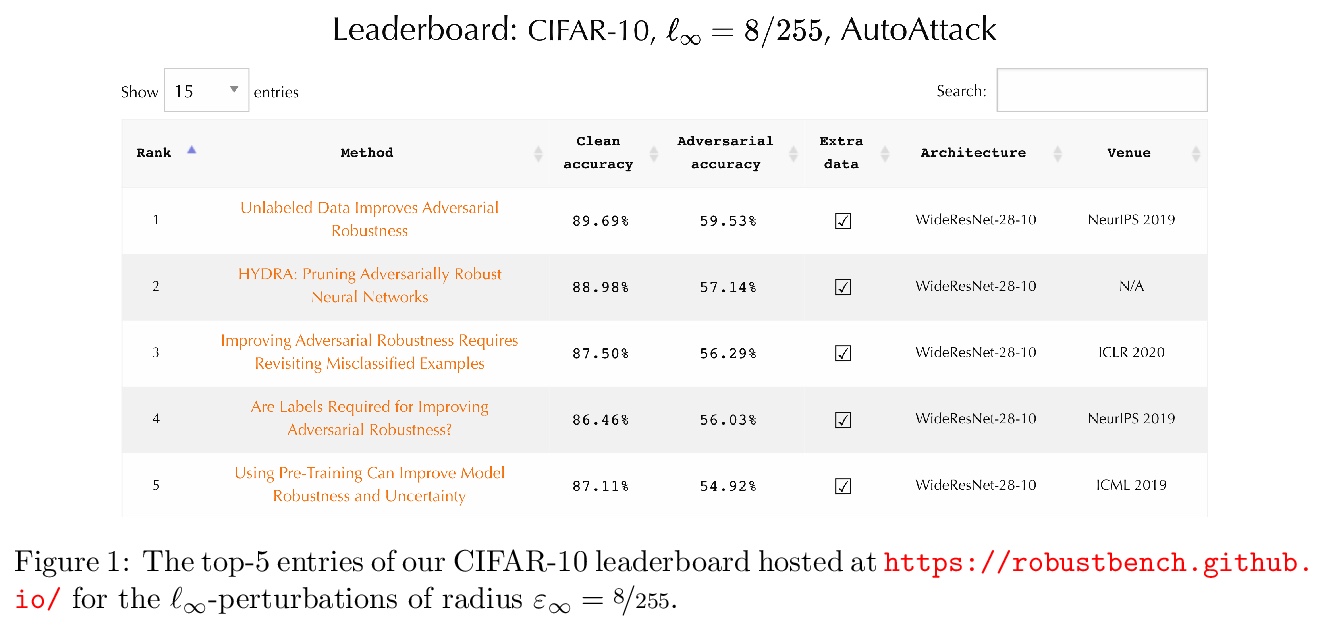

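2. [LG] RobustBench: a standardized adversarial robustness benchmark

F Croce, M Andriushchenko, V Sehwag, N Flammarion, M Chiang, P Mittal, M Hein

[University of Tübingen & EPFL & Princeton University]

RobustBench, a standardized adversarial robustness benchmark, aims to reflect the robustness of the considered models as accurately as possible within a reasonable computational budget. It evaluates the benchmarked models with AutoAttack, an ensemble of white-box and black-box attacks, and provides unified access to state-of-the-art robust models to facilitate their downstream applications.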

Evaluation of adversarial robustness is often error-prone, leading to overestimation of the true robustness of models. While adaptive attacks designed for a particular defense are a way out of this, there are only approximate guidelines on how to perform them. Moreover, adaptive evaluations are highly customized for particular models, which makes it difficult to compare different defenses. Our goal is to establish a standardized benchmark of adversarial robustness, which as accurately as possible reflects the robustness of the considered models within a reasonable computational budget. This requires imposing some restrictions on the admitted models to rule out defenses that only make gradient-based attacks ineffective without improving actual robustness. We evaluate robustness of models for our benchmark with AutoAttack, an ensemble of white- and black-box attacks which was recently shown in a large-scale study to improve almost all robustness evaluations compared to the original publications. Our leaderboard, hosted at this http URL, aims at reflecting the current state of the art on a set of well-defined tasks in ℓ∞- and ℓ2-threat models with possible extensions in the future. Additionally, we open-source the library this http URL that provides unified access to state-of-the-art robust models to facilitate their downstream applications. Finally, based on the collected models, we analyze general trends in ℓp-robustness and its impact on other tasks such as robustness to various distribution shifts and out-of-distribution detection.
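To make the idea of evaluating with an ensemble of attacks concrete, the sketch below counts a test point as robust only if the model classifies it correctly and no attack in the ensemble succeeds on it. This worst-case aggregation is an assumption made for illustration, and `robust_accuracy` is a hypothetical helper, not part of the RobustBench or AutoAttack libraries.

```python
def robust_accuracy(clean_correct, attack_success):
    """Fraction of points that are correct and survive every attack.

    clean_correct: list of bools, True if the clean input is classified
        correctly.
    attack_success: one list of bools per attack, True where that attack
        found an adversarial example for the point.
    """
    robust = 0
    for i, correct in enumerate(clean_correct):
        broken = any(per_attack[i] for per_attack in attack_success)
        if correct and not broken:
            robust += 1
    return robust / len(clean_correct)


if __name__ == "__main__":
    clean = [True, True, True, False]
    attack_a = [True, False, False, False]
    attack_b = [False, True, False, False]
    # Each attack alone leaves two robust points; together only one remains.
    print(robust_accuracy(clean, [attack_a]))
    print(robust_accuracy(clean, [attack_a, attack_b]))
```

Under this aggregation, adding an attack to the ensemble can only lower the reported robust accuracy, which is why an ensemble is less likely to overestimate robustness than any single attack.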

https://weibo.com/1402400261/JqUSdAYj0

3、[LG] **Stationary Activations for Uncertainty Calibration in Deep Learning

L Meronen, C Irwanto, A Solin

[Aalto University]

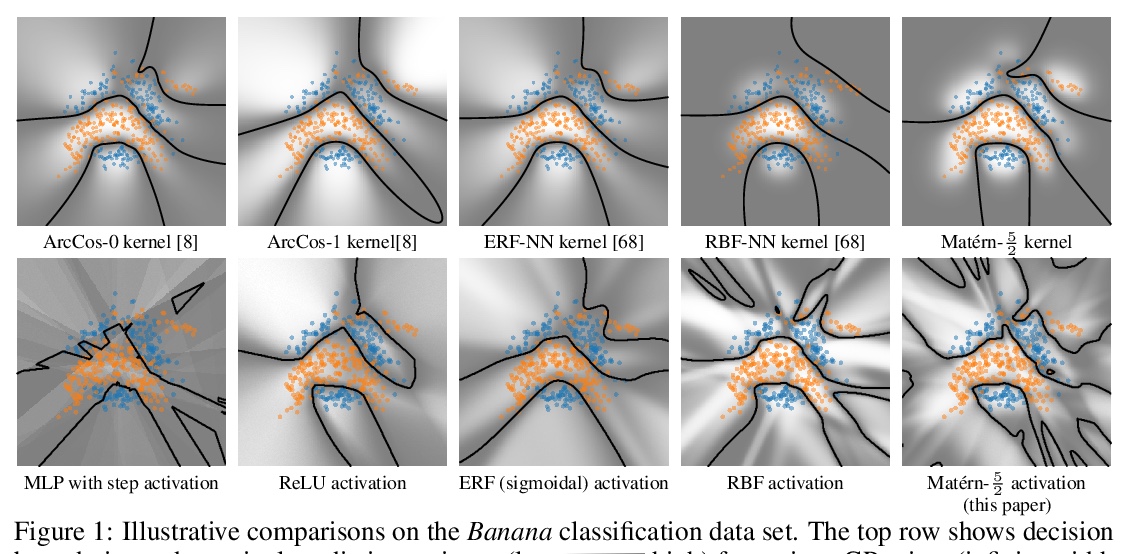

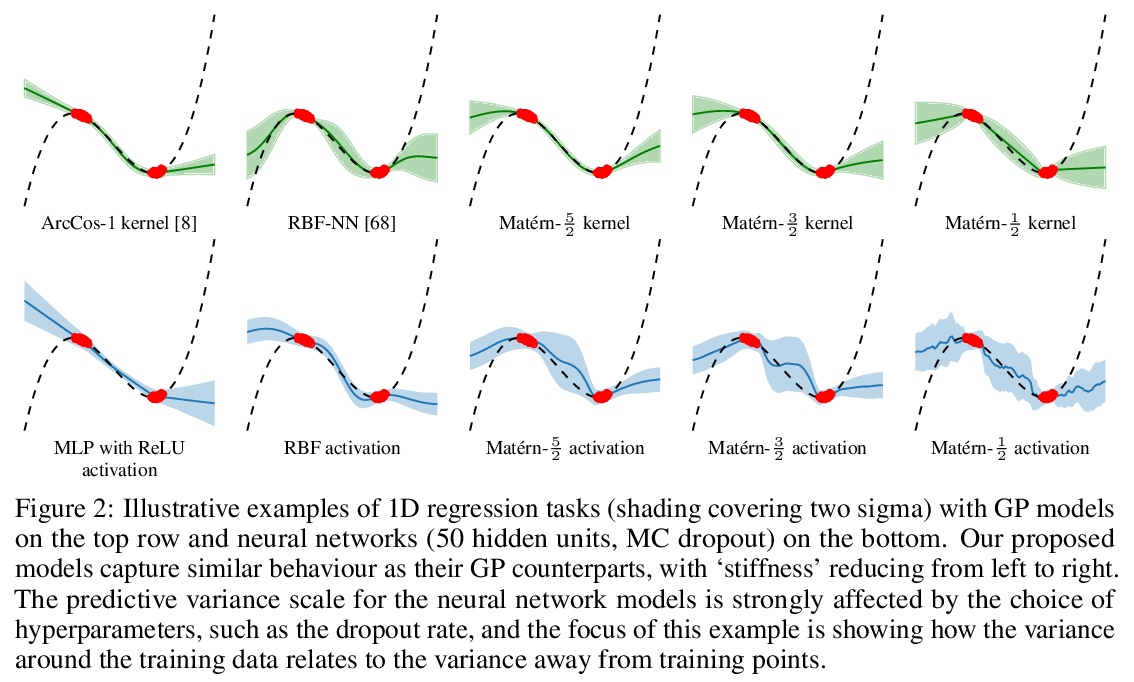

面向深度学习不确定度校准的固定激活,引入了一族新的非线性神经网络激活函数,用于模拟高斯过程模型中广泛使用的Matérn核族所具有的特性,这族激活函数涵盖了一系列具有不同程度均方可微性的局部平稳模型,将神经网络激活函数与Matern核族(协方差函数)联系起来。实验表明,该方法在贝叶斯深度学习任务中具有广泛的适用性和良好的实用性能。**

We introduce a new family of non-linear neural network activation functions that mimic the properties induced by the widely-used Matérn family of kernels in Gaussian process (GP) models. This class spans a range of locally stationary models of various degrees of mean-square differentiability. We show an explicit link to the corresponding GP models in the case that the network consists of one infinitely wide hidden layer. In the limit of infinite smoothness the Matérn family results in the RBF kernel, and in this case we recover RBF activations. Matérn activation functions result in similar appealing properties to their counterparts in GP models, and we demonstrate that the local stationarity property together with limited mean-square differentiability shows both good performance and uncertainty calibration in Bayesian deep learning tasks. In particular, local stationarity helps calibrate out-of-distribution (OOD) uncertainty. We demonstrate these properties on classification and regression benchmarks and a radar emitter classification task.
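For readers unfamiliar with the Matérn family, the sketch below evaluates the standard closed-form Matérn kernels for smoothness ν = 1/2, 3/2 and 5/2 alongside the RBF kernel that arises in the infinite-smoothness limit. It illustrates only the GP kernels that the activations are meant to mimic, not the activation functions from the paper; the function names and the unit length scale default are choices made for this example.

```python
import math


def matern(r, nu, length_scale=1.0):
    """Matérn kernel value at distance r for nu in {0.5, 1.5, 2.5}."""
    s = abs(r) / length_scale
    if nu == 0.5:
        return math.exp(-s)
    if nu == 1.5:
        a = math.sqrt(3.0) * s
        return (1.0 + a) * math.exp(-a)
    if nu == 2.5:
        a = math.sqrt(5.0) * s
        return (1.0 + a + a * a / 3.0) * math.exp(-a)
    raise ValueError("only nu = 0.5, 1.5, 2.5 have the closed forms used here")


def rbf(r, length_scale=1.0):
    """RBF (squared-exponential) kernel, the infinite-smoothness limit."""
    s = r / length_scale
    return math.exp(-0.5 * s * s)


if __name__ == "__main__":
    for nu in (0.5, 1.5, 2.5):
        print(nu, matern(1.0, nu))
    print("rbf", rbf(1.0))
```

All four kernels depend only on the distance `r`, which is the stationarity property the abstract refers to; larger ν gives a smoother kernel near `r = 0`.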

https://weibo.com/1402400261/JqUWhiex4

4、[LG] **Identifying Learning Rules From Neural Network Observables

A Nayebi, S Srivastava, S Ganguli, D L.K. Yamins

[Stanford University]

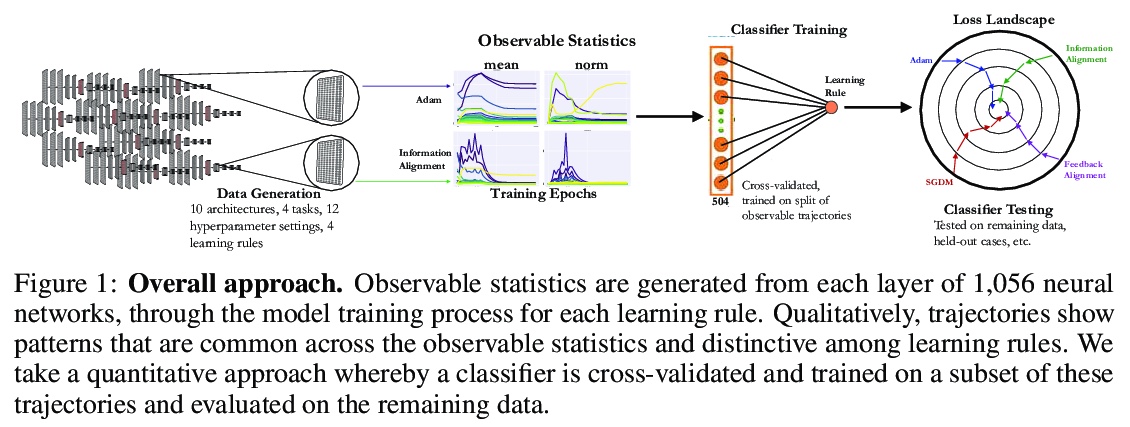

从神经网络可观察量识别学习规则,用一种“虚拟实验”的方法来解决识别可度量的可观察量的问题,用人工神经网络模拟理想化的神经科学实验,生成一个大规模的学习轨迹数据集,这些学习轨迹是在各种神经网络架构、损失函数、学习规则超参数和参数初始化中测量的聚合统计数据。再采用区分性方法,训练线性和简单的非线性分类器从特征中识别学习规则。**

The brain modifies its synaptic strengths during learning in order to better adapt to its environment. However, the underlying plasticity rules that govern learning are unknown. Many proposals have been suggested, including Hebbian mechanisms, explicit error backpropagation, and a variety of alternatives. It is an open question as to what specific experimental measurements would need to be made to determine whether any given learning rule is operative in a real biological system. In this work, we take a “virtual experimental” approach to this problem. Simulating idealized neuroscience experiments with artificial neural networks, we generate a large-scale dataset of learning trajectories of aggregate statistics measured in a variety of neural network architectures, loss functions, learning rule hyperparameters, and parameter initializations. We then take a discriminative approach, training linear and simple non-linear classifiers to identify learning rules from features based on these observables. We show that different classes of learning rules can be separated solely on the basis of aggregate statistics of the weights, activations, or instantaneous layer-wise activity changes, and that these results generalize to limited access to the trajectory and held-out architectures and learning curricula. We identify the statistics of each observable that are most relevant for rule identification, finding that statistics from network activities across training are more robust to unit undersampling and measurement noise than those obtained from the synaptic strengths. Our results suggest that activation patterns, available from electrophysiological recordings of post-synaptic activities on the order of several hundred units, frequently measured at wider intervals over the course of learning, may provide a good basis on which to identify learning rules.
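The feature-construction step described above can be sketched as follows: each checkpoint of a learning trajectory is summarized by a few aggregate statistics, and the summaries are concatenated into one feature vector for a classifier. The particular statistics (mean, variance, largest magnitude) and the name `trajectory_features` are hypothetical choices for illustration, not the set used in the paper.

```python
import statistics


def trajectory_features(trajectory):
    """Flatten per-checkpoint aggregate statistics into one feature vector.

    trajectory: list of checkpoints, each a list of floats (for example,
    the weights or activations of one layer at that point in training).
    """
    features = []
    for values in trajectory:
        features.append(statistics.fmean(values))       # average value
        features.append(statistics.pvariance(values))   # spread
        features.append(max(abs(v) for v in values))    # largest magnitude
    return features


if __name__ == "__main__":
    # Two checkpoints of a toy two-unit observable.
    print(trajectory_features([[1.0, -1.0], [2.0, 2.0]]))
```

Vectors like this, one per simulated training run and labeled with the learning rule that produced the run, would then serve as input to the linear or simple non-linear classifiers mentioned in the abstract.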

https://weibo.com/1402400261/JqV0rg83U

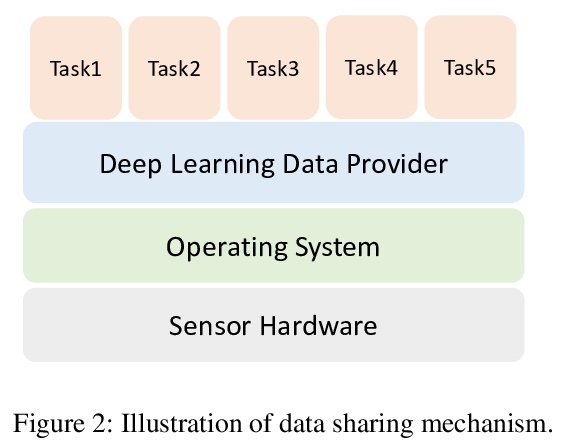

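5. [LG] Deep Learning in the Era of Edge Computing: Challenges and Opportunities

M Zhang, F Zhang, N D. Lane, Y Shu, X Zeng, B Fang, S Yan, H Xu

[Michigan State University & AInnovation & Oxford University & Microsoft Research]

Challenges and opportunities for deep learning in the era of edge computing. The chapter presents eight challenges at the intersection of computer systems, networking, and machine learning. These challenges stem from gaps such as the mismatch between the high computational demands of DNN models and the limited battery life of edge devices, data discrepancies in real-world environments, the need to process heterogeneous sensor data, and running concurrent deep learning tasks on different computing units. Addressing them will let resource-limited edge devices make more effective use of deep learning's potential.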

The era of edge computing has arrived. Although the Internet is the backbone of edge computing, its true value lies at the intersection of gathering data from sensors and extracting meaningful information from the sensor data. We envision that in the near future, the majority of edge devices will be equipped with machine intelligence powered by deep learning. However, deep learning-based approaches require a large volume of high-quality data to train and are very expensive in terms of computation, memory, and power consumption. In this chapter, we describe eight research challenges and promising opportunities at the intersection of computer systems, networking, and machine learning. Solving those challenges will enable resource-limited edge devices to leverage the amazing capability of deep learning. We hope this chapter could inspire new research that will eventually lead to the realization of the vision of intelligent edge.

https://weibo.com/1402400261/JqV4xqLjf

Other papers worth noting:

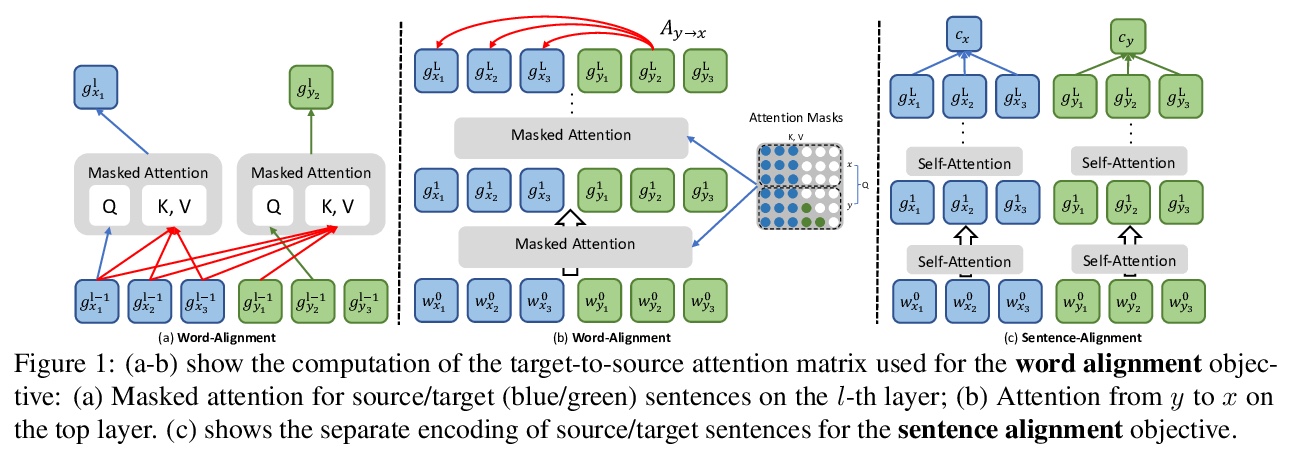

[CL] Explicit Alignment Objectives for Multilingual Bidirectional Encoders

Explicit alignment objectives for multilingual bidirectional encoders (AMBER)

J Hu, M Johnson, O Firat, A Siddhant, G Neubig

[CMU & Google AI]

https://weibo.com/1402400261/JqV8C4yHX

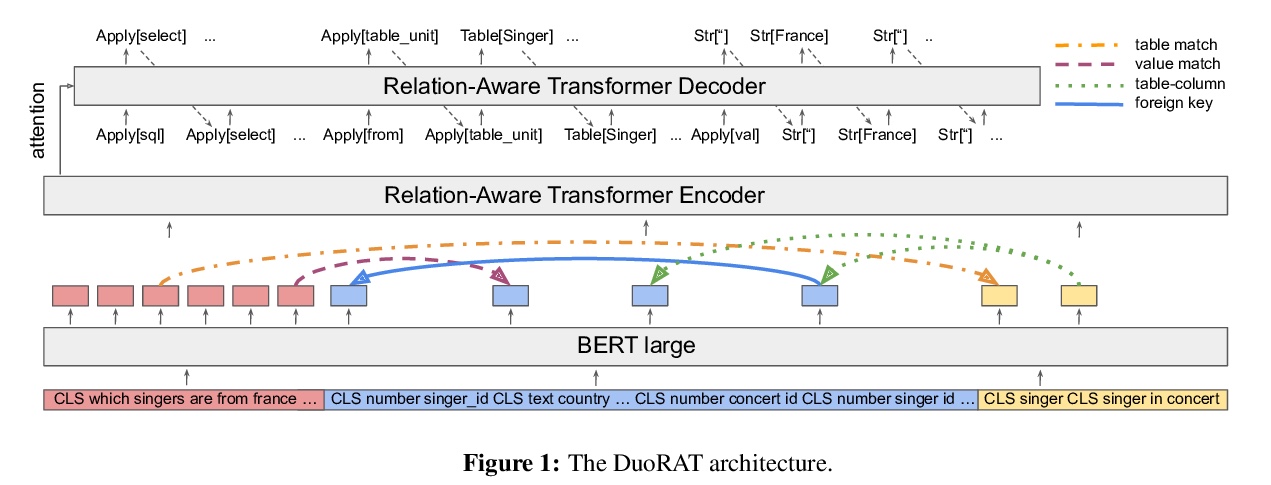

[CL] DuoRAT: Towards Simpler Text-to-SQL Models

DuoRAT: simplifying Text-to-SQL models

T Scholak, R Li, D Bahdanau, H d Vries, C Pal

[Element AI]

https://weibo.com/1402400261/JqVa4uHIx

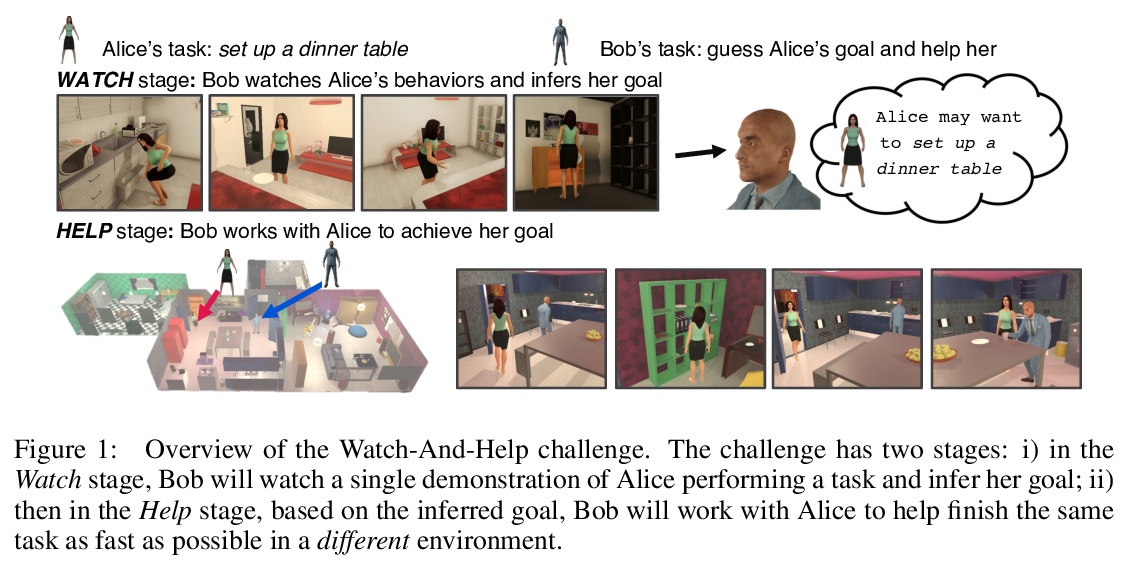

[LG] Watch-And-Help: A Challenge for Social Perception and Human-AI Collaboration

Watch-And-Help: a challenge for social perception and human-AI collaboration

X Puig, T Shu, S Li, Z Wang, J B. Tenenbaum, S Fidler, A Torralba

[MIT & ETH Zurich & University of Toronto]

https://weibo.com/1402400261/JqVbUzniU

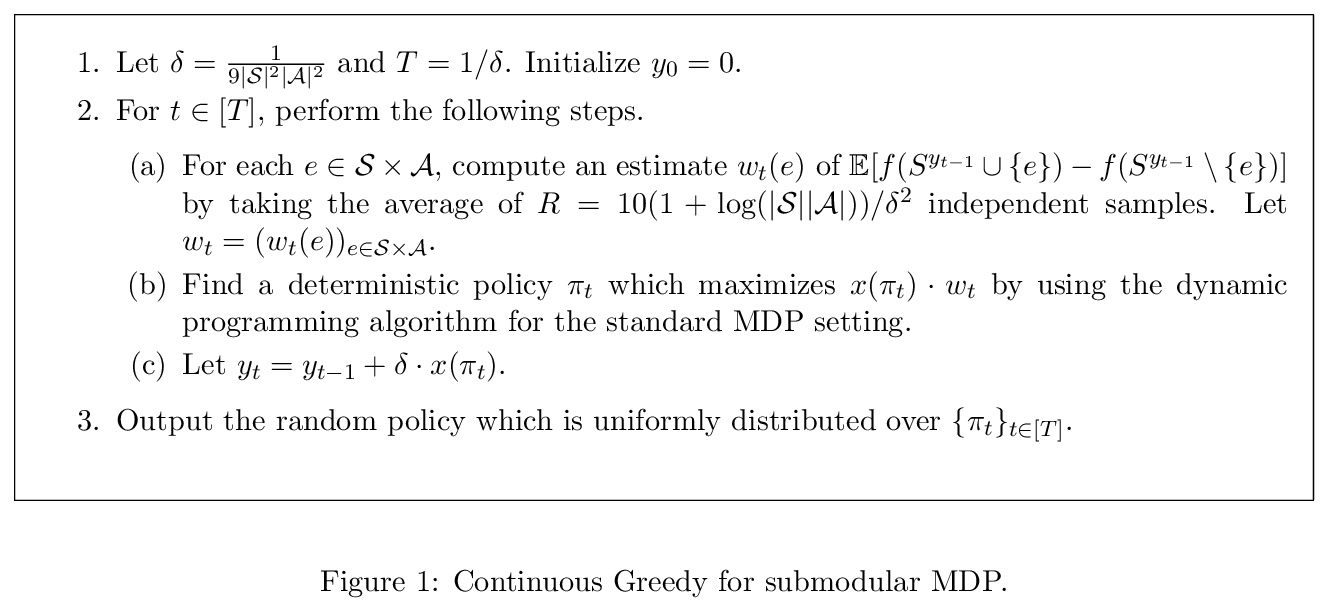

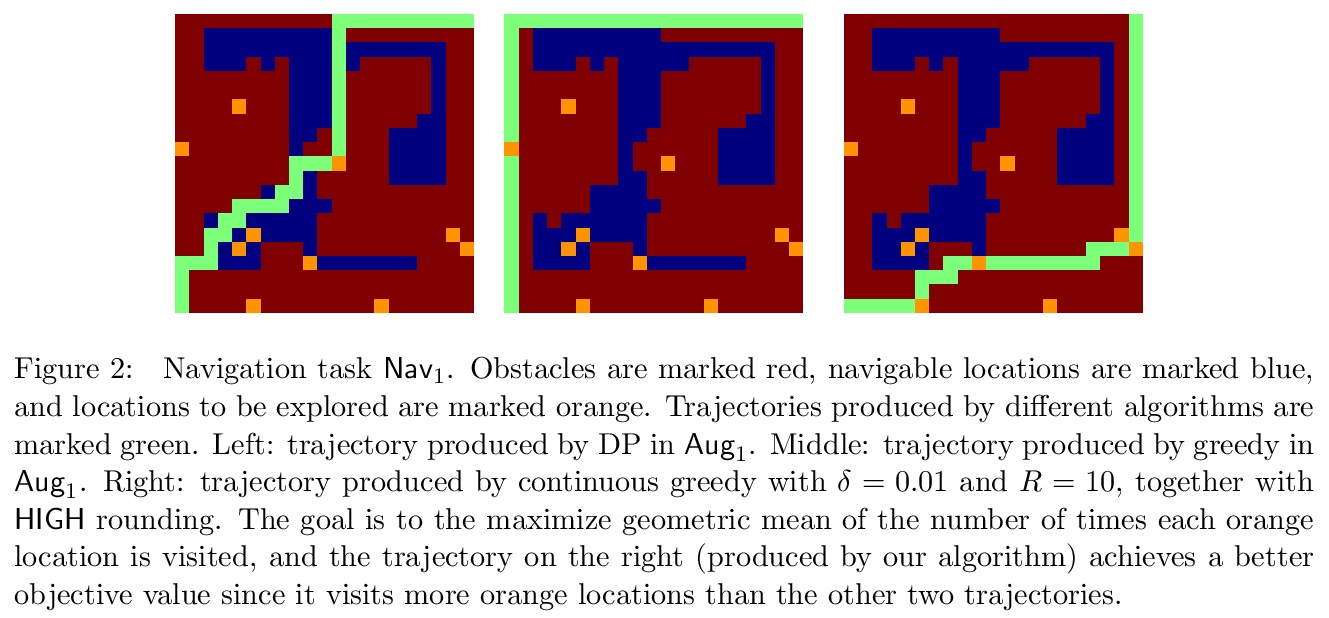

[LG] Planning with Submodular Objective Functions

Planning with submodular objective functions

R Wang, H Zhang, D S Chaplot, D Garagić, R Salakhutdinov

[CMU & Duke University & Sarcos Robotics]

https://weibo.com/1402400261/JqVdp0xR2

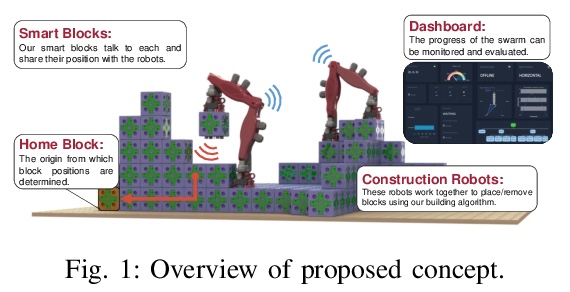

[RO] SMAC: Symbiotic Multi-Agent Construction

SMAC: symbiotic multi-agent construction

C Wagner, N Dhanaraj, T Rizzo, J Contreras, H Liang, G Lewin, C Pinciroli

[Worcester Polytechnic Institute]

https://weibo.com/1402400261/JqVeJt5hU

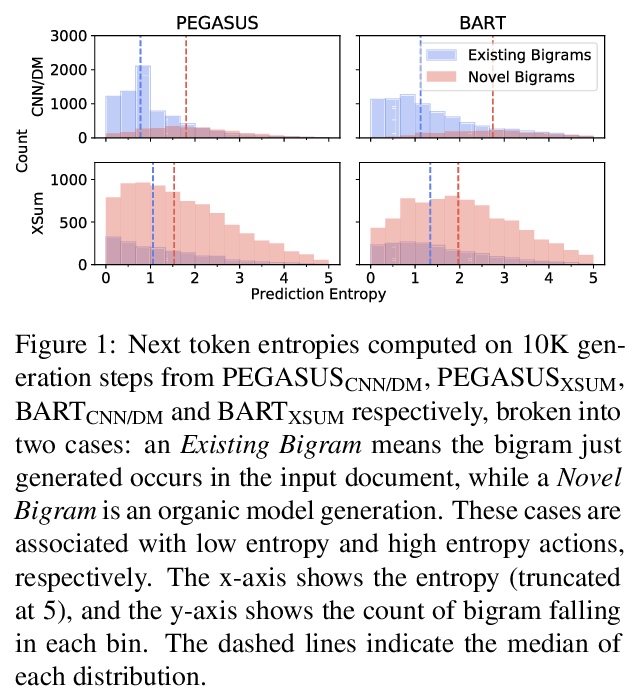

[CL] Understanding Neural Abstractive Summarization Models via Uncertainty

Understanding neural abstractive summarization models through uncertainty

J Xu, S Desai, G Durrett

[The University of Texas at Austin]

https://weibo.com/1402400261/JqVfUrbpG

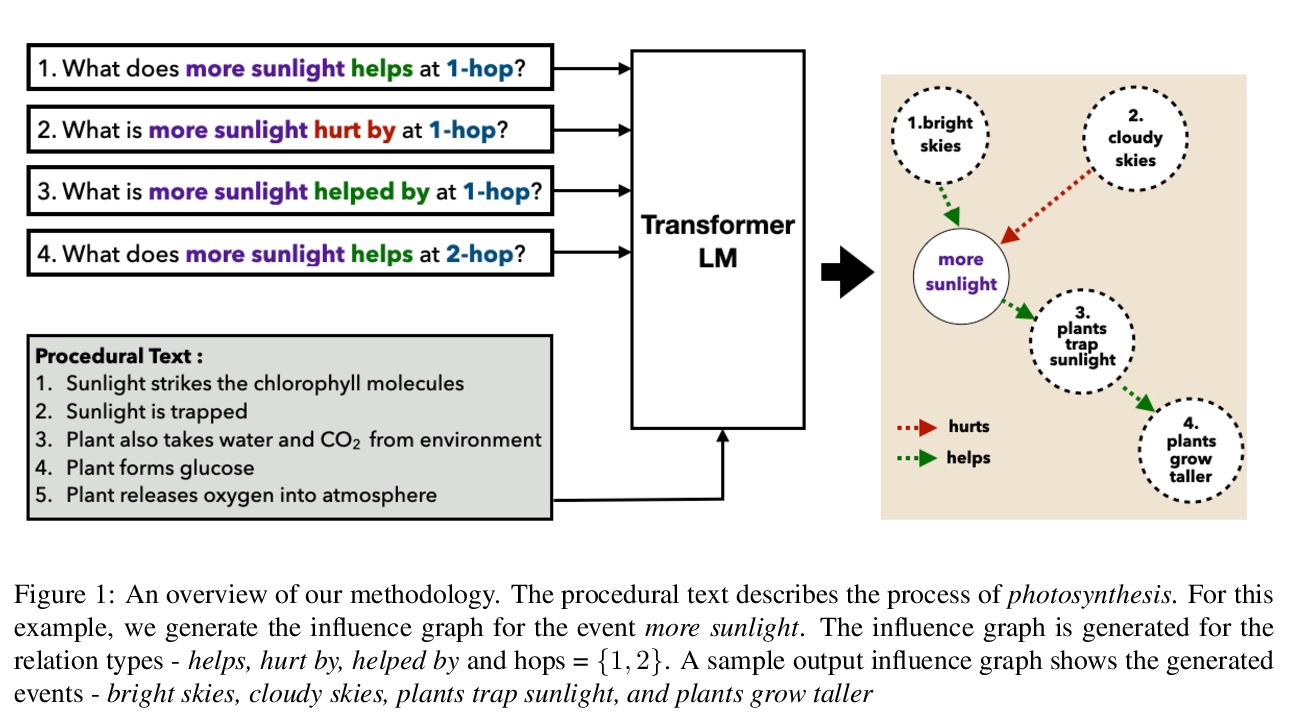

[CL] EIGEN: Event Influence GENeration using Pre-trained Language Models

EIGEN: event influence generation based on pre-trained language models

A Madaan, D Rajagopal, Y Yang, A Ravichander, E Hovy, S Prabhumoye

[CMU]

https://weibo.com/1402400261/JqVhmjeIP

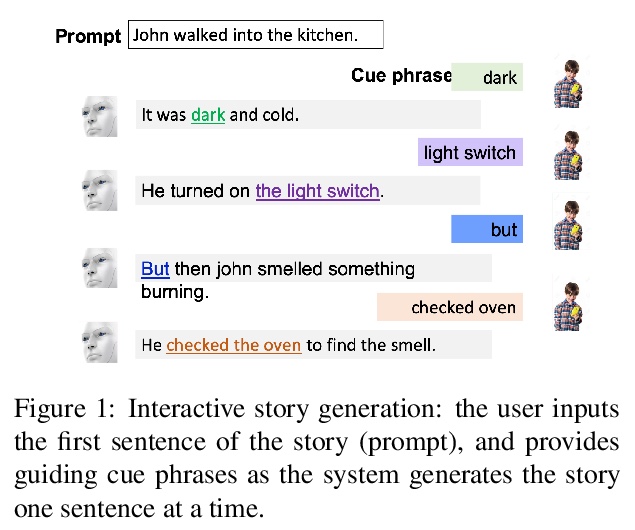

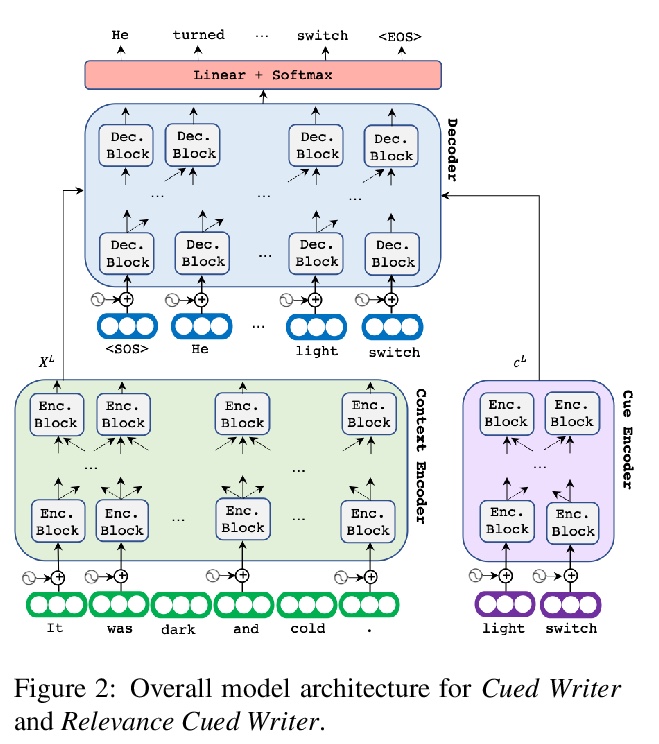

[CL] Cue Me In: Content-Inducing Approaches to Interactive Story Generation

Cue Me In: content-inducing approaches to interactive story generation

F Brahman, A Petrusca, S Chaturvedi

[University of North Carolina at Chapel Hill]

https://weibo.com/1402400261/JqViwCods

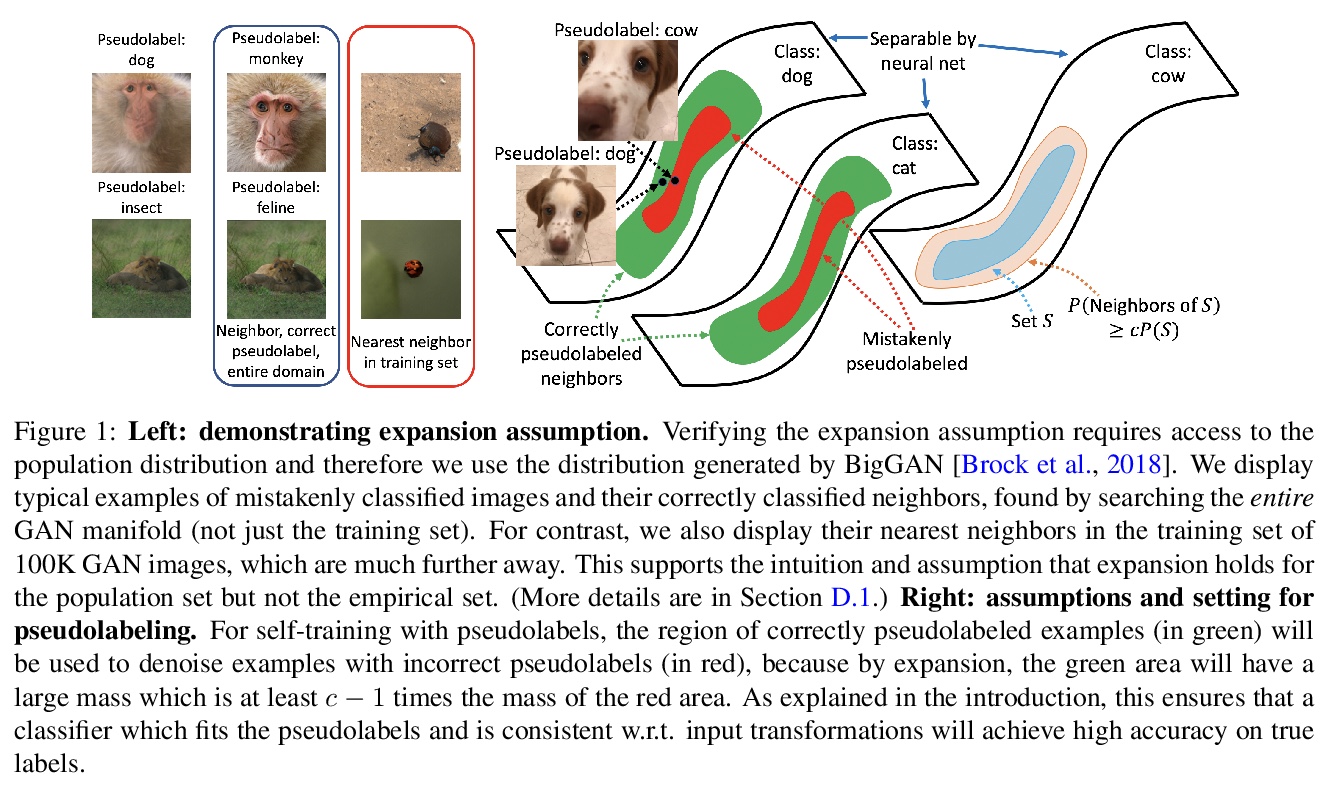

[LG] Theoretical Analysis of Self-Training with Deep Networks on Unlabeled Data

Theoretical analysis of self-training with deep networks on unlabeled data

C Wei, K Shen, Y Chen, T Ma

[Stanford University]

https://weibo.com/1402400261/JqVkbtrrv

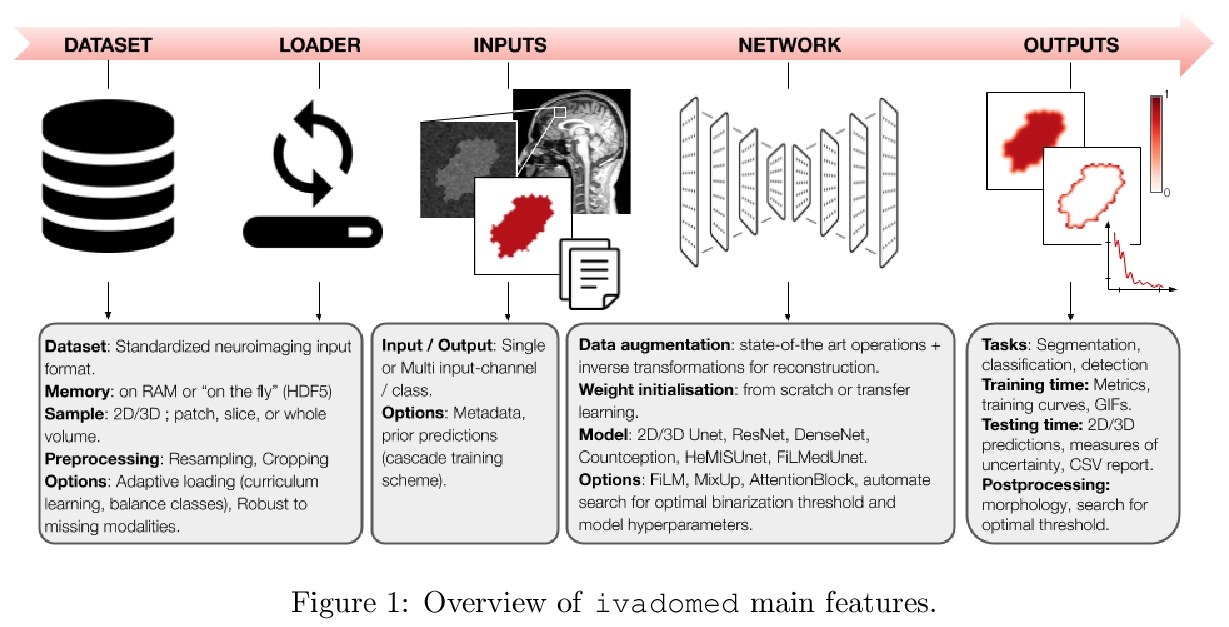

[LG] ivadomed: A Medical Imaging Deep Learning Toolbox

ivadomed: a deep learning toolbox for medical imaging

C Gros, A Lemay, O Vincent, L Rouhier, A Bucquet, J P Cohen, J Cohen-Adad

[Polytechnique Montreal & Stanford University]

https://weibo.com/1402400261/JqVlp7h2d

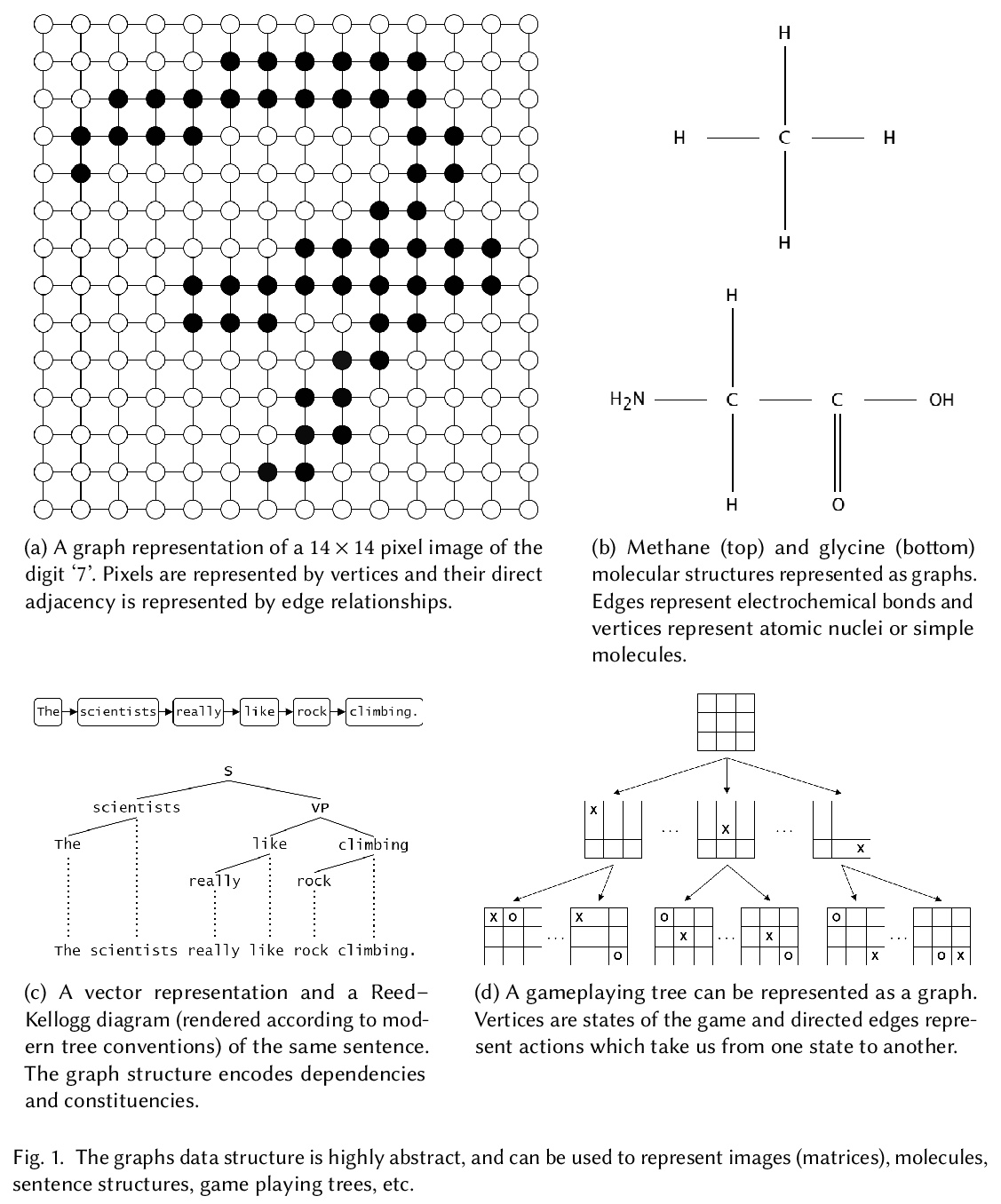

[LG] A Practical Guide to Graph Neural Networks

A practical guide to graph neural networks

I R Ward, J Joyner, C Lickfold, S Rowe, Y Guo, M Bennamoun

[ISOLABS & Sun Yat-sen University & The University of Western Australia]

https://weibo.com/1402400261/JqVmwkigF

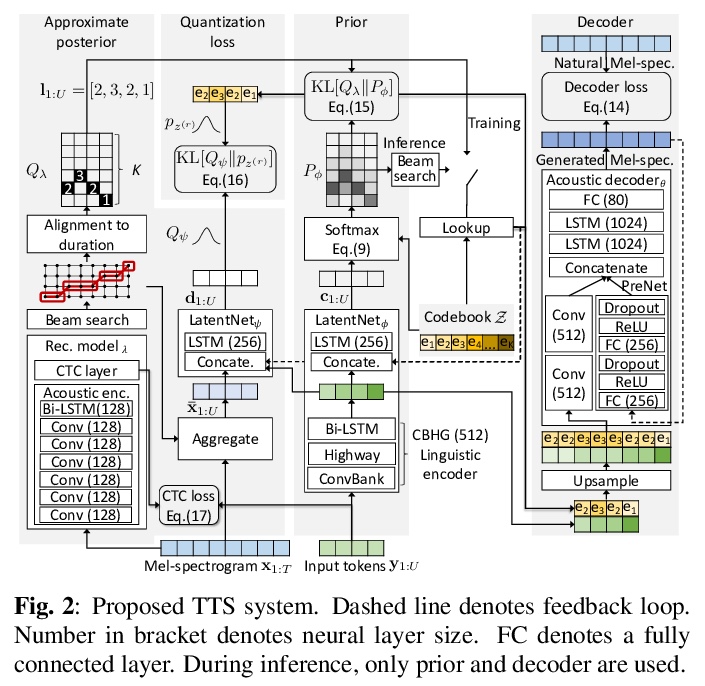

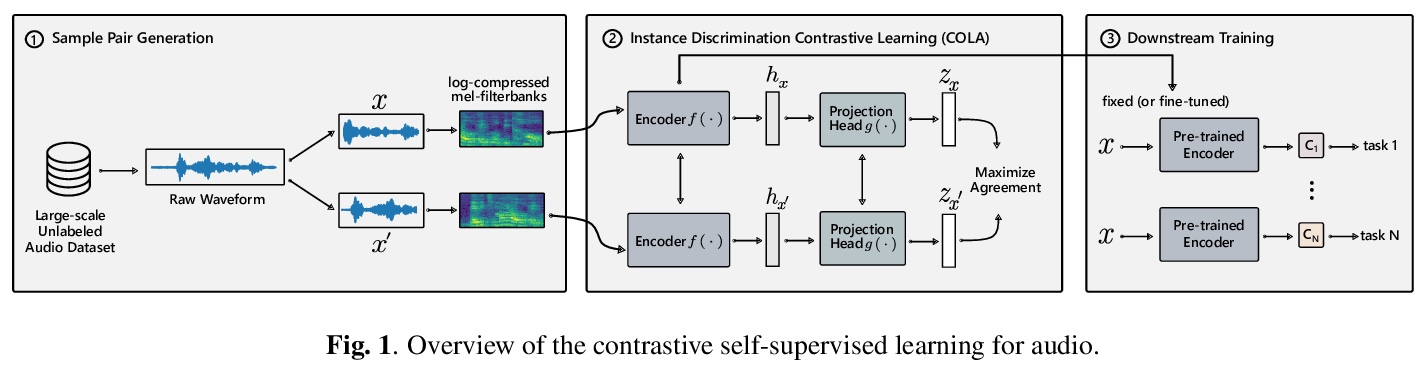

[AS] End-to-End Text-to-Speech using Latent Duration based on VQ-VAE

End-to-end text-to-speech with latent durations based on VQ-VAE

Y Yasuda, X Wang, J Yamagishi

[National Institute of Informatics, Japan]

https://weibo.com/1402400261/JqVnXzBty