- 1、 [CV] YolactEdge: Real-time Instance Segmentation on the Edge (Jetson AGX Xavier: 30 FPS, RTX 2080 Ti: 170 FPS)

- 2、 [CV] Time-Travel Rephotography

- 3、 [CL] Pre-Training a Language Model Without Human Language

- 4、[AI] Knowledge Graphs Evolution and Preservation — A Technical Report from ISWS 2019

- 5、 [CL] Few-Shot Text Generation with Pattern-Exploiting Training

- [CV] Unadversarial Examples: Designing Objects for Robust Vision

- [CV] GuidedStyle: Attribute Knowledge Guided Style Manipulation for Semantic Face Editing

- [CV] Latent Feature Representation via Unsupervised Learning for Pattern Discovery in Massive Electron Microscopy Image Volumes

- [CV] Non-Rigid Neural Radiance Fields: Reconstruction and Novel View Synthesis of a Deforming Scene from Monocular Video

LG - 机器学习 CV - 计算机视觉 CL - 计算与语言 AS - 音频与语音 RO - 机器人 (*表示值得重点关注)

1、 [CV] YolactEdge: Real-time Instance Segmentation on the Edge (Jetson AGX Xavier: 30 FPS, RTX 2080 Ti: 170 FPS)

H Liu, R A. R Soto, F Xiao, Y J Lee

[University of California, Davis]

YolactEdge:边缘设备上的实时实例分割。提出在小型边缘设备上以实时速度运行的实例分割方法YolactEdge,在Jetson AGX Xavier上的帧率高达30.8FPS(在RTX 2080 Ti上的帧率为172.7 FPS)。对目前最先进的基于图像的实时方法YOLACT进行了两项改进:(1)TensorRT优化,同时仔细权衡速度和准确性;(2)新的特征翘曲模块,以利用视频中的时间冗余。实验表明,YolactEdge比现有实时方法提高了3-5倍的速度,同时产生了具有竞争力的掩模和框检测精度。

We propose YolactEdge, the first competitive instance segmentation approach that runs on small edge devices at real-time speeds. Specifically, YolactEdge runs at up to 30.8 FPS on a Jetson AGX Xavier (and 172.7 FPS on an RTX 2080 Ti) with a ResNet-101 backbone on 550x550 resolution images. To achieve this, we make two improvements to the state-of-the-art image-based real-time method YOLACT: (1) TensorRT optimization while carefully trading off speed and accuracy, and (2) a novel feature warping module to exploit temporal redundancy in videos. Experiments on the YouTube VIS and MS COCO datasets demonstrate that YolactEdge produces a 3-5x speed up over existing real-time methods while producing competitive mask and box detection accuracy. We also conduct ablation studies to dissect our design choices and modules. Code and models are available at > this https URL.

https://weibo.com/1402400261/JzSW5pgvW

2、 [CV] Time-Travel Rephotography

X Luo, X Zhang, P Yoo, R Martin-Brualla, J Lawrence, S M. Seitz

[University of Washington & UC Berkeley & Google Research]

历史人物黑白老照片现代化翻新。提出时空穿越重摄,一种图像合成技术,以黑白参考照片为基础,用StyleGAN2将老照片投射到现代高分辨率照片空间,用统一框架实现所有效果。通过对潜样式代码约束优化,由新的重构损失引导,可模拟旧胶片和相机的独特属性。引入兄弟网络,产生图像恢复颜色和局部细节结果。

Many historical people are captured only in old, faded, black and white photos, that have been distorted by the limitations of early cameras and the passage of time. This paper simulates traveling back in time with a modern camera to rephotograph famous subjects. Unlike conventional image restoration filters which apply independent operations like denoising, colorization, and superresolution, we leverage the StyleGAN2 framework to project old photos into the space of modern high-resolution photos, achieving all of these effects in a unified framework. A unique challenge with this approach is capturing the identity and pose of the photo’s subject and not the many artifacts in low-quality antique photos. Our comparisons to current state-of-the-art restoration filters show significant improvements and compelling results for a variety of important historical people.

https://weibo.com/1402400261/JzT78ldNx

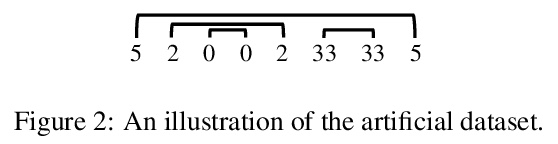

3、 [CL] Pre-Training a Language Model Without Human Language

C Chiang, H Lee

[Taiwan University]

用非人类语言预训练语言模型。研究了预训练数据内在性质如何有助于下游任务微调性能。在几个具有特定特征的语料库上预训练不同的基于transformer的掩蔽语言模型,用GLUE基准对其进行微调。发现在非结构化数据上预训练的模型胜过在下游任务上直接从头训练的模型。研究结果还表明,对结构化数据的预训练并不总是使模型获得可迁移到自然语言下游任务的能力。对某些非人类语言数据进行预训练,可以使在GLUE上的表现接近于对另一种非英语语言进行预训练的表现。

In this paper, we study how the intrinsic nature of pre-training data contributes to the fine-tuned downstream performance. To this end, we pre-train different transformer-based masked language models on several corpora with certain features, and we fine-tune those language models on GLUE benchmarks. We find that models pre-trained on unstructured data beat those trained directly from scratch on downstream tasks. Our results also show that pre-training on structured data does not always make the model acquire ability that can be transferred to natural language downstream tasks. To our great astonishment, we uncover that pre-training on certain non-human language data gives GLUE performance close to performance pre-trained on another non-English language.

https://weibo.com/1402400261/JzTc3wpPf

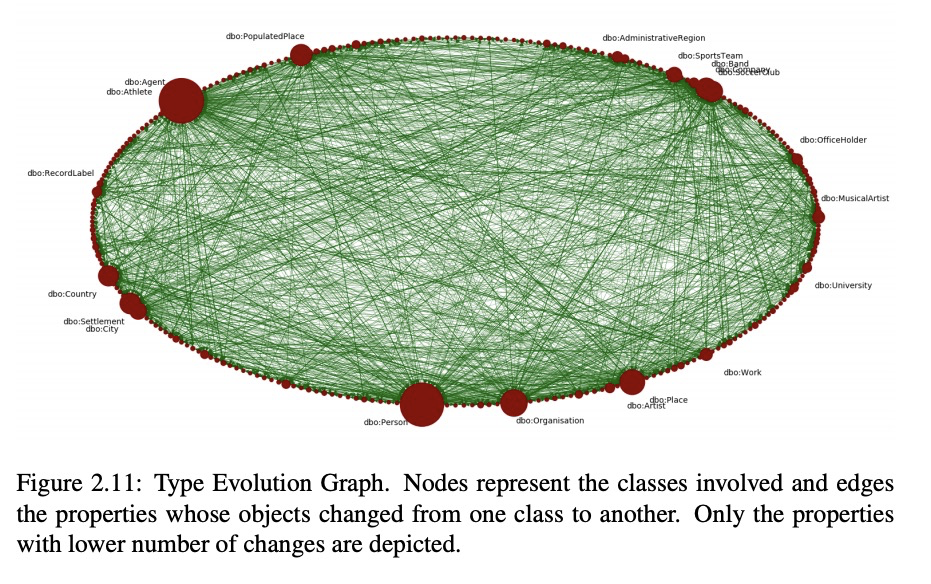

4、[AI] Knowledge Graphs Evolution and Preservation — A Technical Report from ISWS 2019

N Abbas, K Alghamdi, M Alinam, F Alloatti, G Amaral…

知识图谱进化与保持——ISWS 2019技术报告。提出了四步工作流程,用来收集、分析、审查和细化众包知识图谱中的偏差。描述了如何在整个过程中引入人在环路因素,以帮助识别和改善在知识图谱中的偏差表示。

One of the grand challenges discussed during the Dagstuhl Seminar “Knowledge Graphs: New Directions for Knowledge Representation on the Semantic Web” and described in its report is that of a: “Public FAIR Knowledge Graph of Everything: We increasingly see the creation of knowledge graphs that capture information about the entirety of a class of entities. […] This grand challenge extends this further by asking if we can create a knowledge graph of “everything” ranging from common sense concepts to location based entities. This knowledge graph should be “open to the public” in a FAIR manner democratizing this mass amount of knowledge.” Although linked open data (LOD) is one knowledge graph, it is the closest realisation (and probably the only one) to a public FAIR Knowledge Graph (KG) of everything. Surely, LOD provides a unique testbed for experimenting and evaluating research hypotheses on open and FAIR KG. One of the most neglected FAIR issues about KGs is their ongoing evolution and long term preservation. We want to investigate this problem, that is to understand what preserving and supporting the evolution of KGs means and how these problems can be addressed. Clearly, the problem can be approached from different perspectives and may require the development of different approaches, including new theories, ontologies, metrics, strategies, procedures, etc. This document reports a collaborative effort performed by 9 teams of students, each guided by a senior researcher as their mentor, attending the International Semantic Web Research School (ISWS 2019). Each team provides a different perspective to the problem of knowledge graph evolution substantiated by a set of research questions as the main subject of their investigation. In addition, they provide their working definition for KG preservation and evolution.

https://weibo.com/1402400261/JzTiXpUUa

5、 [CL] Few-Shot Text Generation with Pattern-Exploiting Training

T Schick, H Schütze

[LMU Munich]

基于模式挖掘训练的少样本文本生成。提出模式挖掘训练(PET),通过引入解码器前缀概念和知识蒸馏组合模式(从随机选择模式生成目标序列),优化生成语言模型的文本生成任务。在几个文本摘要和标题生成数据集上,PET变体在少样本设置下的强基线上提供了一致的改进。

Providing pretrained language models with simple task descriptions or prompts in natural language yields impressive few-shot results for a wide range of text classification tasks when combined with gradient-based learning from examples. In this paper, we show that the underlying idea can also be applied to text generation tasks: We adapt Pattern-Exploiting Training (PET), a recently proposed few-shot approach, for finetuning generative language models on text generation tasks. On several text summarization and headline generation datasets, our proposed variant of PET gives consistent improvements over a strong baseline in few-shot settings.

https://weibo.com/1402400261/JzTl9Fktg

[CV] Unadversarial Examples: Designing Objects for Robust Vision

非对抗样本:通过设计对象提高视觉模型鲁棒性

H Salman, A Ilyas, L Engstrom, S Vemprala, A Madry, A Kapoor

[Microsoft Research & MIT]

https://weibo.com/1402400261/JzToeeR3A

[CV] GuidedStyle: Attribute Knowledge Guided Style Manipulation for Semantic Face Editing

GuidedStyle:属性知识引导的语义人脸编辑风格操作

X Hou, X Zhang, L Shen, Z Lai, J Wan

[Shenzhen University]

https://weibo.com/1402400261/JzTrydrWV

[CV] Latent Feature Representation via Unsupervised Learning for Pattern Discovery in Massive Electron Microscopy Image Volumes

用于大规模电子显微镜图像模式发现的无监督学习潜特征表示

G B Huang, H Yang, S Takemura, P Rivlin, S M Plaza

[Janelia Research Campus & Sun Yat-sen University]

https://weibo.com/1402400261/JzTtoeOYl

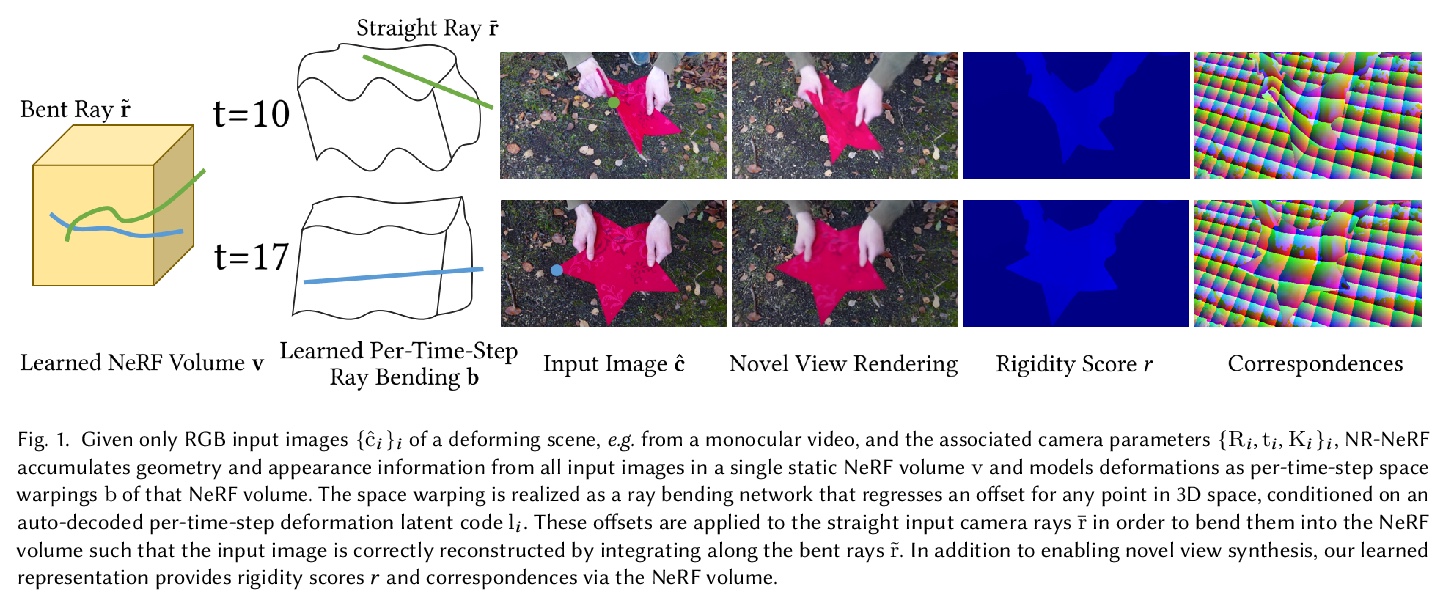

[CV] Non-Rigid Neural Radiance Fields: Reconstruction and Novel View Synthesis of a Deforming Scene from Monocular Video

非刚性神经辐射场:单目视频变形场景重建与新视图合成

E Tretschk, A Tewari, V Golyanik, M Zollhöfer, C Lassner, C Theobalt

[MPI for Informatics & Facebook Reality Labs]

https://weibo.com/1402400261/JzTuUyPeB