Big Data characteristics

● We now live in the era of Big Data

– Massive amounts of data are being collected by many organisations (both in the private and public sectors), as well as by individuals

– Many companies and services are moving online, generating constant streams of (transactional) data

– The Internet of Things (IoT) is resulting in billions of sensors being connected, producing vast amounts of data

● Temporal, dynamic and streaming data pose novel challenges for data wrangling (as well as for data mining)

– Little has been published on these topics (research is ongoing in some areas), so the following slides are mostly discussion points

● The four V’s that characterise Big Data

– Volume (the size of data): Data are too large to be stored for later processing or analysis

– Variety (different forms of data): Different and novel processing, exploration, cleaning, and integration approaches are required

– Velocity (streaming data): Data are generated continuously (data streams) and require ongoing (near) real-time processing, wrangling and analysis

– Veracity (uncertainty of data): Data quality can change over time and differs between sources, and the huge volume makes it difficult to ascertain the quality of all data

Wrangling dynamic data

● Dynamic data are data in which the values of known entities change over time

● Dynamic data occur in many domains

– Name and address changes of people

– Price changes for consumer products

● New records (with changed attribute values) for a known entity should be verified for consistency

– The gender and/or age of a person should be consistent with previous values for the same person

– The updated price of a product should be sensible (for example, the same camera does not suddenly cost 10 times as much)
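The consistency checks above can be sketched in Python. This is a minimal illustration, assuming a hypothetical `PersonRecord` type with a recording date, a one-year tolerance on age, and a hypothetical `max_ratio` of 10 for price changes. A failed check should flag a record for review rather than reject it (a person's gender, for instance, can legitimately change).

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PersonRecord:
    person_id: str
    gender: str
    age: int
    recorded: date  # date the record was captured (assumed attribute)


def person_consistency_issues(old: PersonRecord, new: PersonRecord) -> list:
    """Return reasons why `new` looks inconsistent with `old` (empty if none)."""
    issues = []
    if new.gender != old.gender:
        issues.append("gender differs from previous record")
    # Expected age = previous age plus elapsed years; allow 1 year tolerance
    # because ages are whole numbers and birthdays fall between recordings.
    elapsed_years = (new.recorded - old.recorded).days / 365.25
    expected_age = old.age + elapsed_years
    if abs(new.age - expected_age) > 1:
        issues.append(f"age {new.age} but about {expected_age:.1f} expected")
    return issues


def price_change_is_sensible(old_price: float, new_price: float,
                             max_ratio: float = 10.0) -> bool:
    """True if the new price is within a factor of `max_ratio` of the old one."""
    if old_price <= 0 or new_price <= 0:
        return False
    ratio = new_price / old_price
    return 1 / max_ratio < ratio < max_ratio
```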

● Overall, databases should be consistent

– We cannot suddenly have 5,000 people living at the same address
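A database-level check for this kind of implausible aggregate could count records per address and flag addresses above a threshold. The sketch below assumes records are dictionaries with an `address` field and uses a hypothetical limit `max_people`. The light normalisation (lower-casing, collapsing whitespace) is also an assumption; real address matching needs far more standardisation.

```python
from collections import Counter


def normalise_address(address: str) -> str:
    """Lower-case and collapse whitespace so trivial variants are counted together."""
    return " ".join(address.lower().split())


def overcrowded_addresses(records: list, max_people: int = 50) -> dict:
    """Return {address: count} for addresses with more than `max_people` records."""
    counts = Counter(normalise_address(r["address"]) for r in records)
    return {addr: n for addr, n in counts.items() if n > max_people}
```

Rerunning the check after each batch of updates shows when an address suddenly exceeds the limit. A large office building or aged-care facility may be a legitimate exception, so the result is a list of candidates for review, not of errors.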

● The temporal dimension makes wrangling dynamic data challenging

● For data exploration, distributions and ranges might change, and new values might occur

– Such as new, previously unseen postcodes for new suburbs

– Changes in name popularity, or in the number of people living in a postcode area

● Difficult to decide whether a new value is an outlier (and possibly wrong) or a new valid value

● Difficult to decide what the valid minimum and maximum values should be

– Changes in external factors (new policies or regulations) will affect data types and/or values
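One way to make these decisions explicit is to compare each new record against a profile of historical data and flag, rather than reject, values that fall outside it. This minimal sketch assumes records with `postcode` and `age` fields and uses the 1st and 99th percentiles of past ages as a hypothetical "expected" range. `accept_postcode` is a hypothetical stand-in for whatever review step decides that a new value is valid.

```python
import statistics


class ReferenceProfile:
    """Summary of historical data used to flag unusual new values for review."""

    def __init__(self, postcodes, ages):
        self.known_postcodes = set(postcodes)
        # 99 cut points; the first and last approximate the 1st/99th percentiles
        cuts = statistics.quantiles(ages, n=100)
        self.age_low, self.age_high = cuts[0], cuts[-1]

    def flags(self, record: dict) -> list:
        """Return reasons why `record` looks unusual (empty list if none)."""
        flags = []
        if record["postcode"] not in self.known_postcodes:
            flags.append("unseen postcode")
        if not self.age_low <= record["age"] <= self.age_high:
            flags.append("age outside historical range")
        return flags

    def accept_postcode(self, postcode: str) -> None:
        """Record a reviewed new postcode (e.g. a new suburb) as valid."""
        self.known_postcodes.add(postcode)
```

Because distributions drift, such a profile itself goes stale and has to be rebuilt periodically (for example from a recent time window), which is exactly where the difficulty of choosing valid ranges comes from.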

● Data cleaning is also challenged by dynamically changing data

– What was normal once might become unusual later, and might need to be cleaned (such as addresses where residences have been replaced by businesses)

– Difficult to apply transformation techniques such as binning and histograms, as ranges and distributions change over time

– Difficult to normalise data if min-max values change (for 0-1 normalisation), or if the mean and standard deviation change (for z-score normalisation)

– Data normalised in the past might become skewed if distributions change over time

– The missingness of data will likely change as data collection processes change (new Web input forms, new automatic collection equipment, etc.)
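The normalisation problem can be made concrete. Min-max normalisation with bounds learned from past data maps new, larger values outside [0, 1], while z-scores depend on a mean and standard deviation that keep changing. The sketch below uses Welford's online algorithm (a standard single-pass method, not one named in these slides) to keep the mean and standard deviation up to date as values arrive. The price values are made up for illustration.

```python
import math


def min_max(value: float, low: float, high: float) -> float:
    """0-1 normalisation using a previously observed minimum and maximum."""
    return (value - low) / (high - low)


class RunningStats:
    """Welford's online algorithm: mean and sample standard deviation in one pass."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the current mean

    def add(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def z_score(self, x: float) -> float:
        s = self.std
        return (x - self.mean) / s if s > 0 else 0.0


# Prices seen earlier ranged from 100 to 500; a new price of 650 now maps above 1
print(min_max(650, 100, 500))  # 1.375
```

The z-score of an old value computed today differs from the one computed when that value first arrived, which is why previously normalised data can become skewed.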

● Due to the challenges of data cleaning, data integration is also challenged

● Schema matching: Database schemas can change over time

– New or refined attributes, split attributes, new database tables, etc.
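Detecting that a schema has changed is the first step before matching it. This sketch assumes each schema version is available as a mapping from attribute name to type name (a hypothetical representation). A split attribute only shows up as one removal plus several additions, so the actual mapping between versions still has to be decided by a person or a schema matcher.

```python
def schema_diff(old: dict, new: dict) -> dict:
    """Compare two {attribute: type} schema versions."""
    old_attrs, new_attrs = set(old), set(new)
    return {
        "added": sorted(new_attrs - old_attrs),
        "removed": sorted(old_attrs - new_attrs),
        "type_changed": sorted(a for a in old_attrs & new_attrs if old[a] != new[a]),
    }


old_schema = {"name": "str", "address": "str", "age": "int"}
new_schema = {"first_name": "str", "last_name": "str", "address": "str", "age": "str"}
print(schema_diff(old_schema, new_schema))
# {'added': ['first_name', 'last_name'], 'removed': ['name'], 'type_changed': ['age']}
```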

● Record linkage: Attribute values (and maybe their types and structures) will likely change over time

– Such as names, addresses and contact numbers of people or businesses
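Because values such as names and addresses change, record linkage usually compares records approximately and combines evidence across attributes rather than requiring exact matches. This minimal sketch uses Python's `difflib.SequenceMatcher` as a stand-in similarity function (dedicated comparators such as Jaro-Winkler are often used for names in practice). The attribute names and the weights are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical weights: a stable attribute (date of birth) counts for as much as
# the name, and more than an attribute that is likely to change (address).
WEIGHTS = {"name": 0.4, "dob": 0.4, "address": 0.2}


def similarity(a: str, b: str) -> float:
    """Approximate string similarity in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def match_score(rec1: dict, rec2: dict, weights: dict = WEIGHTS) -> float:
    """Weighted sum of attribute similarities; 1.0 means identical values."""
    return sum(w * similarity(rec1[attr], rec2[attr]) for attr, w in weights.items())


before = {"name": "Maria Smith", "dob": "1985-03-02", "address": "12 Oak St Canberra"}
after = {"name": "Maria Smith-Jones", "dob": "1985-03-02", "address": "4 River Rd Sydney"}
print(round(match_score(before, after), 2))
```

Here the name and address have both changed, yet the score stays above 0.7 thanks to the partially matching name and identical date of birth. Where to set the match threshold is an application decision.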

● Requirements for how data are to be fused will change over time

– Depending upon the record linkage results, as well as on the applications that require the fused data

Wrangling data streams

● Data streams are characterised by the rapid arrival of individual (transactional) records, but at an unpredictable arrival rate

● Data volume is often too large to store and process off-line

– Applications often require (near) real-time processing and analysis

– Each record can be accessed (inspected and modified) only once

– Examples are online financial transactions such as credit card purchases

● Data exploration and cleaning need to be conducted in real-time

– So all the challenges of dynamic data apply, plus the additional requirement of processing in real-time
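Processing each record exactly once means that exploration statistics and cleaning rules must be updated incrementally. This sketch assumes transactions arrive as dictionaries with a hypothetical `amount` field, and applies a hypothetical cleaning rule that treats non-positive purchase amounts as missing. A Python generator stands in for the stream, so each record is inspected, possibly modified, and passed on without being stored.

```python
import math
from typing import Iterable, Iterator, Optional


class StreamProfile:
    """Single-pass summary of one numeric attribute."""

    def __init__(self):
        self.count = 0
        self.missing = 0
        self.total = 0.0
        self.minimum = math.inf
        self.maximum = -math.inf

    def update(self, value: Optional[float]) -> None:
        self.count += 1
        if value is None:
            self.missing += 1
            return
        self.total += value
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)

    @property
    def mean(self) -> float:
        present = self.count - self.missing
        return self.total / present if present else math.nan

    @property
    def missing_rate(self) -> float:
        return self.missing / self.count if self.count else 0.0


def process_stream(transactions: Iterable[dict], profile: StreamProfile) -> Iterator[dict]:
    """Clean each transaction on arrival, update the profile, and pass it on."""
    for tx in transactions:
        amount = tx.get("amount")
        # Hypothetical rule: a non-positive purchase amount is treated as missing
        if amount is not None and amount <= 0:
            tx = {**tx, "amount": None}
            amount = None
        profile.update(amount)
        yield tx
```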

Example technique: Sliding window

● Given a data stream with an unpredictable arrival rate

– It is unknown in advance how many records arrive in a certain time interval

● Assume we want to monitor:

– Minimums, averages, medians and maximums of numerical attributes

– Number of unique values and percentage of missing values for categorical attributes

● We keep the last x records in memory, and calculate the above measures on these x records

– When a new record arrives, the oldest record is removed

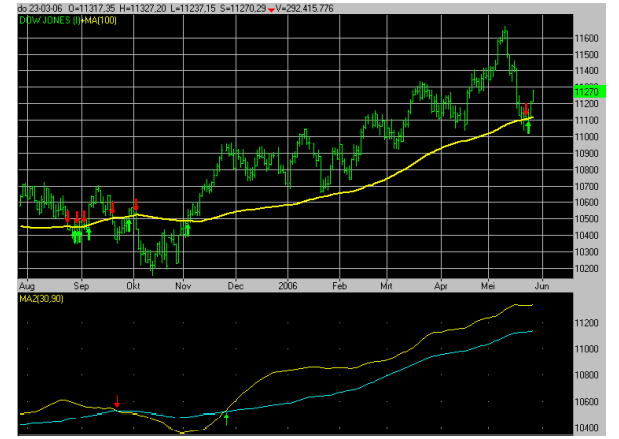

– We can visualise these calculated values as moving (dynamic) graphs
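The sliding window described above maps naturally onto a bounded double-ended queue. This minimal sketch assumes records are dictionaries and uses a hypothetical numeric attribute `amount` and categorical attribute `state`. `collections.deque(maxlen=x)` drops the oldest record automatically when a new one is appended to a full window.

```python
from collections import deque
from statistics import mean, median


class SlidingWindow:
    """Keep the last `size` records and summarise them on demand."""

    def __init__(self, size: int):
        self.records = deque(maxlen=size)

    def add(self, record: dict) -> None:
        # When the window is full, appending discards the oldest record
        self.records.append(record)

    def numeric_summary(self, attr: str) -> dict:
        values = [r[attr] for r in self.records if r.get(attr) is not None]
        if not values:
            return {}
        return {"min": min(values), "mean": mean(values),
                "median": median(values), "max": max(values)}

    def categorical_summary(self, attr: str) -> dict:
        values = [r.get(attr) for r in self.records]
        if not values:
            return {"unique": 0, "missing_pct": 0.0}
        present = [v for v in values if v is not None]
        return {"unique": len(set(present)),
                "missing_pct": 100.0 * (len(values) - len(present)) / len(values)}


window = SlidingWindow(size=3)
for rec in [{"amount": 10, "state": "NSW"}, {"amount": 20, "state": None},
            {"amount": 30, "state": "VIC"}, {"amount": 100, "state": "VIC"}]:
    window.add(rec)
print(window.numeric_summary("amount"))
print(window.categorical_summary("state"))
```

After the fourth record arrives, the first one (amount 10, state NSW) has left the window, so the summaries cover only the last three records. Recomputing over the whole window is simple but costs O(x) per record; incremental updates are possible for some measures (e.g. the mean) but not as easily for the median.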

● Such an approach smooths the input data (“moving averages”)

– Different weights can be given to more recent data compared to older data

– Irregular changes (such as outliers and missing or inaccurate values) are smoothed out

– Trends are clearly visible
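Giving more weight to recent data can be done with an exponentially weighted moving average, one common choice among several weighting schemes (the slides do not prescribe one). In this sketch each new smoothed value is `alpha * value + (1 - alpha) * previous`, so the influence of older values decays geometrically. `alpha = 0.3` is an arbitrary illustrative setting; larger values react faster to change and smooth less.

```python
def exponential_moving_average(values, alpha: float = 0.3) -> list:
    """Smooth a sequence, weighting recent values more heavily than older ones."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    current = None
    for v in values:
        current = v if current is None else alpha * v + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


# A single outlier (100) in an otherwise flat series only appears as a bump to about 37
print(exponential_moving_average([10, 10, 10, 100, 10, 10]))
```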

Wrangling location (spatial) data

● Location data are increasingly being generated and used in various application domains

– Location data are automatically generated by smartphones, cars, etc.

– They generally contain the location and the time when it was recorded (as individual location points or trajectories)

– Applications range from mapping apps, spatial data analysis and location-specific advertisements, all the way to fitness and dating applications

● Location data allow all kinds of useful applications, but are challenging due to privacy concerns

– Especially once location data are integrated with other geographical or personal data

– For example, identifying where celebrities or rich individuals live by tracking routes from prestigious night clubs to the end points of taxi/Uber rides

● Location data are dynamic and often require real-time processing and analysis

– As we have seen before, this is challenging

– Location data might be incomplete and/or inaccurate (GPS turned off, people inside buildings, vehicles in tunnels, etc.)

– It might not be possible to integrate location data legally

– By themselves (i.e. if the context is not known), location data are of limited use: only traffic densities are available (such as the number of people using public transport at a certain station at a certain time)

● Anonymising location data is challenging

– Only a few data points make a taxi trip unique; once integrated with other data, re-identification is often possible
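How quickly records become unique can be measured by grouping them on the attributes an attacker might know and counting groups of size one, in the spirit of k-anonymity (a standard notion, not one introduced in these slides). This sketch uses hypothetical trip fields `pickup` and `dropoff` (coarse zones) and `hour`.

```python
from collections import Counter


def fraction_unique(records: list, keys: list) -> float:
    """Share of records whose combination of `keys` values occurs only once."""
    if not records:
        return 0.0
    signatures = [tuple(r[k] for k in keys) for r in records]
    counts = Counter(signatures)
    return sum(1 for s in signatures if counts[s] == 1) / len(signatures)


trips = [
    {"pickup": "A", "dropoff": "B", "hour": 9},
    {"pickup": "A", "dropoff": "B", "hour": 9},
    {"pickup": "A", "dropoff": "C", "hour": 9},
    {"pickup": "D", "dropoff": "B", "hour": 23},
]
print(fraction_unique(trips, ["pickup"]))                     # 0.25
print(fraction_unique(trips, ["pickup", "dropoff", "hour"]))  # 0.5
```

Each additional attribute (or finer location and time resolution) can only split groups further, so the unique fraction never decreases as more points are known. Coarsening zones and times is one way to reduce it, at the cost of data utility.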

● Location data provide new ways of exploring and cleaning data

– Location data can be visualised on maps, allowing for interactive human inspection, which can provide immediate feedback on data quality (for example, too many individuals located at a remote train station point to either an event or an equipment malfunction)

– Automatic verification of the validity of locations and trajectories is possible based on domain knowledge (for example, the maximum speed of a taxi in a city will highlight trajectories that are not possible, and a smartphone cannot be in two locations at the same time)

– Missing locations can potentially be inferred (for example, if A and B are on different sides of a river and C and D are bridges, a taxi trajectory from A to B must go via either C or D)
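The domain-knowledge checks above can be automated for GPS trajectories. This sketch assumes points with a timestamp in seconds and latitude/longitude in degrees, computes great-circle (haversine) distances on a spherical Earth of radius 6,371 km, and uses hypothetical thresholds for the maximum plausible taxi speed and for GPS jitter. A segment is flagged if it implies an impossible speed, or if two points with the same timestamp are in clearly different places.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0


@dataclass
class GpsPoint:
    timestamp: float  # seconds since some reference time
    lat: float        # degrees
    lon: float        # degrees


def haversine_km(p: GpsPoint, q: GpsPoint) -> float:
    """Great-circle distance between two points on a spherical Earth."""
    phi1, phi2 = math.radians(p.lat), math.radians(q.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(q.lon - p.lon)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def implausible_segments(points: list, max_speed_kmh: float = 120.0,
                         jitter_km: float = 0.05) -> list:
    """Indices i where the move from points[i-1] to points[i] is impossible."""
    flagged = []
    for i in range(1, len(points)):
        prev, cur = points[i - 1], points[i]
        dist_km = haversine_km(prev, cur)
        hours = (cur.timestamp - prev.timestamp) / 3600
        if hours <= 0:
            # No time elapsed: a device cannot be in two places at once
            if dist_km > jitter_km:
                flagged.append(i)
        elif dist_km / hours > max_speed_kmh:
            flagged.append(i)
    return flagged
```

Inferring a missing location, as in the bridge example, would additionally need a road network (for instance a graph search over it) and is not attempted in this sketch.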