Information Gain

- Information gain is one of the earliest attribute selection measures. It came out of AI research on ID3 and was later refined into the gain ratio used in C4.5.

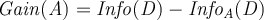

- At each node, it selects the attribute with the highest information gain as the attribute to split the node on.

- Let $p_i$ be the probability that an arbitrary tuple in $D$ belongs to class $C_i$, of $m$ classes, where $C_{i,D}$ is the set of tuples in $D$ labelled with class $C_i$.

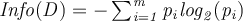

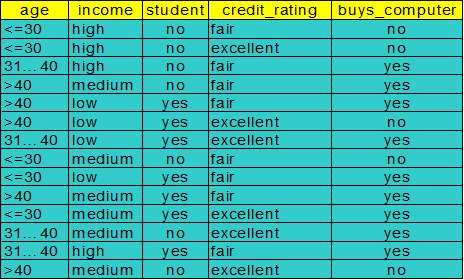
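As a minimal sketch, assuming the class labels of the tuples in $D$ are available as a Python list, $p_i$ can be estimated with the usual ratio $|C_{i,D}|/|D|$ by counting labels. The function name `class_probabilities` is hypothetical:

```python
from collections import Counter


def class_probabilities(labels):
    """Estimate p_i = |C_i,D| / |D| for every class C_i that occurs in D."""
    if not labels:
        raise ValueError("D must contain at least one tuple")
    total = len(labels)                # |D|
    counts = Counter(labels)           # |C_i,D| for each class label
    return {cls: n / total for cls, n in counts.items()}


# Made-up labels for a small D
print(class_probabilities(["yes", "yes", "no", "yes"]))  # {'yes': 0.75, 'no': 0.25}
```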

- Expected information (entropy) needed to classify a tuple in $D$ is defined by

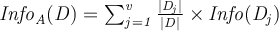
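The definition in the figure is presumably the standard one, $\mathrm{Info}(D) = -\sum_{i=1}^{m} p_i \log_2 p_i$, measured in bits. A minimal sketch under that assumption (the name `info` is hypothetical):

```python
import math
from collections import Counter


def info(labels):
    """Expected information (entropy) in bits: Info(D) = -sum_i p_i * log2(p_i)."""
    total = len(labels)
    entropy = 0.0
    for count in Counter(labels).values():
        p = count / total              # p_i = |C_i,D| / |D|
        # Classes absent from D are never counted, so p > 0 and log2 is defined.
        entropy -= p * math.log2(p)
    return entropy


print(info(["yes", "no"]))          # 1.0
print(info(["yes", "yes", "yes"]))  # 0.0
```

An evenly mixed two-class set needs one full bit, while a pure set needs none.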

- After using attribute $A$ to split $D$ into $v$ partitions, one for each value of $A$, with each partition denoted $D_j$, the information that is still needed to separate the classes is:

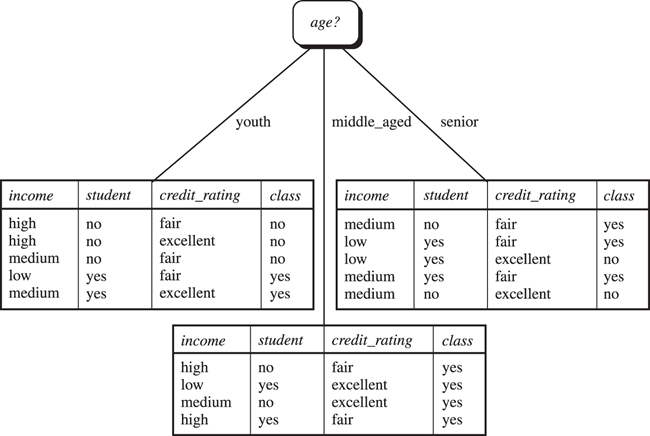

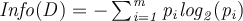
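Assuming the two figures give the usual definitions, $\mathrm{Info}_A(D) = \sum_{j=1}^{v} \frac{|D_j|}{|D|}\,\mathrm{Info}(D_j)$ and $\mathrm{Gain}(A) = \mathrm{Info}(D) - \mathrm{Info}_A(D)$, the split-and-select step can be sketched as follows. Tuples are represented as dicts; the function names and the four toy tuples are made up for illustration:

```python
import math
from collections import Counter, defaultdict


def info(labels):
    """Info(D) in bits: -sum_i p_i log2 p_i over the classes present in labels."""
    total = len(labels)
    return sum(-(c / total) * math.log2(c / total) for c in Counter(labels).values())


def info_after_split(rows, attr, target):
    """Info_A(D) = sum_j |D_j| / |D| * Info(D_j), with one D_j per value of attr."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row[attr]].append(row[target])  # keep only each tuple's class label
    total = len(rows)
    return sum(len(d_j) / total * info(d_j) for d_j in partitions.values())


def gain(rows, attr, target):
    """Gain(A) = Info(D) - Info_A(D)."""
    return info([row[target] for row in rows]) - info_after_split(rows, attr, target)


def best_attribute(rows, attrs, target):
    """Return the candidate attribute with the highest information gain."""
    return max(attrs, key=lambda attr: gain(rows, attr, target))


# Made-up tuples for illustration only.
rows = [
    {"age": "youth", "student": "no", "buys_computer": "no"},
    {"age": "youth", "student": "yes", "buys_computer": "yes"},
    {"age": "senior", "student": "no", "buys_computer": "no"},
    {"age": "senior", "student": "yes", "buys_computer": "yes"},
]
print(best_attribute(rows, ["age", "student"], "buys_computer"))  # student
```

In this toy data `student` separates the classes perfectly, so its gain equals $\mathrm{Info}(D) = 1$ bit, while each `age` partition is as mixed as $D$ itself and the gain of `age` is 0.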

Example (continued from previous)

Consider two classes: class P is buys_computer = “yes” and class N is buys_computer = “no”.

For some partition $D$ with $p$ examples of P and $n$ examples of N, let $\mathrm{Info}(D)$ be written as $I(p, n)$.

Using the definition of $\mathrm{Info}(D)$ from above, we have

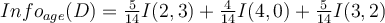

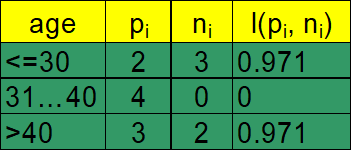
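With only two classes, the entropy definition specializes to $I(p, n) = -\frac{p}{p+n}\log_2\frac{p}{p+n} - \frac{n}{p+n}\log_2\frac{n}{p+n}$, which is presumably what the figures show. A sketch, with the hypothetical name `two_class_info` standing in for $I$:

```python
import math


def two_class_info(p, n):
    """I(p, n): entropy in bits of a set with p tuples of class P and n of class N."""
    total = p + n
    result = 0.0
    for count in (p, n):
        if count > 0:  # a class with no tuples contributes 0 (0 * log2 0 is taken as 0)
            fraction = count / total
            result -= fraction * math.log2(fraction)
    return result


print(two_class_info(3, 3))  # 1.0: an even mix is maximally uncertain
print(two_class_info(4, 0))  # 0.0: a pure partition needs no further information
```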

Now consider the first candidate split, on age. We have the following:

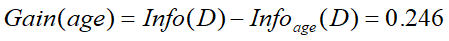

Therefore

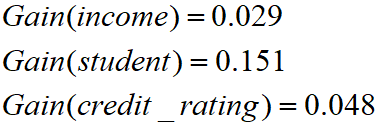
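In two-class form, the calculation only needs the counts $(p_j, n_j)$ of P and N tuples in each age partition: $\mathrm{Info}_{age}(D) = \sum_j \frac{p_j + n_j}{p + n}\, I(p_j, n_j)$ and $\mathrm{Gain}(age) = I(p, n) - \mathrm{Info}_{age}(D)$. The sketch below assumes the table supplies those counts; the counts in the code are illustrative and not necessarily the ones in the table, and the function names are hypothetical:

```python
import math


def two_class_info(p, n):
    """I(p, n) in bits, skipping zero counts (0 * log2 0 is taken as 0)."""
    total = p + n
    return sum(-(c / total) * math.log2(c / total) for c in (p, n) if c > 0)


def gain_from_counts(partition_counts):
    """Gain(A) = I(p, n) - sum_j (p_j + n_j) / (p + n) * I(p_j, n_j).

    partition_counts holds one (p_j, n_j) pair per value of attribute A.
    """
    p = sum(p_j for p_j, _ in partition_counts)
    n = sum(n_j for _, n_j in partition_counts)
    info_a = sum(
        (p_j + n_j) / (p + n) * two_class_info(p_j, n_j)
        for p_j, n_j in partition_counts
    )
    return two_class_info(p, n) - info_a


# Illustrative (p_j, n_j) counts for three age values; substitute the table's counts.
age_counts = [(2, 3), (4, 0), (3, 2)]
print(round(gain_from_counts(age_counts), 3))  # 0.247
```

The same function applies unchanged to any other attribute once its per-value counts are known.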

Similarly, the information gain is computed for each of the remaining attributes.

Gain(age) is the highest, so age is selected as the splitting attribute, which gives