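Blog posts worth reading:

- Function backward discussion
- Reading the PyTorch source from scratch: operators
- Machine learning: matrix-by-matrix derivatives
- The chain rule for derivatives: scalars, vectors and matrices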

Function

Purpose: records the history of operations and defines the formulas used to differentiate them.

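We often write our own operations, such as loss functions. A Function lets such an operation become part of a network, so that gradients can flow through it during backpropagation.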
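The minimal sketch below shows both roles. It assumes a recent PyTorch. `MyExp` is a hypothetical name, and the operation mirrors the exp example in the PyTorch autograd docs. After `apply`, the output's `grad_fn` is the graph node recorded for the custom operation. Calling `backward()` then runs the derivative we supplied.

```python
import torch
from torch.autograd import Function

class MyExp(Function):
    """Hypothetical custom exp: forward computes e^x, backward supplies d(e^x)/dx = e^x."""

    @staticmethod
    def forward(ctx, x):
        result = x.exp()
        # the output is also the derivative, so keep it for backward
        ctx.save_for_backward(result)
        return result

    @staticmethod
    def backward(ctx, grad_output):
        (result,) = ctx.saved_tensors
        # chain rule: dloss/dx = dloss/dy * dy/dx = grad_output * e^x
        return grad_output * result

x = torch.tensor([0.0, 1.0, 2.0], requires_grad=True)
y = MyExp.apply(x)
print(type(y.grad_fn).__name__)  # the node recorded in the graph for this custom op
y.sum().backward()
print(x.grad)  # same values as torch.exp(x)
```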

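A Function defines an operation: how it is computed and how its gradient is computed. A Module defines a data structure: Modules form the layers of a network and hold the corresponding parameters.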

A custom operation must subclass Function and implement two methods:

- `forward`: defines how the output is computed.
- `backward`: defines how the gradients are computed.

forward

Inside `forward`, the context object `ctx` can be used for a few extra operations.

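Methods available in `forward` (through `ctx`):

- `save_for_backward()`: must be used when saving inputs or outputs of `forward` for later use in `backward`.
- `mark_dirty()`: must be used to mark any input that `forward` modifies in place.
- `mark_non_differentiable()`: must be used to tell the engine that an output is not differentiable.

Function attributes (member variables, accessed through `ctx`):

- `saved_tensors`: the tensors stored with `save_for_backward()`, for use in `backward()`.
- `needs_input_grad`: a tuple of booleans of length `num_inputs`. Each entry indicates whether the corresponding input requires a gradient. It can be used to skip saving buffers or computing gradients that `backward()` will not need.
- `num_inputs`: the number of arguments passed to `forward()`.
- `num_outputs`: the number of values returned by `forward()`.
- `requires_grad`: a boolean indicating whether `backward()` will ever need to be called.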
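The small sketch below uses `save_for_backward`, `mark_non_differentiable` and `needs_input_grad` together. It is modeled on the sort example in the PyTorch docs. `SortWithIndices` is a hypothetical name, and the input is assumed to be 1-D.

```python
import torch
from torch.autograd import Function

class SortWithIndices(Function):
    """Hypothetical op: sort a 1-D tensor, return sorted values and permutation indices."""

    @staticmethod
    def forward(ctx, x):
        values, indices = torch.sort(x)
        # integer indices cannot carry a gradient
        ctx.mark_non_differentiable(indices)
        # save only what backward actually needs
        ctx.save_for_backward(indices)
        return values, indices

    @staticmethod
    def backward(ctx, grad_values, grad_indices):
        # one gradient argument per forward output, including the non-differentiable one
        (indices,) = ctx.saved_tensors
        grad_x = None
        if ctx.needs_input_grad[0]:
            # values[i] = x[indices[i]], so send grad_values[i] back to x[indices[i]]
            grad_x = torch.zeros_like(grad_values).index_add_(0, indices, grad_values)
        return grad_x

x = torch.tensor([3.0, 1.0, 2.0], requires_grad=True)
values, indices = SortWithIndices.apply(x)
(values * torch.tensor([10.0, 20.0, 30.0])).sum().backward()
print(indices.requires_grad)  # False
print(x.grad)                 # tensor([30., 10., 20.])
```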

backward

The main job of `backward` is computing gradients. First derive the formulas with matrix calculus, then implement them in code.

`static backward(ctx: Any, *grad_outputs: Any) -> Any`

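- The first parameter is `ctx`.
- The number of `grad_outputs` must equal the number of values returned by `forward`.
- It must return as many values as `forward` has inputs. For inputs that need no gradient, such as non-tensor arguments, return `None`.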
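The counting rules above are easiest to see with a non-tensor argument. In this sketch, `ScaleBy` is a hypothetical Function. It takes a tensor and a plain Python float, returns one tensor, and therefore receives one `grad_output` and returns two values.

```python
import torch
from torch.autograd import Function

class ScaleBy(Function):
    @staticmethod
    def forward(ctx, x, factor):
        # factor is a plain Python float, not a tensor, so store it on ctx directly
        ctx.factor = factor
        return x * factor

    @staticmethod
    def backward(ctx, grad_output):
        # forward returned one tensor -> exactly one grad_output
        # forward took two inputs (x, factor) -> return two values;
        # factor is not a tensor, so its "gradient" is None
        return grad_output * ctx.factor, None

x = torch.ones(3, requires_grad=True)
ScaleBy.apply(x, 2.5).sum().backward()
print(x.grad)  # every entry equals 2.5
```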

w.r.t.: with respect to

Example

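Keep in mind, though, that PyTorch already differentiates operations automatically. A linear layer built from ordinary tensor operations gets correct gradients without a custom Function, so the example below is purely illustrative.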

Here is a linear operation:

```python
import torch
from torch.autograd import Function


# Inherit from Function
class LinearFunction(Function):

    # Note that both forward and backward are @staticmethods
    @staticmethod
    # bias is an optional argument
    def forward(ctx, input, weight, bias=None):
        ctx.save_for_backward(input, weight, bias)
        # note: this is a matrix multiplication, output = input @ weight^T
        output = input.mm(weight.t())
        if bias is not None:
            output += bias.unsqueeze(0).expand_as(output)
        return output

    # This function has only a single output, so it gets only one gradient
    @staticmethod
    def backward(ctx, grad_output):
        # This is a pattern that is very convenient - at the top of backward
        # unpack saved_tensors and initialize all gradients w.r.t. inputs to
        # None. Thanks to the fact that additional trailing Nones are
        # ignored, the return statement is simple even when the function has
        # optional inputs.
        # grad_output is dloss/doutput; what we need to compute are
        # dloss/dinput, dloss/dweight and dloss/dbias.
        input, weight, bias = ctx.saved_tensors
        grad_input = grad_weight = grad_bias = None

        # These needs_input_grad checks are optional and there only to
        # improve efficiency. If you want to make your code simpler, you can
        # skip them. Returning gradients for inputs that don't require it is
        # not an error.
        # To get the formulas right, first write out the matrix equation
        # output = input @ weight^T + bias and keep track of every shape.
        if ctx.needs_input_grad[0]:
            # dloss/dinput: forward used weight.t(), backward uses weight,
            # which makes the shapes line up
            grad_input = grad_output.mm(weight)
        if ctx.needs_input_grad[1]:
            # dloss/dweight: the gradient used to update the parameter
            grad_weight = grad_output.t().mm(input)
        if bias is not None and ctx.needs_input_grad[2]:
            grad_bias = grad_output.sum(0)

        return grad_input, grad_weight, grad_bias
```

At first glance, the gradient computation here looked a bit off to me -_-!

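In fact, the gradients above are correct, and this can be proven, just not by naively applying the chain rule to whole matrices. Matrix derivatives have to be worked out from the definition. Because that definition is written in terms of scalar products, the ordinary chain rule holds entry by entry.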

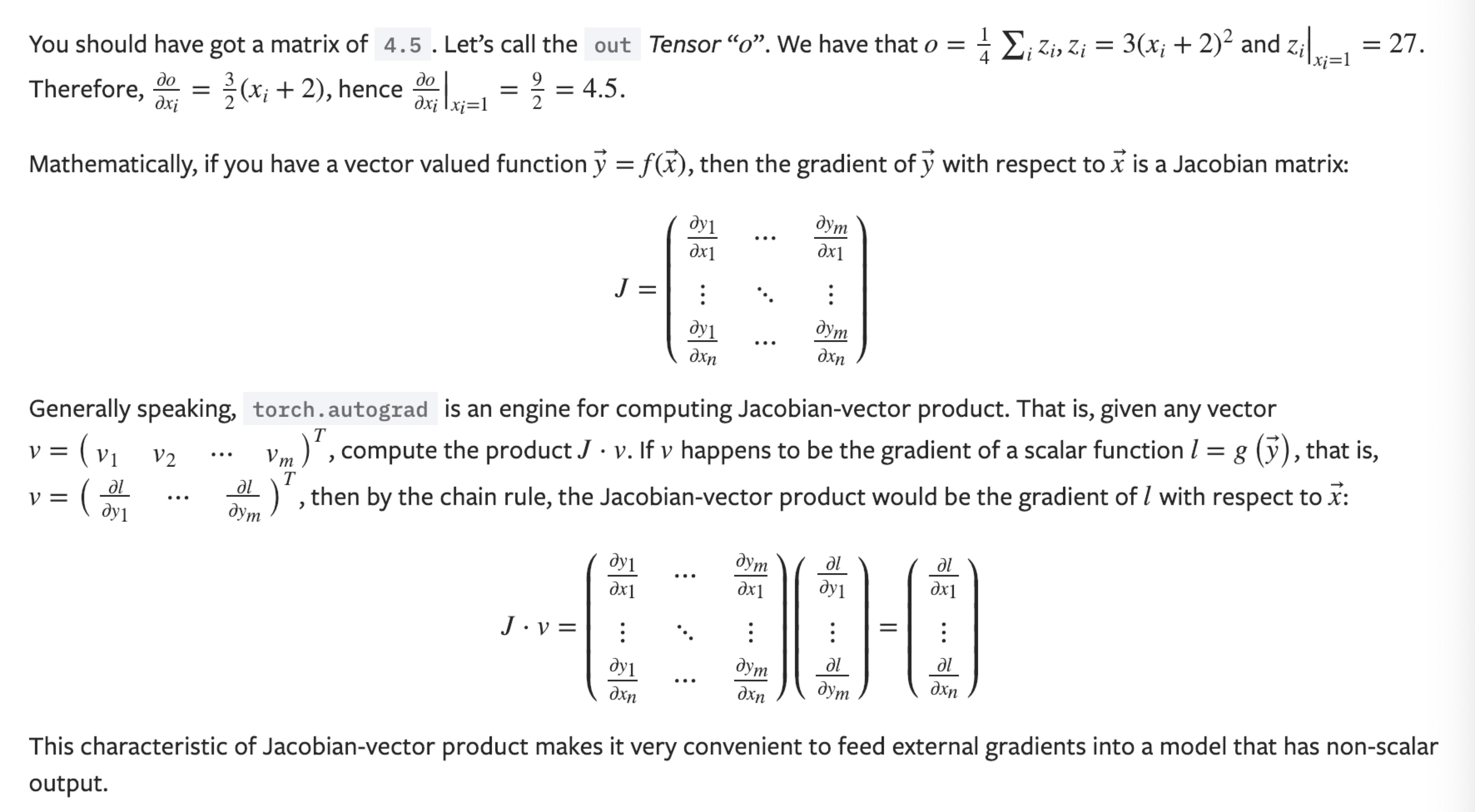
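The claim can be checked entry by entry for `LinearFunction`. The symbols N (batch size), I (input features), O (output features) and G (standing for `grad_output`) are notation introduced here. Each step uses only the scalar chain rule, summing over every output entry that depends on the variable:

$$
\begin{aligned}
Y &= X W^\top + \mathbf{1} b^\top, \qquad X \in \mathbb{R}^{N\times I},\ W \in \mathbb{R}^{O\times I},\ b \in \mathbb{R}^{O} \\
Y_{nj} &= \sum_{k} X_{nk} W_{jk} + b_j, \qquad G_{nj} := \frac{\partial L}{\partial Y_{nj}} \\
\frac{\partial L}{\partial X_{nk}} &= \sum_{j} G_{nj} \frac{\partial Y_{nj}}{\partial X_{nk}} = \sum_{j} G_{nj} W_{jk} = (G W)_{nk} \\
\frac{\partial L}{\partial W_{jk}} &= \sum_{n} G_{nj} \frac{\partial Y_{nj}}{\partial W_{jk}} = \sum_{n} G_{nj} X_{nk} = (G^\top X)_{jk} \\
\frac{\partial L}{\partial b_j} &= \sum_{n} G_{nj}
\end{aligned}
$$

These are exactly `grad_output.mm(weight)`, `grad_output.t().mm(input)` and `grad_output.sum(0)` in the code.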

Matrix calculus deserves a closer look! See: Matrix derivatives.

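Scalar-by-vector and vector-by-vector derivatives are much easier to handle. For matrix-by-matrix derivatives, a convenient approach is to vectorize the matrices first.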
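Here is a sketch of the vectorization idea, assuming PyTorch 1.8 or later (for `torch.kron`). `torch.autograd.functional.jacobian` returns the 4-D derivative of Y = X Wᵀ with respect to X. Reshaping it gives an ordinary matrix, and the chain rule then becomes a plain matrix-vector product. `reshape` flattens row by row, so the Kronecker factor order here is for row-major vec, not the column-major vec common in textbooks.

```python
import torch
from torch.autograd.functional import jacobian

torch.manual_seed(0)
N, I, O = 4, 3, 2
X = torch.randn(N, I, dtype=torch.double)
W = torch.randn(O, I, dtype=torch.double)

# Jacobian of Y = X W^T with respect to X, shape (N, O, N, I)
J4 = jacobian(lambda x: x @ W.t(), X)
# vectorize (row-major flatten): an ordinary (N*O) x (N*I) matrix
J = J4.reshape(N * O, N * I)

# with row-major vec, vec(Y) = (I_N kron W) vec(X)
assert torch.allclose(J, torch.kron(torch.eye(N, dtype=torch.double), W))

# vectorized chain rule: vec(dL/dX) = J^T vec(dL/dY)
G = torch.randn(N, O, dtype=torch.double)  # plays the role of grad_output
grad_X = (J.t() @ G.reshape(-1)).reshape(N, I)
# same result as grad_output.mm(weight) in LinearFunction.backward
assert torch.allclose(grad_X, G @ W)
```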

A Function such as `LinearFunction` above is called through its `apply` method. It is convenient to alias it as `linear` first:

```python
# a custom Function is invoked through apply
linear = LinearFunction.apply
```

Testing

Once an operation is written, its gradients can be checked with `gradcheck`:

```python
import torch
from torch.autograd import gradcheck

# gradcheck takes a tuple of tensors as input, check if your gradient
# evaluated with these tensors are close enough to numerical
# approximations and returns True if they all verify this condition.
# Double precision keeps the finite-difference estimates accurate.
input = (torch.randn(20, 20, dtype=torch.double, requires_grad=True),
         torch.randn(30, 20, dtype=torch.double, requires_grad=True))
test = gradcheck(linear, input, eps=1e-6, atol=1e-4)
print(test)
```
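It helps to see `gradcheck` fail as well as pass. This sketch uses a hypothetical `BadSquare` whose `backward` deliberately drops the factor 2. It uses the same `eps`/`atol` as above. With `raise_exception=False`, `gradcheck` returns `False` instead of raising an error.

```python
import torch
from torch.autograd import Function, gradcheck

class BadSquare(Function):
    """Hypothetical, deliberately wrong: d(x^2)/dx is 2x, but backward returns x."""

    @staticmethod
    def forward(ctx, x):
        ctx.save_for_backward(x)
        return x * x

    @staticmethod
    def backward(ctx, grad_output):
        (x,) = ctx.saved_tensors
        return grad_output * x  # bug: missing factor of 2

x = torch.tensor([0.5, -1.0, 2.0], dtype=torch.double, requires_grad=True)
ok = gradcheck(BadSquare.apply, (x,), eps=1e-6, atol=1e-4, raise_exception=False)
print(ok)  # False: analytical and numerical gradients disagree
```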

Using Function with Module

Combining a Module with a Function gives the basic building blocks for constructing a network.

Writing a Module requires implementing two methods:

- `__init__`: the constructor;
- `forward`: the forward pass.

```python
import torch
import torch.nn as nn


class Linear(nn.Module):
    def __init__(self, input_features, output_features, bias=True):
        super(Linear, self).__init__()
        self.input_features = input_features
        self.output_features = output_features

        # nn.Parameter is a special kind of Tensor, that will get
        # automatically registered as Module's parameter once it's assigned
        # as an attribute. Parameters and buffers need to be registered, or
        # they won't appear in .parameters() (doesn't apply to buffers), and
        # won't be converted when e.g. .cuda() is called. You can use
        # .register_buffer() to register buffers.
        # nn.Parameters require gradients by default.
        self.weight = nn.Parameter(torch.Tensor(output_features, input_features))
        if bias:
            self.bias = nn.Parameter(torch.Tensor(output_features))
        else:
            # You should always register all possible parameters, but the
            # optional ones can be None if you want.
            self.register_parameter('bias', None)

        # Not a very smart way to initialize weights
        self.weight.data.uniform_(-0.1, 0.1)
        if self.bias is not None:
            self.bias.data.uniform_(-0.1, 0.1)

    def forward(self, input):
        # See the autograd section for explanation of what happens here.
        return LinearFunction.apply(input, self.weight, self.bias)

    def extra_repr(self):
        # (Optional) Set the extra information about this module. You can test
        # it by printing an object of this class.
        return 'input_features={}, output_features={}, bias={}'.format(
            self.input_features, self.output_features, self.bias is not None
        )
```
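The compact sketch below runs the same pattern end to end, including a training step. It uses a hypothetical `MulFunction` / `ElementwiseScale` pair instead of `LinearFunction` so that the block runs on its own. The Module owns the `nn.Parameter`, the Function supplies the gradient, and a standard optimizer step consumes it.

```python
import torch
import torch.nn as nn
from torch.autograd import Function

class MulFunction(Function):
    """Hypothetical elementwise op y = x * w, with w broadcast over the batch."""

    @staticmethod
    def forward(ctx, x, w):
        ctx.save_for_backward(x, w)
        return x * w

    @staticmethod
    def backward(ctx, grad_output):
        x, w = ctx.saved_tensors
        grad_x = grad_output * w
        # w has shape (features,) but was broadcast to (batch, features),
        # so its gradient is summed over the batch dimension
        grad_w = (grad_output * x).sum(0)
        return grad_x, grad_w

class ElementwiseScale(nn.Module):
    def __init__(self, features):
        super().__init__()
        # registered automatically because it is an nn.Parameter
        self.weight = nn.Parameter(torch.ones(features))

    def forward(self, x):
        return MulFunction.apply(x, self.weight)

model = ElementwiseScale(3)
opt = torch.optim.SGD(model.parameters(), lr=0.1)
x = torch.ones(2, 3)
loss = model(x).sum()
loss.backward()           # runs MulFunction.backward
print(model.weight.grad)  # each entry is 2.0 (ones summed over a batch of 2)
opt.step()
print(model.weight)       # each entry is 1 - 0.1 * 2 = 0.8
```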