问题

对词性进行标注(demo展示)

基本思路

- 编码词

- 得到编码的句子序列

- 输入LSTM

- LSTM输入Linear

- 经过log_softmax

- 取max为预测对象进行Loss计算

- loss_function = nn.NLLLoss()

- optimizer = optim.SGD(model.parameters(), lr=0.1)

代码

原理见另一个知识库笔记

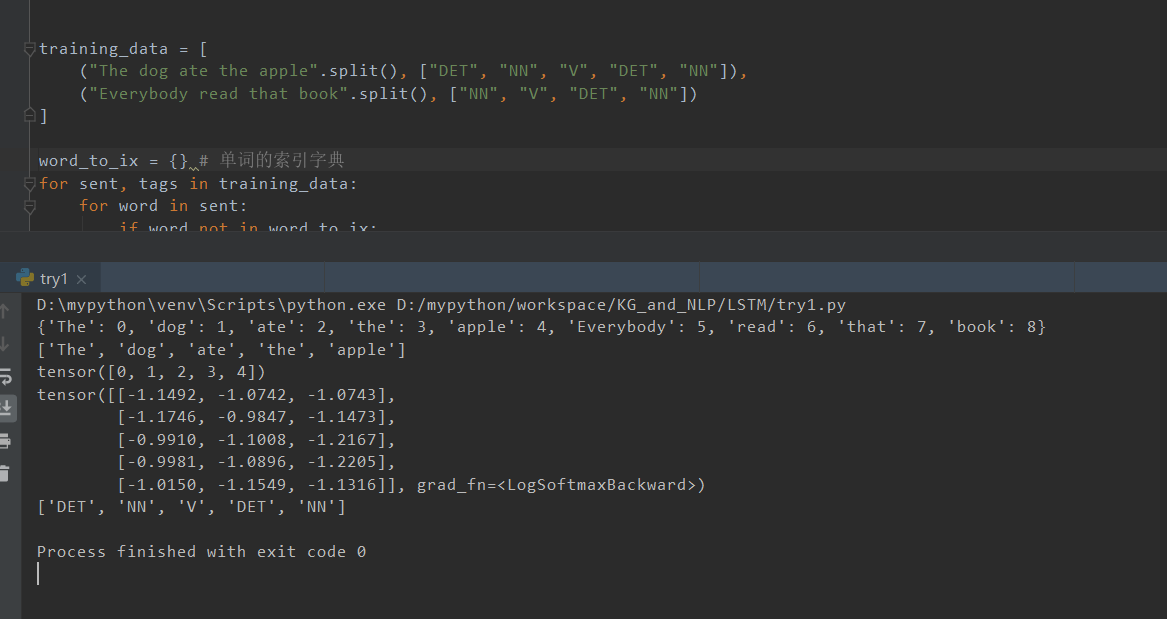

import torchimport torch.autograd as autogradimport torch.nn as nnimport torch.nn.functional as Fimport torch.optim as optimimport numpytorch.manual_seed(1)'''net = nn.LSTM(3, 4, bidirectional=True, batch_first=True) # 隐层尺度为4net2 = nn.LSTM(3, 4, bidirectional=False, batch_first=True)x = torch.rand(1, 5, 3) # 序列长度为5,输入尺度为3y=net(x)y2=net2(x)print(len(y))print(len(y2))print(y[0].shape)print(y2[0].shape)#print(y[1].shape)#print(y.shape)'''training_data = [("The dog ate the apple".split(), ["DET", "NN", "V", "DET", "NN"]),("Everybody read that book".split(), ["NN", "V", "DET", "NN"])]word_to_ix = {} # 单词的索引字典for sent, tags in training_data:for word in sent:if word not in word_to_ix:word_to_ix[word] = len(word_to_ix)print(word_to_ix)tag_to_ix = {"DET": 0, "NN": 1, "V": 2} # 手工设定词性标签数据字典index_to_tag={0:"DET", 1:"NN",2:"V"}class LSTMTagger(nn.Module):def __init__(self, embedding_dim, hidden_dim, vocab_size, tagset_size):super(LSTMTagger, self).__init__()self.hidden_dim = hidden_dimself.word_embeddings = nn.Embedding(vocab_size, embedding_dim)self.lstm = nn.LSTM(embedding_dim, hidden_dim)self.hidden2tag = nn.Linear(hidden_dim, tagset_size)self.hidden = self.init_hidden()def init_hidden(self):return (autograd.Variable(torch.zeros(1, 1, self.hidden_dim)),autograd.Variable(torch.zeros(1, 1, self.hidden_dim)))def forward(self, sentence):embeds = self.word_embeddings(sentence)lstm_out, self.hidden = self.lstm(embeds.view(len(sentence), 1, -1), self.hidden)tag_space = self.hidden2tag(lstm_out.view(len(sentence), -1))tag_scores = F.log_softmax(tag_space,dim=1)return tag_scoresdef prepare_sequence(seq, to_ix):idxs = [to_ix[w] for w in seq]tensor = torch.LongTensor(idxs)return autograd.Variable(tensor)model = LSTMTagger(300, 100, len(word_to_ix), len(tag_to_ix))loss_function = nn.NLLLoss()optimizer = optim.SGD(model.parameters(), lr=0.1)inputs = prepare_sequence(training_data[0][0], word_to_ix)tag_scores = model(inputs)print(training_data[0][0])print(inputs)print(tag_scores)for epoch in range(300):for sentence, tags in training_data:model.zero_grad()model.hidden = model.init_hidden()sentence_in = prepare_sequence(sentence, word_to_ix)#print(type(sentence_in))#print((sentence_in.shape))targets = prepare_sequence(tags, tag_to_ix)tag_scores = model(sentence_in)loss = loss_function(tag_scores, targets)loss.backward()optimizer.step()# 来检验下模型训练的结果inputs = prepare_sequence(training_data[0][0], word_to_ix)tag_scores = model(inputs)index=torch.max(tag_scores,1)[1].data.numpy()index=index.tolist()result=[index_to_tag[i] for i in index]print(result)

结果

利用第一句数据为预测对象进行预测,得到完全正确的结果

当然了数据这么少,还用数据测试不太妥,不过作为demo展示