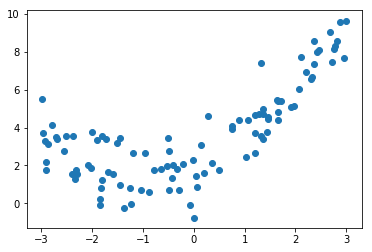

Prepare the data

    import numpy as np
    import matplotlib.pyplot as plt

    np.random.seed(666)
    x = np.random.uniform(-3.0, 3.0, size=100)
    X = x.reshape(-1, 1)
    y = 0.5 * x**2 + x + 2 + np.random.normal(0, 1, size=100)

    plt.scatter(x, y)
    plt.show()

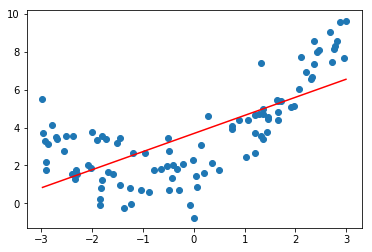

Linear regression model

    from sklearn.linear_model import LinearRegression
    from sklearn.metrics import mean_squared_error

    lin_reg = LinearRegression()
    lin_reg.fit(X, y)
    lin_reg.score(X, y)  # R^2: 0.49537078118650091

    y_predict = lin_reg.predict(X)

    # Sort by x so the fitted line is drawn from left to right
    plt.scatter(x, y)
    plt.plot(np.sort(x), y_predict[np.argsort(x)], color='r')
    plt.show()

    mean_squared_error(y, y_predict)  # 3.0750025765636577

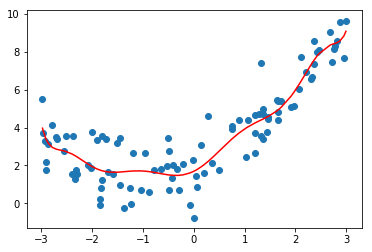

Polynomial regression model

    from sklearn.pipeline import Pipeline
    from sklearn.preprocessing import PolynomialFeatures
    from sklearn.preprocessing import StandardScaler

    # Build the pipeline: polynomial features, scaling, linear regression
    def PolynomialRegression(degree):
        return Pipeline([
            ("poly", PolynomialFeatures(degree=degree)),
            ("std_scaler", StandardScaler()),
            ("lin_reg", LinearRegression())
        ])

    # Fit the model
    poly2_reg = PolynomialRegression(degree=2)  # degree-2 polynomial
    poly2_reg.fit(X, y)

    # Predictions
    y2_predict = poly2_reg.predict(X)

    # Error
    mean_squared_error(y, y2_predict)  # 1.0987392142417856

    # Visualization
    plt.scatter(x, y)
    plt.plot(np.sort(x), y2_predict[np.argsort(x)], color='r')
    plt.show()

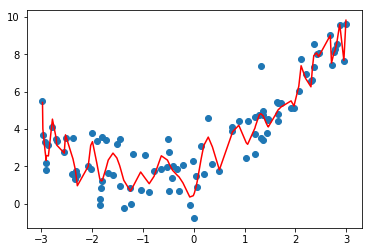

Tuning the degree

    poly10_reg = PolynomialRegression(degree=10)  # degree-10 polynomial
    poly10_reg.fit(X, y)

    y10_predict = poly10_reg.predict(X)
    mean_squared_error(y, y10_predict)  # 1.0508466763764164

    plt.scatter(x, y)
    plt.plot(np.sort(x), y10_predict[np.argsort(x)], color='r')
    plt.show()

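The plot shows that the degree-10 fit is already slightly overfitting: the training MSE only drops from 1.0987 to 1.0508, while the curve starts bending to follow the noise in the data.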

    poly100_reg = PolynomialRegression(degree=100)  # degree-100 polynomial
    poly100_reg.fit(X, y)

    y100_predict = poly100_reg.predict(X)
    mean_squared_error(y, y100_predict)  # 0.68743577834336944

    plt.scatter(x, y)
    plt.plot(np.sort(x), y100_predict[np.argsort(x)], color='r')
    plt.show()
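All the MSE values so far are measured on the same data the models were fitted on, so they can only go down as the degree goes up. One common way to make overfitting visible is to hold out part of the data and compare training error with test error. The sketch below is not part of the original walkthrough: it assumes a `train_test_split` with an arbitrarily chosen `random_state=666`, and the `results` dictionary is just an illustrative name. It rebuilds the same data and pipeline so that it runs on its own.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler


def PolynomialRegression(degree):
    # Same pipeline as in the post: polynomial features, scaling, linear regression
    return Pipeline([
        ("poly", PolynomialFeatures(degree=degree)),
        ("std_scaler", StandardScaler()),
        ("lin_reg", LinearRegression()),
    ])


# Same synthetic data as in the post
np.random.seed(666)
x = np.random.uniform(-3.0, 3.0, size=100)
X = x.reshape(-1, 1)
y = 0.5 * x**2 + x + 2 + np.random.normal(0, 1, size=100)

# Assumption: a default 75/25 split; the seed is arbitrary
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

results = {}  # degree -> (train MSE, test MSE)
for degree in (2, 10, 100):
    model = PolynomialRegression(degree)
    model.fit(X_train, y_train)
    train_mse = mean_squared_error(y_train, model.predict(X_train))
    test_mse = mean_squared_error(y_test, model.predict(X_test))
    results[degree] = (train_mse, test_mse)
    print(f"degree={degree:3d}  train MSE={train_mse:.3f}  test MSE={test_mse:.3f}")
```

If the high-degree models are overfitting, their training MSE keeps shrinking while the test MSE stops improving or gets worse. The exact numbers depend on the split, so none are quoted here.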

Summary

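A large part of machine learning is about dealing with overfitting. Raising the degree from 2 to 10 to 100 keeps lowering the training MSE (1.0987, 1.0508, 0.6874), but a lower training error does not by itself mean a better model.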
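One way to act on this when choosing the degree is to score each candidate with cross-validation rather than with training error. The following is a minimal sketch under assumptions that are not in the original post: the candidate degrees, the 5-fold `KFold` splitter and its seed, and the names `cv_mse` and `best_degree` are all chosen for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler


def PolynomialRegression(degree):
    # Same pipeline as in the post
    return Pipeline([
        ("poly", PolynomialFeatures(degree=degree)),
        ("std_scaler", StandardScaler()),
        ("lin_reg", LinearRegression()),
    ])


# Same synthetic data as in the post
np.random.seed(666)
x = np.random.uniform(-3.0, 3.0, size=100)
X = x.reshape(-1, 1)
y = 0.5 * x**2 + x + 2 + np.random.normal(0, 1, size=100)

# Assumption: 5 shuffled folds with an arbitrary seed
cv = KFold(n_splits=5, shuffle=True, random_state=666)

cv_mse = {}  # degree -> mean cross-validated MSE
for degree in (1, 2, 3, 5, 10):
    scores = cross_val_score(
        PolynomialRegression(degree), X, y,
        scoring="neg_mean_squared_error", cv=cv,
    )
    cv_mse[degree] = -scores.mean()  # scores are negated MSE, so flip the sign
    print(f"degree={degree:2d}  CV MSE={cv_mse[degree]:.3f}")

best_degree = min(cv_mse, key=cv_mse.get)
print("degree with lowest CV MSE:", best_degree)
```

Because every fold is scored on data the model did not see during fitting, a degree that only memorizes noise gets no credit for it. A straight line (degree 1) should score clearly worse than degree 2 here, since the data were generated from a quadratic.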