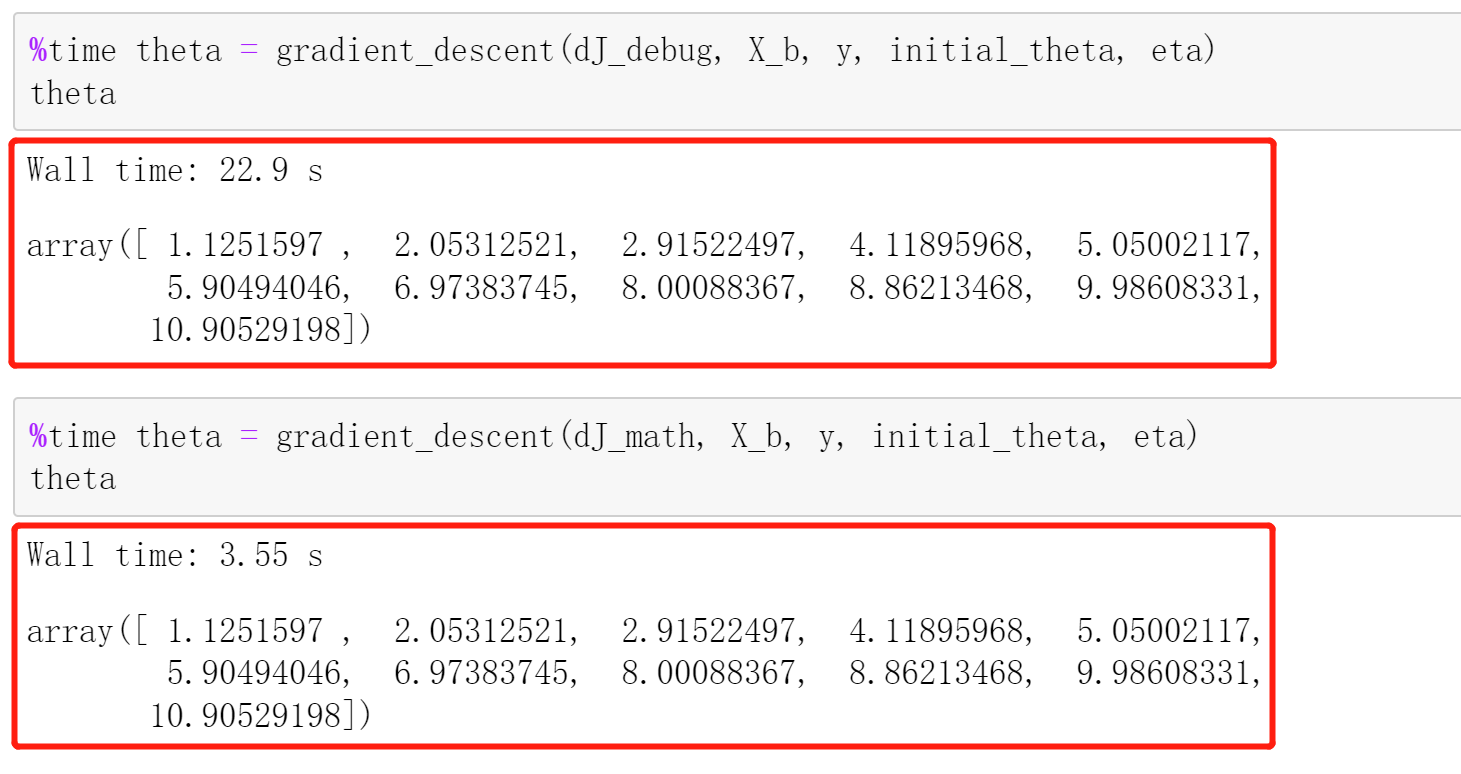

我们无法知道梯度公式是否正确,所以想了一种虽然慢一些但是更简单的梯度求解公式,这样可以用来求解正确的参数值。

import numpy as npimport matplotlib.pyplot as plt# 准备数据np.random.seed(666)X = np.random.random(size=(1000, 10))true_theta = np.arange(1, 12, dtype=float)X_b = np.hstack([np.ones((len(X), 1)), X])y = X_b.dot(true_theta) + np.random.normal(size=1000)# 损失函数def J(theta, X_b, y):try:return np.sum((y - X_b.dot(theta))**2) / len(X_b)except:return float('inf')# 梯度公式def dJ_math(theta, X_b, y):return X_b.T.dot(X_b.dot(theta) - y) * 2. / len(y)# 梯度调试公式def dJ_debug(theta, X_b, y, epsilon=0.01):res = np.empty(len(theta))for i in range(len(theta)):theta_1 = theta.copy()theta_1[i] += epsilontheta_2 = theta.copy()theta_2[i] -= epsilonres[i] = (J(theta_1, X_b, y) - J(theta_2, X_b, y)) / (2 * epsilon)return res# 梯度下降函数def gradient_descent(dJ, X_b, y, initial_theta, eta, n_iters = 1e4, epsilon=1e-8):theta = initial_thetacur_iter = 0while cur_iter < n_iters:gradient = dJ(theta, X_b, y)last_theta = thetatheta = theta - eta * gradientif(abs(J(theta, X_b, y) - J(last_theta, X_b, y)) < epsilon):breakcur_iter += 1return theta# 参数初始化X_b = np.hstack([np.ones((len(X), 1)), X])initial_theta = np.zeros(X_b.shape[1])eta = 0.01