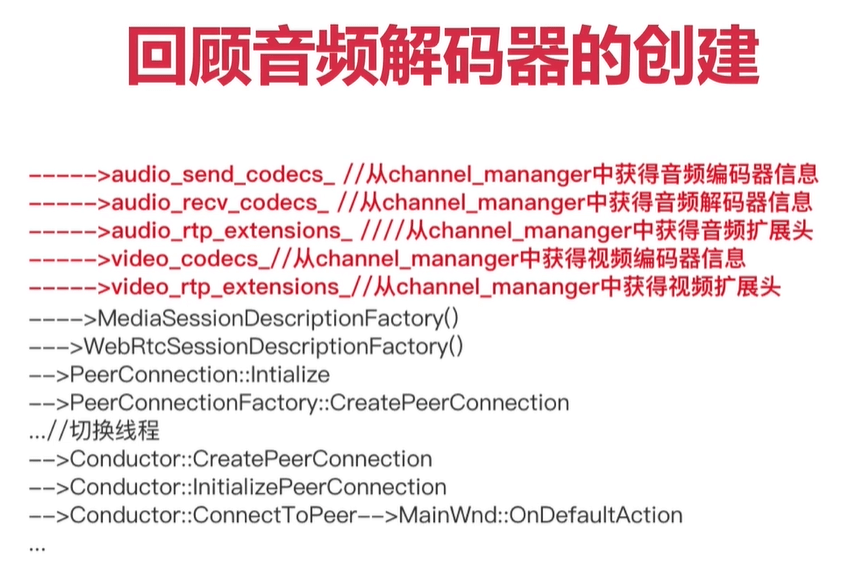

Collecting the audio decoders

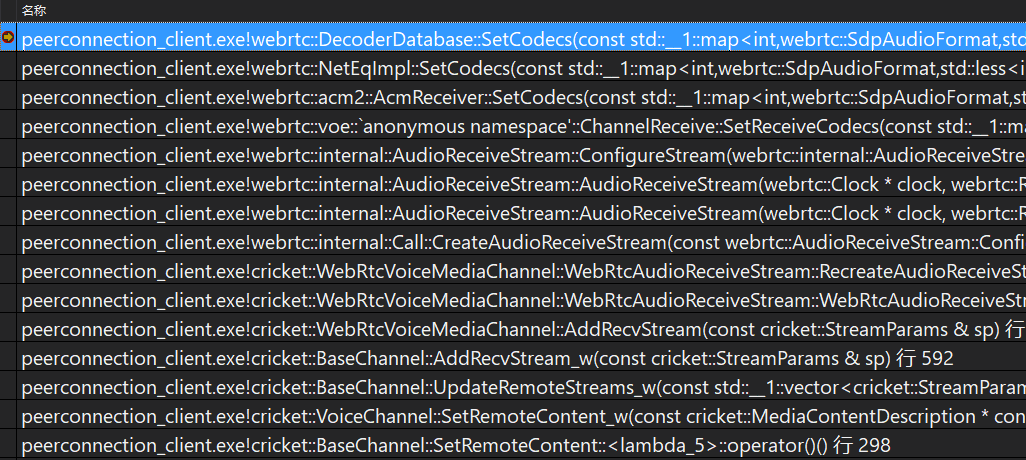

Call flow for creating the audio decoder

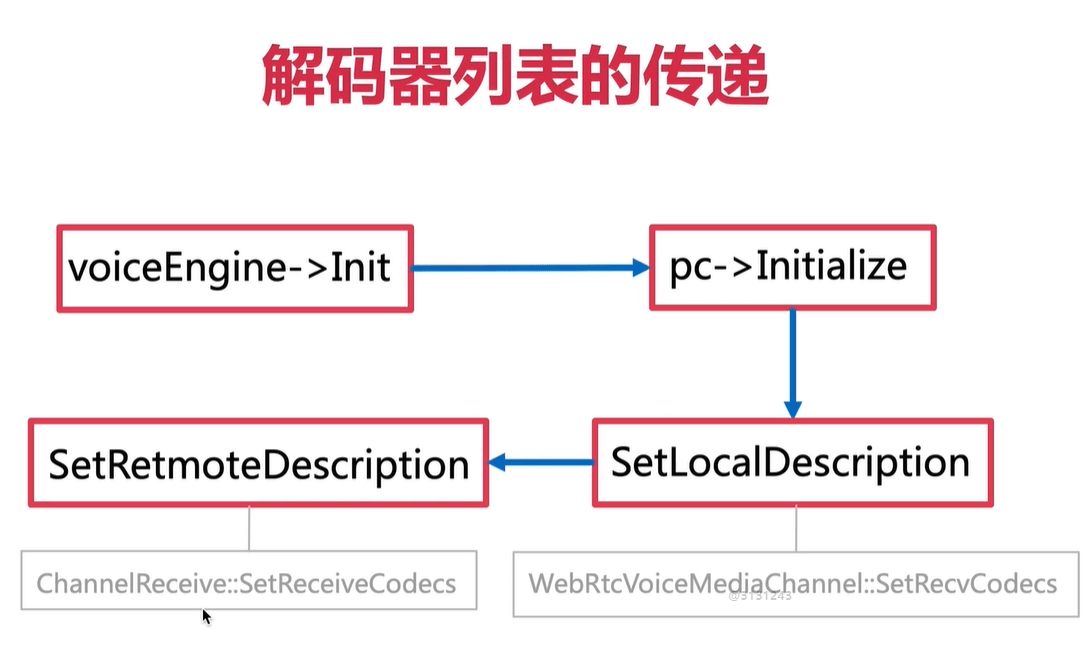

Passing the decoder list

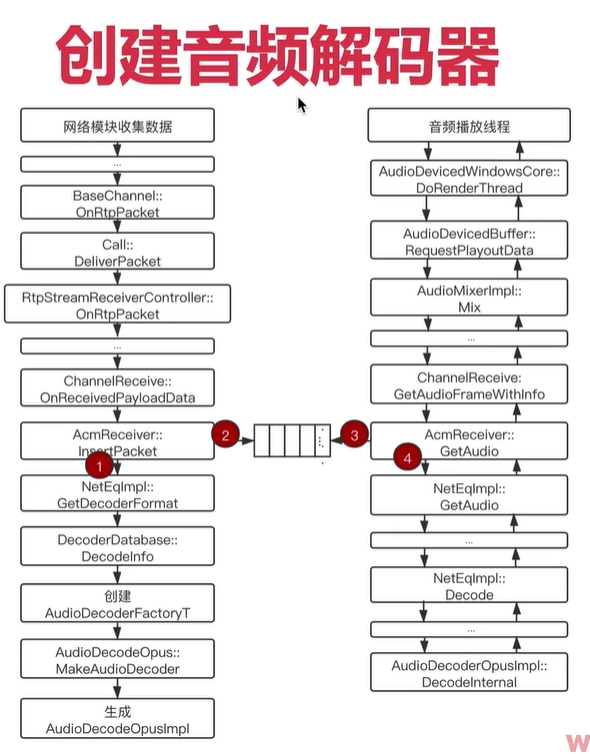

Creating the audio decoder

NetEq and Decoder

Creating the audio decoder

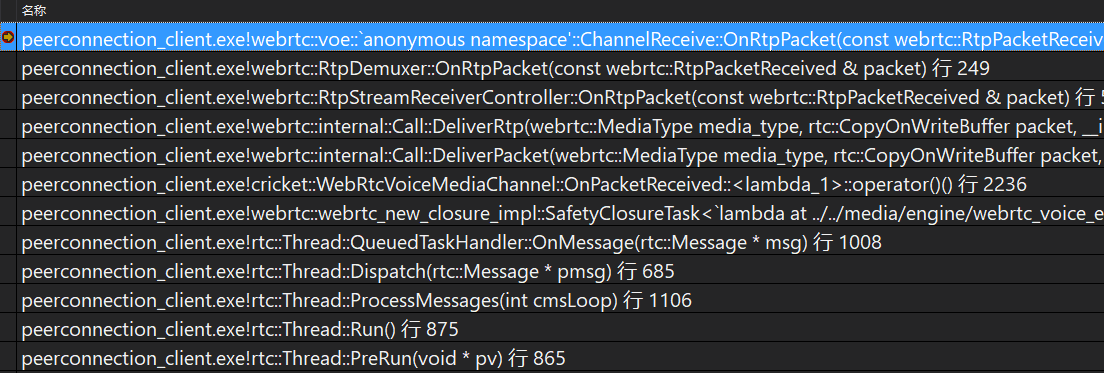

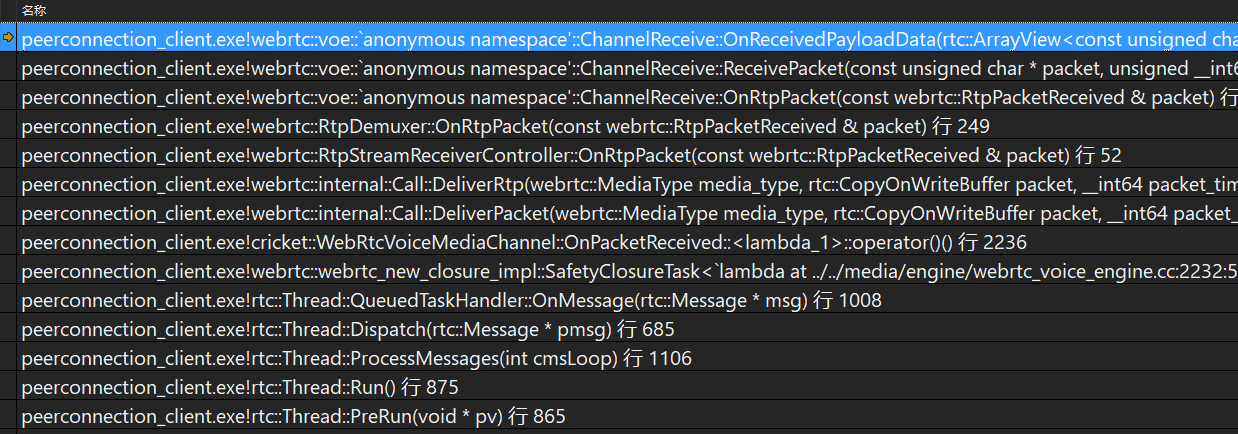

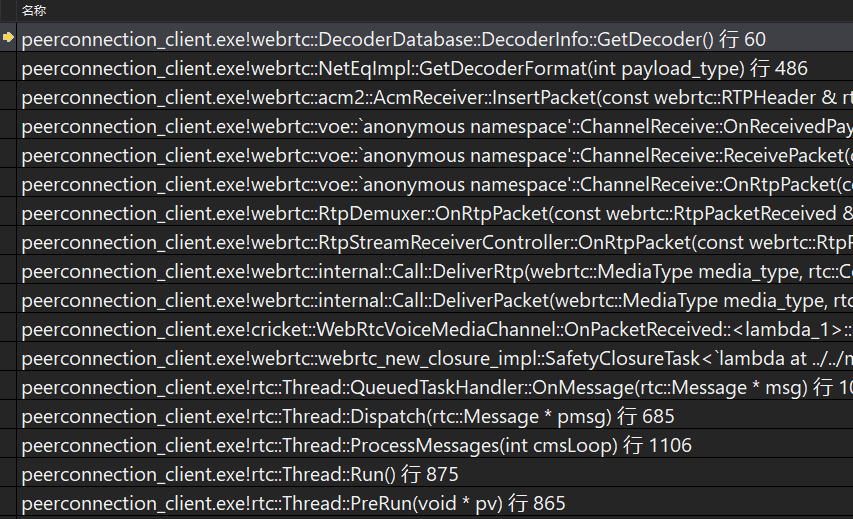

Code walkthrough: receiving and decoding audio

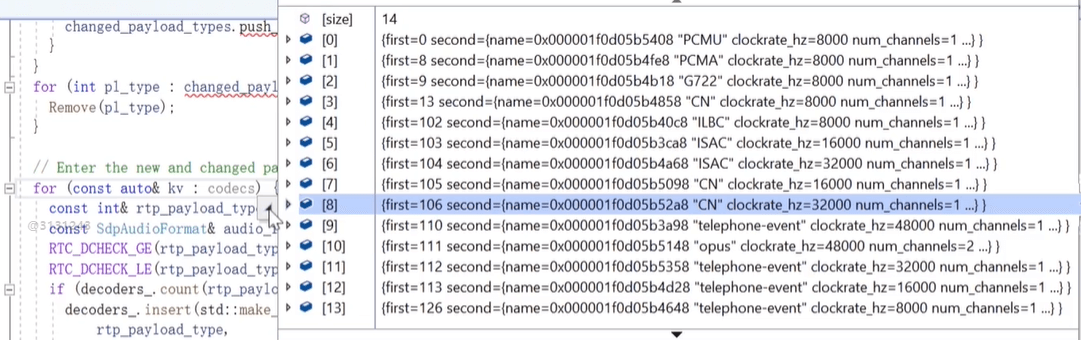

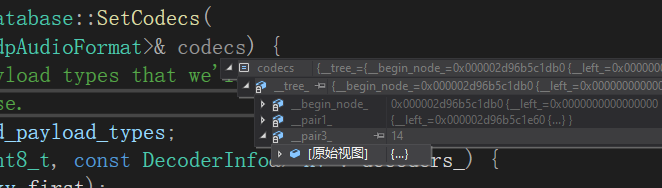

DecoderDatabase::SetCodecs

Sets the codecs.

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\audio_coding\neteq\decoder_database.cc

    std::vector<int> DecoderDatabase::SetCodecs(
        const std::map<int, SdpAudioFormat>& codecs) {
      // First collect all payload types that we'll remove or reassign, then remove
      // them from the database.
      std::vector<int> changed_payload_types;
      // On the first call decoders_ is still empty, so this loop body is skipped.
      for (const std::pair<uint8_t, const DecoderInfo&> kv : decoders_) {
        auto i = codecs.find(kv.first);
        if (i == codecs.end() || i->second != kv.second.GetFormat()) {
          changed_payload_types.push_back(kv.first);
        }
      }
      for (int pl_type : changed_payload_types) {
        Remove(pl_type);
      }
      // Enter the new and changed payload type mappings into the database.
      for (const auto& kv : codecs) {
        const int& rtp_payload_type = kv.first;
        const SdpAudioFormat& audio_format = kv.second;
        RTC_DCHECK_GE(rtp_payload_type, 0);
        RTC_DCHECK_LE(rtp_payload_type, 0x7f);
        if (decoders_.count(rtp_payload_type) == 0) {
          decoders_.insert(std::make_pair(
              rtp_payload_type,
              DecoderInfo(audio_format, codec_pair_id_, decoder_factory_.get())));
        } else {
          // The mapping for this payload type hasn't changed.
        }
      }
      return changed_payload_types;
    }
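The two-pass update in SetCodecs can be easier to follow in isolation. Below is a minimal sketch, not the WebRTC implementation: `ToyDecoderDatabase` is a hypothetical class, and a plain `std::string` stands in for `SdpAudioFormat` and `DecoderInfo`. It only illustrates the "collect changed entries, remove them, then insert what is missing" pattern.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for DecoderDatabase: payload type -> format name.
class ToyDecoderDatabase {
 public:
  // Returns the payload types that were removed or reassigned.
  std::vector<int> SetCodecs(const std::map<int, std::string>& codecs) {
    std::vector<int> changed;
    // Pass 1: find existing entries that are absent from `codecs` or whose
    // format differs. On the very first call decoders_ is empty.
    for (const auto& kv : decoders_) {
      auto it = codecs.find(kv.first);
      if (it == codecs.end() || it->second != kv.second) {
        changed.push_back(kv.first);
      }
    }
    for (int payload_type : changed) {
      decoders_.erase(payload_type);
    }
    // Pass 2: insert every mapping that is not (or no longer) present.
    for (const auto& kv : codecs) {
      assert(kv.first >= 0 && kv.first <= 0x7f);  // RTP payload types are 7 bits.
      decoders_.insert(kv);  // No effect when the key already exists.
    }
    return changed;
  }

  const std::map<int, std::string>& decoders() const { return decoders_; }

 private:
  std::map<int, std::string> decoders_;
};
```

Calling `SetCodecs` a second time with one format changed returns only that payload type; unchanged entries keep their existing objects, which in the real code means an already created decoder is not thrown away.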

Why are these values not printed on my machine? Only pointers are shown.

ChannelReceive::OnRtpPacket

h:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\audio\channel_receive.cc

WebRTC calls this function every time it receives data.

    // May be called on either worker thread or network thread.
    void ChannelReceive::OnRtpPacket(const RtpPacketReceived& packet) {
      int64_t now_ms = rtc::TimeMillis();
      {
        MutexLock lock(&sync_info_lock_);
        last_received_rtp_timestamp_ = packet.Timestamp();
        last_received_rtp_system_time_ms_ = now_ms;
      }
      // Store playout timestamp for the received RTP packet
      UpdatePlayoutTimestamp(false, now_ms);
      const auto& it = payload_type_frequencies_.find(packet.PayloadType());
      if (it == payload_type_frequencies_.end())
        return;
      // TODO(nisse): Set payload_type_frequency earlier, when packet is parsed.
      RtpPacketReceived packet_copy(packet);
      packet_copy.set_payload_type_frequency(it->second);
      rtp_receive_statistics_->OnRtpPacket(packet_copy);
      RTPHeader header;
      packet_copy.GetHeader(&header);
      // Interpolates absolute capture timestamp RTP header extension.
      header.extension.absolute_capture_time =
          absolute_capture_time_receiver_.OnReceivePacket(
              AbsoluteCaptureTimeReceiver::GetSource(header.ssrc,
                                                     header.arrOfCSRCs),
              header.timestamp,
              rtc::saturated_cast<uint32_t>(packet_copy.payload_type_frequency()),
              header.extension.absolute_capture_time);
      ReceivePacket(packet_copy.data(), packet_copy.size(), header);
    }

ChannelReceive::ReceivePacket

    void ChannelReceive::ReceivePacket(const uint8_t* packet,
                                       size_t packet_length,
                                       const RTPHeader& header) {
      const uint8_t* payload = packet + header.headerLength;
      assert(packet_length >= header.headerLength);
      size_t payload_length = packet_length - header.headerLength;
      size_t payload_data_length = payload_length - header.paddingLength;
      // E2EE Custom Audio Frame Decryption (This is optional).
      // Keep this buffer around for the lifetime of the OnReceivedPayloadData call.
      rtc::Buffer decrypted_audio_payload;
      if (frame_decryptor_ != nullptr) {  // A nullptr frame_decryptor_ means the audio packet is not encrypted.
        const size_t max_plaintext_size = frame_decryptor_->GetMaxPlaintextByteSize(
            cricket::MEDIA_TYPE_AUDIO, payload_length);
        decrypted_audio_payload.SetSize(max_plaintext_size);
        const std::vector<uint32_t> csrcs(header.arrOfCSRCs,
                                          header.arrOfCSRCs + header.numCSRCs);
        const FrameDecryptorInterface::Result decrypt_result =
            frame_decryptor_->Decrypt(
                cricket::MEDIA_TYPE_AUDIO, csrcs,
                /*additional_data=*/nullptr,
                rtc::ArrayView<const uint8_t>(payload, payload_data_length),
                decrypted_audio_payload);
        if (decrypt_result.IsOk()) {
          decrypted_audio_payload.SetSize(decrypt_result.bytes_written);
        } else {
          // Interpret failures as a silent frame.
          decrypted_audio_payload.SetSize(0);
        }
        payload = decrypted_audio_payload.data();
        payload_data_length = decrypted_audio_payload.size();
      } else if (crypto_options_.sframe.require_frame_encryption) {
        RTC_DLOG(LS_ERROR)
            << "FrameDecryptor required but not set, dropping packet";
        payload_data_length = 0;
      }
      rtc::ArrayView<const uint8_t> payload_data(payload, payload_data_length);
      if (frame_transformer_delegate_) {
        // Asynchronously transform the received payload. After the payload is
        // transformed, the delegate will call OnReceivedPayloadData to handle it.
        frame_transformer_delegate_->Transform(payload_data, header, remote_ssrc_);
      } else {
        OnReceivedPayloadData(payload_data, header);
      }
    }
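The first few lines of ReceivePacket are plain pointer arithmetic: skip the RTP header, then drop the trailing padding. The sketch below isolates that step. `PayloadView` and `ExtractPayload` are hypothetical names, not WebRTC types, and the second `assert` is an extra sanity check that the original function does not perform.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical, simplified view of the payload region of a packet.
struct PayloadView {
  const std::uint8_t* data;
  std::size_t size;
};

// Mirrors the arithmetic at the top of ReceivePacket:
//   payload      = packet + header_length
//   payload_size = packet_length - header_length - padding_length
PayloadView ExtractPayload(const std::uint8_t* packet,
                           std::size_t packet_length,
                           std::size_t header_length,
                           std::size_t padding_length) {
  assert(packet_length >= header_length);
  const std::size_t payload_length = packet_length - header_length;
  // Assumption for this sketch only: reject padding larger than the payload,
  // since the unsigned subtraction below would otherwise wrap around.
  assert(payload_length >= padding_length);
  return PayloadView{packet + header_length, payload_length - padding_length};
}
```

For a 20-byte packet with a 12-byte header and 3 bytes of padding, the view starts at offset 12 and covers 5 bytes.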

ChannelReceive::OnReceivedPayloadData

    void ChannelReceive::OnReceivedPayloadData(
        rtc::ArrayView<const uint8_t> payload,
        const RTPHeader& rtpHeader) {
      if (!Playing()) {
        // Avoid inserting into NetEQ when we are not playing. Count the
        // packet as discarded.
        // If we have a source_tracker_, tell it that the frame has been
        // "delivered". Normally, this happens in AudioReceiveStream when audio
        // frames are pulled out, but when playout is muted, nothing is pulling
        // frames. The downside of this approach is that frames delivered this way
        // won't be delayed for playout, and therefore will be unsynchronized with
        // (a) audio delay when playing and (b) any audio/video synchronization. But
        // the alternative is that muting playout also stops the SourceTracker from
        // updating RtpSource information.
        if (source_tracker_) {
          RtpPacketInfos::vector_type packet_vector = {
              RtpPacketInfo(rtpHeader, clock_->TimeInMilliseconds())};
          source_tracker_->OnFrameDelivered(RtpPacketInfos(packet_vector));
        }
        return;
      }
      // Push the incoming payload (parsed and ready for decoding) into the ACM
      // acm2::AcmReceiver owns the NetEq instance.
      if (acm_receiver_.InsertPacket(rtpHeader, payload) != 0) {
        RTC_DLOG(LS_ERROR) << "ChannelReceive::OnReceivedPayloadData() unable to "
                              "push data to the ACM";
        return;
      }
      int64_t round_trip_time = 0;
      rtp_rtcp_->RTT(remote_ssrc_, &round_trip_time, NULL, NULL, NULL);
      std::vector<uint16_t> nack_list = acm_receiver_.GetNackList(round_trip_time);
      if (!nack_list.empty()) {
        // Can't use nack_list.data() since it's not supported by all
        // compilers.
        ResendPackets(&(nack_list[0]), static_cast<int>(nack_list.size()));
      }
    }

AcmReceiver::InsertPacket

    int AcmReceiver::InsertPacket(const RTPHeader& rtp_header,
                                  rtc::ArrayView<const uint8_t> incoming_payload) {
      if (incoming_payload.empty()) {
        neteq_->InsertEmptyPacket(rtp_header);
        return 0;
      }
      int payload_type = rtp_header.payloadType;
      auto format = neteq_->GetDecoderFormat(payload_type);
      if (format && absl::EqualsIgnoreCase(format->sdp_format.name, "red")) {
        // This is a RED packet. Get the format of the audio codec.
        payload_type = incoming_payload[0] & 0x7f;
        format = neteq_->GetDecoderFormat(payload_type);
      }
      if (!format) {
        RTC_LOG_F(LS_ERROR) << "Payload-type " << payload_type
                            << " is not registered.";
        return -1;
      }
      {
        MutexLock lock(&mutex_);
        if (absl::EqualsIgnoreCase(format->sdp_format.name, "cn")) {
          if (last_decoder_ && last_decoder_->num_channels > 1) {
            // This is a CNG and the audio codec is not mono, so skip pushing in
            // packets into NetEq.
            return 0;
          }
        } else {
          // Updated frequently.
          last_decoder_ = DecoderInfo{
              /*payload_type=*/payload_type,
              /*sample_rate_hz=*/format->sample_rate_hz,
              /*num_channels=*/format->num_channels,
              /*sdp_format=*/std::move(format->sdp_format)};
        }
      }  // |mutex_| is released.
      if (neteq_->InsertPacket(rtp_header, incoming_payload) < 0) {
        RTC_LOG(LERROR) << "AcmReceiver::InsertPacket "
                        << static_cast<int>(rtp_header.payloadType)
                        << " Failed to insert packet";
        return -1;
      }
      return 0;
    }
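The RED branch above reads the real codec's payload type with `incoming_payload[0] & 0x7f`. In the RED block header the top bit of the first byte is a flag and the lower seven bits carry the block's payload type, so the mask strips the flag. A minimal sketch of just that step follows; `RedBlockPayloadType` is a hypothetical helper, not a WebRTC function.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Returns the 7-bit payload type stored in the first RED header byte.
// The most significant bit is a flag and is masked off.
int RedBlockPayloadType(const std::vector<std::uint8_t>& red_payload) {
  assert(!red_payload.empty());  // The caller must pass a non-empty payload.
  return red_payload[0] & 0x7f;
}
```

For example, a first byte of `0xEF` (flag bit set) and a first byte of `0x6F` (flag bit clear) both yield payload type 111.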

Next call: neteq_->GetDecoderFormat(payload_type);

NetEqImpl::GetDecoderFormat

Gets the decoder's format information.

It first looks up the DecoderDatabase::DecoderInfo for the payload type, then uses that info to obtain the decoder.

    absl::optional<NetEq::DecoderFormat> NetEqImpl::GetDecoderFormat(
        int payload_type) const {
      MutexLock lock(&mutex_);
      const DecoderDatabase::DecoderInfo* const di =
          decoder_database_->GetDecoderInfo(payload_type);
      if (di) {
        const AudioDecoder* const decoder = di->GetDecoder();
        // TODO(kwiberg): Why the special case for RED?
        return DecoderFormat{
            /*sample_rate_hz=*/di->IsRed() ? 8000 : di->SampleRateHz(),
            /*num_channels=*/
            decoder ? rtc::dchecked_cast<int>(decoder->Channels()) : 1,
            /*sdp_format=*/di->GetFormat()};
      } else {
        // Payload type not registered.
        return absl::nullopt;
      }
    }

DecoderDatabase::DecoderInfo::GetDecoder

This checks whether the decoder has already been created and creates it if it has not.

    AudioDecoder* DecoderDatabase::DecoderInfo::GetDecoder() const {
      if (subtype_ != Subtype::kNormal) {
        // These are handled internally, so they have no AudioDecoder objects.
        return nullptr;
      }
      if (!decoder_) {
        // TODO(ossu): Keep a check here for now, since a number of tests create
        // DecoderInfos without factories.
        RTC_DCHECK(factory_);
        decoder_ = factory_->MakeAudioDecoder(audio_format_, codec_pair_id_);
      }
      RTC_DCHECK(decoder_) << "Failed to create: " << rtc::ToString(audio_format_);
      return decoder_.get();
    }
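GetDecoder is a lazy-initialization accessor: the decoder is built by the factory on the first call and reused afterwards. The sketch below shows the same pattern with hypothetical stand-ins (`ToyDecoder`, `ToyDecoderInfo`, and a `std::function` in place of the decoder factory). Since the accessor is `const`, the cached pointer has to be declared `mutable`.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for AudioDecoder.
struct ToyDecoder {
  std::string format;
};

// Hypothetical stand-in for DecoderDatabase::DecoderInfo.
class ToyDecoderInfo {
 public:
  using Factory =
      std::function<std::unique_ptr<ToyDecoder>(const std::string&)>;

  ToyDecoderInfo(std::string format, Factory factory)
      : format_(std::move(format)), factory_(std::move(factory)) {}

  // Creates the decoder on first use and returns the cached one afterwards.
  ToyDecoder* GetDecoder() const {
    if (!decoder_) {
      assert(factory_);  // Plays the role of RTC_DCHECK(factory_).
      decoder_ = factory_(format_);
    }
    return decoder_.get();
  }

 private:
  std::string format_;
  Factory factory_;
  mutable std::unique_ptr<ToyDecoder> decoder_;  // Filled in lazily.
};
```

Calling `GetDecoder()` twice on the same object invokes the factory once and returns the same pointer both times, which matches the behaviour described above.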

NetEqImpl::InsertPacket

    int NetEqImpl::InsertPacket(const RTPHeader& rtp_header,
                                rtc::ArrayView<const uint8_t> payload) {
      rtc::MsanCheckInitialized(payload);
      TRACE_EVENT0("webrtc", "NetEqImpl::InsertPacket");
      MutexLock lock(&mutex_);
      if (InsertPacketInternal(rtp_header, payload) != 0) {
        return kFail;
      }
      return kOK;
    }

NetEqImpl::InsertPacketInternal

    int NetEqImpl::InsertPacketInternal(const RTPHeader& rtp_header,
                                        rtc::ArrayView<const uint8_t> payload) {
      if (payload.empty()) {
        RTC_LOG_F(LS_ERROR) << "payload is empty";
        return kInvalidPointer;
      }
      int64_t receive_time_ms = clock_->TimeInMilliseconds();
      stats_->ReceivedPacket();
      PacketList packet_list;
      // Insert packet in a packet list.
      packet_list.push_back([&rtp_header, &payload, &receive_time_ms] {
        // Convert to Packet.
        Packet packet;
        packet.payload_type = rtp_header.payloadType;
        packet.sequence_number = rtp_header.sequenceNumber;
        packet.timestamp = rtp_header.timestamp;
        packet.payload.SetData(payload.data(), payload.size());
        packet.packet_info = RtpPacketInfo(rtp_header, receive_time_ms);
        // Waiting time will be set upon inserting the packet in the buffer.
        RTC_DCHECK(!packet.waiting_time);
        return packet;
      }());
      ******
    }

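The main step here is to push an entry onto packet_list. Note that the lambda is invoked immediately, so what gets stored is the Packet it returns (built from the RTP header and payload), not a deferred task. The entries are then read back from the list and processed.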
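The `push_back([...] { ... }())` form is an immediately invoked lambda: the trailing `()` runs the lambda on the spot and its return value is what gets appended. A minimal sketch of the idiom follows, assuming the list behaves like a `std::list`. `ToyPacket`, `ToyPacketList` and `AppendPacket` are hypothetical names, and the `assert` replaces the error return the real function uses for an empty payload.

```cpp
#include <cassert>
#include <cstdint>
#include <list>
#include <vector>

// Hypothetical, reduced version of NetEq's Packet.
struct ToyPacket {
  int payload_type = 0;
  std::uint16_t sequence_number = 0;
  std::uint32_t timestamp = 0;
  std::vector<std::uint8_t> payload;
};

using ToyPacketList = std::list<ToyPacket>;

// Builds a packet inside an immediately invoked lambda and appends the
// resulting object (not the lambda itself) to the list.
void AppendPacket(ToyPacketList& list,
                  int payload_type,
                  std::uint16_t sequence_number,
                  std::uint32_t timestamp,
                  const std::vector<std::uint8_t>& payload) {
  assert(!payload.empty());  // Sketch-only precondition.
  list.push_back([&] {
    ToyPacket packet;
    packet.payload_type = payload_type;
    packet.sequence_number = sequence_number;
    packet.timestamp = timestamp;
    packet.payload = payload;  // Copies the bytes into the packet.
    return packet;
  }());  // The trailing () runs the lambda right here.
}
```

The idiom keeps the temporary `packet` variable scoped to the lambda, so nothing half-initialized leaks into the enclosing function.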