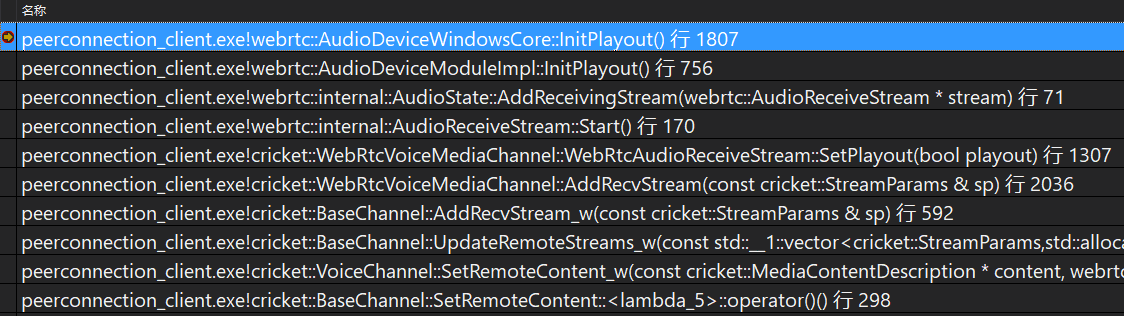

This analysis continues from <7-13 Opening the Playout Device>.

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\audio_device\win\audio_device_core_win.cc

AudioDeviceWindowsCore::InitPlayout

    int32_t AudioDeviceWindowsCore::InitPlayout() {
      MutexLock lock(&mutex_);

      if (_playing) {
        return -1;
      }

      if (_playIsInitialized) {
        return 0;
      }

      if (_ptrDeviceOut == NULL) {
        return -1;
      }

      // Initialize the speaker (devices might have been added or removed)
      if (InitSpeakerLocked() == -1) {
        RTC_LOG(LS_WARNING) << "InitSpeaker() failed";
      }

      // Ensure that the updated rendering endpoint device is valid
      if (_ptrDeviceOut == NULL) {
        return -1;
      }

      if (_builtInAecEnabled && _recIsInitialized) {
        // Ensure the correct render device is configured in case
        // InitRecording() was called before InitPlayout().
        if (SetDMOProperties() == -1) {
          return -1;
        }
      }

      HRESULT hr = S_OK;
      WAVEFORMATEX* pWfxOut = NULL;
      WAVEFORMATEX Wfx = WAVEFORMATEX();
      WAVEFORMATEX* pWfxClosestMatch = NULL;

      // Create COM object with IAudioClient interface.
      SAFE_RELEASE(_ptrClientOut);
      hr = _ptrDeviceOut->Activate(__uuidof(IAudioClient), CLSCTX_ALL, NULL,
                                   (void**)&_ptrClientOut);
      EXIT_ON_ERROR(hr);

      // Get the parameters (mix format) supported by the speaker.
      // Retrieve the stream format that the audio engine uses for its internal
      // processing (mixing) of shared-mode streams.
      hr = _ptrClientOut->GetMixFormat(&pWfxOut);
      if (SUCCEEDED(hr)) {
        ***
      }

      // Build the format we want to output.
      // Set wave format
      Wfx.wFormatTag = WAVE_FORMAT_PCM;
      Wfx.wBitsPerSample = 16;
      Wfx.cbSize = 0;

      const int freqs[] = {48000, 44100, 16000, 96000, 32000, 8000};
      hr = S_FALSE;

      // Find a value from freqs that is supported in shared mode.
      // Iterate over frequencies and channels, in order of priority
      for (unsigned int freq = 0; freq < sizeof(freqs) / sizeof(freqs[0]); freq++) {
        // _playChannelsPrioList has two members: one mono, one stereo.
        for (unsigned int chan = 0;
             chan < sizeof(_playChannelsPrioList) / sizeof(_playChannelsPrioList[0]);
             chan++) {
          Wfx.nChannels = _playChannelsPrioList[chan];
          Wfx.nSamplesPerSec = freqs[freq];
          Wfx.nBlockAlign = Wfx.nChannels * Wfx.wBitsPerSample / 8;
          Wfx.nAvgBytesPerSec = Wfx.nSamplesPerSec * Wfx.nBlockAlign;
          // If the method succeeds and the audio endpoint device supports the
          // specified stream format, it returns S_OK. If the method succeeds and
          // provides a closest match to the specified format, it returns S_FALSE.
          // Check whether the system supports shared mode at this sample rate.
          hr = _ptrClientOut->IsFormatSupported(AUDCLNT_SHAREMODE_SHARED, &Wfx,
                                                &pWfxClosestMatch);
          if (hr == S_OK) {
            break;
          } else {
            ****
          }
        }
        if (hr == S_OK)
          break;
      }

      // TODO(andrew): what happens in the event of failure in the above loop?
      // Is _ptrClientOut->Initialize expected to fail?
      // Same in InitRecording().
      if (hr == S_OK) {
        _playAudioFrameSize = Wfx.nBlockAlign;
        // Block size is the number of samples each channel in 10ms.
        _playBlockSize = Wfx.nSamplesPerSec / 100;
        _playSampleRate = Wfx.nSamplesPerSec;
        _devicePlaySampleRate =
            Wfx.nSamplesPerSec;  // The device itself continues to run at 44.1 kHz.
        _devicePlayBlockSize = Wfx.nSamplesPerSec / 100;
        _playChannels = Wfx.nChannels;
        ***
      }

      // Create a rendering stream.
      //
      // ****************************************************************************
      // For a shared-mode stream that uses event-driven buffering, the caller must
      // set both hnsPeriodicity and hnsBufferDuration to 0. The Initialize method
      // determines how large a buffer to allocate based on the scheduling period
      // of the audio engine. Although the client's buffer processing thread is
      // event driven, the basic buffer management process, as described previously,
      // is unaltered.
      // Each time the thread awakens, it should call
      // IAudioClient::GetCurrentPadding to determine how much data to write to a
      // rendering buffer or read from a capture buffer. In contrast to the two
      // buffers that the Initialize method allocates for an exclusive-mode stream
      // that uses event-driven buffering, a shared-mode stream requires a single
      // buffer.
      // ****************************************************************************

      // Specify the minimum speaker buffer size.
      REFERENCE_TIME hnsBufferDuration =
          0;  // ask for minimum buffer size (default)
      if (_devicePlaySampleRate == 44100) {
        // Ask for a larger buffer size (30ms) when using 44.1kHz as render rate.
        // There seems to be a larger risk of underruns for 44.1 compared
        // with the default rate (48kHz). When using default, we set the requested
        // buffer duration to 0, which sets the buffer to the minimum size
        // required by the engine thread. The actual buffer size can then be
        // read by GetBufferSize() and it is 20ms on most machines.
        hnsBufferDuration = 30 * 10000;
      }
      hr = _ptrClientOut->Initialize(
          AUDCLNT_SHAREMODE_SHARED,  // share Audio Engine with other applications
          AUDCLNT_STREAMFLAGS_EVENTCALLBACK,  // processing of the audio buffer by
                                              // the client will be event driven
          hnsBufferDuration,  // requested buffer capacity as a time value (in
                              // 100-nanosecond units)
          0,                  // periodicity
          &Wfx,               // selected wave format
          NULL);              // session GUID

      if (FAILED(hr)) {
        RTC_LOG(LS_ERROR) << "IAudioClient::Initialize() failed:";
      }
      EXIT_ON_ERROR(hr);

      // The audio to be played by the speaker is held in _ptrAudioBuffer.
      if (_ptrAudioBuffer) {
        // Update the audio buffer with the selected parameters
        _ptrAudioBuffer->SetPlayoutSampleRate(_playSampleRate);
        _ptrAudioBuffer->SetPlayoutChannels((uint8_t)_playChannels);
      } else {
        ***
      }

      // Get the actual size of the shared (endpoint buffer).
      // Typical value is 960 audio frames <=> 20ms @ 48kHz sample rate.
      UINT bufferFrameCount(0);
      hr = _ptrClientOut->GetBufferSize(&bufferFrameCount);
      if (SUCCEEDED(hr)) {
        RTC_LOG(LS_VERBOSE) << "IAudioClient::GetBufferSize() => "
                            << bufferFrameCount << " (<=> "
                            << bufferFrameCount * _playAudioFrameSize << " bytes)";
      }

      // Set the event handle that the system signals when an audio buffer is ready
      // to be processed by the client.
      hr = _ptrClientOut->SetEventHandle(_hRenderSamplesReadyEvent);
      EXIT_ON_ERROR(hr);

      // Get an IAudioRenderClient interface.
      SAFE_RELEASE(_ptrRenderClient);
      hr = _ptrClientOut->GetService(__uuidof(IAudioRenderClient),
                                     (void**)&_ptrRenderClient);
      EXIT_ON_ERROR(hr);

      // Mark playout side as initialized
      _playIsInitialized = true;
      *****
    }
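
The format arithmetic and the priority search in `InitPlayout` can be tried out without a Windows audio device. The following is only a minimal, portable sketch of that logic, not the WebRTC implementation. `PcmFormat`, `MakePcmFormat`, `SelectFormat`, `FramesPer10Ms`, and `MsToReferenceTime` are hypothetical names introduced for illustration. The real code asks `IAudioClient::IsFormatSupported` whether a format is supported; here that call is replaced by a caller-supplied predicate, which is an assumption made so the example can run anywhere.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical stand-in for the WAVEFORMATEX fields that InitPlayout() fills in.
struct PcmFormat {
  uint16_t channels;           // like nChannels
  uint32_t samples_per_sec;    // like nSamplesPerSec
  uint16_t bits_per_sample;    // like wBitsPerSample
  uint16_t block_align;        // like nBlockAlign: bytes per frame, all channels
  uint32_t avg_bytes_per_sec;  // like nAvgBytesPerSec
};

// Derives block_align and avg_bytes_per_sec the same way the source does.
PcmFormat MakePcmFormat(uint16_t channels, uint32_t rate, uint16_t bits) {
  assert(bits % 8 == 0);  // PCM samples are assumed to be whole bytes
  PcmFormat f{};
  f.channels = channels;
  f.samples_per_sec = rate;
  f.bits_per_sample = bits;
  f.block_align = static_cast<uint16_t>(channels * bits / 8);
  f.avg_bytes_per_sec = rate * f.block_align;
  return f;
}

// Assumption: replaces IAudioClient::IsFormatSupported() returning S_OK.
using SupportCheck = std::function<bool(const PcmFormat&)>;

// Tries rates in priority order and, for each rate, channel counts in
// priority order. Writes the first supported 16-bit format to *out.
bool SelectFormat(const std::vector<uint32_t>& rates,
                  const std::vector<uint16_t>& channels,
                  const SupportCheck& is_supported,
                  PcmFormat* out) {
  for (uint32_t rate : rates) {
    for (uint16_t ch : channels) {
      PcmFormat candidate = MakePcmFormat(ch, rate, 16);
      if (is_supported(candidate)) {
        *out = candidate;
        return true;
      }
    }
  }
  return false;  // mirrors the TODO in the source: no format matched
}

// Number of samples per channel in one 10 ms block.
uint32_t FramesPer10Ms(uint32_t rate) { return rate / 100; }

// REFERENCE_TIME is expressed in 100-nanosecond units: 1 ms == 10000 units.
int64_t MsToReferenceTime(int64_t ms) { return ms * 10000; }
```

For example, 16-bit stereo at 48 kHz gives a 4-byte frame and 480 frames per 10 ms block, so the typical 960-frame endpoint buffer mentioned in the source holds 20 ms, or 3840 bytes. The 30 ms request used for 44.1 kHz corresponds to `MsToReferenceTime(30)`, the same value as `30 * 10000` in the source.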