The buffer types

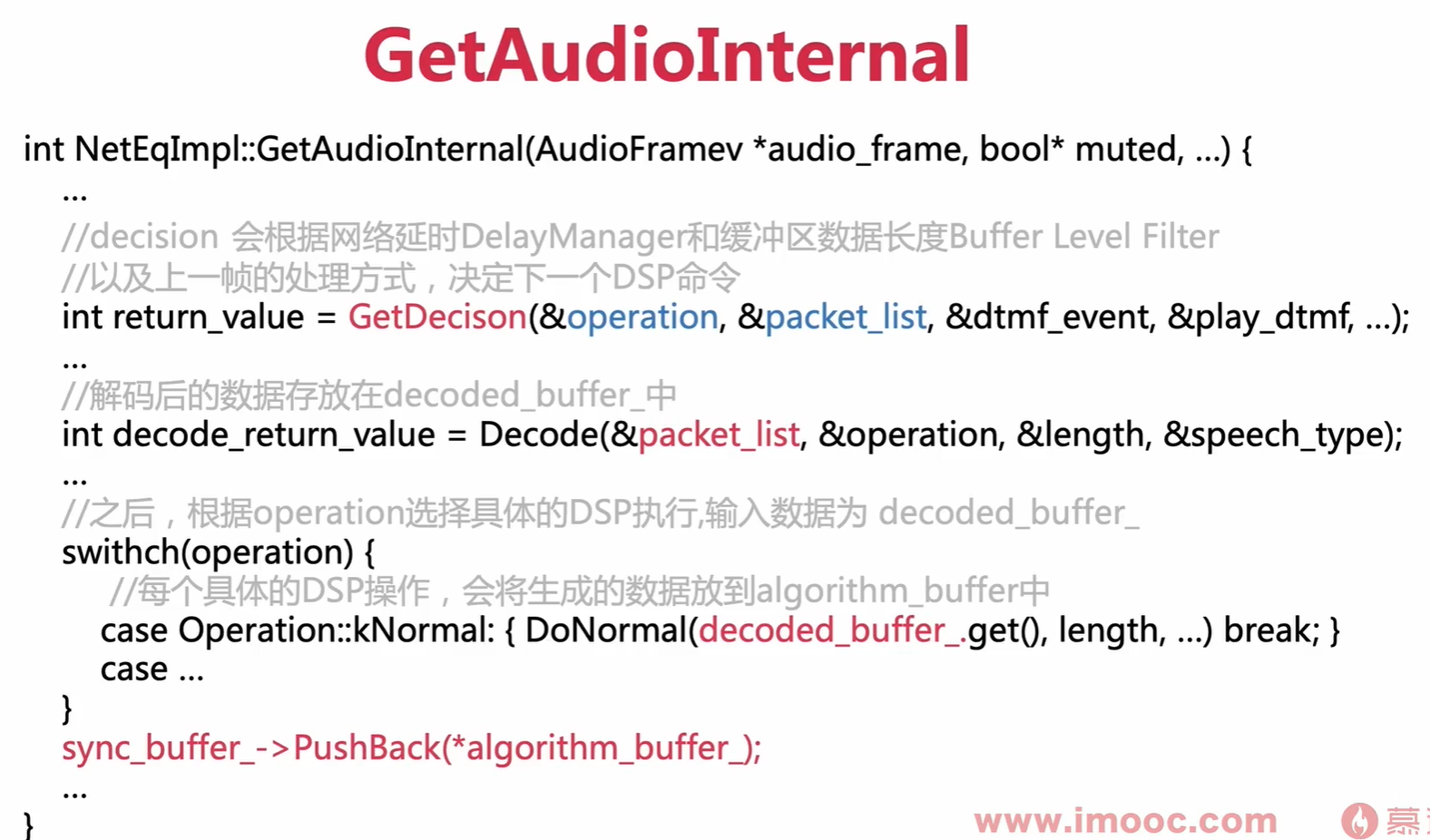

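Packets in packet_buffer are decoded into decoded_buffer. The data in decoded_buffer is run through DSP processing and the result is stored in algorithm_buffer. The samples in algorithm_buffer are then copied into sync_buffer.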
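The buffer chain described above can be pictured with a small, self-contained C++ sketch. This is a toy model and not the WebRTC code: `ToyNetEq`, `ToyPacket`, and their methods are hypothetical names, "decoding" is assumed to be a plain copy of payloads that already hold PCM samples, and the DSP step is assumed to be a pass-through that pads with zeros when too little data is available.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <utility>
#include <vector>

// Hypothetical stand-in for a received packet; the payload is assumed to be
// PCM already, so "decoding" is only a copy.
struct ToyPacket {
  uint32_t timestamp;
  std::vector<int16_t> payload;
};

// Hypothetical, heavily simplified model of the four-buffer chain.
class ToyNetEq {
 public:
  explicit ToyNetEq(size_t output_size_samples)
      : output_size_samples_(output_size_samples) {}

  void InsertPacket(ToyPacket packet) {
    packet_buffer_.push_back(std::move(packet));
  }

  // Produces one output frame of output_size_samples_ samples.
  std::vector<int16_t> GetAudio() {
    // 1. packet_buffer -> decoded_buffer.
    decoded_buffer_.clear();
    if (!packet_buffer_.empty()) {
      decoded_buffer_ = std::move(packet_buffer_.front().payload);
      packet_buffer_.pop_front();
    }
    // 2. decoded_buffer -> algorithm_buffer. The "DSP" here is a pass-through;
    //    zeros are appended if the output frame could not be filled otherwise.
    algorithm_buffer_ = decoded_buffer_;
    const size_t available = sync_buffer_.size() + algorithm_buffer_.size();
    if (available < output_size_samples_) {
      algorithm_buffer_.resize(
          algorithm_buffer_.size() + (output_size_samples_ - available), 0);
    }
    // 3. algorithm_buffer -> sync_buffer.
    sync_buffer_.insert(sync_buffer_.end(), algorithm_buffer_.begin(),
                        algorithm_buffer_.end());
    // 4. sync_buffer -> output frame.
    assert(sync_buffer_.size() >= output_size_samples_);
    const auto frame_end =
        sync_buffer_.begin() + static_cast<std::ptrdiff_t>(output_size_samples_);
    std::vector<int16_t> frame(sync_buffer_.begin(), frame_end);
    sync_buffer_.erase(sync_buffer_.begin(), frame_end);
    return frame;
  }

  size_t SyncBufferSize() const { return sync_buffer_.size(); }

 private:
  const size_t output_size_samples_;
  std::deque<ToyPacket> packet_buffer_;
  std::vector<int16_t> decoded_buffer_;
  std::vector<int16_t> algorithm_buffer_;
  std::deque<int16_t> sync_buffer_;
};
```

In this sketch, samples that do not fit into the current output frame stay in the sync buffer and are played out at the start of the next call, which is the role sync_buffer plays as the final staging area before output.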

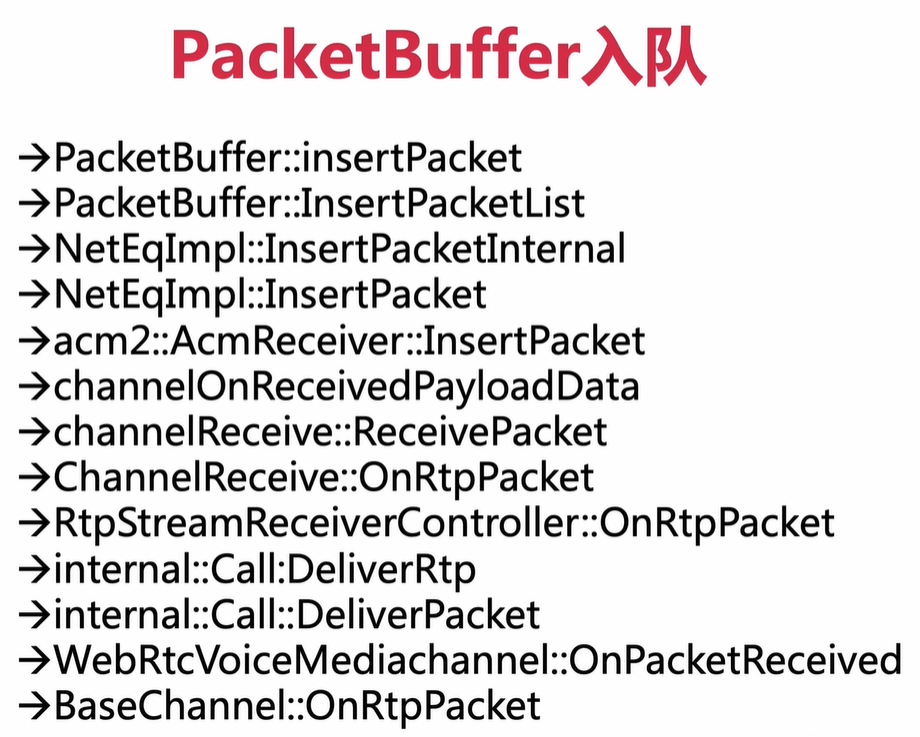

PacketBuffer enqueue

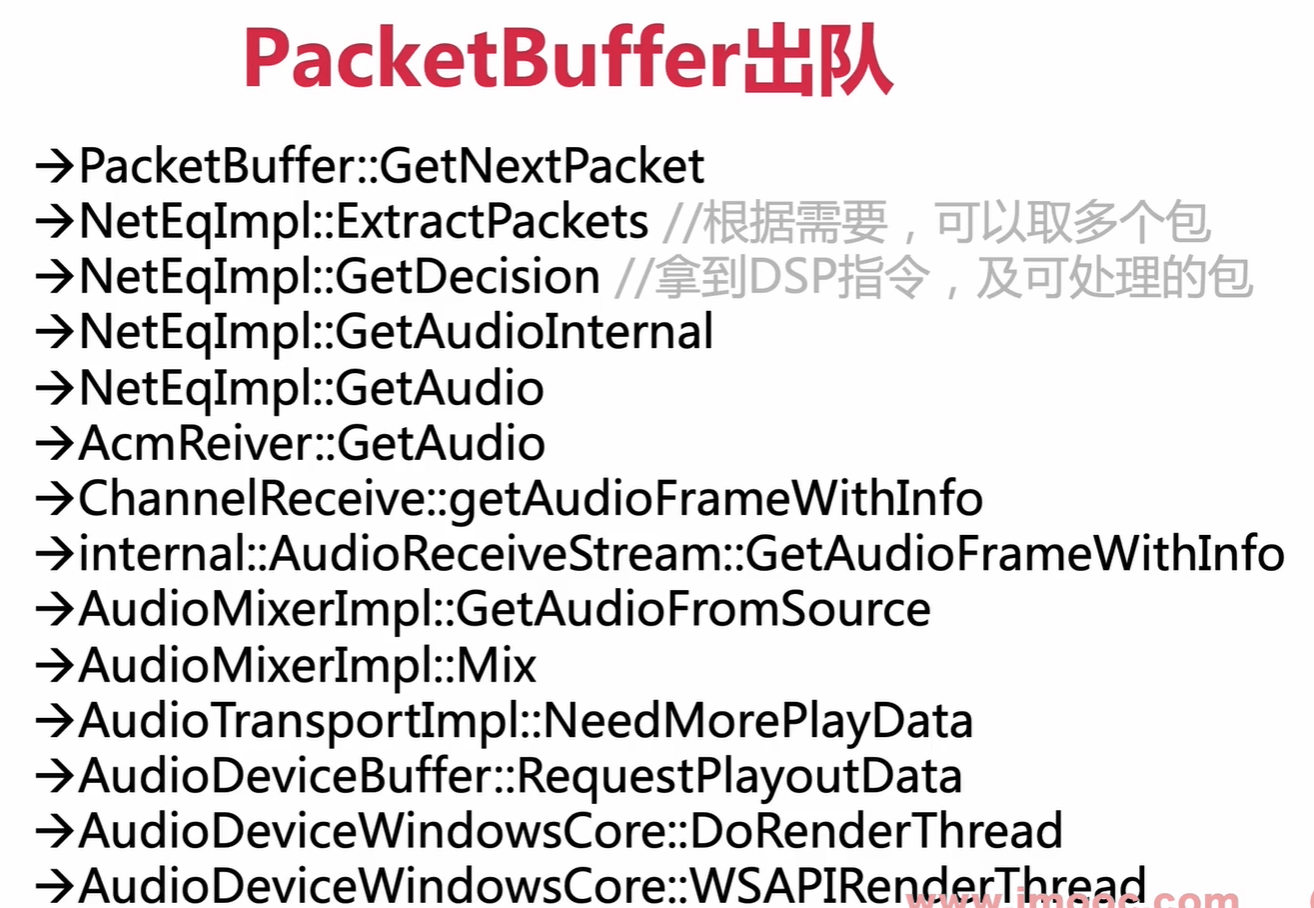

PacketBuffer dequeue
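As a rough illustration of the enqueue and dequeue operations, the sketch below keeps packets in a list ordered by RTP timestamp and always hands out the oldest one. It is a simplified model under stated assumptions, not the actual `PacketBuffer` class: `QueuedPacket`, `ToyPacketBuffer`, `Insert`, and `PopNext` are hypothetical names, and concerns such as duplicate packets and buffer overflow are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <list>

// Hypothetical packet record used only for this illustration.
struct QueuedPacket {
  uint32_t timestamp;
  int payload_id;
};

// True if timestamp a is strictly newer than b, allowing for 32-bit
// wrap-around (assumes two's complement conversion to int32_t).
inline bool IsNewer(uint32_t a, uint32_t b) {
  return static_cast<int32_t>(a - b) > 0;
}

class ToyPacketBuffer {
 public:
  // Enqueue: keep the list sorted oldest-first. The search starts from the
  // back because packets usually arrive in order.
  void Insert(const QueuedPacket& packet) {
    auto rit = buffer_.rbegin();
    while (rit != buffer_.rend() && IsNewer(rit->timestamp, packet.timestamp)) {
      ++rit;
    }
    // rit.base() is the position just after the first element that is not
    // newer than the incoming packet.
    buffer_.insert(rit.base(), packet);
  }

  // Dequeue: remove the oldest packet. Returns false if the buffer is empty.
  bool PopNext(QueuedPacket* out) {
    assert(out != nullptr);
    if (buffer_.empty()) {
      return false;
    }
    *out = buffer_.front();
    buffer_.pop_front();
    return true;
  }

  size_t Size() const { return buffer_.size(); }

 private:
  std::list<QueuedPacket> buffer_;
};
```

With this model, packets inserted out of order (for example timestamps 960, 0, 480) are still popped in timestamp order.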

NetEqImpl::GetAudioInternal

h:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\audio_coding\neteq\neteq_impl.cc

```cpp
int NetEqImpl::GetAudioInternal(AudioFrame* audio_frame,
                                bool* muted,
                                absl::optional<Operation> action_override) {
  PacketList packet_list;
  DtmfEvent dtmf_event;
  Operation operation;
  bool play_dtmf;
  *muted = false;
  last_decoded_timestamps_.clear();
  last_decoded_packet_infos_.clear();
  tick_timer_->Increment();
  stats_->IncreaseCounter(output_size_samples_, fs_hz_);
  const auto lifetime_stats = stats_->GetLifetimeStatistics();
  expand_uma_logger_.UpdateSampleCounter(lifetime_stats.concealed_samples,
                                         fs_hz_);
  speech_expand_uma_logger_.UpdateSampleCounter(
      lifetime_stats.concealed_samples -
          lifetime_stats.silent_concealed_samples,
      fs_hz_);

  // Check for muted state.
  if (enable_muted_state_ && expand_->Muted() && packet_buffer_->Empty()) {
    RTC_DCHECK_EQ(last_mode_, Mode::kExpand);
    audio_frame->Reset();
    RTC_DCHECK(audio_frame->muted());  // Reset() should mute the frame.
    playout_timestamp_ += static_cast<uint32_t>(output_size_samples_);
    audio_frame->sample_rate_hz_ = fs_hz_;
    audio_frame->samples_per_channel_ = output_size_samples_;
    audio_frame->timestamp_ =
        first_packet_
            ? 0
            : timestamp_scaler_->ToExternal(playout_timestamp_) -
                  static_cast<uint32_t>(audio_frame->samples_per_channel_);
    audio_frame->num_channels_ = sync_buffer_->Channels();
    stats_->ExpandedNoiseSamples(output_size_samples_, false);
    controller_->NotifyMutedState();
    *muted = true;
    return 0;
  }

  int return_value = GetDecision(&operation, &packet_list, &dtmf_event,
                                 &play_dtmf, action_override);
  if (return_value != 0) {
    last_mode_ = Mode::kError;
    return return_value;
  }

  AudioDecoder::SpeechType speech_type;
  int length = 0;
  const size_t start_num_packets = packet_list.size();
  int decode_return_value =
      Decode(&packet_list, &operation, &length, &speech_type);

  assert(vad_.get());
  bool sid_frame_available =
      (operation == Operation::kRfc3389Cng && !packet_list.empty());
  vad_->Update(decoded_buffer_.get(), static_cast<size_t>(length), speech_type,
               sid_frame_available, fs_hz_);

  // This is the criterion that we did decode some data through the speech
  // decoder, and the operation resulted in comfort noise.
  const bool codec_internal_sid_frame =
      (speech_type == AudioDecoder::kComfortNoise &&
       start_num_packets > packet_list.size());

  if (sid_frame_available || codec_internal_sid_frame) {
    // Start a new stopwatch since we are decoding a new CNG packet.
    generated_noise_stopwatch_ = tick_timer_->GetNewStopwatch();
  }

  algorithm_buffer_->Clear();
  switch (operation) {
    case Operation::kNormal: {
      DoNormal(decoded_buffer_.get(), length, speech_type, play_dtmf);
      if (length > 0) {
        stats_->DecodedOutputPlayed();
      }
      break;
    }
    case Operation::kMerge: {
      DoMerge(decoded_buffer_.get(), length, speech_type, play_dtmf);
      break;
    }
    case Operation::kExpand: {
      RTC_DCHECK_EQ(return_value, 0);
      if (!current_rtp_payload_type_ || !DoCodecPlc()) {
        return_value = DoExpand(play_dtmf);
      }
      RTC_DCHECK_GE(sync_buffer_->FutureLength() - expand_->overlap_length(),
                    output_size_samples_);
      break;
    }
    case Operation::kAccelerate:
    case Operation::kFastAccelerate: {
      const bool fast_accelerate =
          enable_fast_accelerate_ && (operation == Operation::kFastAccelerate);
      return_value = DoAccelerate(decoded_buffer_.get(), length, speech_type,
                                  play_dtmf, fast_accelerate);
      break;
    }
    case Operation::kPreemptiveExpand: {
      return_value = DoPreemptiveExpand(decoded_buffer_.get(), length,
                                        speech_type, play_dtmf);
      break;
    }
    case Operation::kRfc3389Cng:
    case Operation::kRfc3389CngNoPacket: {
      return_value = DoRfc3389Cng(&packet_list, play_dtmf);
      break;
    }
    case Operation::kCodecInternalCng: {
      // This handles the case when there is no transmission and the decoder
      // should produce internal comfort noise.
      // TODO(hlundin): Write test for codec-internal CNG.
      DoCodecInternalCng(decoded_buffer_.get(), length);
      break;
    }
    case Operation::kDtmf: {
      // TODO(hlundin): Write test for this.
      return_value = DoDtmf(dtmf_event, &play_dtmf);
      break;
    }
    case Operation::kUndefined: {
      RTC_LOG(LS_ERROR) << "Invalid operation kUndefined.";
      assert(false);  // This should not happen.
      last_mode_ = Mode::kError;
      return kInvalidOperation;
    }
  }  // End of switch.
  last_operation_ = operation;
  if (return_value < 0) {
    return return_value;
  }

  if (last_mode_ != Mode::kRfc3389Cng) {
    comfort_noise_->Reset();
  }

  // We treat it as if all packets referenced to by |last_decoded_packet_infos_|
  // were mashed together when creating the samples in |algorithm_buffer_|.
  RtpPacketInfos packet_infos(last_decoded_packet_infos_);

  // Copy samples from |algorithm_buffer_| to |sync_buffer_|.
  //
  // TODO(bugs.webrtc.org/10757):
  //   We would in the future also like to pass |packet_infos| so that we can do
  //   sample-perfect tracking of that information across |sync_buffer_|.
  sync_buffer_->PushBack(*algorithm_buffer_);

  // Extract data from |sync_buffer_| to |output|.
  size_t num_output_samples_per_channel = output_size_samples_;
  size_t num_output_samples = output_size_samples_ * sync_buffer_->Channels();
  if (num_output_samples > AudioFrame::kMaxDataSizeSamples) {
    RTC_LOG(LS_WARNING) << "Output array is too short. "
                        << AudioFrame::kMaxDataSizeSamples << " < "
                        << output_size_samples_ << " * "
                        << sync_buffer_->Channels();
    num_output_samples = AudioFrame::kMaxDataSizeSamples;
    num_output_samples_per_channel =
        AudioFrame::kMaxDataSizeSamples / sync_buffer_->Channels();
  }
  sync_buffer_->GetNextAudioInterleaved(num_output_samples_per_channel,
                                        audio_frame);
  audio_frame->sample_rate_hz_ = fs_hz_;
  // TODO(bugs.webrtc.org/10757):
  //   We don't have the ability to properly track individual packets once their
  //   audio samples have entered |sync_buffer_|. So for now, treat it as if
  //   |packet_infos| from packets decoded by the current |GetAudioInternal()|
  //   call were all consumed assembling the current audio frame and the current
  //   audio frame only.
  audio_frame->packet_infos_ = std::move(packet_infos);
  if (sync_buffer_->FutureLength() < expand_->overlap_length()) {
    // The sync buffer should always contain |overlap_length| samples, but now
    // too many samples have been extracted. Reinstall the |overlap_length|
    // lookahead by moving the index.
    const size_t missing_lookahead_samples =
        expand_->overlap_length() - sync_buffer_->FutureLength();
    RTC_DCHECK_GE(sync_buffer_->next_index(), missing_lookahead_samples);
    sync_buffer_->set_next_index(sync_buffer_->next_index() -
                                 missing_lookahead_samples);
  }
  if (audio_frame->samples_per_channel_ != output_size_samples_) {
    RTC_LOG(LS_ERROR) << "audio_frame->samples_per_channel_ ("
                      << audio_frame->samples_per_channel_
                      << ") != output_size_samples_ (" << output_size_samples_
                      << ")";
    // TODO(minyue): treatment of under-run, filling zeros
    audio_frame->Mute();
    return kSampleUnderrun;
  }

  // Should always have overlap samples left in the |sync_buffer_|.
  RTC_DCHECK_GE(sync_buffer_->FutureLength(), expand_->overlap_length());

  // TODO(yujo): For muted frames, this can be a copy rather than an addition.
  if (play_dtmf) {
    return_value = DtmfOverdub(dtmf_event, sync_buffer_->Channels(),
                               audio_frame->mutable_data());
  }

  // Update the background noise parameters if last operation wrote data
  // straight from the decoder to the |sync_buffer_|. That is, none of the
  // operations that modify the signal can be followed by a parameter update.
  if ((last_mode_ == Mode::kNormal) || (last_mode_ == Mode::kAccelerateFail) ||
      (last_mode_ == Mode::kPreemptiveExpandFail) ||
      (last_mode_ == Mode::kRfc3389Cng) ||
      (last_mode_ == Mode::kCodecInternalCng)) {
    background_noise_->Update(*sync_buffer_, *vad_.get());
  }

  if (operation == Operation::kDtmf) {
    // DTMF data was written the end of |sync_buffer_|.
    // Update index to end of DTMF data in |sync_buffer_|.
    sync_buffer_->set_dtmf_index(sync_buffer_->Size());
  }

  if (last_mode_ != Mode::kExpand && last_mode_ != Mode::kCodecPlc) {
    // If last operation was not expand, calculate the |playout_timestamp_| from
    // the |sync_buffer_|. However, do not update the |playout_timestamp_| if it
    // would be moved "backwards".
    uint32_t temp_timestamp =
        sync_buffer_->end_timestamp() -
        static_cast<uint32_t>(sync_buffer_->FutureLength());
    if (static_cast<int32_t>(temp_timestamp - playout_timestamp_) > 0) {
      playout_timestamp_ = temp_timestamp;
    }
  } else {
    // Use dead reckoning to estimate the |playout_timestamp_|.
    playout_timestamp_ += static_cast<uint32_t>(output_size_samples_);
  }
  // Set the timestamp in the audio frame to zero before the first packet has
  // been inserted. Otherwise, subtract the frame size in samples to get the
  // timestamp of the first sample in the frame (playout_timestamp_ is the
  // last + 1).
  audio_frame->timestamp_ =
      first_packet_
          ? 0
          : timestamp_scaler_->ToExternal(playout_timestamp_) -
                static_cast<uint32_t>(audio_frame->samples_per_channel_);

  if (!(last_mode_ == Mode::kRfc3389Cng ||
        last_mode_ == Mode::kCodecInternalCng || last_mode_ == Mode::kExpand ||
        last_mode_ == Mode::kCodecPlc)) {
    generated_noise_stopwatch_.reset();
  }

  if (decode_return_value)
    return decode_return_value;
  return return_value;
}
```
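One detail in the listing that is easy to miss is the check `static_cast<int32_t>(temp_timestamp - playout_timestamp_) > 0`, which prevents the playout timestamp from moving backwards even when the 32-bit RTP timestamp wraps around. The standalone sketch below isolates that comparison. The helper names `IsAhead`, `AdvancePlayoutTimestamp`, and `CheckWrapAroundExamples` are hypothetical and do not exist in WebRTC; the conversion to `int32_t` is assumed to be two's complement, which holds on common platforms and is guaranteed from C++20.

```cpp
#include <cassert>
#include <cstdint>

// True if `candidate` is strictly ahead of `current` modulo 2^32. The unsigned
// subtraction wraps, and reinterpreting the difference as signed tells us
// which of the two values is "later" as long as they are less than 2^31 apart.
inline bool IsAhead(uint32_t candidate, uint32_t current) {
  return static_cast<int32_t>(candidate - current) > 0;
}

// Mirrors the update rule in the listing: only ever move forward.
inline uint32_t AdvancePlayoutTimestamp(uint32_t current, uint32_t candidate) {
  return IsAhead(candidate, current) ? candidate : current;
}

// Small usage example (hypothetical helper, for illustration only).
inline void CheckWrapAroundExamples() {
  // Ordinary case, no wrap-around.
  assert(IsAhead(100u, 50u));
  assert(!IsAhead(50u, 100u));
  // 5 comes 21 ticks after 0xFFFFFFF0 once the counter has wrapped.
  assert(IsAhead(5u, 0xFFFFFFF0u));
  assert(!IsAhead(0xFFFFFFF0u, 5u));
}
```

A plain `candidate > current` comparison would give the wrong answer in the wrapped case, which is why the code subtracts first and then inspects the sign of the difference.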