Preface

Source Code Analysis

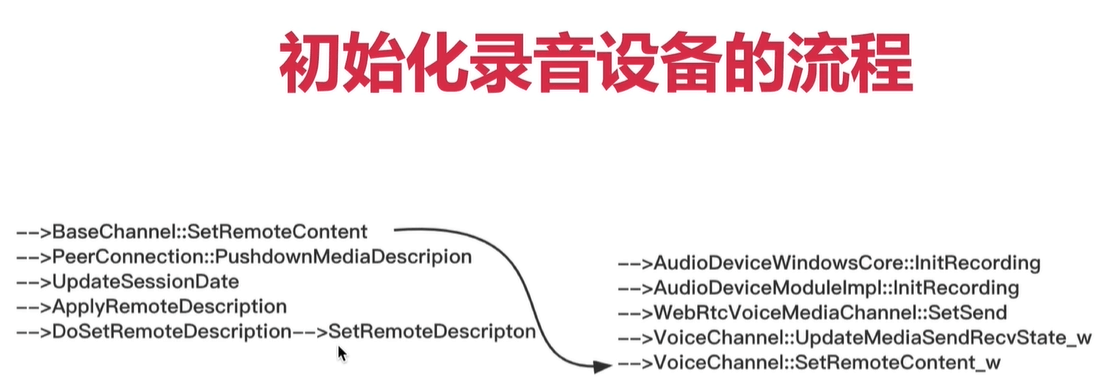

WebRtcVoiceMediaChannel::SetSend

h:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\media\engine\webrtc_voice_engine.cc

    void WebRtcVoiceMediaChannel::SetSend(bool send) {
      // *** (elided)

      // Apply channel specific options, and initialize the ADM for recording (this
      // may take time on some platforms, e.g. Android).
      if (send) {
        engine()->ApplyOptions(options_);

        // InitRecording() may return an error if the ADM is already recording.
        if (!engine()->adm()->RecordingIsInitialized() &&
            !engine()->adm()->Recording()) {
          if (engine()->adm()->InitRecording() != 0) {
            RTC_LOG(LS_WARNING) << "Failed to initialize recording";
          }
        }
      }

      // Change the settings on each send channel.
      for (auto& kv : send_streams_) {
        kv.second->SetSend(send);
      }

      send_ = send;
    }
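The guard around `InitRecording()` in `SetSend` can be isolated into a small sketch. `FakeAdm` and `MaybeInitRecording` are hypothetical names used only for illustration; they are not part of WebRTC, and the real ADM does considerably more work.

```cpp
#include <cassert>

// Hypothetical stand-in for the audio device module (ADM). Only the three
// calls that SetSend() uses are modeled.
struct FakeAdm {
  bool initialized = false;
  bool recording = false;
  int init_calls = 0;

  bool RecordingIsInitialized() const { return initialized; }
  bool Recording() const { return recording; }
  int InitRecording() {
    ++init_calls;
    initialized = true;
    return 0;
  }
};

// Mirrors the guard in SetSend(): recording is initialized only when the ADM
// is neither initialized nor already recording.
// Returns true if InitRecording() was actually invoked.
inline bool MaybeInitRecording(FakeAdm& adm) {
  if (!adm.RecordingIsInitialized() && !adm.Recording()) {
    adm.InitRecording();
    return true;
  }
  return false;
}
```

Calling `MaybeInitRecording` twice on the same object invokes `InitRecording()` only once, which is why repeated `SetSend(true)` calls do not reinitialize the capture device.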

This in turn calls AudioDeviceModuleImpl::InitRecording

    int32_t AudioDeviceModuleImpl::InitRecording() {
      RTC_LOG(INFO) << __FUNCTION__;
      CHECKinitialized_();
      if (RecordingIsInitialized()) {
        return 0;
      }
      int32_t result = audio_device_->InitRecording();
      RTC_LOG(INFO) << "output: " << result;
      RTC_HISTOGRAM_BOOLEAN("WebRTC.Audio.InitRecordingSuccess",
                            static_cast<int>(result == 0));
      return result;
    }

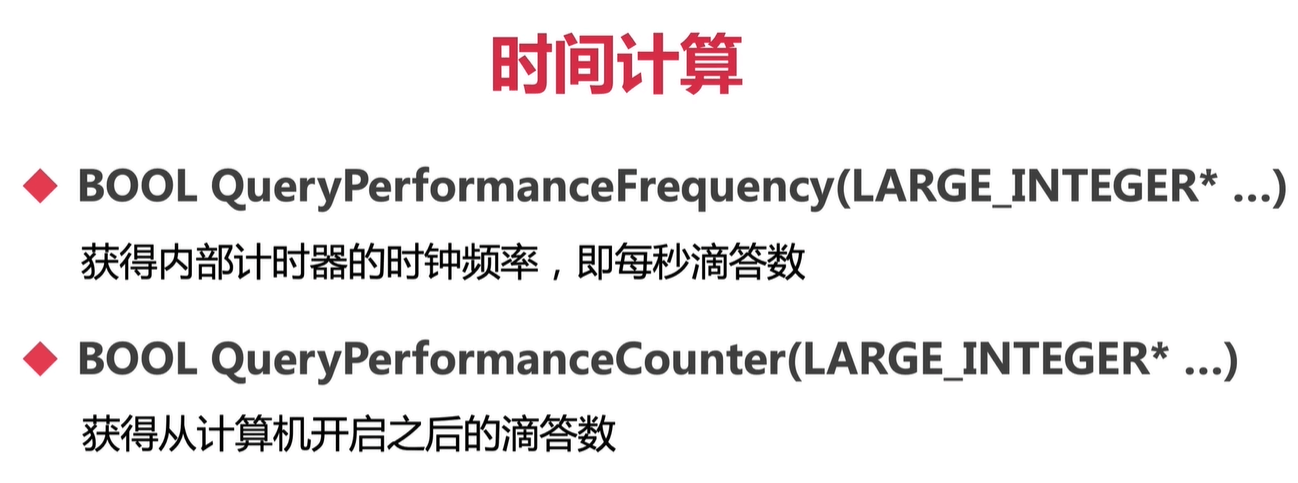

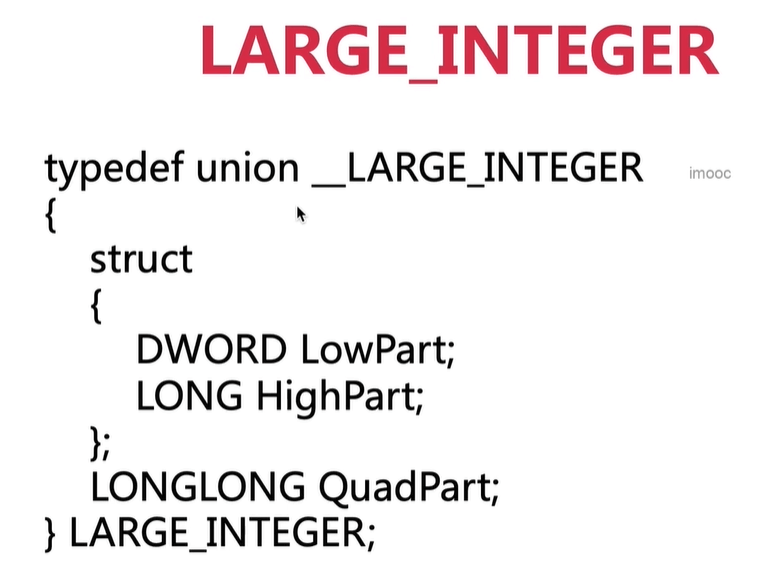

AudioDeviceWindowsCore::InitRecording

    int32_t AudioDeviceWindowsCore::InitRecording() {
      MutexLock lock(&mutex_);

      if (_recording) {
        return -1;
      }

      if (_recIsInitialized) {
        return 0;
      }

      // Query the performance counter frequency (10,000,000 on this machine).
      if (QueryPerformanceFrequency(&_perfCounterFreq) == 0) {
        return -1;
      }
      // The resulting factor is 1.0 on this machine.
      _perfCounterFactor = 10000000.0 / (double)_perfCounterFreq.QuadPart;

      if (_ptrDeviceIn == NULL) {
        return -1;
      }

      // Initialize the microphone (devices might have been added or removed)
      if (InitMicrophoneLocked() == -1) {
        RTC_LOG(LS_WARNING) << "InitMicrophone() failed";
      }

      // Ensure that the updated capturing endpoint device is valid
      if (_ptrDeviceIn == NULL) {
        return -1;
      }

      if (_builtInAecEnabled) {
        // The DMO will configure the capture device.
        return InitRecordingDMO();
      }

      HRESULT hr = S_OK;
      WAVEFORMATEX* pWfxIn = NULL;
      WAVEFORMATEXTENSIBLE Wfx = WAVEFORMATEXTENSIBLE();
      WAVEFORMATEX* pWfxClosestMatch = NULL;

      // Create COM object with IAudioClient interface.
      SAFE_RELEASE(_ptrClientIn);
      hr = _ptrDeviceIn->Activate(__uuidof(IAudioClient), CLSCTX_ALL, NULL,
                                  (void**)&_ptrClientIn);
      EXIT_ON_ERROR(hr);

      // Retrieve the stream format that the audio engine uses for its internal
      // processing (mixing) of shared-mode streams.
      hr = _ptrClientIn->GetMixFormat(&pWfxIn);
      if (SUCCEEDED(hr)) {
        RTC_LOG(LS_VERBOSE) << "Audio Engine's current capturing mix format:";
        // format type
        RTC_LOG(LS_VERBOSE) << "wFormatTag : 0x"
                            << rtc::ToHex(pWfxIn->wFormatTag) << " ("
                            << pWfxIn->wFormatTag << ")";
        // number of channels (i.e. mono, stereo...)
        RTC_LOG(LS_VERBOSE) << "nChannels : " << pWfxIn->nChannels;
        // sample rate
        RTC_LOG(LS_VERBOSE) << "nSamplesPerSec : " << pWfxIn->nSamplesPerSec;
        // for buffer estimation
        RTC_LOG(LS_VERBOSE) << "nAvgBytesPerSec: " << pWfxIn->nAvgBytesPerSec;
        // block size of data
        RTC_LOG(LS_VERBOSE) << "nBlockAlign : " << pWfxIn->nBlockAlign;
        // number of bits per sample of mono data
        RTC_LOG(LS_VERBOSE) << "wBitsPerSample : " << pWfxIn->wBitsPerSample;
        RTC_LOG(LS_VERBOSE) << "cbSize : " << pWfxIn->cbSize;
      }

      // Set wave format
      Wfx.Format.wFormatTag = WAVE_FORMAT_EXTENSIBLE;
      Wfx.Format.wBitsPerSample = 16;
      Wfx.Format.cbSize = 22;
      Wfx.dwChannelMask = 0;
      Wfx.Samples.wValidBitsPerSample = Wfx.Format.wBitsPerSample;
      Wfx.SubFormat = KSDATAFORMAT_SUBTYPE_PCM;

      const int freqs[6] = {48000, 44100, 16000, 96000, 32000, 8000};
      hr = S_FALSE;

      // Iterate over frequencies and channels, in order of priority
      for (unsigned int freq = 0; freq < sizeof(freqs) / sizeof(freqs[0]); freq++) {
        for (unsigned int chan = 0;
             chan < sizeof(_recChannelsPrioList) / sizeof(_recChannelsPrioList[0]);
             chan++) {
          Wfx.Format.nChannels = _recChannelsPrioList[chan];
          Wfx.Format.nSamplesPerSec = freqs[freq];
          Wfx.Format.nBlockAlign =
              Wfx.Format.nChannels * Wfx.Format.wBitsPerSample / 8;
          Wfx.Format.nAvgBytesPerSec =
              Wfx.Format.nSamplesPerSec * Wfx.Format.nBlockAlign;
          // If the method succeeds and the audio endpoint device supports the
          // specified stream format, it returns S_OK. If the method succeeds and
          // provides a closest match to the specified format, it returns S_FALSE.
          hr = _ptrClientIn->IsFormatSupported(
              AUDCLNT_SHAREMODE_SHARED, (WAVEFORMATEX*)&Wfx, &pWfxClosestMatch);
          if (hr == S_OK) {
            break;
          } else {
            if (pWfxClosestMatch) {
              RTC_LOG(INFO) << "nChannels=" << Wfx.Format.nChannels
                            << ", nSamplesPerSec=" << Wfx.Format.nSamplesPerSec
                            << " is not supported. Closest match: "
                               "nChannels="
                            << pWfxClosestMatch->nChannels << ", nSamplesPerSec="
                            << pWfxClosestMatch->nSamplesPerSec;
              CoTaskMemFree(pWfxClosestMatch);
              pWfxClosestMatch = NULL;
            } else {
              RTC_LOG(INFO) << "nChannels=" << Wfx.Format.nChannels
                            << ", nSamplesPerSec=" << Wfx.Format.nSamplesPerSec
                            << " is not supported. No closest match.";
            }
          }
        }
        if (hr == S_OK)
          break;
      }

      if (hr == S_OK) {
        _recAudioFrameSize = Wfx.Format.nBlockAlign;
        _recSampleRate = Wfx.Format.nSamplesPerSec;
        _recBlockSize = Wfx.Format.nSamplesPerSec / 100;
        _recChannels = Wfx.Format.nChannels;

        RTC_LOG(LS_VERBOSE) << "VoE selected this capturing format:";
        RTC_LOG(LS_VERBOSE) << "wFormatTag : 0x"
                            << rtc::ToHex(Wfx.Format.wFormatTag) << " ("
                            << Wfx.Format.wFormatTag << ")";
        RTC_LOG(LS_VERBOSE) << "nChannels : " << Wfx.Format.nChannels;
        RTC_LOG(LS_VERBOSE) << "nSamplesPerSec : " << Wfx.Format.nSamplesPerSec;
        RTC_LOG(LS_VERBOSE) << "nAvgBytesPerSec : " << Wfx.Format.nAvgBytesPerSec;
        RTC_LOG(LS_VERBOSE) << "nBlockAlign : " << Wfx.Format.nBlockAlign;
        RTC_LOG(LS_VERBOSE) << "wBitsPerSample : " << Wfx.Format.wBitsPerSample;
        RTC_LOG(LS_VERBOSE) << "cbSize : " << Wfx.Format.cbSize;
        RTC_LOG(LS_VERBOSE) << "Additional settings:";
        RTC_LOG(LS_VERBOSE) << "_recAudioFrameSize: " << _recAudioFrameSize;
        RTC_LOG(LS_VERBOSE) << "_recBlockSize : " << _recBlockSize;
        RTC_LOG(LS_VERBOSE) << "_recChannels : " << _recChannels;
      }

      // Create a capturing stream.
      hr = _ptrClientIn->Initialize(
          AUDCLNT_SHAREMODE_SHARED,  // share Audio Engine with other applications
          AUDCLNT_STREAMFLAGS_EVENTCALLBACK |  // processing of the audio buffer by
                                               // the client will be event driven
              AUDCLNT_STREAMFLAGS_NOPERSIST,   // volume and mute settings for an
                                               // audio session will not persist
                                               // across system restarts
          0,                    // required for event-driven shared mode
          0,                    // periodicity
          (WAVEFORMATEX*)&Wfx,  // selected wave format
          NULL);                // session GUID

      if (hr != S_OK) {
        RTC_LOG(LS_ERROR) << "IAudioClient::Initialize() failed:";
      }
      EXIT_ON_ERROR(hr);

      if (_ptrAudioBuffer) {
        // Update the audio buffer with the selected parameters
        _ptrAudioBuffer->SetRecordingSampleRate(_recSampleRate);
        _ptrAudioBuffer->SetRecordingChannels((uint8_t)_recChannels);
      } else {
        // We can enter this state during CoreAudioIsSupported() when no
        // AudioDeviceImplementation has been created, hence the AudioDeviceBuffer
        // does not exist. It is OK to end up here since we don't initiate any media
        // in CoreAudioIsSupported().
        RTC_LOG(LS_VERBOSE)
            << "AudioDeviceBuffer must be attached before streaming can start";
      }

      // Get the actual size of the shared (endpoint buffer).
      // Typical value is 960 audio frames <=> 20ms @ 48kHz sample rate.
      UINT bufferFrameCount(0);
      hr = _ptrClientIn->GetBufferSize(&bufferFrameCount);
      if (SUCCEEDED(hr)) {
        RTC_LOG(LS_VERBOSE) << "IAudioClient::GetBufferSize() => "
                            << bufferFrameCount << " (<=> "
                            << bufferFrameCount * _recAudioFrameSize << " bytes)";
      }

      // Set the event handle that the system signals when an audio buffer is ready
      // to be processed by the client.
      hr = _ptrClientIn->SetEventHandle(_hCaptureSamplesReadyEvent);
      EXIT_ON_ERROR(hr);

      // Get an IAudioCaptureClient interface.
      SAFE_RELEASE(_ptrCaptureClient);
      hr = _ptrClientIn->GetService(__uuidof(IAudioCaptureClient),
                                    (void**)&_ptrCaptureClient);
      EXIT_ON_ERROR(hr);

      // Mark capture side as initialized
      _recIsInitialized = true;

      CoTaskMemFree(pWfxIn);
      CoTaskMemFree(pWfxClosestMatch);

      RTC_LOG(LS_VERBOSE) << "capture side is now initialized";
      return 0;

    Exit:
      _TraceCOMError(hr);
      CoTaskMemFree(pWfxIn);
      CoTaskMemFree(pWfxClosestMatch);
      SAFE_RELEASE(_ptrClientIn);
      SAFE_RELEASE(_ptrCaptureClient);
      return -1;
    }
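The format negotiation loop above is easier to follow without the COM calls. The sketch below is platform-independent and only illustrates the search order and the derived fields. `PcmFormat`, `MakePcm16`, and `SelectFormat` are hypothetical names, the predicate stands in for `IAudioClient::IsFormatSupported`, and the channel priority list `{2, 1}` is an assumption, since the contents of `_recChannelsPrioList` are not shown in the listing.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical, simplified counterpart of the WAVEFORMATEX fields used above.
struct PcmFormat {
  uint32_t sample_rate;        // nSamplesPerSec
  uint16_t channels;           // nChannels
  uint16_t bits_per_sample;    // wBitsPerSample
  uint16_t block_align;        // nBlockAlign: bytes per frame (all channels)
  uint32_t avg_bytes_per_sec;  // nAvgBytesPerSec
};

// Fills in the derived fields the same way InitRecording() does.
inline PcmFormat MakePcm16(uint32_t sample_rate, uint16_t channels) {
  PcmFormat f{};
  f.sample_rate = sample_rate;
  f.channels = channels;
  f.bits_per_sample = 16;
  f.block_align = static_cast<uint16_t>(channels * f.bits_per_sample / 8);
  f.avg_bytes_per_sec = sample_rate * f.block_align;
  return f;
}

// Number of frames in one 10 ms block (_recBlockSize in the listing).
inline uint32_t FramesPer10Ms(const PcmFormat& f) { return f.sample_rate / 100; }

// Tries sample rates (outer loop) and channel counts (inner loop) in priority
// order and stops at the first candidate the predicate accepts.
template <typename Pred>
bool SelectFormat(Pred is_supported, PcmFormat* out) {
  const uint32_t kRates[] = {48000, 44100, 16000, 96000, 32000, 8000};
  const uint16_t kChannels[] = {2, 1};  // assumed priority list
  for (uint32_t rate : kRates) {
    for (uint16_t ch : kChannels) {
      const PcmFormat candidate = MakePcm16(rate, ch);
      if (is_supported(candidate)) {
        *out = candidate;
        return true;
      }
    }
  }
  return false;
}
```

With a predicate that accepts only 44.1 kHz mono, the search skips both 48 kHz candidates and 44.1 kHz stereo before succeeding. The selected format has a 2-byte frame, 88200 bytes per second, and 441 frames per 10 ms block.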

AudioDeviceWindowsCore::InitRecordingDMO

    // Capture initialization when the built-in AEC DirectX Media Object (DMO) is
    // used. Called from InitRecording(), most of which is skipped over. The DMO
    // handles device initialization itself.
    // Reference: http://msdn.microsoft.com/en-us/library/ff819492(v=vs.85).aspx
    int32_t AudioDeviceWindowsCore::InitRecordingDMO() {
      assert(_builtInAecEnabled);
      assert(_dmo != NULL);

      // This is the main step: configure the DMO properties.
      if (SetDMOProperties() == -1) {
        return -1;
      }

      DMO_MEDIA_TYPE mt = {};
      HRESULT hr = MoInitMediaType(&mt, sizeof(WAVEFORMATEX));
      if (FAILED(hr)) {
        MoFreeMediaType(&mt);
        _TraceCOMError(hr);
        return -1;
      }
      mt.majortype = MEDIATYPE_Audio;
      mt.subtype = MEDIASUBTYPE_PCM;
      mt.formattype = FORMAT_WaveFormatEx;  // data format

      // Supported formats
      // nChannels: 1 (in AEC-only mode)
      // nSamplesPerSec: 8000, 11025, 16000, 22050
      // wBitsPerSample: 16
      WAVEFORMATEX* ptrWav = reinterpret_cast<WAVEFORMATEX*>(mt.pbFormat);
      ptrWav->wFormatTag = WAVE_FORMAT_PCM;
      ptrWav->nChannels = 1;  // the DMO runs in AEC mode, which is mono only
      // 16000 is the highest we can support with our resampler.
      ptrWav->nSamplesPerSec = 16000;
      ptrWav->nAvgBytesPerSec = 32000;
      ptrWav->nBlockAlign = 2;
      ptrWav->wBitsPerSample = 16;
      ptrWav->cbSize = 0;

      // Set the VoE format equal to the AEC output format.
      _recAudioFrameSize = ptrWav->nBlockAlign;
      _recSampleRate = ptrWav->nSamplesPerSec;
      _recBlockSize = ptrWav->nSamplesPerSec / 100;  // number of samples in 10 ms
      _recChannels = ptrWav->nChannels;

      // Set the DMO output format parameters.
      hr = _dmo->SetOutputType(kAecCaptureStreamIndex, &mt, 0);
      MoFreeMediaType(&mt);
      if (FAILED(hr)) {
        _TraceCOMError(hr);
        return -1;
      }

      // Configure the output audio buffer.
      if (_ptrAudioBuffer) {
        _ptrAudioBuffer->SetRecordingSampleRate(_recSampleRate);
        _ptrAudioBuffer->SetRecordingChannels(_recChannels);
      } else {
        // Refer to InitRecording() for comments.
        RTC_LOG(LS_VERBOSE)
            << "AudioDeviceBuffer must be attached before streaming can start";
      }

      _mediaBuffer = new MediaBufferImpl(_recBlockSize * _recAudioFrameSize);

      // Optional, but if called, must be after media types are set.
      // Microsoft recommends calling this before use. If it is not called here,
      // the DMO calls it internally anyway.
      hr = _dmo->AllocateStreamingResources();
      if (FAILED(hr)) {
        _TraceCOMError(hr);
        return -1;
      }

      _recIsInitialized = true;
      RTC_LOG(LS_VERBOSE) << "Capture side is now initialized";

      return 0;
    }
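The hard-coded values in `InitRecordingDMO` all follow from the 16 kHz, mono, 16-bit format. The sketch below reproduces that arithmetic at compile time. `DmoCaptureFormat` and the helper functions are hypothetical names introduced for illustration only.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical summary of the AEC output format set in InitRecordingDMO().
struct DmoCaptureFormat {
  uint32_t sample_rate;
  uint16_t channels;
  uint16_t bits_per_sample;
};

// Bytes per audio frame (nBlockAlign / _recAudioFrameSize).
constexpr uint32_t BlockAlign(const DmoCaptureFormat& f) {
  return f.channels * f.bits_per_sample / 8u;
}

// Bytes per second (nAvgBytesPerSec).
constexpr uint32_t AvgBytesPerSec(const DmoCaptureFormat& f) {
  return f.sample_rate * BlockAlign(f);
}

// Samples in one 10 ms block (_recBlockSize).
constexpr uint32_t SamplesPer10Ms(const DmoCaptureFormat& f) {
  return f.sample_rate / 100u;
}

// Size in bytes passed to MediaBufferImpl: one 10 ms block.
constexpr uint32_t MediaBufferBytes(const DmoCaptureFormat& f) {
  return SamplesPer10Ms(f) * BlockAlign(f);
}

constexpr DmoCaptureFormat kAecFormat{16000, 1, 16};

static_assert(BlockAlign(kAecFormat) == 2, "2 bytes per mono 16-bit frame");
static_assert(AvgBytesPerSec(kAecFormat) == 32000, "16000 frames/s * 2 bytes");
static_assert(SamplesPer10Ms(kAecFormat) == 160, "16000 / 100");
static_assert(MediaBufferBytes(kAecFormat) == 320, "160 samples * 2 bytes");
```

The media buffer therefore holds exactly one 10 ms block of 320 bytes, which matches the frame size the DMO produces by default at 16 kHz.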

AudioDeviceWindowsCore::SetDMOProperties

    int AudioDeviceWindowsCore::SetDMOProperties() {
      HRESULT hr = S_OK;
      assert(_dmo != NULL);

      rtc::scoped_refptr<IPropertyStore> ps;
      {
        IPropertyStore* ptrPS = NULL;
        hr = _dmo->QueryInterface(IID_IPropertyStore,
                                  reinterpret_cast<void**>(&ptrPS));
        if (FAILED(hr) || ptrPS == NULL) {
          _TraceCOMError(hr);
          return -1;
        }
        ps = ptrPS;
        SAFE_RELEASE(ptrPS);
      }

      // Set the AEC system mode.
      // SINGLE_CHANNEL_AEC - AEC processing only.
      if (SetVtI4Property(ps, MFPKEY_WMAAECMA_SYSTEM_MODE, SINGLE_CHANNEL_AEC)) {
        return -1;
      }

      // Set the AEC source mode.
      // VARIANT_TRUE - Source mode (we poll the AEC for captured data).
      if (SetBoolProperty(ps, MFPKEY_WMAAECMA_DMO_SOURCE_MODE, VARIANT_TRUE) ==
          -1) {
        return -1;
      }

      // Enable the feature mode.
      // This lets us override all the default processing settings below.
      if (SetBoolProperty(ps, MFPKEY_WMAAECMA_FEATURE_MODE, VARIANT_TRUE) == -1) {
        return -1;
      }

      // Disable analog AGC (default enabled).
      if (SetBoolProperty(ps, MFPKEY_WMAAECMA_MIC_GAIN_BOUNDER, VARIANT_FALSE) ==
          -1) {
        return -1;
      }

      // Disable noise suppression (default enabled).
      // 0 - Disabled, 1 - Enabled
      if (SetVtI4Property(ps, MFPKEY_WMAAECMA_FEATR_NS, 0) == -1) {
        return -1;
      }

      // Relevant parameters to leave at default settings:
      // MFPKEY_WMAAECMA_FEATR_AGC - Digital AGC (disabled).
      // MFPKEY_WMAAECMA_FEATR_CENTER_CLIP - AEC center clipping (enabled).
      // MFPKEY_WMAAECMA_FEATR_ECHO_LENGTH - Filter length (256 ms).
      // TODO(andrew): investigate decresing the length to 128 ms.
      // MFPKEY_WMAAECMA_FEATR_FRAME_SIZE - Frame size (0).
      // 0 is automatic; defaults to 160 samples (or 10 ms frames at the
      // selected 16 kHz) as long as mic array processing is disabled.
      // MFPKEY_WMAAECMA_FEATR_NOISE_FILL - Comfort noise (enabled).
      // MFPKEY_WMAAECMA_FEATR_VAD - VAD (disabled).

      // Set the devices selected by VoE. If using a default device, we need to
      // search for the device index.
      int inDevIndex = _inputDeviceIndex;
      int outDevIndex = _outputDeviceIndex;
      if (!_usingInputDeviceIndex) {
        ERole role = eCommunications;
        if (_inputDevice == AudioDeviceModule::kDefaultDevice) {
          role = eConsole;
        }

        if (_GetDefaultDeviceIndex(eCapture, role, &inDevIndex) == -1) {
          return -1;
        }
      }

      if (!_usingOutputDeviceIndex) {
        ERole role = eCommunications;
        if (_outputDevice == AudioDeviceModule::kDefaultDevice) {
          role = eConsole;
        }

        if (_GetDefaultDeviceIndex(eRender, role, &outDevIndex) == -1) {
          return -1;
        }
      }

      DWORD devIndex = static_cast<uint32_t>(outDevIndex << 16) +
                       static_cast<uint32_t>(0x0000ffff & inDevIndex);
      RTC_LOG(LS_VERBOSE) << "Capture device index: " << inDevIndex
                          << ", render device index: " << outDevIndex;
      if (SetVtI4Property(ps, MFPKEY_WMAAECMA_DEVICE_INDEXES, devIndex) == -1) {
        return -1;
      }

      return 0;
    }
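The last step packs both device indexes into the single 32-bit value passed as `MFPKEY_WMAAECMA_DEVICE_INDEXES`: the render index goes into the high 16 bits and the capture index into the low 16 bits. A minimal sketch of that packing follows. The function names are hypothetical, and unlike the listing it casts to unsigned before shifting, which avoids shifting a signed value.

```cpp
#include <cassert>
#include <cstdint>

// Packs the render (output) index into the high word and the capture (input)
// index into the low word, as SetDMOProperties() does for devIndex.
inline uint32_t PackDeviceIndexes(int in_dev_index, int out_dev_index) {
  return (static_cast<uint32_t>(out_dev_index) << 16) +
         (static_cast<uint32_t>(in_dev_index) & 0x0000ffffu);
}

// Inverse helpers, shown only to make the bit layout explicit.
inline int UnpackCaptureIndex(uint32_t packed) {
  return static_cast<int>(packed & 0x0000ffffu);
}

inline int UnpackRenderIndex(uint32_t packed) {
  return static_cast<int>(packed >> 16);
}
```

For example, capture index 3 and render index 1 pack to `0x00010003`.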

AudioDeviceWindowsCore::_GetDefaultDeviceIndex

which in turn calls

AudioDeviceWindowsCore::_GetDefaultDeviceID