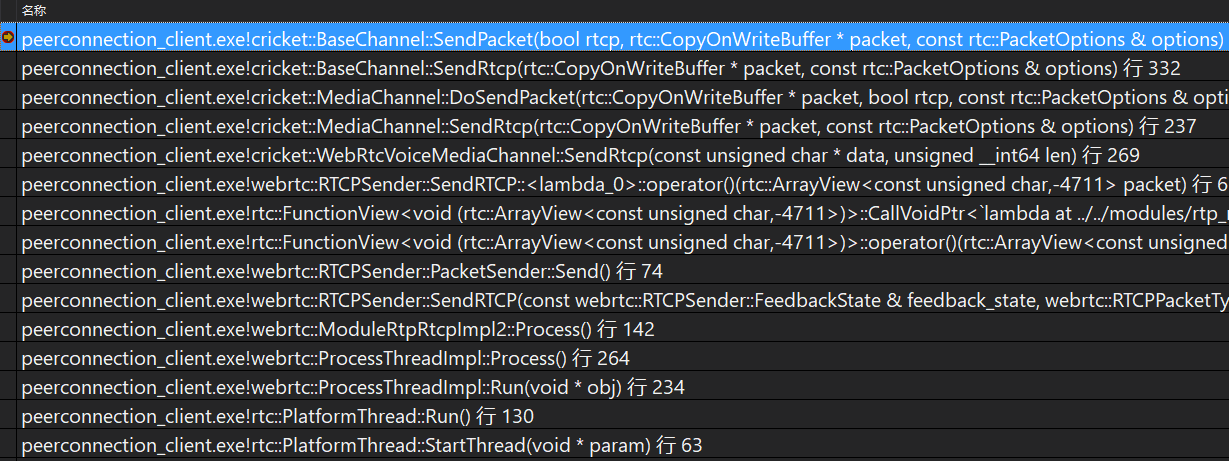

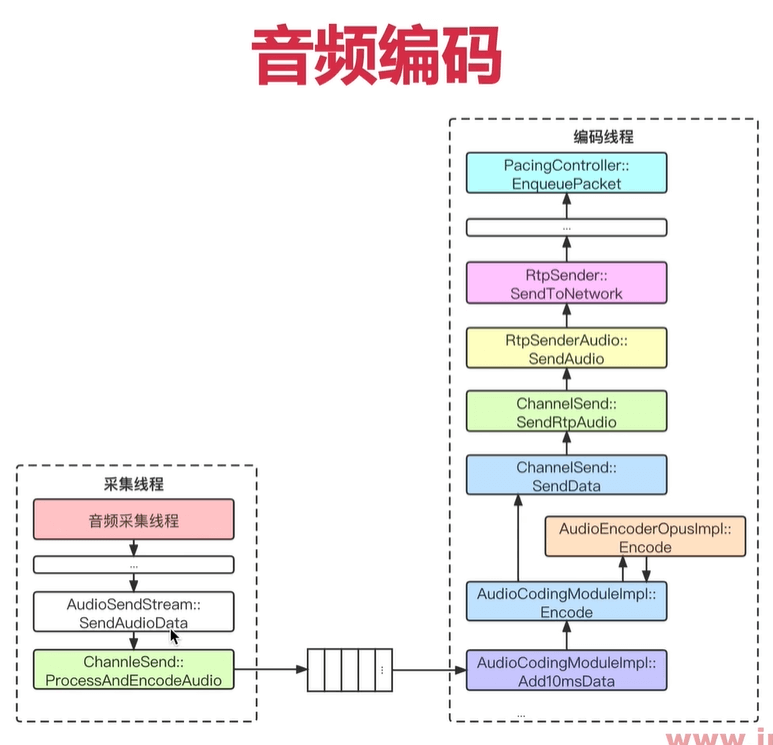

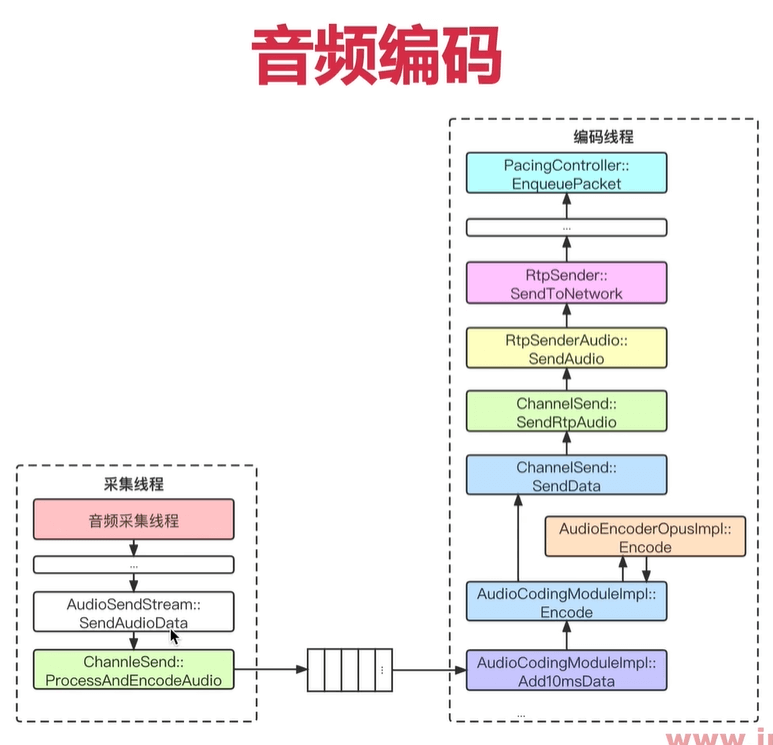

- Overall flow

- Code analysis

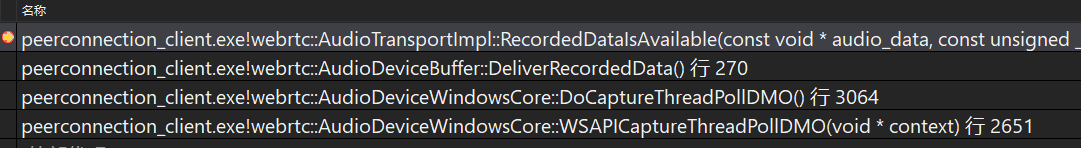

- AudioTransportImpl::RecordedDataIsAvailable

- AudioTransportImpl::SendProcessedData

- AudioSendStream::SendAudioData

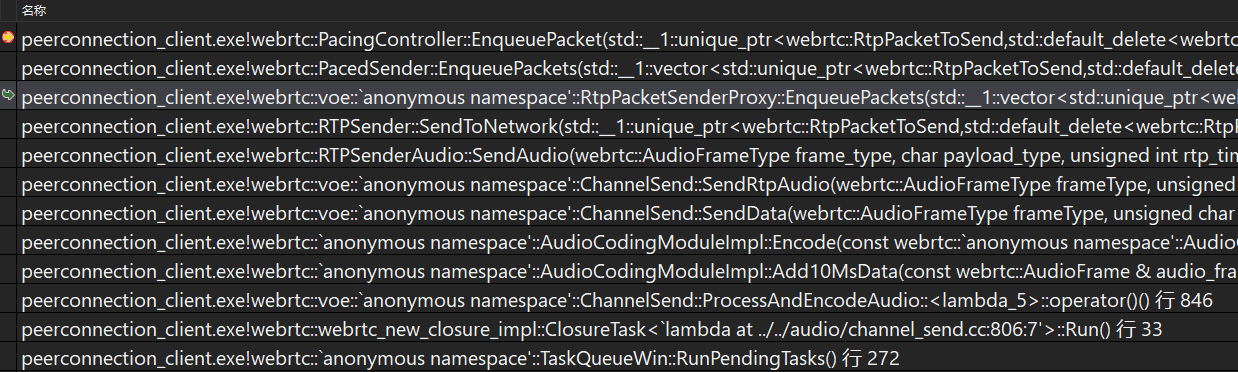

- ChannelSend::ProcessAndEncodeAudio

- AudioCodingModuleImpl::Add10MsData

- ChannelSend::SendData

- ChannelSend::SendRtpAudio

- RTPSenderAudio::SendAudio

- RTPSender::SendToNetwork

- RtpPacketSenderProxy::EnqueuePackets

- PacedSender::EnqueuePackets

- PacingController::EnqueuePacket

- PacingController::EnqueuePacketInternal

- PacingController::GetPendingPacket

- PacingController::ProcessPackets

Overall flow

Code analysis

AudioTransportImpl::RecordedDataIsAvailable

    // Not used in Chromium. Process captured audio and distribute to all sending
    // streams, and try to do this at the lowest possible sample rate.
    int32_t AudioTransportImpl::RecordedDataIsAvailable(
        const void* audio_data,
        const size_t number_of_frames,
        const size_t bytes_per_sample,
        const size_t number_of_channels,
        const uint32_t sample_rate,
        const uint32_t audio_delay_milliseconds,
        const int32_t /*clock_drift*/,
        const uint32_t /*volume*/,
        const bool key_pressed,
        uint32_t& /*new_mic_volume*/) {  // NOLINT: to avoid changing APIs
      RTC_DCHECK(audio_data);
      RTC_DCHECK_GE(number_of_channels, 1);
      RTC_DCHECK_LE(number_of_channels, 2);
      RTC_DCHECK_EQ(2 * number_of_channels, bytes_per_sample);
      RTC_DCHECK_GE(sample_rate, AudioProcessing::NativeRate::kSampleRate8kHz);
      // 100 = 1 second / data duration (10 ms).
      RTC_DCHECK_EQ(number_of_frames * 100, sample_rate);
      RTC_DCHECK_LE(bytes_per_sample * number_of_frames * number_of_channels,
                    AudioFrame::kMaxDataSizeBytes);

      int send_sample_rate_hz = 0;
      size_t send_num_channels = 0;
      bool swap_stereo_channels = false;
      {
        MutexLock lock(&capture_lock_);
        send_sample_rate_hz = send_sample_rate_hz_;
        send_num_channels = send_num_channels_;
        swap_stereo_channels = swap_stereo_channels_;
      }

      std::unique_ptr<AudioFrame> audio_frame(new AudioFrame());
      InitializeCaptureFrame(sample_rate, send_sample_rate_hz, number_of_channels,
                             send_num_channels, audio_frame.get());
      voe::RemixAndResample(static_cast<const int16_t*>(audio_data),
                            number_of_frames, number_of_channels, sample_rate,
                            &capture_resampler_, audio_frame.get());
      ProcessCaptureFrame(audio_delay_milliseconds, key_pressed,
                          swap_stereo_channels, audio_processing_,
                          audio_frame.get());

      // Typing detection (utilizes the APM/VAD decision). We let the VAD determine
      // if we're using this feature or not.
      // TODO(solenberg): GetConfig() takes a lock. Work around that.
      bool typing_detected = false;
      if (audio_processing_ &&
          audio_processing_->GetConfig().voice_detection.enabled) {
        if (audio_frame->vad_activity_ != AudioFrame::kVadUnknown) {
          bool vad_active = audio_frame->vad_activity_ == AudioFrame::kVadActive;
          typing_detected = typing_detection_.Process(key_pressed, vad_active);
        }
      }

      // Copy frame and push to each sending stream. The copy is required since an
      // encoding task will be posted internally to each stream.
      {
        MutexLock lock(&capture_lock_);
        typing_noise_detected_ = typing_detected;
      }

      RTC_DCHECK_GT(audio_frame->samples_per_channel_, 0);
      if (async_audio_processing_)
        async_audio_processing_->Process(std::move(audio_frame));
      else
        SendProcessedData(std::move(audio_frame));

      return 0;
    }
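The `RTC_DCHECK`s at the top of `RecordedDataIsAvailable` encode the assumption that every callback carries exactly 10 ms of 16-bit PCM. A minimal sketch of the same arithmetic follows. `IsValid10MsCapture` is a hypothetical helper, not part of WebRTC, and it leaves out the 8 kHz minimum and the maximum frame size checks.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical helper that mirrors the capture-side DCHECKs for 16-bit PCM.
bool IsValid10MsCapture(size_t number_of_frames,
                        size_t bytes_per_sample,
                        size_t number_of_channels,
                        uint32_t sample_rate) {
  // Only mono and stereo input are accepted.
  if (number_of_channels < 1 || number_of_channels > 2) return false;
  // bytes_per_sample covers one int16_t sample from every channel.
  if (bytes_per_sample != 2 * number_of_channels) return false;
  // 100 chunks of 10 ms make up one second of audio.
  return number_of_frames * 100 == sample_rate;
}
```

For example, a 48 kHz mono device should deliver 480 frames with `bytes_per_sample == 2`, and a 44.1 kHz stereo device 441 frames with `bytes_per_sample == 4`.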

AudioTransportImpl::SendProcessedData

    void AudioTransportImpl::SendProcessedData(
        std::unique_ptr<AudioFrame> audio_frame) {
      RTC_DCHECK_GT(audio_frame->samples_per_channel_, 0);
      MutexLock lock(&capture_lock_);
      if (audio_senders_.empty())
        return;

      auto it = audio_senders_.begin();
      while (++it != audio_senders_.end()) {
        auto audio_frame_copy = std::make_unique<AudioFrame>();
        audio_frame_copy->CopyFrom(*audio_frame);
        (*it)->SendAudioData(std::move(audio_frame_copy));
      }
      // Send the original frame to the first stream w/o copying.
      (*audio_senders_.begin())->SendAudioData(std::move(audio_frame));
    }
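`SendProcessedData` copies the frame for every sender except the first, which receives the original by move, so N senders cost N-1 copies. The sketch below reproduces only that ownership pattern. `Frame`, `Sink` and `FanOut` are hypothetical stand-ins for `AudioFrame`, `AudioSender` and the method itself.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-in for AudioFrame.
struct Frame {
  std::vector<int16_t> samples;
};

// Hypothetical stand-in for a sending stream; it just records what it gets.
struct Sink {
  std::vector<std::unique_ptr<Frame>> received;
  void Send(std::unique_ptr<Frame> frame) {
    received.push_back(std::move(frame));
  }
};

// Delivers one frame to every sink and returns how many copies were made.
size_t FanOut(std::unique_ptr<Frame> frame, const std::vector<Sink*>& sinks) {
  if (sinks.empty()) return 0;
  size_t copies = 0;
  auto it = sinks.begin();
  // Every sink after the first gets its own copy.
  while (++it != sinks.end()) {
    (*it)->Send(std::make_unique<Frame>(*frame));
    ++copies;
  }
  // The first sink takes ownership of the original frame without copying.
  sinks.front()->Send(std::move(frame));
  return copies;
}
```

The original must be moved last, because moving it earlier would leave nothing to copy from for the remaining sinks.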

AudioSendStream::SendAudioData

    void AudioSendStream::SendAudioData(std::unique_ptr<AudioFrame> audio_frame) {
      RTC_CHECK_RUNS_SERIALIZED(&audio_capture_race_checker_);
      RTC_DCHECK_GT(audio_frame->sample_rate_hz_, 0);
      double duration = static_cast<double>(audio_frame->samples_per_channel_) /
                        audio_frame->sample_rate_hz_;
      {
        // Note: SendAudioData() passes the frame further down the pipeline and it
        // may eventually get sent. But this method is invoked even if we are not
        // connected, as long as we have an AudioSendStream (created as a result of
        // an O/A exchange). This means that we are calculating audio levels whether
        // or not we are sending samples.
        // TODO(https://crbug.com/webrtc/10771): All "media-source" related stats
        // should move from send-streams to the local audio sources or tracks; a
        // send-stream should not be required to read the microphone audio levels.
        MutexLock lock(&audio_level_lock_);
        audio_level_.ComputeLevel(*audio_frame, duration);
      }
      channel_send_->ProcessAndEncodeAudio(std::move(audio_frame));
    }

→ From this point on the frame is handed to the encoding layer through `channel_send_->ProcessAndEncodeAudio(std::move(audio_frame));`

ChannelSend::ProcessAndEncodeAudio

    void ChannelSend::ProcessAndEncodeAudio(
        std::unique_ptr<AudioFrame> audio_frame) {
      RTC_DCHECK_RUNS_SERIALIZED(&audio_thread_race_checker_);
      RTC_DCHECK_GT(audio_frame->samples_per_channel_, 0);
      RTC_DCHECK_LE(audio_frame->num_channels_, 8);

      // Profile time between when the audio frame is added to the task queue and
      // when the task is actually executed.
      audio_frame->UpdateProfileTimeStamp();
      // Post a task to encoder_queue_; the encoder thread then picks it up.
      encoder_queue_.PostTask(
          [this, audio_frame = std::move(audio_frame)]() mutable {
            RTC_DCHECK_RUN_ON(&encoder_queue_);
            if (!encoder_queue_is_active_) {
              if (fixing_timestamp_stall_) {
                _timeStamp +=
                    static_cast<uint32_t>(audio_frame->samples_per_channel_);
              }
              return;
            }
            // Measure time between when the audio frame is added to the task queue
            // and when the task is actually executed. Goal is to keep track of
            // unwanted extra latency added by the task queue.
            RTC_HISTOGRAM_COUNTS_10000("WebRTC.Audio.EncodingTaskQueueLatencyMs",
                                       audio_frame->ElapsedProfileTimeMs());

            // Modify the frame data according to the input mute state.
            bool is_muted = InputMute();
            AudioFrameOperations::Mute(audio_frame.get(), previous_frame_muted_,
                                       is_muted);

            if (_includeAudioLevelIndication) {
              size_t length =
                  audio_frame->samples_per_channel_ * audio_frame->num_channels_;
              RTC_CHECK_LE(length, AudioFrame::kMaxDataSizeBytes);
              if (is_muted && previous_frame_muted_) {
                rms_level_.AnalyzeMuted(length);
              } else {
                rms_level_.Analyze(
                    rtc::ArrayView<const int16_t>(audio_frame->data(), length));
              }
            }
            previous_frame_muted_ = is_muted;

            // Add 10ms of raw (PCM) audio data to the encoder @ 32kHz.

            // The ACM resamples internally.
            audio_frame->timestamp_ = _timeStamp;
            // This call will trigger AudioPacketizationCallback::SendData if
            // encoding is done and payload is ready for packetization and
            // transmission. Otherwise, it will return without invoking the
            // callback.
            if (audio_coding_->Add10MsData(*audio_frame) < 0) {
              RTC_DLOG(LS_ERROR) << "ACM::Add10MsData() failed.";
              return;
            }

            _timeStamp += static_cast<uint32_t>(audio_frame->samples_per_channel_);
          });
    }
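In the task above, `_timeStamp` advances by `samples_per_channel_` for every frame that reaches the encoder, and also for dropped frames when `fixing_timestamp_stall_` is set, so the timestamp keeps tracking elapsed capture time while the queue is inactive. The sketch below models only that bookkeeping. `SendTimestamp` and its parameters are hypothetical names, and the failure path of `Add10MsData` is not modelled.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical model of how _timeStamp evolves per 10 ms frame.
struct SendTimestamp {
  uint32_t value = 0;

  // Returns true when the frame would be handed to the encoder.
  bool OnFrame(uint32_t samples_per_channel,
               bool queue_active,
               bool fixing_timestamp_stall) {
    if (!queue_active) {
      // The frame is dropped, but the clock may still move forward.
      if (fixing_timestamp_stall) value += samples_per_channel;
      return false;
    }
    // Here the frame would be stamped with `value` before encoding.
    value += samples_per_channel;
    return true;
  }
};
```

At 48 kHz each frame adds 480, so after one encoded frame and one dropped frame with the stall fix enabled, the next frame would be stamped 960 rather than 480.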

AudioCodingModuleImpl::Add10MsData

    // Add 10MS of raw (PCM) audio data to the encoder.
    int AudioCodingModuleImpl::Add10MsData(const AudioFrame& audio_frame) {
      MutexLock lock(&acm_mutex_);
      int r = Add10MsDataInternal(audio_frame, &input_data_);
      // TODO(bugs.webrtc.org/10739): add dcheck that
      // |audio_frame.absolute_capture_timestamp_ms()| always has a value.
      return r < 0
                 ? r
                 : Encode(input_data_, audio_frame.absolute_capture_timestamp_ms());
    }

→ `Encode` is then called to start encoding the data.

AudioCodingModuleImpl::Encode

    int32_t AudioCodingModuleImpl::Encode(
        const InputData& input_data,
        absl::optional<int64_t> absolute_capture_timestamp_ms) {
      // TODO(bugs.webrtc.org/10739): add dcheck that
      // |audio_frame.absolute_capture_timestamp_ms()| always has a value.
      AudioEncoder::EncodedInfo encoded_info;
      uint8_t previous_pltype;

      // Check if there is an encoder before.
      if (!HaveValidEncoder("Process"))
        return -1;

      if (!first_frame_) {
        RTC_DCHECK(IsNewerTimestamp(input_data.input_timestamp, last_timestamp_))
            << "Time should not move backwards";
      }

      // Scale the timestamp to the codec's RTP timestamp rate.
      uint32_t rtp_timestamp =
          first_frame_
              ? input_data.input_timestamp
              : last_rtp_timestamp_ +
                    rtc::dchecked_cast<uint32_t>(rtc::CheckedDivExact(
                        int64_t{input_data.input_timestamp - last_timestamp_} *
                            encoder_stack_->RtpTimestampRateHz(),
                        int64_t{encoder_stack_->SampleRateHz()}));

      last_timestamp_ = input_data.input_timestamp;
      last_rtp_timestamp_ = rtp_timestamp;
      first_frame_ = false;

      // Clear the buffer before reuse - encoded data will get appended.
      encode_buffer_.Clear();
      encoded_info = encoder_stack_->Encode(
          rtp_timestamp,
          rtc::ArrayView<const int16_t>(
              input_data.audio,
              input_data.audio_channel * input_data.length_per_channel),
          &encode_buffer_);

      bitrate_logger_.MaybeLog(encoder_stack_->GetTargetBitrate() / 1000);
      // Capture delivers 10 ms at a time but the encoder works on 20 ms frames,
      // so output only appears on the second call.
      if (encode_buffer_.size() == 0 && !encoded_info.send_even_if_empty) {
        // Not enough data.
        // The buffer is empty on the first call; that first 10 ms is cached
        // inside the encoder.
        return 0;
      }
      previous_pltype = previous_pltype_;  // Read it while we have the critsect.

      // Log codec type to histogram once every 500 packets.
      if (encoded_info.encoded_bytes == 0) {
        ++number_of_consecutive_empty_packets_;
      } else {
        size_t codec_type = static_cast<size_t>(encoded_info.encoder_type);
        codec_histogram_bins_log_[codec_type] +=
            number_of_consecutive_empty_packets_ + 1;
        number_of_consecutive_empty_packets_ = 0;
        if (codec_histogram_bins_log_[codec_type] >= 500) {
          codec_histogram_bins_log_[codec_type] -= 500;
          UpdateCodecTypeHistogram(codec_type);
        }
      }

      AudioFrameType frame_type;
      if (encode_buffer_.size() == 0 && encoded_info.send_even_if_empty) {
        frame_type = AudioFrameType::kEmptyFrame;
        encoded_info.payload_type = previous_pltype;
      } else {
        RTC_DCHECK_GT(encode_buffer_.size(), 0);
        frame_type = encoded_info.speech ? AudioFrameType::kAudioFrameSpeech
                                         : AudioFrameType::kAudioFrameCN;
      }

      {
        MutexLock lock(&callback_mutex_);
        if (packetization_callback_) {
          // This triggers ChannelSend::SendData.
          packetization_callback_->SendData(
              frame_type, encoded_info.payload_type, encoded_info.encoded_timestamp,
              encode_buffer_.data(), encode_buffer_.size(),
              absolute_capture_timestamp_ms.value_or(-1));
        }
      }
      previous_pltype_ = encoded_info.payload_type;
      return static_cast<int32_t>(encode_buffer_.size());
    }
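The timestamp scaling in `Encode` matters for codecs whose RTP clock differs from their sample rate. G.722 is the usual example: it samples at 16 kHz but uses an 8 kHz RTP clock (RFC 3551). The sketch below reproduces the scaling formula with plain integer division in place of `rtc::CheckedDivExact`. `RtpTimestampScaler` is a hypothetical class written for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper reproducing the RTP timestamp scaling step.
class RtpTimestampScaler {
 public:
  RtpTimestampScaler(int sample_rate_hz, int rtp_rate_hz)
      : sample_rate_hz_(sample_rate_hz), rtp_rate_hz_(rtp_rate_hz) {}

  uint32_t Scale(uint32_t input_timestamp) {
    uint32_t rtp_timestamp;
    if (first_frame_) {
      // The first frame passes through unscaled and anchors both clocks.
      rtp_timestamp = input_timestamp;
    } else {
      // Unsigned subtraction keeps the delta correct across 32-bit wraparound.
      int64_t delta = static_cast<uint32_t>(input_timestamp - last_input_);
      rtp_timestamp = last_rtp_ + static_cast<uint32_t>(
                                      delta * rtp_rate_hz_ / sample_rate_hz_);
    }
    last_input_ = input_timestamp;
    last_rtp_ = rtp_timestamp;
    first_frame_ = false;
    return rtp_timestamp;
  }

 private:
  const int sample_rate_hz_;
  const int rtp_rate_hz_;
  bool first_frame_ = true;
  uint32_t last_input_ = 0;
  uint32_t last_rtp_ = 0;
};
```

With `RtpTimestampScaler g722(16000, 8000)`, input timestamps 1000, 1160, 1320 (10 ms steps at 16 kHz) map to 1000, 1080, 1160. For a codec whose two rates are equal, the mapping is the identity.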

ChannelSend::SendData

    int32_t ChannelSend::SendData(AudioFrameType frameType,
                                  uint8_t payloadType,
                                  uint32_t rtp_timestamp,
                                  const uint8_t* payloadData,
                                  size_t payloadSize,
                                  int64_t absolute_capture_timestamp_ms) {
      RTC_DCHECK_RUN_ON(&encoder_queue_);
      rtc::ArrayView<const uint8_t> payload(payloadData, payloadSize);
      if (frame_transformer_delegate_) {
        // Asynchronously transform the payload before sending it. After the payload
        // is transformed, the delegate will call SendRtpAudio to send it.
        frame_transformer_delegate_->Transform(
            frameType, payloadType, rtp_timestamp, rtp_rtcp_->StartTimestamp(),
            payloadData, payloadSize, absolute_capture_timestamp_ms,
            rtp_rtcp_->SSRC());
        return 0;
      }
      return SendRtpAudio(frameType, payloadType, rtp_timestamp, payload,
                          absolute_capture_timestamp_ms);
    }

ChannelSend::SendRtpAudio

    int32_t ChannelSend::SendRtpAudio(AudioFrameType frameType,
                                      uint8_t payloadType,
                                      uint32_t rtp_timestamp,
                                      rtc::ArrayView<const uint8_t> payload,
                                      int64_t absolute_capture_timestamp_ms) {
      if (_includeAudioLevelIndication) {
        // Store current audio level in the RTP sender.
        // The level will be used in combination with voice-activity state
        // (frameType) to add an RTP header extension
        rtp_sender_audio_->SetAudioLevel(rms_level_.Average());
      }

      // E2EE Custom Audio Frame Encryption (This is optional).
      // Keep this buffer around for the lifetime of the send call.
      rtc::Buffer encrypted_audio_payload;
      // We don't invoke encryptor if payload is empty, which means we are to send
      // DTMF, or the encoder entered DTX.
      // TODO(minyue): see whether DTMF packets should be encrypted or not. In
      // current implementation, they are not.
      if (!payload.empty()) {
        if (frame_encryptor_ != nullptr) {
          // TODO(benwright@webrtc.org) - Allocate enough to always encrypt inline.
          // Allocate a buffer to hold the maximum possible encrypted payload.
          size_t max_ciphertext_size = frame_encryptor_->GetMaxCiphertextByteSize(
              cricket::MEDIA_TYPE_AUDIO, payload.size());
          encrypted_audio_payload.SetSize(max_ciphertext_size);

          // Encrypt the audio payload into the buffer.
          size_t bytes_written = 0;
          int encrypt_status = frame_encryptor_->Encrypt(
              cricket::MEDIA_TYPE_AUDIO, rtp_rtcp_->SSRC(),
              /*additional_data=*/nullptr, payload, encrypted_audio_payload,
              &bytes_written);
          if (encrypt_status != 0) {
            RTC_DLOG(LS_ERROR)
                << "Channel::SendData() failed encrypt audio payload: "
                << encrypt_status;
            return -1;
          }
          // Resize the buffer to the exact number of bytes actually used.
          encrypted_audio_payload.SetSize(bytes_written);
          // Rewrite the payloadData and size to the new encrypted payload.
          payload = encrypted_audio_payload;
        } else if (crypto_options_.sframe.require_frame_encryption) {
          RTC_DLOG(LS_ERROR) << "Channel::SendData() failed sending audio payload: "
                                "A frame encryptor is required but one is not set.";
          return -1;
        }
      }

      // Push data from ACM to RTP/RTCP-module to deliver audio frame for
      // packetization.
      if (!rtp_rtcp_->OnSendingRtpFrame(rtp_timestamp,
                                        // Leaving the time when this frame was
                                        // received from the capture device as
                                        // undefined for voice for now.
                                        -1, payloadType,
                                        /*force_sender_report=*/false)) {
        return -1;
      }

      // RTCPSender has its own copy of the timestamp offset, added in
      // RTCPSender::BuildSR, hence we must not add in the offset for the above
      // call.
      // TODO(nisse): Delete RTCPSender:timestamp_offset_, and see if we can confine
      // knowledge of the offset to a single place.

      // This call will trigger Transport::SendPacket() from the RTP/RTCP module.
      if (!rtp_sender_audio_->SendAudio(
              frameType, payloadType, rtp_timestamp + rtp_rtcp_->StartTimestamp(),
              payload.data(), payload.size(), absolute_capture_timestamp_ms)) {
        RTC_DLOG(LS_ERROR)
            << "ChannelSend::SendData() failed to send data to RTP/RTCP module";
        return -1;
      }

      return 0;
    }

RTPSenderAudio::SendAudio

    bool RTPSenderAudio::SendAudio(AudioFrameType frame_type,
                                   int8_t payload_type,
                                   uint32_t rtp_timestamp,
                                   const uint8_t* payload_data,
                                   size_t payload_size,
                                   int64_t absolute_capture_timestamp_ms) {
    #if RTC_TRACE_EVENTS_ENABLED
      TRACE_EVENT_ASYNC_STEP1("webrtc", "Audio", rtp_timestamp, "Send", "type",
                              FrameTypeToString(frame_type));
    #endif

      // ***** (code omitted in the original)

      // Create an RTP packet and fill in its fields.
      std::unique_ptr<RtpPacketToSend> packet = rtp_sender_->AllocatePacket();
      packet->SetMarker(MarkerBit(frame_type, payload_type));
      packet->SetPayloadType(payload_type);
      packet->SetTimestamp(rtp_timestamp);
      packet->set_capture_time_ms(clock_->TimeInMilliseconds());
      // Update audio level extension, if included.
      packet->SetExtension<AudioLevel>(
          frame_type == AudioFrameType::kAudioFrameSpeech, audio_level_dbov);

      // Send absolute capture time periodically in order to optimize and save
      // network traffic. Missing absolute capture times can be interpolated on the
      // receiving end if sending intervals are small enough.
      auto absolute_capture_time = absolute_capture_time_sender_.OnSendPacket(
          AbsoluteCaptureTimeSender::GetSource(packet->Ssrc(), packet->Csrcs()),
          packet->Timestamp(),
          // Replace missing value with 0 (invalid frequency), this will trigger
          // absolute capture time sending.
          encoder_rtp_timestamp_frequency.value_or(0),
          Int64MsToUQ32x32(absolute_capture_timestamp_ms + NtpOffsetMs()),
          /*estimated_capture_clock_offset=*/
          include_capture_clock_offset_ ? absl::make_optional(0) : absl::nullopt);
      if (absolute_capture_time) {
        // It also checks that extension was registered during SDP negotiation. If
        // not then setter won't do anything.
        packet->SetExtension<AbsoluteCaptureTimeExtension>(*absolute_capture_time);
      }

      uint8_t* payload = packet->AllocatePayload(payload_size);
      if (!payload)  // Too large payload buffer.
        return false;
      memcpy(payload, payload_data, payload_size);

      if (!rtp_sender_->AssignSequenceNumber(packet.get()))
        return false;

      {
        MutexLock lock(&send_audio_mutex_);
        last_payload_type_ = payload_type;
      }
      TRACE_EVENT_ASYNC_END2("webrtc", "Audio", rtp_timestamp, "timestamp",
                             packet->Timestamp(), "seqnum",
                             packet->SequenceNumber());
      packet->set_packet_type(RtpPacketMediaType::kAudio);
      packet->set_allow_retransmission(true);
      bool send_result = rtp_sender_->SendToNetwork(std::move(packet));
      if (first_packet_sent_()) {
        RTC_LOG(LS_INFO) << "First audio RTP packet sent to pacer";
      }
      return send_result;
    }

RTPSender::SendToNetwork

    bool RTPSender::SendToNetwork(std::unique_ptr<RtpPacketToSend> packet) {
      RTC_DCHECK(packet);
      int64_t now_ms = clock_->TimeInMilliseconds();

      auto packet_type = packet->packet_type();
      RTC_CHECK(packet_type) << "Packet type must be set before sending.";

      if (packet->capture_time_ms() <= 0) {
        packet->set_capture_time_ms(now_ms);
      }

      std::vector<std::unique_ptr<RtpPacketToSend>> packets;
      packets.emplace_back(std::move(packet));
      // Insert the packets into the pacer's queue.
      paced_sender_->EnqueuePackets(std::move(packets));

      return true;
    }

RtpPacketSenderProxy::EnqueuePackets

    class RtpPacketSenderProxy : public RtpPacketSender {
     public:
      void EnqueuePackets(
          std::vector<std::unique_ptr<RtpPacketToSend>> packets) override {
        MutexLock lock(&mutex_);
        rtp_packet_pacer_->EnqueuePackets(std::move(packets));
      }
      // *** (rest of the class omitted in the original)

PacedSender::EnqueuePackets

    void PacedSender::EnqueuePackets(
        std::vector<std::unique_ptr<RtpPacketToSend>> packets) {
      {
        TRACE_EVENT0(TRACE_DISABLED_BY_DEFAULT("webrtc"),
                     "PacedSender::EnqueuePackets");
        MutexLock lock(&mutex_);
        for (auto& packet : packets) {
          TRACE_EVENT2(TRACE_DISABLED_BY_DEFAULT("webrtc"),
                       "PacedSender::EnqueuePackets::Loop", "sequence_number",
                       packet->SequenceNumber(), "rtp_timestamp",
                       packet->Timestamp());

          RTC_DCHECK_GE(packet->capture_time_ms(), 0);
          pacing_controller_.EnqueuePacket(std::move(packet));
        }
      }
      MaybeWakupProcessThread();
    }

PacingController::EnqueuePacket

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\pacing\pacing_controller.cc

    void PacingController::EnqueuePacket(std::unique_ptr<RtpPacketToSend> packet) {
      RTC_DCHECK(pacing_bitrate_ > DataRate::Zero())
          << "SetPacingRate must be called before InsertPacket.";
      RTC_CHECK(packet->packet_type());
      // Get priority first and store in temporary, to avoid chance of object being
      // moved before GetPriorityForType() being called.
      const int priority = GetPriorityForType(*packet->packet_type());
      EnqueuePacketInternal(std::move(packet), priority);
    }

PacingController::EnqueuePacketInternal

    void PacingController::EnqueuePacketInternal(
        std::unique_ptr<RtpPacketToSend> packet,
        int priority) {
      prober_.OnIncomingPacket(DataSize::Bytes(packet->payload_size()));

      Timestamp now = CurrentTime();

      if (mode_ == ProcessMode::kDynamic && packet_queue_.Empty() &&
          NextSendTime() <= now) {
        TimeDelta elapsed_time = UpdateTimeAndGetElapsed(now);
        UpdateBudgetWithElapsedTime(elapsed_time);
      }
      packet_queue_.Push(priority, now, packet_counter_++, std::move(packet));
    }
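`packet_queue_.Push` receives both a priority and the running `packet_counter_`, which suggests ordering by priority first and enqueue order second. The sketch below is a deliberately simplified model of that idea built on `std::priority_queue`. It assumes a lower number means a more urgent packet and ignores any per-stream scheduling the real queue performs. `SimplePacketQueue` and the priority values used are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <queue>
#include <string>
#include <utility>
#include <vector>

// Hypothetical queue entry: a label stands in for the RTP packet.
struct QueuedPacket {
  int priority;            // Assumption: lower value is sent first.
  uint64_t enqueue_order;  // Tie-breaker, like packet_counter_ in the source.
  std::string label;
};

// Orders entries so the most urgent, oldest packet ends up on top.
struct SentLater {
  bool operator()(const QueuedPacket& a, const QueuedPacket& b) const {
    if (a.priority != b.priority) return a.priority > b.priority;
    return a.enqueue_order > b.enqueue_order;
  }
};

class SimplePacketQueue {
 public:
  void Push(int priority, std::string label) {
    queue_.push(QueuedPacket{priority, counter_++, std::move(label)});
  }
  // Precondition: the queue is not empty.
  std::string Pop() {
    std::string label = queue_.top().label;
    queue_.pop();
    return label;
  }
  bool Empty() const { return queue_.empty(); }

 private:
  std::priority_queue<QueuedPacket, std::vector<QueuedPacket>, SentLater> queue_;
  uint64_t counter_ = 0;
};
```

If audio is pushed with priority 1 and video with priority 2, audio packets leave the queue first regardless of arrival order, and packets of equal priority leave in the order they were enqueued.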

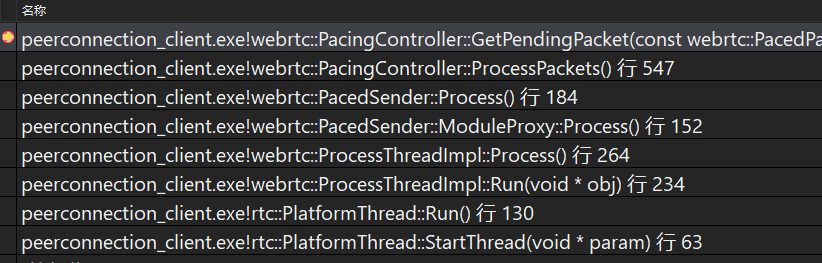

Finally, the enqueued packets are fetched through `PacingController::GetPendingPacket` and then sent out.

PacingController::GetPendingPacket

    std::unique_ptr<RtpPacketToSend> PacingController::GetPendingPacket(
        const PacedPacketInfo& pacing_info,
        Timestamp target_send_time,
        Timestamp now) {
      if (packet_queue_.Empty()) {
        return nullptr;
      }

      // First, check if there is any reason _not_ to send the next queued packet.

      // Unpaced audio packets and probes are exempted from send checks.
      bool unpaced_audio_packet =
          !pace_audio_ && packet_queue_.LeadingAudioPacketEnqueueTime().has_value();
      bool is_probe = pacing_info.probe_cluster_id != PacedPacketInfo::kNotAProbe;
      if (!unpaced_audio_packet && !is_probe) {
        if (Congested()) {
          // Don't send anything if congested.
          return nullptr;
        }

        if (mode_ == ProcessMode::kPeriodic) {
          if (media_budget_.bytes_remaining() <= 0) {
            // Not enough budget.
            return nullptr;
          }
        } else {
          // Dynamic processing mode.
          if (now <= target_send_time) {
            // We allow sending slightly early if we think that we would actually
            // had been able to, had we been right on time - i.e. the current debt
            // is not more than would be reduced to zero at the target sent time.
            TimeDelta flush_time = media_debt_ / media_rate_;
            if (now + flush_time > target_send_time) {
              return nullptr;
            }
          }
        }
      }

      return packet_queue_.Pop();
    }
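The dynamic-mode branch of `GetPendingPacket` allows a packet to go out slightly early only if the outstanding debt would be paid off by the target send time. A minimal numeric sketch of that single check follows. It uses plain doubles instead of `Timestamp`, `TimeDelta` and `DataRate`, and `MaySendNow` is a hypothetical function that ignores the congestion, probe and unpaced-audio cases.

```cpp
#include <cassert>

// Hypothetical model of the dynamic-mode early-send check.
// Times are in milliseconds, debt in bytes, rate in bytes per millisecond.
bool MaySendNow(double now_ms,
                double target_send_time_ms,
                double media_debt_bytes,
                double media_rate_bytes_per_ms) {
  if (now_ms <= target_send_time_ms) {
    // Time needed to drain the current debt at the current media rate.
    double flush_time_ms = media_debt_bytes / media_rate_bytes_per_ms;
    // Too early: the debt would still be outstanding at the target time.
    if (now_ms + flush_time_ms > target_send_time_ms) return false;
  }
  // Either we are late, or the debt clears before the target time.
  return true;
}
```

With a rate of 100 bytes/ms, `now = 100` and `target = 105`, a debt of 300 bytes drains in 3 ms and the packet may be sent, while a debt of 600 bytes needs 6 ms and the packet has to wait.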

`PacingController::GetPendingPacket` is in turn called from `PacingController::ProcessPackets`, which uses it to fetch the next packet.

PacingController::ProcessPackets

    void PacingController::ProcessPackets() {
      Timestamp now = CurrentTime();
      Timestamp target_send_time = now;
      if (mode_ == ProcessMode::kDynamic) {
        target_send_time = NextSendTime();
        TimeDelta early_execute_margin =
            prober_.is_probing() ? kMaxEarlyProbeProcessing : TimeDelta::Zero();
        if (target_send_time.IsMinusInfinity()) {
          target_send_time = now;
        } else if (now < target_send_time - early_execute_margin) {
          // We are too early, but if queue is empty still allow draining some debt.
          // Probing is allowed to be sent up to kMinSleepTime early.
          TimeDelta elapsed_time = UpdateTimeAndGetElapsed(now);
          UpdateBudgetWithElapsedTime(elapsed_time);
          return;
        }

        if (target_send_time < last_process_time_) {
          // After the last process call, at time X, the target send time
          // shifted to be earlier than X. This should normally not happen
          // but we want to make sure rounding errors or erratic behavior
          // of NextSendTime() does not cause issue. In particular, if the
          // buffer reduction of
          // rate * (target_send_time - previous_process_time)
          // in the main loop doesn't clean up the existing debt we may not
          // be able to send again. We don't want to check this reordering
          // there as it is the normal exit condition when the buffer is
          // exhausted and there are packets in the queue.
          UpdateBudgetWithElapsedTime(last_process_time_ - target_send_time);
          target_send_time = last_process_time_;
        }
      }

      Timestamp previous_process_time = last_process_time_;
      TimeDelta elapsed_time = UpdateTimeAndGetElapsed(now);

      if (ShouldSendKeepalive(now)) {
        // We can not send padding unless a normal packet has first been sent. If
        // we do, timestamps get messed up.
        if (packet_counter_ == 0) {
          last_send_time_ = now;
        } else {
          DataSize keepalive_data_sent = DataSize::Zero();
          std::vector<std::unique_ptr<RtpPacketToSend>> keepalive_packets =
              packet_sender_->GeneratePadding(DataSize::Bytes(1));
          for (auto& packet : keepalive_packets) {
            keepalive_data_sent +=
                DataSize::Bytes(packet->payload_size() + packet->padding_size());
            packet_sender_->SendPacket(std::move(packet), PacedPacketInfo());
            for (auto& packet : packet_sender_->FetchFec()) {
              EnqueuePacket(std::move(packet));
            }
          }
          OnPaddingSent(keepalive_data_sent);
        }
      }

      if (paused_) {
        return;
      }

      if (elapsed_time > TimeDelta::Zero()) {
        DataRate target_rate = pacing_bitrate_;
        DataSize queue_size_data = packet_queue_.Size();
        if (queue_size_data > DataSize::Zero()) {
          // Assuming equal size packets and input/output rate, the average packet
          // has avg_time_left_ms left to get queue_size_bytes out of the queue, if
          // time constraint shall be met. Determine bitrate needed for that.
          packet_queue_.UpdateQueueTime(now);
          if (drain_large_queues_) {
            TimeDelta avg_time_left =
                std::max(TimeDelta::Millis(1),
                         queue_time_limit - packet_queue_.AverageQueueTime());
            DataRate min_rate_needed = queue_size_data / avg_time_left;
            if (min_rate_needed > target_rate) {
              target_rate = min_rate_needed;
              RTC_LOG(LS_VERBOSE) << "bwe:large_pacing_queue pacing_rate_kbps="
                                  << target_rate.kbps();
            }
          }
        }

        if (mode_ == ProcessMode::kPeriodic) {
          // In periodic processing mode, the IntervalBudget allows positive budget
          // up to (process interval duration) * (target rate), so we only need to
          // update it once before the packet sending loop.
          media_budget_.set_target_rate_kbps(target_rate.kbps());
          UpdateBudgetWithElapsedTime(elapsed_time);
        } else {
          media_rate_ = target_rate;
        }
      }

      bool first_packet_in_probe = false;
      PacedPacketInfo pacing_info;
      DataSize recommended_probe_size = DataSize::Zero();
      bool is_probing = prober_.is_probing();
      if (is_probing) {
        // Probe timing is sensitive, and handled explicitly by BitrateProber, so
        // use actual send time rather than target.
        pacing_info = prober_.CurrentCluster(now).value_or(PacedPacketInfo());
        if (pacing_info.probe_cluster_id != PacedPacketInfo::kNotAProbe) {
          first_packet_in_probe = pacing_info.probe_cluster_bytes_sent == 0;
          recommended_probe_size = prober_.RecommendedMinProbeSize();
          RTC_DCHECK_GT(recommended_probe_size, DataSize::Zero());
        } else {
          // No valid probe cluster returned, probe might have timed out.
          is_probing = false;
        }
      }

      DataSize data_sent = DataSize::Zero();

      // The paused state is checked in the loop since it leaves the critical
      // section allowing the paused state to be changed from other code.
      while (!paused_) {
        if (first_packet_in_probe) {
          // If first packet in probe, insert a small padding packet so we have a
          // more reliable start window for the rate estimation.
          auto padding = packet_sender_->GeneratePadding(DataSize::Bytes(1));
          // If no RTP modules sending media are registered, we may not get a
          // padding packet back.
          if (!padding.empty()) {
            // Insert with high priority so larger media packets don't preempt it.
            EnqueuePacketInternal(std::move(padding[0]), kFirstPriority);
            // We should never get more than one padding packets with a requested
            // size of 1 byte.
            RTC_DCHECK_EQ(padding.size(), 1u);
          }
          first_packet_in_probe = false;
        }

        if (mode_ == ProcessMode::kDynamic &&
            previous_process_time < target_send_time) {
          // Reduce buffer levels with amount corresponding to time between last
          // process and target send time for the next packet.
          // If the process call is late, that may be the time between the optimal
          // send times for two packets we should already have sent.
          UpdateBudgetWithElapsedTime(target_send_time - previous_process_time);
          previous_process_time = target_send_time;
        }

        // Fetch the next packet, so long as queue is not empty or budget is not
        // exhausted.
        std::unique_ptr<RtpPacketToSend> rtp_packet =
            GetPendingPacket(pacing_info, target_send_time, now);

        if (rtp_packet == nullptr) {
          // No packet available to send, check if we should send padding.
          DataSize padding_to_add = PaddingToAdd(recommended_probe_size, data_sent);
          if (padding_to_add > DataSize::Zero()) {
            std::vector<std::unique_ptr<RtpPacketToSend>> padding_packets =
                packet_sender_->GeneratePadding(padding_to_add);
            if (padding_packets.empty()) {
              // No padding packets were generated, quit send loop.
              break;
            }
            for (auto& packet : padding_packets) {
              EnqueuePacket(std::move(packet));
            }
            // Continue loop to send the padding that was just added.
            continue;
          }

          // Can't fetch new packet and no padding to send, exit send loop.
          break;
        }

        RTC_DCHECK(rtp_packet);
        RTC_DCHECK(rtp_packet->packet_type().has_value());
        const RtpPacketMediaType packet_type = *rtp_packet->packet_type();
        DataSize packet_size = DataSize::Bytes(rtp_packet->payload_size() +
                                               rtp_packet->padding_size());

        if (include_overhead_) {
          packet_size += DataSize::Bytes(rtp_packet->headers_size()) +
                         transport_overhead_per_packet_;
        }

        packet_sender_->SendPacket(std::move(rtp_packet), pacing_info);
        for (auto& packet : packet_sender_->FetchFec()) {
          EnqueuePacket(std::move(packet));
        }
        data_sent += packet_size;

        // Send done, update send/process time to the target send time.
        OnPacketSent(packet_type, packet_size, target_send_time);

        // If we are currently probing, we need to stop the send loop when we have
        // reached the send target.
        if (is_probing && data_sent >= recommended_probe_size) {
          break;
        }

        if (mode_ == ProcessMode::kDynamic) {
          // Update target send time in case that are more packets that we are late
          // in processing.
          Timestamp next_send_time = NextSendTime();
          if (next_send_time.IsMinusInfinity()) {
            target_send_time = now;
          } else {
            target_send_time = std::min(now, next_send_time);
          }
        }
      }

      last_process_time_ = std::max(last_process_time_, previous_process_time);

      if (is_probing) {
        probing_send_failure_ = data_sent == DataSize::Zero();
        if (!probing_send_failure_) {
          prober_.ProbeSent(CurrentTime(), data_sent);
        }
      }
    }

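Inside `PacingController::ProcessPackets`, the data is actually sent by calling `packet_sender_->SendPacket(...)`: queued packets go out as `SendPacket(std::move(rtp_packet), pacing_info)`, and keepalive padding as `SendPacket(std::move(packet), PacedPacketInfo())`.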
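One detail of `ProcessPackets` worth isolating is the `drain_large_queues_` branch, which raises the pacing rate when the queue could not otherwise be emptied within `queue_time_limit`. The sketch below works through that arithmetic with plain integers. `TargetRateBps` is a hypothetical function, and the 2000 ms limit used in the example is an assumed value rather than one taken from the listing.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical model of the drain_large_queues_ rate adjustment.
int64_t TargetRateBps(int64_t pacing_rate_bps,
                      int64_t queue_size_bytes,
                      int64_t queue_time_limit_ms,
                      int64_t average_queue_time_ms) {
  // An empty queue never needs a higher rate.
  if (queue_size_bytes <= 0) return pacing_rate_bps;
  // Time the average packet has left before exceeding the limit, at least 1 ms.
  int64_t avg_time_left_ms = std::max<int64_t>(
      1, queue_time_limit_ms - average_queue_time_ms);
  // Rate needed to push the whole queue out within that time.
  int64_t min_rate_needed_bps = queue_size_bytes * 8 * 1000 / avg_time_left_ms;
  // The pacing rate is only ever raised, never lowered, by this step.
  return std::max(pacing_rate_bps, min_rate_needed_bps);
}
```

For instance, with a 300 kbps pacing rate, 100000 bytes queued and an average queue time of 1000 ms against the assumed 2000 ms limit, the required rate is 100000 * 8 / 1 s = 800 kbps, so the target rate is raised to 800 kbps. With only 10000 bytes queued the requirement is 80 kbps and the pacing rate stays at 300 kbps.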