Preface

Source Code Analysis

Conductor::AddTracks

```cpp
void Conductor::AddTracks() {
  if (!peer_connection_->GetSenders().empty()) {
    return;  // Already added tracks.
  }
  // Create the audio track and add it to the PeerConnection.
  rtc::scoped_refptr<webrtc::AudioTrackInterface> audio_track(
      peer_connection_factory_->CreateAudioTrack(
          kAudioLabel, peer_connection_factory_->CreateAudioSource(
                           cricket::AudioOptions())));
  auto result_or_error = peer_connection_->AddTrack(audio_track, {kStreamId});
  if (!result_or_error.ok()) {
    RTC_LOG(LS_ERROR) << "Failed to add audio track to PeerConnection: "
                      << result_or_error.error().message();
  }
  // Create the video capture device.
  rtc::scoped_refptr<CapturerTrackSource> video_device =
      CapturerTrackSource::Create();
  if (video_device) {
    // Create the video track.
    rtc::scoped_refptr<webrtc::VideoTrackInterface> video_track_(
        peer_connection_factory_->CreateVideoTrack(kVideoLabel, video_device));
    // Render the local video.
    main_wnd_->StartLocalRenderer(video_track_);
    // Add the video track.
    result_or_error = peer_connection_->AddTrack(video_track_, {kStreamId});
    if (!result_or_error.ok()) {
      RTC_LOG(LS_ERROR) << "Failed to add video track to PeerConnection: "
                        << result_or_error.error().message();
    }
  } else {
    RTC_LOG(LS_ERROR) << "OpenVideoCaptureDevice failed";
  }
  // Switch the window to the streaming (video rendering) UI.
  main_wnd_->SwitchToStreamingUI();
}
```

→ `rtc::scoped_refptr<CapturerTrackSource> video_device = CapturerTrackSource::Create();`

CapturerTrackSource::Create

```cpp
class CapturerTrackSource : public webrtc::VideoTrackSource {
 public:
  static rtc::scoped_refptr<CapturerTrackSource> Create() {
    const size_t kWidth = 640;
    const size_t kHeight = 480;
    const size_t kFps = 30;
    std::unique_ptr<webrtc::test::VcmCapturer> capturer;
    std::unique_ptr<webrtc::VideoCaptureModule::DeviceInfo> info(
        webrtc::VideoCaptureFactory::CreateDeviceInfo());
    if (!info) {
      return nullptr;
    }
    int num_devices = info->NumberOfDevices();
    // Try each capture device in turn and use the first one that opens.
    for (int i = 0; i < num_devices; ++i) {
      capturer = absl::WrapUnique(
          webrtc::test::VcmCapturer::Create(kWidth, kHeight, kFps, i));
      if (capturer) {
        return new rtc::RefCountedObject<CapturerTrackSource>(
            std::move(capturer));
      }
    }
    return nullptr;
  }
  // ... (remainder of the class elided)
```
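The loop in `Create()` simply tries every capture device index in order and keeps the first `VcmCapturer` that initializes. A minimal, WebRTC-free sketch of that first-success fallback is below; `FakeCapturer`, `TryOpenDevice`, and the `working` set that decides which devices open are hypothetical stand-ins for `VcmCapturer`, `VcmCapturer::Create`, and real hardware.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <set>

// Hypothetical stand-in for webrtc::test::VcmCapturer.
struct FakeCapturer {
  explicit FakeCapturer(std::size_t index) : device_index(index) {}
  std::size_t device_index;
};

// Hypothetical stand-in for VcmCapturer::Create: returns nullptr when the
// device at |index| cannot be opened (here: when it is not in |working|).
std::unique_ptr<FakeCapturer> TryOpenDevice(
    std::size_t index, const std::set<std::size_t>& working) {
  if (working.count(index) == 0) return nullptr;
  return std::make_unique<FakeCapturer>(index);
}

// Same shape as the loop in CapturerTrackSource::Create(): try devices
// 0..num_devices-1 and return the first capturer that opens, else nullptr.
std::unique_ptr<FakeCapturer> OpenFirstWorkingDevice(
    std::size_t num_devices, const std::set<std::size_t>& working) {
  for (std::size_t i = 0; i < num_devices; ++i) {
    if (auto capturer = TryOpenDevice(i, working)) {
      return capturer;
    }
  }
  return nullptr;
}
```

Returning `nullptr` when no index works is what lets `Conductor::AddTracks` fall into its `OpenVideoCaptureDevice failed` branch.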

→ `capturer = absl::WrapUnique(webrtc::test::VcmCapturer::Create(kWidth, kHeight, kFps, i));`

```cpp
VcmCapturer* VcmCapturer::Create(size_t width,
                                 size_t height,
                                 size_t target_fps,
                                 size_t capture_device_index) {
  std::unique_ptr<VcmCapturer> vcm_capturer(new VcmCapturer());
  if (!vcm_capturer->Init(width, height, target_fps, capture_device_index)) {
    RTC_LOG(LS_WARNING) << "Failed to create VcmCapturer(w = " << width
                        << ", h = " << height << ", fps = " << target_fps
                        << ")";
    return nullptr;
  }
  return vcm_capturer.release();
}
```
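`VcmCapturer::Create` uses a two-phase construction idiom: the object is held in a `std::unique_ptr` while `Init()` runs, so a failed initialization destroys it automatically, and ownership is handed out with `release()` only on success (the caller then re-wraps it with `absl::WrapUnique`). The sketch below reproduces the idiom with a hypothetical `Widget` class whose `Init()` result is controlled by a flag; the static `live_count` counter exists only to make destruction observable.

```cpp
#include <cassert>
#include <memory>

// Hypothetical class with a fallible Init(), standing in for VcmCapturer.
class Widget {
 public:
  static int live_count;  // Number of Widget objects currently alive.

  // Same shape as VcmCapturer::Create: returns an owning raw pointer or nullptr.
  static Widget* Create(bool init_succeeds) {
    std::unique_ptr<Widget> widget(new Widget());
    if (!widget->Init(init_succeeds)) {
      return nullptr;  // unique_ptr destroys the half-initialized object here.
    }
    return widget.release();  // Caller now owns the object.
  }

  ~Widget() { --live_count; }

 private:
  Widget() { ++live_count; }
  bool Init(bool ok) { return ok; }
};

int Widget::live_count = 0;
```

Because every early `return nullptr` path goes through the `unique_ptr` destructor, no explicit `delete` is needed on failure.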

→ `vcm_capturer->Init(width, height, target_fps, capture_device_index)`

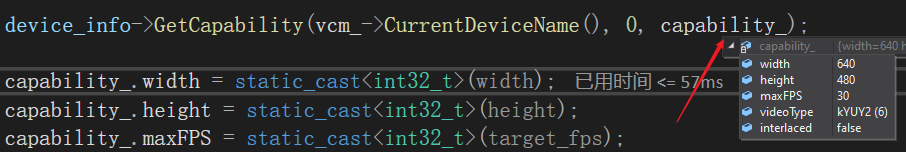

```cpp
bool VcmCapturer::Init(size_t width,
                       size_t height,
                       size_t target_fps,
                       size_t capture_device_index) {
  std::unique_ptr<VideoCaptureModule::DeviceInfo> device_info(
      VideoCaptureFactory::CreateDeviceInfo());

  // Get the device name.
  char device_name[256];
  char unique_name[256];
  if (device_info->GetDeviceName(static_cast<uint32_t>(capture_device_index),
                                 device_name, sizeof(device_name), unique_name,
                                 sizeof(unique_name)) != 0) {
    Destroy();
    return false;
  }

  // Create a VideoCaptureModule instance from the device's unique name.
  vcm_ = webrtc::VideoCaptureFactory::Create(unique_name);
  if (!vcm_) {
    return false;
  }
  // On success, register the capture-data callback; it is invoked every time
  // a video frame is captured.
  vcm_->RegisterCaptureDataCallback(this);

  // Query the device's capability.
  device_info->GetCapability(vcm_->CurrentDeviceName(), 0, capability_);

  // Overwrite capability_ with the requested parameters.
  capability_.width = static_cast<int32_t>(width);
  capability_.height = static_cast<int32_t>(height);
  capability_.maxFPS = static_cast<int32_t>(target_fps);
  capability_.videoType = VideoType::kI420;

  // Start capturing with the settings in capability_.
  if (vcm_->StartCapture(capability_) != 0) {
    Destroy();
    return false;
  }

  RTC_CHECK(vcm_->CaptureStarted());

  return true;
}
```

Get the device name

Get the device capability

At this point, the device has already started capturing.

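At this point, `rtc::scoped_refptr<CapturerTrackSource> video_device = CapturerTrackSource::Create();` has returned.

Next, the video track is created:

`rtc::scoped_refptr<webrtc::VideoTrackInterface> video_track_(peer_connection_factory_->CreateVideoTrack(kVideoLabel, video_device));`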

PeerConnectionFactory::CreateVideoTrack

```cpp
rtc::scoped_refptr<VideoTrackInterface> PeerConnectionFactory::CreateVideoTrack(
    const std::string& id,
    VideoTrackSourceInterface* source) {
  RTC_DCHECK(signaling_thread()->IsCurrent());
  rtc::scoped_refptr<VideoTrackInterface> track(
      VideoTrack::Create(id, source, worker_thread()));
  return VideoTrackProxy::Create(signaling_thread(), worker_thread(), track);
}
```

→ `rtc::scoped_refptr<VideoTrackInterface> track(VideoTrack::Create(id, source, worker_thread()));`

```cpp
rtc::scoped_refptr<VideoTrack> VideoTrack::Create(
    const std::string& id,
    VideoTrackSourceInterface* source,
    rtc::Thread* worker_thread) {
  rtc::RefCountedObject<VideoTrack>* track =
      new rtc::RefCountedObject<VideoTrack>(id, source, worker_thread);
  return track;
}

VideoTrack::VideoTrack(const std::string& label,
                       VideoTrackSourceInterface* video_source,
                       rtc::Thread* worker_thread)
    : MediaStreamTrack<VideoTrackInterface>(label),
      worker_thread_(worker_thread),
      video_source_(video_source),
      content_hint_(ContentHint::kNone) {
  // Register the track as an observer of its source.
  video_source_->RegisterObserver(this);
}

// Member of VideoTrack: the track keeps a reference to its source.
rtc::scoped_refptr<VideoTrackSourceInterface> video_source_;
```
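`VideoTrack::Create` allocates a `rtc::RefCountedObject<VideoTrack>` and returns it as a `rtc::scoped_refptr`: the wrapper template supplies the reference count and the smart pointer calls `AddRef()`/`Release()`. The simplified sketch below illustrates that intrusive reference-counting idea; `RefCountable`, `RefCounted`, `RefPtr`, `Track`, and `CreateTrack` are hypothetical names, not the WebRTC classes, and the sketch leaves out move support and other details of the real ones.

```cpp
#include <atomic>
#include <cassert>
#include <string>
#include <utility>

// Hypothetical interface exposing virtual ref-counting hooks.
class RefCountable {
 public:
  virtual void AddRef() const = 0;
  virtual bool Release() const = 0;

 protected:
  virtual ~RefCountable() = default;
};

// Adds the actual counter to T, in the spirit of rtc::RefCountedObject<T>.
// The object deletes itself when the last reference is released.
template <typename T>
class RefCounted : public T {
 public:
  template <typename... Args>
  explicit RefCounted(Args&&... args) : T(std::forward<Args>(args)...) {}

  void AddRef() const override { ref_count_.fetch_add(1); }
  bool Release() const override {
    if (ref_count_.fetch_sub(1) == 1) {
      delete this;
      return true;  // This call destroyed the object.
    }
    return false;
  }

 private:
  mutable std::atomic<int> ref_count_{0};
};

// Hypothetical track type; like VideoTrack, it is only created wrapped in
// RefCounted<>, which supplies AddRef/Release.
class Track : public RefCountable {
 public:
  static int alive;  // Number of Track objects currently alive.
  explicit Track(std::string id) : id_(std::move(id)) { ++alive; }
  ~Track() override { --alive; }
  const std::string& id() const { return id_; }

 private:
  std::string id_;
};
int Track::alive = 0;

// Minimal analogue of rtc::scoped_refptr<T>: each RefPtr holds one reference.
template <typename T>
class RefPtr {
 public:
  RefPtr() = default;
  explicit RefPtr(T* p) : ptr_(p) {
    if (ptr_) ptr_->AddRef();
  }
  RefPtr(const RefPtr& other) : RefPtr(other.ptr_) {}
  RefPtr& operator=(RefPtr other) {  // Copy-and-swap.
    std::swap(ptr_, other.ptr_);
    return *this;
  }
  ~RefPtr() {
    if (ptr_) ptr_->Release();
  }
  T* get() const { return ptr_; }
  T* operator->() const { return ptr_; }

 private:
  T* ptr_ = nullptr;
};

// Shaped like VideoTrack::Create: allocate the ref-counted wrapper and hand it
// out through the smart pointer, which takes the first reference.
RefPtr<Track> CreateTrack(const std::string& id) {
  return RefPtr<Track>(new RefCounted<Track>(id));
}
```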

MainWnd::StartLocalRenderer

```cpp
void MainWnd::StartLocalRenderer(webrtc::VideoTrackInterface* local_video) {
  local_renderer_.reset(new VideoRenderer(handle(), 1, 1, local_video));
}

MainWnd::VideoRenderer::VideoRenderer(
    HWND wnd,
    int width,
    int height,
    webrtc::VideoTrackInterface* track_to_render)
    : wnd_(wnd), rendered_track_(track_to_render) {
  ::InitializeCriticalSection(&buffer_lock_);
  ZeroMemory(&bmi_, sizeof(bmi_));
  bmi_.bmiHeader.biSize = sizeof(BITMAPINFOHEADER);
  bmi_.bmiHeader.biPlanes = 1;
  bmi_.bmiHeader.biBitCount = 32;
  bmi_.bmiHeader.biCompression = BI_RGB;
  bmi_.bmiHeader.biWidth = width;
  bmi_.bmiHeader.biHeight = -height;  // Negative height: top-down DIB.
  bmi_.bmiHeader.biSizeImage =
      width * height * (bmi_.bmiHeader.biBitCount >> 3);
  // Register this renderer as the receiver of video data. To receive video
  // frames, an object implements VideoSinkInterface, which exposes OnFrame.
  // Once the sink is registered with the source via AddOrUpdateSink, the
  // source passes every frame to the sink through OnFrame.
  rendered_track_->AddOrUpdateSink(this, rtc::VideoSinkWants());
}
```

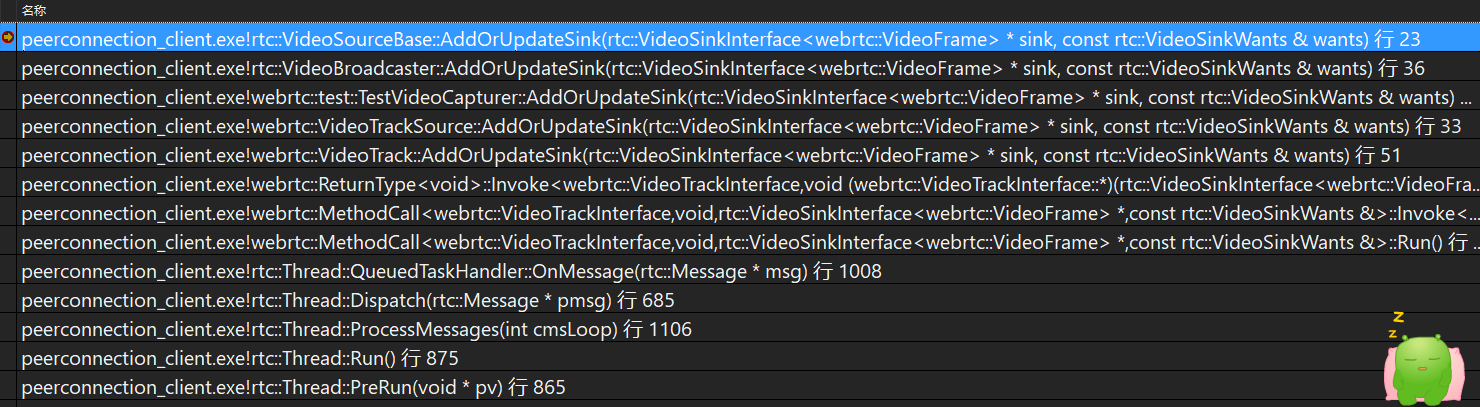
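The comment in the `VideoRenderer` constructor describes the source/sink contract. A stripped-down sketch of that contract, independent of WebRTC, follows: `Frame`, `SinkInterface`, `FrameSource`, and `CountingRenderer` are hypothetical types, `AddSink`/`DeliverFrame` stand in for `AddOrUpdateSink` and the capturer's frame callback, and sink wants are omitted.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical frame type; WebRTC uses webrtc::VideoFrame.
struct Frame {
  int width;
  int height;
};

// Analogue of rtc::VideoSinkInterface<VideoFrame>: consumers implement OnFrame.
class SinkInterface {
 public:
  virtual ~SinkInterface() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

// Analogue of a video source: keeps registered sinks and pushes frames to them.
class FrameSource {
 public:
  void AddSink(SinkInterface* sink) {
    assert(sink != nullptr);
    sinks_.push_back(sink);
  }
  void RemoveSink(SinkInterface* sink) {
    sinks_.erase(std::remove(sinks_.begin(), sinks_.end(), sink), sinks_.end());
  }
  // Called by the capturer whenever a new frame is produced.
  void DeliverFrame(const Frame& frame) {
    for (SinkInterface* sink : sinks_) sink->OnFrame(frame);
  }

 private:
  std::vector<SinkInterface*> sinks_;
};

// Plays the role of MainWnd::VideoRenderer: records what it would draw.
class CountingRenderer : public SinkInterface {
 public:
  void OnFrame(const Frame& frame) override {
    ++frames_received;
    last_width = frame.width;
  }
  int frames_received = 0;
  int last_width = 0;
};
```

The renderer never pulls frames; it only reacts to `OnFrame`, which is why registering it once in the constructor is enough.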

→ `rendered_track_->AddOrUpdateSink(this, rtc::VideoSinkWants());`

```cpp
// AddOrUpdateSink and RemoveSink should be called on the worker
// thread.
void VideoTrack::AddOrUpdateSink(rtc::VideoSinkInterface<VideoFrame>* sink,
                                 const rtc::VideoSinkWants& wants) {
  RTC_DCHECK(worker_thread_->IsCurrent());
  VideoSourceBase::AddOrUpdateSink(sink, wants);
  rtc::VideoSinkWants modified_wants = wants;
  modified_wants.black_frames = !enabled();
  video_source_->AddOrUpdateSink(sink, modified_wants);
}
```
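`VideoTrack::AddOrUpdateSink` does not forward the caller's `VideoSinkWants` unchanged: it copies them and sets `black_frames` to `!enabled()`, so a disabled track asks its source for black frames. The sketch below isolates only that copy-and-modify step; `Wants`, `RecordingSource`, and `Track` are hypothetical simplifications (the real `rtc::VideoSinkWants` has many more fields), and in this sketch the flag is applied only when a sink is added or updated.

```cpp
#include <cassert>

// Hypothetical, trimmed-down stand-in for rtc::VideoSinkWants.
struct Wants {
  bool black_frames = false;
  int max_framerate_fps = 30;
};

// Hypothetical source that only remembers the last wants it was given.
struct RecordingSource {
  Wants last_wants;
  void AddOrUpdateSink(const Wants& wants) { last_wants = wants; }
};

// Mirrors the logic of VideoTrack::AddOrUpdateSink: copy the caller's wants,
// force black_frames according to the enabled state, then forward the copy.
class Track {
 public:
  explicit Track(RecordingSource* source) : source_(source) {}
  void set_enabled(bool enabled) { enabled_ = enabled; }
  bool enabled() const { return enabled_; }

  void AddOrUpdateSink(const Wants& wants) {
    Wants modified_wants = wants;  // The caller's wants are left untouched.
    modified_wants.black_frames = !enabled();
    source_->AddOrUpdateSink(modified_wants);
  }

 private:
  RecordingSource* source_;
  bool enabled_ = true;
};
```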

→ `video_source_->AddOrUpdateSink(sink, modified_wants);`

```cpp
void VideoTrackSource::AddOrUpdateSink(
    rtc::VideoSinkInterface<VideoFrame>* sink,
    const rtc::VideoSinkWants& wants) {
  RTC_DCHECK(worker_thread_checker_.IsCurrent());
  source()->AddOrUpdateSink(sink, wants);
}
```

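→ `source()->AddOrUpdateSink(sink, wants);` (for `CapturerTrackSource`, `source()` returns the `VcmCapturer`, whose base class is `TestVideoCapturer`)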

```cpp
void TestVideoCapturer::AddOrUpdateSink(
    rtc::VideoSinkInterface<VideoFrame>* sink,
    const rtc::VideoSinkWants& wants) {
  broadcaster_.AddOrUpdateSink(sink, wants);
  UpdateVideoAdapter();
}
```

→ `broadcaster_.AddOrUpdateSink(sink, wants);`

```cpp
void VideoBroadcaster::AddOrUpdateSink(
    VideoSinkInterface<webrtc::VideoFrame>* sink,
    const VideoSinkWants& wants) {
  RTC_DCHECK(sink != nullptr);
  webrtc::MutexLock lock(&sinks_and_wants_lock_);
  if (!FindSinkPair(sink)) {
    // |Sink| is a new sink, which didn't receive previous frame.
    previous_frame_sent_to_all_sinks_ = false;
  }
  VideoSourceBase::AddOrUpdateSink(sink, wants);
  UpdateWants();
}
```
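`VideoBroadcaster::AddOrUpdateSink` clears `previous_frame_sent_to_all_sinks_` under a mutex when a sink is registered for the first time, because that sink never received the previous frame; re-registering a known sink leaves the flag alone. The sketch below models only that bookkeeping. `Sink`, `Broadcaster`, and `MarkFrameDeliveredToAllSinks()` are hypothetical, and both the initial `true` value and the idea that delivering a frame to every sink sets the flag back to `true` are assumptions made for illustration, since the excerpt does not show where the flag is consumed.

```cpp
#include <algorithm>
#include <cassert>
#include <mutex>
#include <vector>

struct Sink {};  // Hypothetical placeholder sink type.

// Mirrors the flag handling in VideoBroadcaster::AddOrUpdateSink.
class Broadcaster {
 public:
  void AddOrUpdateSink(Sink* sink) {
    assert(sink != nullptr);
    std::lock_guard<std::mutex> lock(mutex_);
    if (std::find(sinks_.begin(), sinks_.end(), sink) == sinks_.end()) {
      // New sink: it did not receive the previous frame.
      previous_frame_sent_to_all_sinks_ = false;
      sinks_.push_back(sink);
    }
  }

  // Assumption of this sketch: once a frame reaches every sink, the flag is
  // restored.
  void MarkFrameDeliveredToAllSinks() {
    std::lock_guard<std::mutex> lock(mutex_);
    previous_frame_sent_to_all_sinks_ = true;
  }

  bool previous_frame_sent_to_all_sinks() {
    std::lock_guard<std::mutex> lock(mutex_);
    return previous_frame_sent_to_all_sinks_;
  }

 private:
  std::mutex mutex_;
  std::vector<Sink*> sinks_;
  bool previous_frame_sent_to_all_sinks_ = true;
};
```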

→ `VideoSourceBase::AddOrUpdateSink(sink, wants);`

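```cpp
void VideoSourceBase::AddOrUpdateSink(
    VideoSinkInterface<webrtc::VideoFrame>* sink,
    const VideoSinkWants& wants) {
  RTC_DCHECK(sink != nullptr);

  SinkPair* sink_pair = FindSinkPair(sink);
  if (!sink_pair) {
    sinks_.push_back(SinkPair(sink, wants));
  } else {
    sink_pair->wants = wants;
  }
}

// Member of VideoSourceBase:
std::vector<SinkPair> sinks_;
```

This adds the incoming sink to the `sinks_` container (or, if the sink is already registered, only updates its wants). When video data arrives, it is distributed to every sink in the container.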
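The find-or-update logic above, plus the fan-out it enables, can be sketched without WebRTC as follows. `Frame`, `Wants`, `Sink`, `SourceBase`, `CountingSink`, and the `Broadcast` method are hypothetical; in WebRTC the per-sink loop lives in `VideoBroadcaster::OnFrame`, which this sketch only approximates.

```cpp
#include <cassert>
#include <vector>

struct Frame { int id; };            // Hypothetical frame type.
struct Wants { int max_fps = 30; };  // Hypothetical, trimmed-down wants.

class Sink {
 public:
  virtual ~Sink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

// Mirrors VideoSourceBase: a vector of (sink, wants) pairs where re-adding an
// existing sink updates its wants instead of inserting a duplicate.
class SourceBase {
 public:
  struct SinkPair {
    SinkPair(Sink* s, Wants w) : sink(s), wants(w) {}
    Sink* sink;
    Wants wants;
  };

  void AddOrUpdateSink(Sink* sink, const Wants& wants) {
    assert(sink != nullptr);
    SinkPair* sink_pair = FindSinkPair(sink);
    if (!sink_pair) {
      sinks_.push_back(SinkPair(sink, wants));
    } else {
      sink_pair->wants = wants;
    }
  }

  // Distributes one frame to every registered sink.
  void Broadcast(const Frame& frame) {
    for (SinkPair& pair : sinks_) pair.sink->OnFrame(frame);
  }

  const std::vector<SinkPair>& sinks() const { return sinks_; }

 private:
  SinkPair* FindSinkPair(const Sink* sink) {
    for (SinkPair& pair : sinks_) {
      if (pair.sink == sink) return &pair;
    }
    return nullptr;
  }

  std::vector<SinkPair> sinks_;
};

class CountingSink : public Sink {
 public:
  void OnFrame(const Frame&) override { ++count; }
  int count = 0;
};
```

Keying the vector by sink pointer is what makes `AddOrUpdateSink` safe to call repeatedly, for example when a renderer changes its wants.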