h:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\video_capture\windows\video_capture_ds.cc

VideoCaptureDS::Init

```cpp
int32_t VideoCaptureDS::Init(const char* deviceUniqueIdUTF8) {
  // ...

  // Temporary connect here.
  // This is done so that no one else can use the capture device.
  if (SetCameraOutput(_requestedCapability) != 0) {
    return -1;
  }

  // ...

  return 0;
}
```

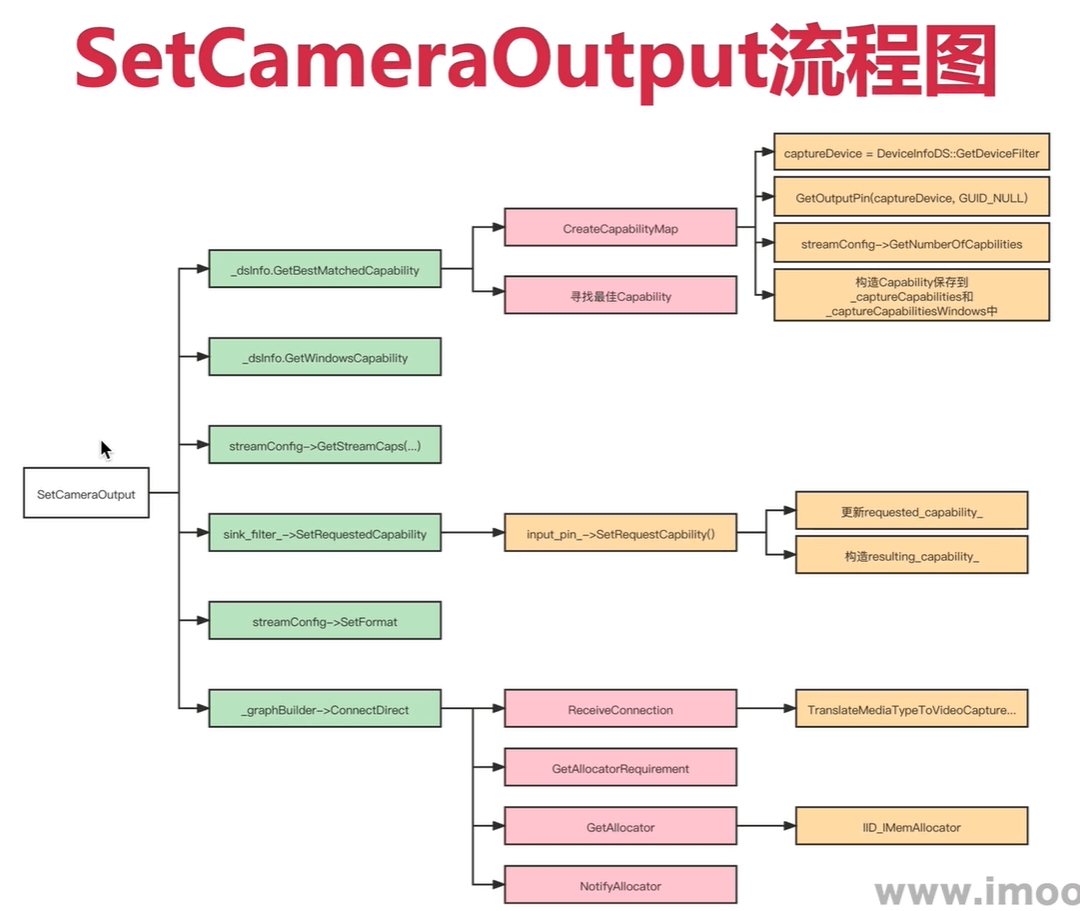

VideoCaptureDS::SetCameraOutput

```cpp
int32_t VideoCaptureDS::SetCameraOutput(
    const VideoCaptureCapability& requestedCapability) {
  // Get the best matching capability
  VideoCaptureCapability capability;
  int32_t capabilityIndex;

  // Store the new requested size
  _requestedCapability = requestedCapability;
  // 1. Match the requested capability with the supported ones.
  // GetBestMatchedCapability calls CreateCapabilityMap (unless the map is
  // already cached for this device) to list every capability the device
  // supports, then returns the index of the best match.
  if ((capabilityIndex = _dsInfo.GetBestMatchedCapability(
           _deviceUniqueId, _requestedCapability, capability)) < 0) {
    return -1;
  }
  // Reduce the frame rate if possible.
  if (capability.maxFPS > requestedCapability.maxFPS) {
    capability.maxFPS = requestedCapability.maxFPS;
  } else if (capability.maxFPS <= 0) {
    capability.maxFPS = 30;
  }

  // 2. Convert it to the windows capability index since they are not
  // necessarily the same.
  // Look up the full Windows capability for the chosen index.
  VideoCaptureCapabilityWindows windowsCapability;
  if (_dsInfo.GetWindowsCapability(capabilityIndex, windowsCapability) != 0) {
    return -1;
  }

  IAMStreamConfig* streamConfig = NULL;
  AM_MEDIA_TYPE* pmt = NULL;
  VIDEO_STREAM_CONFIG_CAPS caps;

  HRESULT hr = _outputCapturePin->QueryInterface(IID_IAMStreamConfig,
                                                 (void**)&streamConfig);
  if (hr) {
    RTC_LOG(LS_INFO) << "Can't get the Capture format settings.";
    return -1;
  }

  // Get the windows capability from the capture device,
  // so that it can be modified below.
  bool isDVCamera = false;
  hr = streamConfig->GetStreamCaps(windowsCapability.directShowCapabilityIndex,
                                   &pmt, reinterpret_cast<BYTE*>(&caps));
  if (hr == S_OK) {
    if (pmt->formattype == FORMAT_VideoInfo2) {
      VIDEOINFOHEADER2* h = reinterpret_cast<VIDEOINFOHEADER2*>(pmt->pbFormat);
      if (capability.maxFPS > 0 && windowsCapability.supportFrameRateControl) {
        h->AvgTimePerFrame = REFERENCE_TIME(10000000.0 / capability.maxFPS);
      }
    } else {  // My test machine takes this branch (FORMAT_VideoInfo).
      VIDEOINFOHEADER* h = reinterpret_cast<VIDEOINFOHEADER*>(pmt->pbFormat);
      if (capability.maxFPS > 0 && windowsCapability.supportFrameRateControl) {
        h->AvgTimePerFrame = REFERENCE_TIME(10000000.0 / capability.maxFPS);
      }
    }

    // Set the sink filter to request this capability.
    sink_filter_->SetRequestedCapability(capability);
    // Order the capture device to use this capability (the final format).
    hr += streamConfig->SetFormat(pmt);

    // Check if this is a DV camera and we need to add MS DV Filter
    if (pmt->subtype == MEDIASUBTYPE_dvsl ||
        pmt->subtype == MEDIASUBTYPE_dvsd || pmt->subtype == MEDIASUBTYPE_dvhd)
      isDVCamera = true;  // This is a DV camera. Use MS DV filter
  }
  RELEASE_AND_CLEAR(streamConfig);

  if (FAILED(hr)) {
    RTC_LOG(LS_INFO) << "Failed to set capture device output format";
    return -1;
  }

  if (isDVCamera) {
    hr = ConnectDVCamera();
  } else {
    // 3. Connect the pins; this call ends up in
    // CaptureInputPin::ReceiveConnection.
    hr = _graphBuilder->ConnectDirect(_outputCapturePin, _inputSendPin, NULL);
  }
  if (hr != S_OK) {
    RTC_LOG(LS_INFO) << "Failed to connect the Capture graph " << hr;
    return -1;
  }
  return 0;
}
```
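
The frame-rate handling in SetCameraOutput comes down to two pieces of arithmetic. First, the device's maximum rate is clamped to the requested one, with a default of 30 fps when the device reports no usable rate. Second, that rate is expressed as `AvgTimePerFrame`, a DirectShow `REFERENCE_TIME` counted in 100-nanosecond units. The sketch below reproduces only that arithmetic in portable C++. `ChooseFps`, `FpsToAvgTimePerFrame` and the `ReferenceTime` alias are hypothetical names, not WebRTC or DirectShow APIs.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for DirectShow's REFERENCE_TIME: a 64-bit count of
// 100 ns units, so one second is 10,000,000 units.
using ReferenceTime = int64_t;
constexpr double kUnitsPerSecond = 10000000.0;

// Mirrors the "Reduce the frame rate if possible" step: never ask for more
// fps than requested, and fall back to 30 fps if the device reported <= 0.
int ChooseFps(int deviceMaxFps, int requestedMaxFps) {
  if (deviceMaxFps > requestedMaxFps) return requestedMaxFps;
  if (deviceMaxFps <= 0) return 30;
  return deviceMaxFps;
}

// Mirrors AvgTimePerFrame = REFERENCE_TIME(10000000.0 / maxFPS); the cast
// truncates the fractional part. Only called when fps > 0, as in the original.
ReferenceTime FpsToAvgTimePerFrame(int fps) {
  assert(fps > 0);
  return static_cast<ReferenceTime>(kUnitsPerSecond / fps);
}
```

For example, a 30 fps capability yields an `AvgTimePerFrame` of 333333, because the cast discards the fractional part.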

-> 1. _dsInfo.GetBestMatchedCapability

DeviceInfoImpl::GetBestMatchedCapability

```cpp
int32_t DeviceInfoImpl::GetBestMatchedCapability(
    const char* deviceUniqueIdUTF8,
    const VideoCaptureCapability& requested,  // input, read-only
    VideoCaptureCapability& resulting) {      // output, filled in on success
  if (!deviceUniqueIdUTF8)
    return -1;

  MutexLock lock(&_apiLock);
  if (!absl::EqualsIgnoreCase(
          deviceUniqueIdUTF8,
          absl::string_view(_lastUsedDeviceName, _lastUsedDeviceNameLength))) {
    if (-1 == CreateCapabilityMap(deviceUniqueIdUTF8)) {
      return -1;
    }
  }

  // Find the supported capability closest to the requested one:
  // height first, then width, then frame rate, then video type.
  int32_t bestformatIndex = -1;
  int32_t bestWidth = 0;
  int32_t bestHeight = 0;
  int32_t bestFrameRate = 0;
  VideoType bestVideoType = VideoType::kUnknown;

  const int32_t numberOfCapabilies =
      static_cast<int32_t>(_captureCapabilities.size());

  for (int32_t tmp = 0; tmp < numberOfCapabilies; ++tmp) {
    // Loop through all capabilities
    VideoCaptureCapability& capability = _captureCapabilities[tmp];

    const int32_t diffWidth = capability.width - requested.width;
    const int32_t diffHeight = capability.height - requested.height;
    const int32_t diffFrameRate = capability.maxFPS - requested.maxFPS;

    const int32_t currentbestDiffWith = bestWidth - requested.width;
    const int32_t currentbestDiffHeight = bestHeight - requested.height;
    const int32_t currentbestDiffFrameRate = bestFrameRate - requested.maxFPS;

    // Height better than or equal to the previous best.
    if ((diffHeight >= 0 && diffHeight <= abs(currentbestDiffHeight)) ||
        (currentbestDiffHeight < 0 && diffHeight >= currentbestDiffHeight)) {
      if (diffHeight == currentbestDiffHeight) {
        // Found best height. Care about the width.
        // Width better than or equal to the previous best.
        if ((diffWidth >= 0 && diffWidth <= abs(currentbestDiffWith)) ||
            (currentbestDiffWith < 0 && diffWidth >= currentbestDiffWith)) {
          if (diffWidth == currentbestDiffWith &&
              diffHeight == currentbestDiffHeight) {
            // Same size as previously. Also check the best frame rate:
            //  - frame rate too high, but a better match than previously
            //    (and we have not selected IUV), or
            //  - current frame rate is lower than requested; this is better.
            if ((diffFrameRate >= 0 &&
                 diffFrameRate <= currentbestDiffFrameRate) ||
                (currentbestDiffFrameRate < 0 &&
                 diffFrameRate >= currentbestDiffFrameRate)) {
              // Same frame rate as previously, or frame rate already good
              // enough.
              if ((currentbestDiffFrameRate == diffFrameRate) ||
                  (currentbestDiffFrameRate >= 0)) {
                if (bestVideoType != requested.videoType &&
                    requested.videoType != VideoType::kUnknown &&
                    (capability.videoType == requested.videoType ||
                     capability.videoType == VideoType::kI420 ||
                     capability.videoType == VideoType::kYUY2 ||
                     capability.videoType == VideoType::kYV12)) {
                  bestVideoType = capability.videoType;
                  bestformatIndex = tmp;
                }
                // If width, height and frame rate are fulfilled we can use the
                // camera for encoding if it is supported.
                if (capability.height == requested.height &&
                    capability.width == requested.width &&
                    capability.maxFPS >= requested.maxFPS) {
                  bestformatIndex = tmp;
                }
              } else {  // Better frame rate
                bestWidth = capability.width;
                bestHeight = capability.height;
                bestFrameRate = capability.maxFPS;
                bestVideoType = capability.videoType;
                bestformatIndex = tmp;
              }
            }
          } else {  // Better width than previously
            bestWidth = capability.width;
            bestHeight = capability.height;
            bestFrameRate = capability.maxFPS;
            bestVideoType = capability.videoType;
            bestformatIndex = tmp;
          }
        }  // else width no good
      } else {  // Better height
        bestWidth = capability.width;
        bestHeight = capability.height;
        bestFrameRate = capability.maxFPS;
        bestVideoType = capability.videoType;
        bestformatIndex = tmp;
      }
    }  // else height not good
  }  // end for

  RTC_LOG(LS_VERBOSE) << "Best camera format: " << bestWidth << "x"
                      << bestHeight << "@" << bestFrameRate
                      << "fps, color format: "
                      << static_cast<int>(bestVideoType);

  // Copy the capability
  if (bestformatIndex < 0)
    return -1;
  resulting = _captureCapabilities[bestformatIndex];
  return bestformatIndex;
}

// Default implementation. This should be overridden by Mobile implementations.
int32_t DeviceInfoImpl::GetOrientation(const char* deviceUniqueIdUTF8,
                                       VideoRotation& orientation) {
  orientation = kVideoRotation_0;
  return -1;
}
```
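
The nested conditions in GetBestMatchedCapability are easier to follow when flattened with early `continue`s. Below is a portable sketch of the same selection rules. It assumes hypothetical stand-in types (`Capability` and a reduced `VideoType` enum) and a hypothetical `BestMatchIndex` function. The mutex, the CreateCapabilityMap cache check and the logging are left out, so treat it as a study aid rather than WebRTC code.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical stand-ins holding only the fields the matcher reads.
enum class VideoType { kUnknown, kI420, kYUY2, kYV12, kMJPEG, kRGB24 };

struct Capability {
  int32_t width;
  int32_t height;
  int32_t maxFPS;
  VideoType videoType;
};

// Same rules as the loop above; returns the chosen index or -1.
int32_t BestMatchIndex(const std::vector<Capability>& caps,
                       const Capability& requested) {
  int32_t bestIndex = -1;
  int32_t bestWidth = 0, bestHeight = 0, bestFrameRate = 0;
  VideoType bestVideoType = VideoType::kUnknown;

  for (int32_t i = 0; i < static_cast<int32_t>(caps.size()); ++i) {
    const Capability& c = caps[i];
    const int32_t diffWidth = c.width - requested.width;
    const int32_t diffHeight = c.height - requested.height;
    const int32_t diffFrameRate = c.maxFPS - requested.maxFPS;
    const int32_t bestDiffWidth = bestWidth - requested.width;
    const int32_t bestDiffHeight = bestHeight - requested.height;
    const int32_t bestDiffFrameRate = bestFrameRate - requested.maxFPS;

    // Make entry i the new best (the "Better ..." branches).
    auto take = [&] {
      bestWidth = c.width;
      bestHeight = c.height;
      bestFrameRate = c.maxFPS;
      bestVideoType = c.videoType;
      bestIndex = i;
    };

    // 1. Height: not farther above than the best, or closer from below.
    const bool heightOk =
        (diffHeight >= 0 && diffHeight <= std::abs(bestDiffHeight)) ||
        (bestDiffHeight < 0 && diffHeight >= bestDiffHeight);
    if (!heightOk) continue;
    if (diffHeight != bestDiffHeight) { take(); continue; }

    // 2. Width: same rule, only reached when heights tie.
    const bool widthOk =
        (diffWidth >= 0 && diffWidth <= std::abs(bestDiffWidth)) ||
        (bestDiffWidth < 0 && diffWidth >= bestDiffWidth);
    if (!widthOk) continue;
    if (diffWidth != bestDiffWidth) { take(); continue; }

    // 3. Same size: compare frame rate (no abs() here, as in the original).
    const bool fpsOk =
        (diffFrameRate >= 0 && diffFrameRate <= bestDiffFrameRate) ||
        (bestDiffFrameRate < 0 && diffFrameRate >= bestDiffFrameRate);
    if (!fpsOk) continue;
    if (bestDiffFrameRate == diffFrameRate || bestDiffFrameRate >= 0) {
      // 4. Frame rate ties or is good enough: prefer a better pixel format.
      if (bestVideoType != requested.videoType &&
          requested.videoType != VideoType::kUnknown &&
          (c.videoType == requested.videoType ||
           c.videoType == VideoType::kI420 ||
           c.videoType == VideoType::kYUY2 ||
           c.videoType == VideoType::kYV12)) {
        bestVideoType = c.videoType;
        bestIndex = i;
      }
      if (c.height == requested.height && c.width == requested.width &&
          c.maxFPS >= requested.maxFPS) {
        bestIndex = i;
      }
    } else {
      take();  // better frame rate
    }
  }
  return bestIndex;
}
```

Because height is compared first, a 1280x720 entry cannot displace a 640x480 entry that already matches a 640x480 request exactly. Among entries of equal size and frame rate, an I420 entry takes over from a YUY2 one when I420 was requested.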

When the capability map for this device is not cached yet, it calls DeviceInfoDS::CreateCapabilityMap:

```cpp
int32_t DeviceInfoDS::CreateCapabilityMap(const char* deviceUniqueIdUTF8) {
  // Reset old capability list
  _captureCapabilities.clear();

  const int32_t deviceUniqueIdUTF8Length =
      (int32_t)strlen((char*)deviceUniqueIdUTF8);
  if (deviceUniqueIdUTF8Length > kVideoCaptureUniqueNameLength) {
    RTC_LOG(LS_INFO) << "Device name too long";
    return -1;
  }
  RTC_LOG(LS_INFO) << "CreateCapabilityMap called for device "
                   << deviceUniqueIdUTF8;

  char productId[kVideoCaptureProductIdLength];
  IBaseFilter* captureDevice = DeviceInfoDS::GetDeviceFilter(
      deviceUniqueIdUTF8, productId, kVideoCaptureProductIdLength);
  if (!captureDevice)
    return -1;
  IPin* outputCapturePin = GetOutputPin(captureDevice, GUID_NULL);
  if (!outputCapturePin) {
    RTC_LOG(LS_INFO) << "Failed to get capture device output pin";
    RELEASE_AND_CLEAR(captureDevice);
    return -1;
  }
  IAMExtDevice* extDevice = NULL;
  HRESULT hr =
      captureDevice->QueryInterface(IID_IAMExtDevice, (void**)&extDevice);
  if (SUCCEEDED(hr) && extDevice) {
    RTC_LOG(LS_INFO) << "This is an external device";
    extDevice->Release();
  }

  IAMStreamConfig* streamConfig = NULL;
  hr = outputCapturePin->QueryInterface(IID_IAMStreamConfig,
                                        (void**)&streamConfig);
  if (FAILED(hr)) {
    RTC_LOG(LS_INFO) << "Failed to get IID_IAMStreamConfig interface "
                        "from capture device";
    return -1;
  }

  // this gets the FPS
  IAMVideoControl* videoControlConfig = NULL;
  HRESULT hrVC = captureDevice->QueryInterface(IID_IAMVideoControl,
                                               (void**)&videoControlConfig);
  if (FAILED(hrVC)) {
    RTC_LOG(LS_INFO) << "IID_IAMVideoControl Interface NOT SUPPORTED";
  }

  AM_MEDIA_TYPE* pmt = NULL;
  VIDEO_STREAM_CONFIG_CAPS caps;
  int count, size;

  // On my test machine count = 5 and size = 128: the device exposes
  // 5 capabilities, and each capability structure takes 128 bytes.
  hr = streamConfig->GetNumberOfCapabilities(&count, &size);
  if (FAILED(hr)) {
    RTC_LOG(LS_INFO) << "Failed to GetNumberOfCapabilities";
    RELEASE_AND_CLEAR(videoControlConfig);
    RELEASE_AND_CLEAR(streamConfig);
    RELEASE_AND_CLEAR(outputCapturePin);
    RELEASE_AND_CLEAR(captureDevice);
    return -1;
  }

  // Check if the device supports formattype == FORMAT_VideoInfo2 and
  // FORMAT_VideoInfo. Prefer FORMAT_VideoInfo since some cameras (ZureCam) have
  // been seen having problems with MJPEG and FORMAT_VideoInfo2. The interlace
  // flag is only supported in FORMAT_VideoInfo2.
  bool supportFORMAT_VideoInfo2 = false;
  bool supportFORMAT_VideoInfo = false;
  bool foundInterlacedFormat = false;
  GUID preferedVideoFormat = FORMAT_VideoInfo;
  for (int32_t tmp = 0; tmp < count; ++tmp) {
    hr = streamConfig->GetStreamCaps(tmp, &pmt, reinterpret_cast<BYTE*>(&caps));
    if (hr == S_OK) {
      if (pmt->majortype == MEDIATYPE_Video &&
          pmt->formattype == FORMAT_VideoInfo2) {
        // ...
      }
      if (pmt->majortype == MEDIATYPE_Video &&
          pmt->formattype == FORMAT_VideoInfo) {
        // (sic) The log text says VideoInfo2, but this branch is FORMAT_VideoInfo.
        RTC_LOG(LS_INFO) << "Device support FORMAT_VideoInfo2";
        supportFORMAT_VideoInfo = true;  // The only format my test machine supports.
      }
    }
  }
  if (supportFORMAT_VideoInfo2) {
    if (supportFORMAT_VideoInfo && !foundInterlacedFormat) {
      preferedVideoFormat = FORMAT_VideoInfo;
    } else {
      preferedVideoFormat = FORMAT_VideoInfo2;
    }
  }

  for (int32_t tmp = 0; tmp < count; ++tmp) {
    hr = streamConfig->GetStreamCaps(tmp, &pmt, reinterpret_cast<BYTE*>(&caps));
    if (hr != S_OK) {
      RTC_LOG(LS_INFO) << "Failed to GetStreamCaps";
      RELEASE_AND_CLEAR(videoControlConfig);
      RELEASE_AND_CLEAR(streamConfig);
      RELEASE_AND_CLEAR(outputCapturePin);
      RELEASE_AND_CLEAR(captureDevice);
      return -1;
    }

    if (pmt->majortype == MEDIATYPE_Video &&
        pmt->formattype == preferedVideoFormat) {
      VideoCaptureCapabilityWindows capability;
      int64_t avgTimePerFrame = 0;

      if (pmt->formattype == FORMAT_VideoInfo) {
        VIDEOINFOHEADER* h = reinterpret_cast<VIDEOINFOHEADER*>(pmt->pbFormat);
        assert(h);
        capability.directShowCapabilityIndex = tmp;
        capability.width = h->bmiHeader.biWidth;
        capability.height = h->bmiHeader.biHeight;
        avgTimePerFrame = h->AvgTimePerFrame;
      }
      // ...

      if (hrVC == S_OK) {
        LONGLONG* frameDurationList;
        LONGLONG maxFPS;
        long listSize;
        SIZE size;
        size.cx = capability.width;
        size.cy = capability.height;

        // GetMaxAvailableFrameRate doesn't always return the max frame rate,
        // e.g. on a Logitech Notebook camera. This may be due to a bug in that
        // API, because the GetFrameRateList array is reversed on that camera.
        // So a util method was written. Can't assume the first value will
        // return the max fps.
        hrVC = videoControlConfig->GetFrameRateList(
            outputCapturePin, tmp, size, &listSize, &frameDurationList);

        // On some odd cameras, you may get a 0 for duration.
        // GetMaxOfFrameArray returns the lowest duration (highest FPS)
        if (hrVC == S_OK && listSize > 0 &&
            0 != (maxFPS = GetMaxOfFrameArray(frameDurationList, listSize))) {
          capability.maxFPS = static_cast<int>(10000000 / maxFPS);  // 30 fps on my test machine
          capability.supportFrameRateControl = true;
        }
        // ...

      // can't switch MEDIATYPE :~(
      if (pmt->subtype == MEDIASUBTYPE_I420) {
        capability.videoType = VideoType::kI420;
      } else if (pmt->subtype == MEDIASUBTYPE_IYUV) {
        capability.videoType = VideoType::kIYUV;
      } else if (pmt->subtype == MEDIASUBTYPE_RGB24) {
        capability.videoType = VideoType::kRGB24;
      } else if (pmt->subtype == MEDIASUBTYPE_YUY2) {
        capability.videoType = VideoType::kYUY2;  // The type my test machine supports.
      } else if (pmt->subtype == MEDIASUBTYPE_RGB565) {
        capability.videoType = VideoType::kRGB565;
      } else if (pmt->subtype == MEDIASUBTYPE_MJPG) {
        capability.videoType = VideoType::kMJPEG;
      }
      // ...

      _captureCapabilities.push_back(capability);
      _captureCapabilitiesWindows.push_back(capability);
      RTC_LOG(LS_INFO) << "Camera capability, width:" << capability.width
                       << " height:" << capability.height
                       << " type:" << static_cast<int>(capability.videoType)
                       << " fps:" << capability.maxFPS;
    }
    FreeMediaType(pmt);
    pmt = NULL;
  }
  // ...

  return static_cast<int32_t>(_captureCapabilities.size());
}
```
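
Two decisions in CreateCapabilityMap can be isolated and tested on their own. The first is which format block to enumerate (the preferred format). The second is how the maximum frame rate is derived from the duration list that GetFrameRateList returns. Here is a sketch that uses the hypothetical names `FormatType`, `PreferredFormat` and `MaxFpsFromDurations`. The interlace flag is passed in as an input because the excerpt above elides how it is computed. The shortest duration is found with `std::min_element` rather than WebRTC's GetMaxOfFrameArray helper.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the FORMAT_VideoInfo / FORMAT_VideoInfo2 GUIDs.
enum class FormatType { kVideoInfo, kVideoInfo2 };

// Mirrors the selection after the first loop: VideoInfo is the default, and
// VideoInfo2 wins only if the device offers it and either lacks VideoInfo or
// has an interlaced format.
FormatType PreferredFormat(bool supportsVideoInfo2, bool supportsVideoInfo,
                           bool foundInterlacedFormat) {
  FormatType preferred = FormatType::kVideoInfo;
  if (supportsVideoInfo2) {
    if (supportsVideoInfo && !foundInterlacedFormat) {
      preferred = FormatType::kVideoInfo;
    } else {
      preferred = FormatType::kVideoInfo2;
    }
  }
  return preferred;
}

// Durations are in 100 ns units; the shortest one is the highest frame rate,
// so the whole list is scanned instead of trusting the first entry. Returns 0
// for an empty list or a zero duration (the "odd cameras" case).
int MaxFpsFromDurations(const std::vector<int64_t>& durations) {
  if (durations.empty()) return 0;
  const int64_t shortest =
      *std::min_element(durations.begin(), durations.end());
  if (shortest == 0) return 0;
  return static_cast<int>(10000000 / shortest);
}
```

A reversed list such as {666666, 333333} still yields 30 fps. A helper that only read the first entry would report 15 fps, which is the Logitech problem the code comment describes.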

-> 2. _dsInfo.GetWindowsCapability(capabilityIndex, windowsCapability)

DeviceInfoDS::GetWindowsCapability

```cpp
int32_t DeviceInfoDS::GetWindowsCapability(
    const int32_t capabilityIndex,
    VideoCaptureCapabilityWindows& windowsCapability) {
  MutexLock lock(&_apiLock);

  if (capabilityIndex < 0 || static_cast<size_t>(capabilityIndex) >=
                                 _captureCapabilitiesWindows.size()) {
    return -1;
  }

  windowsCapability = _captureCapabilitiesWindows[capabilityIndex];
  return 0;
}
```
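
The comment in SetCameraOutput says the matcher's index and the DirectShow capability index are not necessarily the same. CreateCapabilityMap shows why. It pushes only the entries in the preferred format, and each one records its GetStreamCaps index `tmp` in `directShowCapabilityIndex`. Below is a minimal sketch of that mapping. It uses hypothetical stand-in structs (`StreamCap`, `WindowsCapability`) and a hypothetical `LookupWindowsCapability` function in place of the real GetWindowsCapability.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-ins holding only what the index mapping needs.
struct StreamCap {
  bool isPreferredFormat;  // pmt->formattype == preferedVideoFormat
};
struct WindowsCapability {
  int directShowCapabilityIndex;  // the "tmp" passed to GetStreamCaps
};

// Keeps only preferred-format entries but remembers each one's original
// GetStreamCaps index, as CreateCapabilityMap does.
std::vector<WindowsCapability> BuildWindowsCaps(
    const std::vector<StreamCap>& streamCaps) {
  std::vector<WindowsCapability> out;
  for (int tmp = 0; tmp < static_cast<int>(streamCaps.size()); ++tmp) {
    if (streamCaps[tmp].isPreferredFormat) out.push_back({tmp});
  }
  return out;
}

// Bounds-checked lookup by matcher index, like GetWindowsCapability.
int32_t LookupWindowsCapability(const std::vector<WindowsCapability>& caps,
                                int32_t capabilityIndex,
                                WindowsCapability& result) {
  if (capabilityIndex < 0 ||
      static_cast<size_t>(capabilityIndex) >= caps.size())
    return -1;
  result = caps[capabilityIndex];
  return 0;
}
```

Suppose GetStreamCaps entries 0 to 3 exist and only entries 1 and 3 use the preferred format. Then matcher index 1 maps back to DirectShow index 3, which is the value SetCameraOutput passes to GetStreamCaps.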

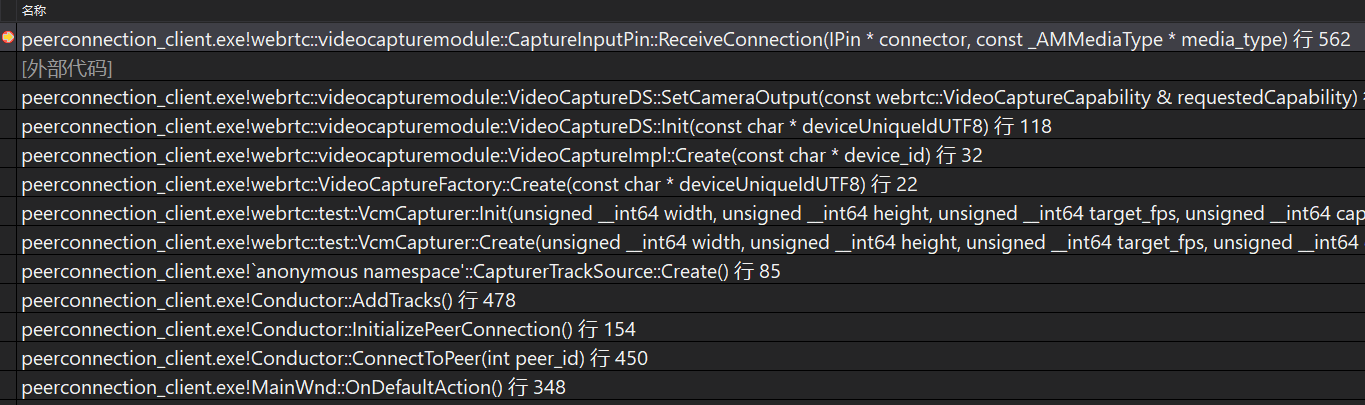

-> 3. hr = _graphBuilder->ConnectDirect(_outputCapturePin, _inputSendPin, NULL);

This call leads into CaptureInputPin::ReceiveConnection.

CaptureInputPin::ReceiveConnection

It checks whether the connection can be accepted.

```cpp
STDMETHODIMP CaptureInputPin::ReceiveConnection(
    IPin* connector,
    const AM_MEDIA_TYPE* media_type) {
  RTC_DCHECK_RUN_ON(&main_checker_);
  RTC_DCHECK(Filter()->IsStopped());

  if (receive_pin_) {
    RTC_DCHECK(false);
    return VFW_E_ALREADY_CONNECTED;
  }

  HRESULT hr = CheckDirection(connector);
  if (FAILED(hr))
    return hr;

  // Convert media_type into resulting_capability_; reject the type if the
  // conversion fails.
  if (!TranslateMediaTypeToVideoCaptureCapability(media_type,
                                                  &resulting_capability_))
    return VFW_E_TYPE_NOT_ACCEPTED;

  // Complete the connection
  receive_pin_ = connector;
  ResetMediaType(&media_type_);
  CopyMediaType(&media_type_, media_type);

  return S_OK;
}
```
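
ReceiveConnection accepts a connection only when three checks pass, in this order. The pin must not already be connected, the connector's direction must be valid, and the offered media type must translate into a VideoCaptureCapability. The sketch below models that order with plain types. `InputPinModel`, `ConnectResult` and the boolean parameters are hypothetical stand-ins for the COM objects and HRESULTs. The sketch also assumes that CheckDirection rejects a connector whose direction is the same as this pin's (input to input).

```cpp
#include <cassert>

// Hypothetical result codes standing in for S_OK / VFW_E_* HRESULTs.
enum class ConnectResult {
  kOk,
  kAlreadyConnected,
  kWrongDirection,
  kTypeNotAccepted
};

// Hypothetical model of an input pin that accepts one upstream connection.
class InputPinModel {
 public:
  // connectorIsOutput: the outcome of the direction check.
  // mediaTypeTranslates: whether the media type could be converted.
  ConnectResult ReceiveConnection(int connectorId, bool connectorIsOutput,
                                  bool mediaTypeTranslates) {
    if (connected_) return ConnectResult::kAlreadyConnected;
    if (!connectorIsOutput) return ConnectResult::kWrongDirection;
    if (!mediaTypeTranslates) return ConnectResult::kTypeNotAccepted;
    connected_ = true;  // corresponds to receive_pin_ = connector
    connectorId_ = connectorId;
    return ConnectResult::kOk;
  }
  bool connected() const { return connected_; }
  int connectorId() const { return connectorId_; }

 private:
  bool connected_ = false;
  int connectorId_ = -1;
};
```

A failed check leaves the pin unconnected, so the graph builder can retry with another media type or pin. Once a connection succeeds, every later attempt is refused.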

After this function returns, the next step is creating the memory allocator in CaptureInputPin::GetAllocator.