Previous section: "7-17 The Detailed Audio Playout Flow"

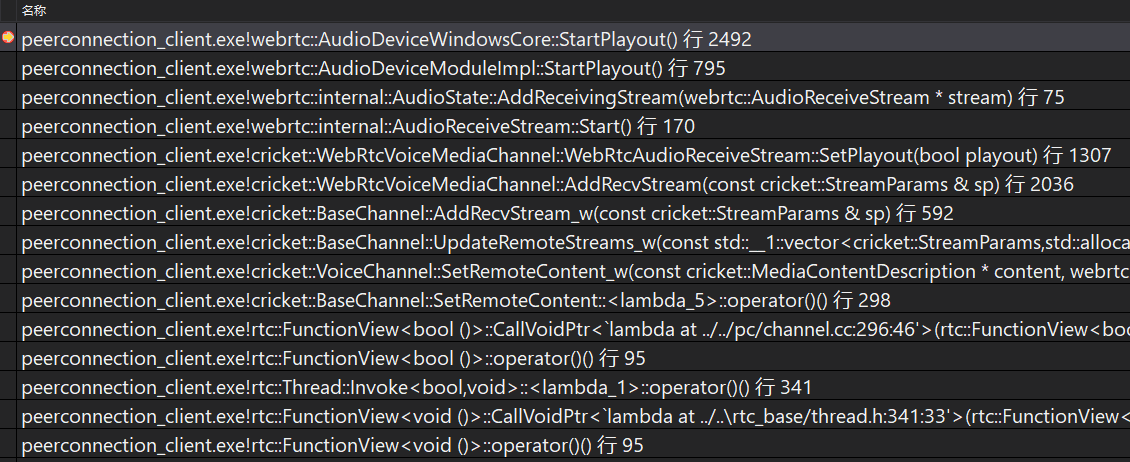

AudioState::AddReceivingStream

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\audio\audio_state.cc

    void AudioState::AddReceivingStream(webrtc::AudioReceiveStream* stream) {
      ***
      auto* adm = config_.audio_device_module.get();
      if (!adm->Playing()) {
        if (adm->InitPlayout() == 0) {
          if (playout_enabled_) {
            adm->StartPlayout();
            ***
    }
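
The nested checks above mean that playout starts only when the device is idle, `InitPlayout()` succeeds, and playout is enabled. The following is a minimal sketch of that guard logic, not the WebRTC implementation: `FakeAdm` and `MaybeStartPlayout` are hypothetical names, and the fake device models only the three calls that appear in the excerpt.

```cpp
#include <cassert>

// Hypothetical stand-in for the audio device module (not a WebRTC type).
// It models only Playing(), InitPlayout() and StartPlayout().
struct FakeAdm {
  bool playing = false;
  bool init_ok = true;   // set to false to simulate an InitPlayout() failure
  int start_calls = 0;   // counts how often StartPlayout() was reached

  bool Playing() const { return playing; }
  int InitPlayout() { return init_ok ? 0 : -1; }
  int StartPlayout() {
    assert(!playing);  // the guard below should never start a running device
    ++start_calls;
    playing = true;
    return 0;
  }
};

// Same nesting as the excerpt: start only if the device is idle,
// initialization returns 0, and playout is enabled.
inline void MaybeStartPlayout(FakeAdm& adm, bool playout_enabled) {
  if (!adm.Playing()) {
    if (adm.InitPlayout() == 0) {
      if (playout_enabled) {
        adm.StartPlayout();
      }
    }
  }
}
```

Calling `MaybeStartPlayout` a second time on the same device is a no-op, which is why adding further receive streams does not restart the device in this model.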

AudioDeviceModuleImpl::StartPlayout

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\audio_device\audio_device_impl.cc

    int32_t AudioDeviceModuleImpl::StartPlayout() {
      RTC_LOG(INFO) << __FUNCTION__;
      CHECKinitialized_();
      if (Playing()) {
        return 0;
      }
      audio_device_buffer_.StartPlayout();
      int32_t result = audio_device_->StartPlayout();
      RTC_LOG(INFO) << "output: " << result;
      RTC_HISTOGRAM_BOOLEAN("WebRTC.Audio.StartPlayoutSuccess",
                            static_cast<int>(result == 0));
      return result;
    }

AudioDeviceWindowsCore::StartPlayout

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\audio_device\win\audio_device_core_win.cc

The main playout logic is implemented here.

    int32_t AudioDeviceWindowsCore::StartPlayout() {
      if (!_playIsInitialized) {
        return -1;
      }

      if (_hPlayThread != NULL) {
        return 0;
      }

      if (_playing) {
        return 0;
      }

      {
        MutexLock lockScoped(&mutex_);

        // Create thread which will drive the rendering.
        assert(_hPlayThread == NULL);
        _hPlayThread = CreateThread(NULL, 0, WSAPIRenderThread, this, 0, NULL);
        if (_hPlayThread == NULL) {
          RTC_LOG(LS_ERROR) << "failed to create the playout thread";
          return -1;
        }

        // Set thread priority to highest possible.
        SetThreadPriority(_hPlayThread, THREAD_PRIORITY_TIME_CRITICAL);
      }  // critScoped

      DWORD ret = WaitForSingleObject(_hRenderStartedEvent, 1000);
      if (ret != WAIT_OBJECT_0) {
        RTC_LOG(LS_VERBOSE) << "rendering did not start up properly";
        return -1;
      }

      _playing = true;
      RTC_LOG(LS_VERBOSE) << "rendering audio stream has now started...";

      return 0;
    }
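
`StartPlayout` creates the render thread and then waits up to 1000 ms for that thread to signal that rendering has started. Only after the signal arrives is the playing flag set. The sketch below reproduces this handshake with portable standard-library primitives instead of `CreateThread` and Win32 events. `RenderStarter` and all its members are hypothetical names, and the sleep merely simulates device start-up work.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>

// Hypothetical illustration of the "start thread, wait for started event"
// handshake. The condition variable plays the role of _hRenderStartedEvent.
class RenderStarter {
 public:
  ~RenderStarter() { Join(); }

  // Returns 0 on success and -1 if the worker did not signal within
  // `timeout`. `startup_cost` simulates the worker's initialization time.
  int Start(std::chrono::milliseconds startup_cost,
            std::chrono::milliseconds timeout) {
    assert(timeout.count() > 0);
    if (thread_.joinable()) return 0;  // thread already exists
    thread_ = std::thread([this, startup_cost] {
      std::this_thread::sleep_for(startup_cost);  // simulated device setup
      {
        std::lock_guard<std::mutex> lock(mutex_);
        started_ = true;
      }
      started_cv_.notify_one();  // counterpart of SetEvent(...)
    });
    std::unique_lock<std::mutex> lock(mutex_);
    if (!started_cv_.wait_for(lock, timeout, [this] { return started_; })) {
      return -1;  // rendering did not start up in time
    }
    playing_ = true;  // set by the caller only after the signal arrived
    return 0;
  }

  void Join() {
    if (thread_.joinable()) thread_.join();
  }
  bool playing() const { return playing_; }

 private:
  std::mutex mutex_;
  std::condition_variable started_cv_;
  std::thread thread_;
  bool started_ = false;
  bool playing_ = false;
};
```

With a 10 ms start-up cost and a 1000 ms timeout, `Start` returns 0. With a start-up cost well above the timeout it returns -1 and `playing()` stays false, matching the "rendering did not start up properly" branch.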

The WSAPIRenderThread thread function

    DWORD WINAPI AudioDeviceWindowsCore::WSAPIRenderThread(LPVOID context) {
      return reinterpret_cast<AudioDeviceWindowsCore*>(context)
          ->DoRenderThread();
    }
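
The thread function is a static trampoline. The thread API accepts only a plain function with an untyped context pointer, so the object passes `this` as the context and the static function casts it back before calling the member function. Here is a minimal portable sketch of the pattern. `Renderer`, `ThreadEntry` and `Run` are hypothetical names, and `static_cast` is used here where the original uses `reinterpret_cast`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical class showing the static-trampoline pattern.
class Renderer {
 public:
  // Static entry point with a C-style signature, as thread APIs require.
  // `context` must be the Renderer instance that was passed at creation.
  static std::uint32_t ThreadEntry(void* context) {
    assert(context != nullptr);
    return static_cast<Renderer*>(context)->Run();
  }

  int runs() const { return runs_; }

 private:
  // The real work lives in a member function with access to object state.
  std::uint32_t Run() {
    ++runs_;
    return 0;
  }

  int runs_ = 0;
};
```

Passing `&renderer` to `Renderer::ThreadEntry` runs `Run()` on that instance, just as `CreateThread(..., WSAPIRenderThread, this, ...)` ends up in `DoRenderThread()`.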

AudioDeviceWindowsCore::DoRenderThread

    DWORD AudioDeviceWindowsCore::DoRenderThread() {
      ***
      // Initialize COM as MTA in this thread.
      ScopedCOMInitializer comInit(ScopedCOMInitializer::kMTA);
      if (!comInit.Succeeded()) {
        RTC_LOG(LS_ERROR) << "failed to initialize COM in render thread";
        return 1;
      }

      rtc::SetCurrentThreadName("webrtc_core_audio_render_thread");

      // Use Multimedia Class Scheduler Service (MMCSS) to boost the thread
      // priority.
      // Check whether avrt is supported.
      if (_winSupportAvrt) {
        DWORD taskIndex(0);
        hMmTask = _PAvSetMmThreadCharacteristicsA("Pro Audio", &taskIndex);
        if (hMmTask) {
          if (FALSE == _PAvSetMmThreadPriority(hMmTask, AVRT_PRIORITY_CRITICAL)) {
            ****
      }

      _Lock();

      IAudioClock* clock = NULL;

      // Get size of rendering buffer (length is expressed as the number of audio
      // frames the buffer can hold). This value is fixed during the rendering
      // session.
      // Get the size of the speaker's buffer; the value observed at a breakpoint
      // was 1056.
      UINT32 bufferLength = 0;
      hr = _ptrClientOut->GetBufferSize(&bufferLength);
      EXIT_ON_ERROR(hr);
      RTC_LOG(LS_VERBOSE) << "[REND] size of buffer       : " << bufferLength;

      // Get maximum latency for the current stream (will not change for the
      // lifetime of the IAudioClient object).
      //
      REFERENCE_TIME latency;
      _ptrClientOut->GetStreamLatency(&latency);  // observed at a breakpoint: 0, no latency
      RTC_LOG(LS_VERBOSE) << "[REND] max stream latency   : " << (DWORD)latency
                          << " (" << (double)(latency / 10000.0) << " ms)";

      // Get the length of the periodic interval separating successive processing
      // passes by the audio engine on the data in the endpoint buffer.
      //
      // The period between processing passes by the audio engine is fixed for a
      // particular audio endpoint device and represents the smallest processing
      // quantum for the audio engine. This period plus the stream latency between
      // the buffer and endpoint device represents the minimum possible latency that
      // an audio application can achieve. Typical value: 100000 <=> 0.01 sec =
      // 10ms.
      // Playout period.
      REFERENCE_TIME devPeriod = 0;
      REFERENCE_TIME devPeriodMin = 0;
      _ptrClientOut->GetDevicePeriod(&devPeriod, &devPeriodMin);  // observed at a breakpoint: 100000 and 30000
      RTC_LOG(LS_VERBOSE) << "[REND] device period        : " << (DWORD)devPeriod
                          << " (" << (double)(devPeriod / 10000.0) << " ms)";

      // Derive initial rendering delay.
      // Example: 10*(960/480) + 15 = 20 + 15 = 35ms
      // The 10 here is 10 ms, the duration of one playout block. With the values
      // observed at the breakpoint:
      // 10 * (1056 / 480) + (0 + 100000) / 10000 = 20 + 10 = 30 ms
      // (1056 / 480 is integer division and yields 2).
      int playout_delay = 10 * (bufferLength / _playBlockSize) +
                          (int)((latency + devPeriod) / 10000);
      _sndCardPlayDelay = playout_delay;
      _writtenSamples = 0;
      RTC_LOG(LS_VERBOSE) << "[REND] initial delay        : " << playout_delay;

      // Playback time that the endpoint buffer can hold, in milliseconds.
      double endpointBufferSizeMS =
          10.0 * ((double)bufferLength / (double)_devicePlayBlockSize);
      RTC_LOG(LS_VERBOSE) << "[REND] endpointBufferSizeMS : "
                          << endpointBufferSizeMS;

      // Before starting the stream, fill the rendering buffer with silence.
      // Get the address of the buffer; audio data is written here later.
      BYTE* pData = NULL;
      hr = _ptrRenderClient->GetBuffer(bufferLength, &pData);
      EXIT_ON_ERROR(hr);

      // Release the buffer and mark its contents as silence.
      hr =
          _ptrRenderClient->ReleaseBuffer(bufferLength, AUDCLNT_BUFFERFLAGS_SILENT);
      EXIT_ON_ERROR(hr);

      _writtenSamples += bufferLength;

      // Get the IAudioClock service.
      hr = _ptrClientOut->GetService(__uuidof(IAudioClock), (void**)&clock);
      if (FAILED(hr)) {
        RTC_LOG(LS_WARNING)
            << "failed to get IAudioClock interface from the IAudioClient";
      }

      // Start up the rendering audio stream.
      hr = _ptrClientOut->Start();
      EXIT_ON_ERROR(hr);

      _UnLock();

      // Set event which will ensure that the calling thread modifies the playing
      // state to true.
      // Signal that playout has started.
      SetEvent(_hRenderStartedEvent);

      // >> ------------------ THREAD LOOP ------------------

      while (keepPlaying) {
        // Wait for a render notification event or a shutdown event
        // Wait for an event and dispatch on the result.
        DWORD waitResult = WaitForMultipleObjects(2, waitArray, FALSE, 500);
        switch (waitResult) {
          case WAIT_OBJECT_0 + 0:  // _hShutdownRenderEvent
            keepPlaying = false;
            break;
          case WAIT_OBJECT_0 + 1:  // _hRenderSamplesReadyEvent
            break;
          case WAIT_TIMEOUT:  // timeout notification
            RTC_LOG(LS_WARNING) << "render event timed out after 0.5 seconds";
            goto Exit;
          default:  // unexpected error
            RTC_LOG(LS_WARNING) << "unknown wait termination on render side";
            goto Exit;
        }

        while (keepPlaying) {
          _Lock();

          // Sanity check to ensure that essential states are not modified
          // during the unlocked period.
          if (_ptrRenderClient == NULL || _ptrClientOut == NULL) {
            _UnLock();
            RTC_LOG(LS_ERROR)
                << "output state has been modified during unlocked period";
            goto Exit;
          }

          // Get the number of frames of padding (queued up to play) in the endpoint
          // buffer. Padding is the data already queued and not yet played, so the
          // writable space is the buffer length minus the padding.
          UINT32 padding = 0;
          hr = _ptrClientOut->GetCurrentPadding(&padding);
          EXIT_ON_ERROR(hr);

          // Derive the amount of available space in the output buffer
          uint32_t framesAvailable = bufferLength - padding;

          // Do we have 10 ms available in the render buffer?
          if (framesAvailable < _playBlockSize) {  // no room to write a full block
            // Not enough space in render buffer to store next render packet.
            _UnLock();
            break;
          }

          // Write n*10ms buffers to the render buffer
          const uint32_t n10msBuffers = (framesAvailable / _playBlockSize);
          for (uint32_t n = 0; n < n10msBuffers; n++) {
            // Get pointer (i.e., grab the buffer) to next space in the shared
            // render buffer.
            hr = _ptrRenderClient->GetBuffer(_playBlockSize, &pData);
            EXIT_ON_ERROR(hr);

            if (_ptrAudioBuffer) {
              // Request data to be played out (#bytes =
              // _playBlockSize*_audioFrameSize)
              _UnLock();
              int32_t nSamples =
                  _ptrAudioBuffer->RequestPlayoutData(_playBlockSize);  // request playout data; returns the number of samples
              _Lock();

              if (nSamples == -1) {
                _UnLock();
                RTC_LOG(LS_ERROR) << "failed to read data from render client";
                goto Exit;
              }

              // Sanity check to ensure that essential states are not modified
              // during the unlocked period
              if (_ptrRenderClient == NULL || _ptrClientOut == NULL) {
                _UnLock();
                RTC_LOG(LS_ERROR)
                    << "output state has been modified during unlocked"
                       " period";
                goto Exit;
              }
              if (nSamples != static_cast<int32_t>(_playBlockSize)) {
                RTC_LOG(LS_WARNING)
                    << "nSamples(" << nSamples << ") != _playBlockSize"
                    << _playBlockSize << ")";
              }

              // Get the actual (stored) data
              // Copy the data held by _ptrAudioBuffer into the speaker's buffer;
              // the speaker keeps reading from that buffer to play it.
              nSamples = _ptrAudioBuffer->GetPlayoutData((int8_t*)pData);
            }

            DWORD dwFlags(0);
            hr = _ptrRenderClient->ReleaseBuffer(_playBlockSize, dwFlags);
            // See http://msdn.microsoft.com/en-us/library/dd316605(VS.85).aspx
            // for more details regarding AUDCLNT_E_DEVICE_INVALIDATED.
            EXIT_ON_ERROR(hr);

            // Accumulate the number of frames written.
            _writtenSamples += _playBlockSize;
          }

          // Check the current delay on the playout side.
          if (clock) {
            UINT64 pos = 0;
            UINT64 freq = 1;
            clock->GetPosition(&pos, NULL);
            clock->GetFrequency(&freq);
            playout_delay = ROUND((double(_writtenSamples) / _devicePlaySampleRate -
                                   double(pos) / freq) *
                                  1000.0);
            // Update this value after every write pass so that the playout delay
            // is always current.
            _sndCardPlayDelay = playout_delay;
          }

          _UnLock();
        }
      }

      // ------------------ THREAD LOOP ------------------ <<

      SleepMs(static_cast<DWORD>(endpointBufferSizeMS + 0.5));
      hr = _ptrClientOut->Stop();
      ****
    }
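
The render thread relies on three small calculations: the initial playout delay, the number of 10 ms blocks that fit into the free part of the endpoint buffer, and the running delay derived from the audio clock. The sketch below isolates that arithmetic so it can be checked with the breakpoint values quoted above. The function names are hypothetical, `std::lround` stands in for the `ROUND` macro, and the clock example assumes a clock frequency equal to the sample rate.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// REFERENCE_TIME values are expressed in 100 ns units: 10000 units = 1 ms.
constexpr std::int64_t kRefTimeUnitsPerMs = 10000;

// Initial delay: whole 10 ms blocks held by the buffer (integer division)
// plus stream latency and device period converted to milliseconds.
inline int InitialPlayoutDelayMs(std::uint32_t buffer_frames,
                                 std::uint32_t play_block_frames,
                                 std::int64_t latency,
                                 std::int64_t device_period) {
  assert(play_block_frames > 0);
  return 10 * static_cast<int>(buffer_frames / play_block_frames) +
         static_cast<int>((latency + device_period) / kRefTimeUnitsPerMs);
}

// Number of complete 10 ms blocks that can be written right now.
// `padding_frames` is the data already queued and not yet played.
inline std::uint32_t WritableBlocks(std::uint32_t buffer_frames,
                                    std::uint32_t padding_frames,
                                    std::uint32_t play_block_frames) {
  assert(play_block_frames > 0 && padding_frames <= buffer_frames);
  return (buffer_frames - padding_frames) / play_block_frames;
}

// Running delay: time worth of frames written minus time already played,
// where the played time is clock position divided by clock frequency.
inline int ClockPlayoutDelayMs(std::uint64_t written_frames,
                               std::uint32_t sample_rate,
                               std::uint64_t clock_position,
                               std::uint64_t clock_frequency) {
  assert(sample_rate > 0 && clock_frequency > 0);
  const double written_s = static_cast<double>(written_frames) / sample_rate;
  const double played_s =
      static_cast<double>(clock_position) / clock_frequency;
  return static_cast<int>(std::lround((written_s - played_s) * 1000.0));
}
```

With the breakpoint values, `InitialPlayoutDelayMs(1056, 480, 0, 100000)` gives 30, and the example from the source comment, `InitialPlayoutDelayMs(960, 480, 0, 150000)`, gives 35. An empty 1056-frame buffer accepts two 480-frame blocks, while a buffer with 700 frames of padding accepts none, which corresponds to the `break` branch of the inner loop.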