Preface

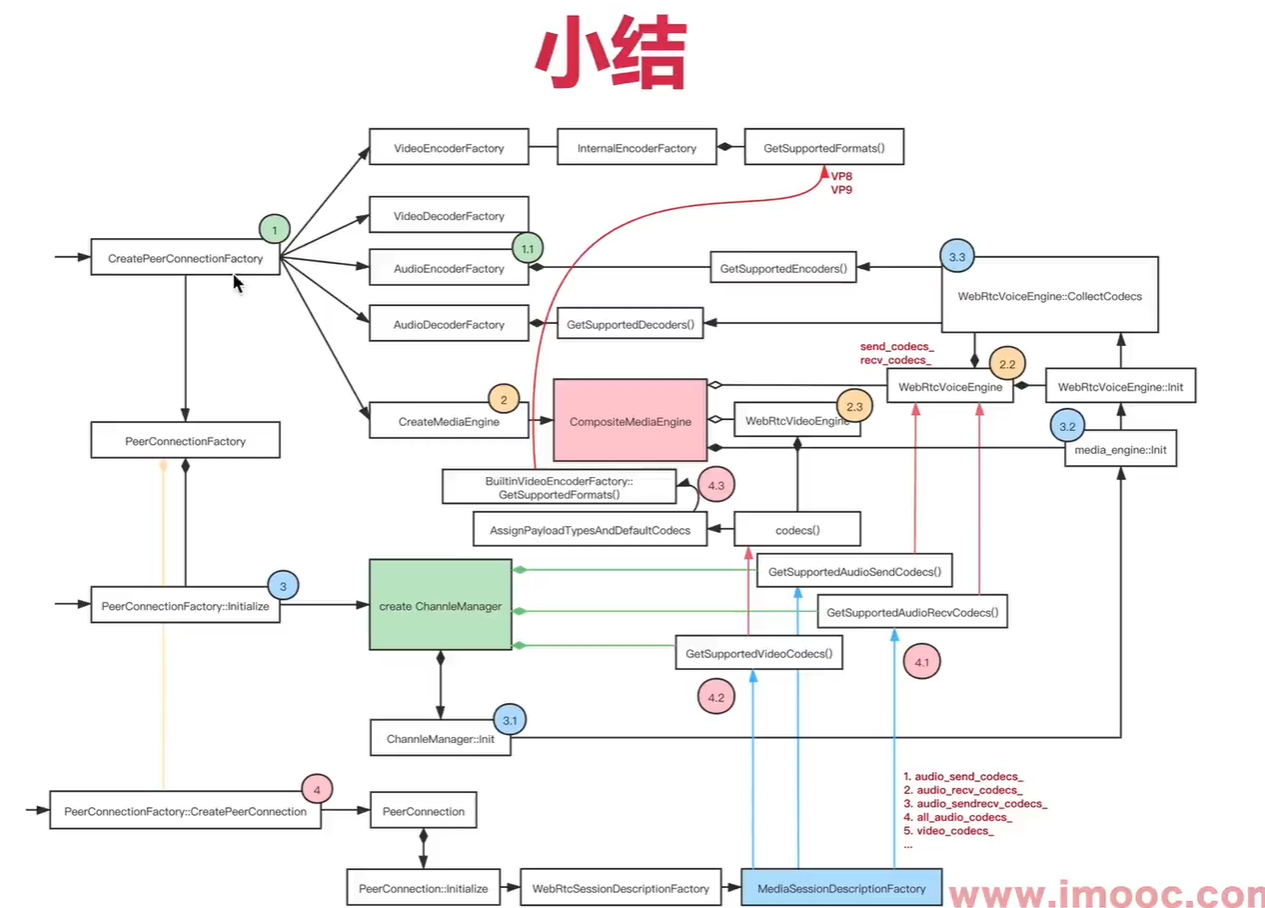

Steps for collecting codec information

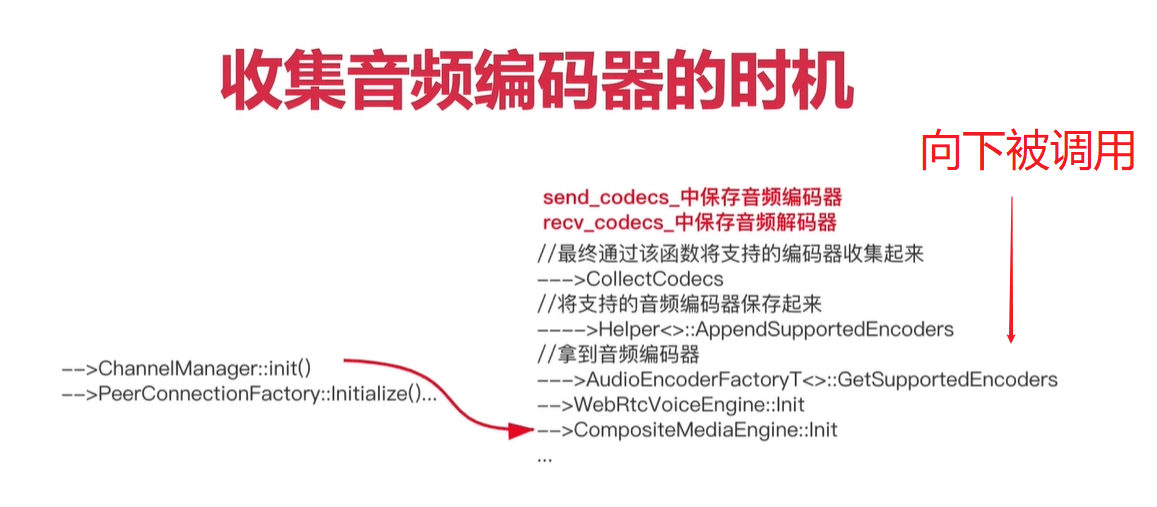

When the audio codecs are collected

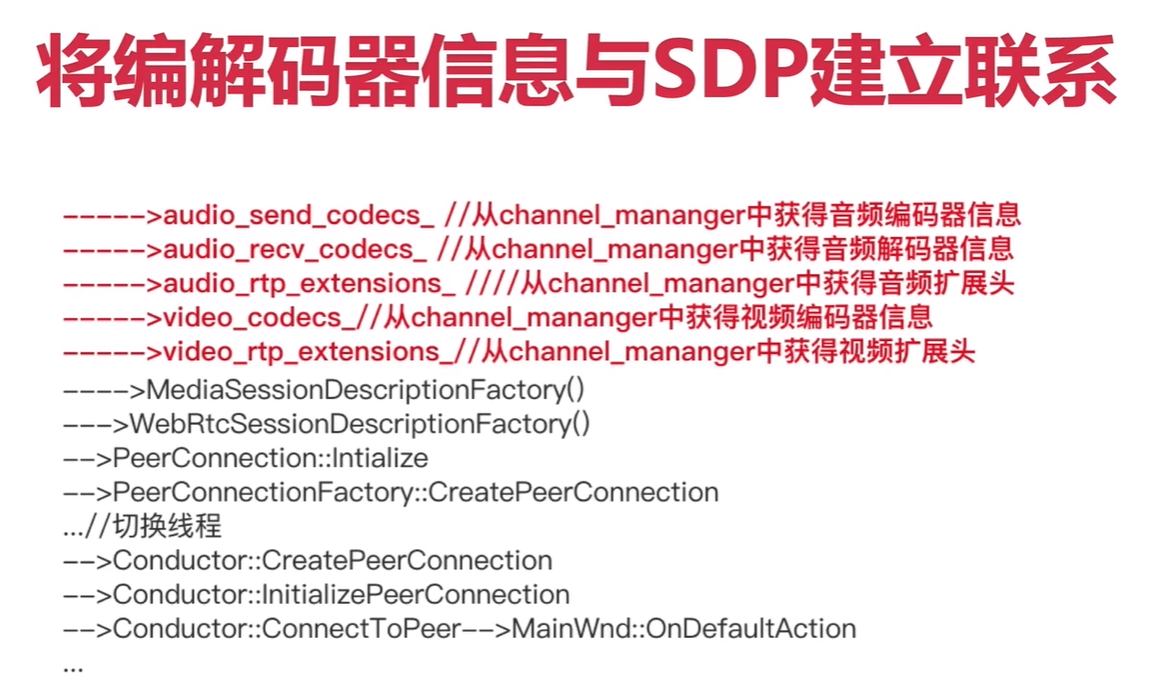

Associating codec information with SDP
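As a rough illustration of how a collected codec entry ends up in SDP, the sketch below formats an `a=rtpmap` attribute from a payload type, codec name, clock rate, and channel count. `AudioCodecInfo` and `ToRtpmapLine` are hypothetical names made up for this example; they are not WebRTC APIs, and the real SDP serializer handles many more attributes.

```cpp
#include <cassert>
#include <string>

// Hypothetical, simplified stand-in for a collected audio codec entry.
struct AudioCodecInfo {
  int payload_type;
  std::string name;
  int clockrate_hz;
  int num_channels;
};

// Builds an "a=rtpmap" line. The channel count is an optional encoding
// parameter and is omitted here when it is 1.
std::string ToRtpmapLine(const AudioCodecInfo& codec) {
  // RTP payload types are 7-bit values.
  assert(codec.payload_type >= 0 && codec.payload_type <= 127);
  std::string line = "a=rtpmap:" + std::to_string(codec.payload_type) + " " +
                     codec.name + "/" + std::to_string(codec.clockrate_hz);
  if (codec.num_channels > 1) {
    line += "/" + std::to_string(codec.num_channels);
  }
  return line;
}
```

For example, an Opus entry with payload type 111 would be rendered as `a=rtpmap:111 opus/48000/2`, which is why the payload type assigned during codec collection matters for the offer/answer exchange.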

Code analysis

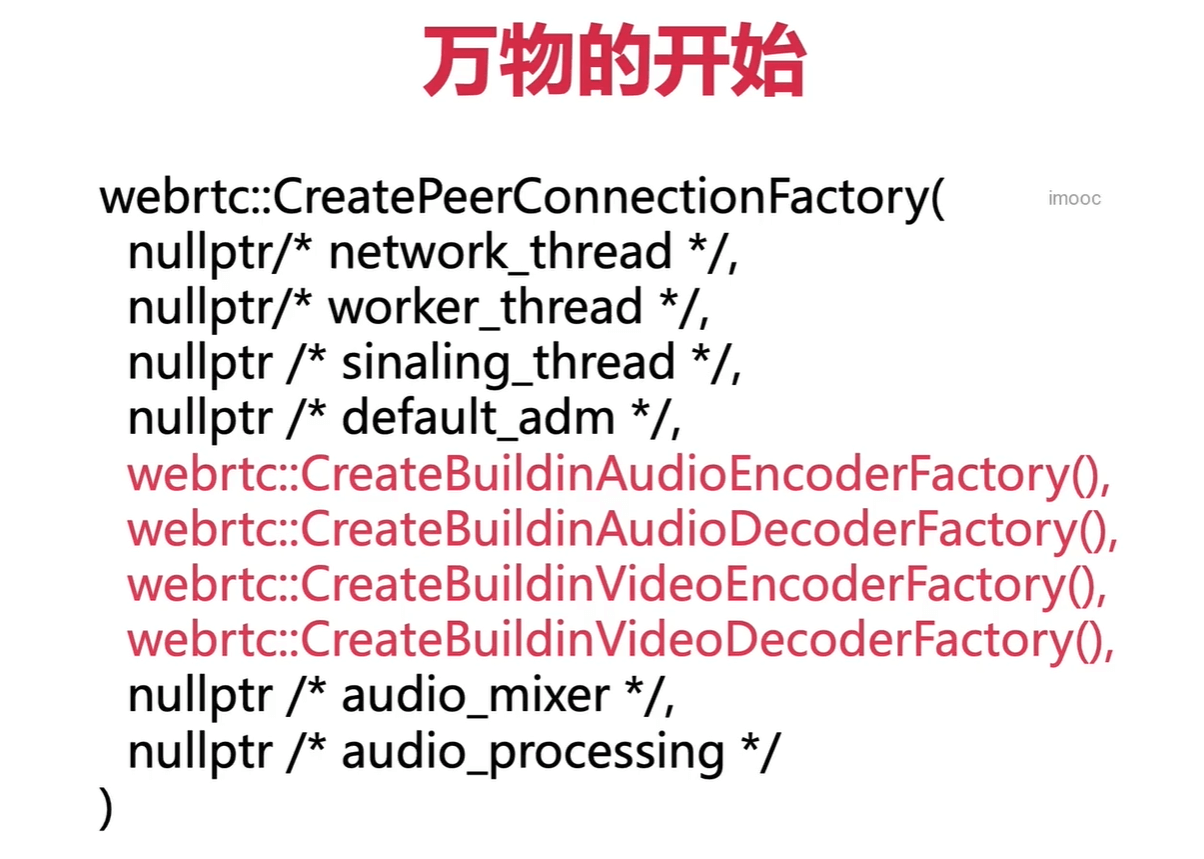

Conductor::InitializePeerConnection -> webrtc::CreatePeerConnectionFactory

    peer_connection_factory_ = webrtc::CreatePeerConnectionFactory(
        nullptr /* network_thread */, nullptr /* worker_thread */,
        nullptr /* signaling_thread */, nullptr /* default_adm */,
        webrtc::CreateBuiltinAudioEncoderFactory(),
        webrtc::CreateBuiltinAudioDecoderFactory(),
        webrtc::CreateBuiltinVideoEncoderFactory(),
        webrtc::CreateBuiltinVideoDecoderFactory(), nullptr /* audio_mixer */,
        nullptr /* audio_processing */);

webrtc::CreateBuiltinAudioEncoderFactory(),

->

CreateAudioEncoderFactory (a variadic template)

->

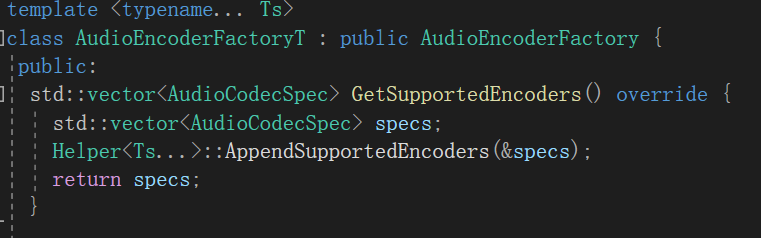

audio_encoder_factory_template_impl::AudioEncoderFactoryT

->

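    template <typename... Ts>
    class AudioEncoderFactoryT : public AudioEncoderFactory {
     public:
      std::vector<AudioCodecSpec> GetSupportedEncoders() override {
        std::vector<AudioCodecSpec> specs;
        Helper<Ts...>::AppendSupportedEncoders(&specs);
        return specs;
      }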
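The recursion over the template parameter pack can be sketched in isolation. The example below is a simplified illustration, not the WebRTC source: `CodecSpec`, `FakeOpus`, and `FakePcmu` are hypothetical stand-ins, and the free function `GetSupportedEncoders<Ts...>()` plays the role of the factory method. Each codec type appends its own specs, and `Helper` peels one type off the pack per step until the pack is empty.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for webrtc::AudioCodecSpec.
struct CodecSpec {
  std::string name;
  int clockrate_hz;
  int num_channels;
};

// Hypothetical codec traits: each type knows how to append its own specs.
struct FakeOpus {
  static void AppendSupportedEncoders(std::vector<CodecSpec>* specs) {
    specs->push_back({"opus", 48000, 2});
  }
};
struct FakePcmu {
  static void AppendSupportedEncoders(std::vector<CodecSpec>* specs) {
    specs->push_back({"PCMU", 8000, 1});
  }
};

template <typename... Ts>
struct Helper;

// Base case: no codec types left, nothing to append.
template <>
struct Helper<> {
  static void AppendSupportedEncoders(std::vector<CodecSpec>*) {}
};

// Recursive case: handle the first type, then recurse on the rest.
template <typename T, typename... Ts>
struct Helper<T, Ts...> {
  static void AppendSupportedEncoders(std::vector<CodecSpec>* specs) {
    assert(specs != nullptr);
    T::AppendSupportedEncoders(specs);
    Helper<Ts...>::AppendSupportedEncoders(specs);
  }
};

// Collects the specs of all codec types in template-argument order.
template <typename... Ts>
std::vector<CodecSpec> GetSupportedEncoders() {
  std::vector<CodecSpec> specs;
  Helper<Ts...>::AppendSupportedEncoders(&specs);
  return specs;
}
```

Calling `GetSupportedEncoders<FakeOpus, FakePcmu>()` yields the Opus spec followed by the PCMU spec, so the order of the template arguments determines the preference order of the resulting list.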

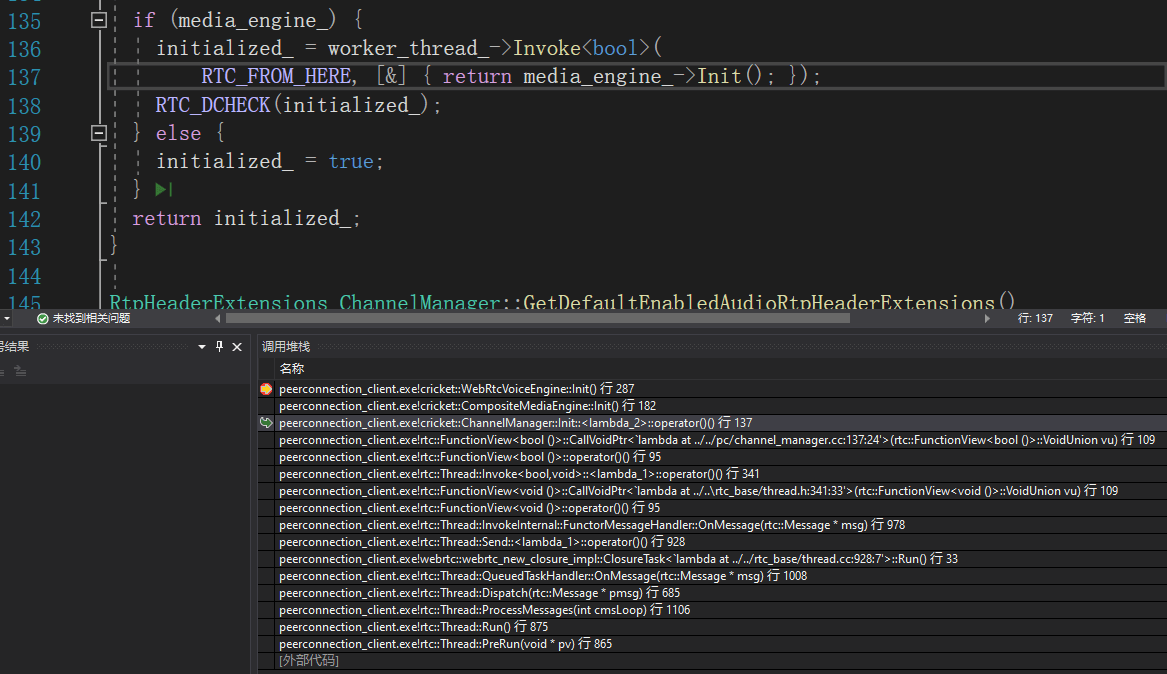

ChannelManager::Init

    cricket::ChannelManager::Init() Line 123 C++
    webrtc::ConnectionContext::Create(webrtc::PeerConnectionFactoryDependencies * dependencies) Line 79 C++
    webrtc::PeerConnectionFactory::Create(webrtc::PeerConnectionFactoryDependencies dependencies) Line 84 C++
    webrtc::CreateModularPeerConnectionFactory(webrtc::PeerConnectionFactoryDependencies dependencies) Line 70 C++
    webrtc::CreatePeerConnectionFactory(rtc::Thread * network_thread, rtc::Thread * worker_thread, rtc::Thread * signaling_thread, rtc::scoped_refptr<webrtc::AudioDeviceModule> default_adm, rtc::scoped_refptr<webrtc::AudioEncoderFactory> audio_encoder_factory, rtc::scoped_refptr<webrtc::AudioDecoderFactory> audio_decoder_factory, std::__1::unique_ptr<webrtc::VideoEncoderFactory,std::default_delete<webrtc::VideoEncoderFactory>> video_encoder_factory, std::__1::unique_ptr<webrtc::VideoDecoderFactory,std::default_delete<webrtc::VideoDecoderFactory>> video_decoder_factory, rtc::scoped_refptr<webrtc::AudioMixer> audio_mixer, rtc::scoped_refptr<webrtc::AudioProcessing> audio_processing, webrtc::AudioFrameProcessor * audio_frame_processor) Line 71 C++
    Conductor::InitializePeerConnection() Line 133 C++
    Conductor::ConnectToPeer(int peer_id) Line 450 C++
    MainWnd::OnDefaultAction() Line 348 C++
    MainWnd::OnMessage(unsigned int msg, unsigned __int64 wp, __int64 lp, __int64 * result) Line 392 C++
    MainWnd::WndProc(HWND__ * hwnd, unsigned int msg, unsigned __int64 wp, __int64 lp) Line 419 C++

    bool ChannelManager::Init() {
      RTC_DCHECK(!initialized_);
      if (initialized_) {
        return false;
      }
      RTC_DCHECK(network_thread_);
      RTC_DCHECK(worker_thread_);
      if (!network_thread_->IsCurrent()) {
        // Do not allow invoking calls to other threads on the network thread.
        network_thread_->Invoke<void>(
            RTC_FROM_HERE, [&] { network_thread_->DisallowBlockingCalls(); });
      }
      if (media_engine_) {
        initialized_ = worker_thread_->Invoke<bool>(
            RTC_FROM_HERE, [&] { return media_engine_->Init(); });
        RTC_DCHECK(initialized_);
      } else {
        initialized_ = true;
      }
      return initialized_;
    }

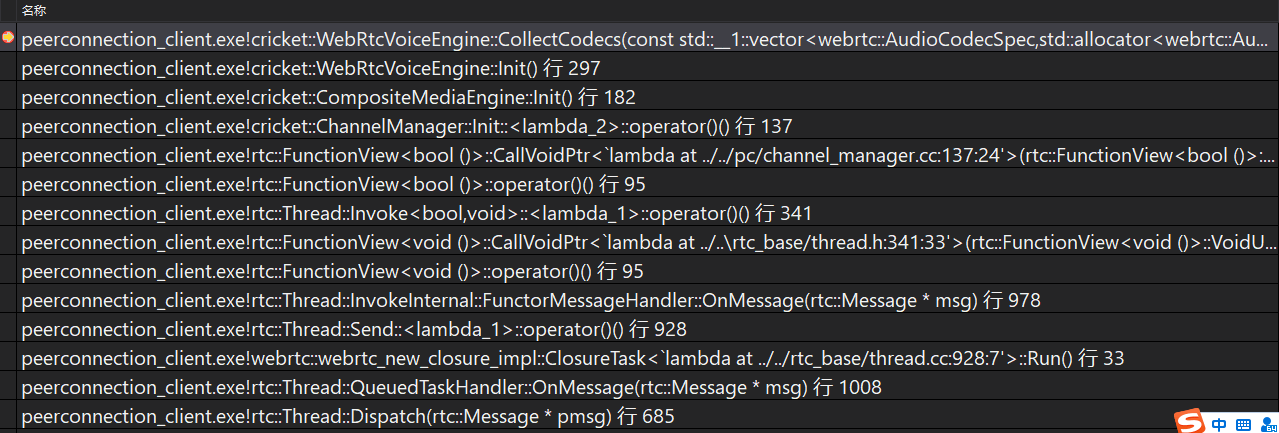

WebRtcVoiceEngine::Init

    cricket::WebRtcVoiceEngine::Init() Line 287 C++
    cricket::CompositeMediaEngine::Init() Line 182 C++
    cricket::ChannelManager::Init() Line 123 C++
    webrtc::ConnectionContext::Create(webrtc::PeerConnectionFactoryDependencies * dependencies) Line 79 C++
    webrtc::PeerConnectionFactory::Create(webrtc::PeerConnectionFactoryDependencies dependencies) Line 84 C++
    webrtc::CreateModularPeerConnectionFactory(webrtc::PeerConnectionFactoryDependencies dependencies) Line 70 C++
    webrtc::CreatePeerConnectionFactory(rtc::Thread * network_thread, rtc::Thread * worker_thread, rtc::Thread * signaling_thread, rtc::scoped_refptr<webrtc::AudioDeviceModule> default_adm, rtc::scoped_refptr<webrtc::AudioEncoderFactory> audio_encoder_factory, rtc::scoped_refptr<webrtc::AudioDecoderFactory> audio_decoder_factory, std::__1::unique_ptr<webrtc::VideoEncoderFactory,std::default_delete<webrtc::VideoEncoderFactory>> video_encoder_factory, std::__1::unique_ptr<webrtc::VideoDecoderFactory,std::default_delete<webrtc::VideoDecoderFactory>> video_decoder_factory, rtc::scoped_refptr<webrtc::AudioMixer> audio_mixer, rtc::scoped_refptr<webrtc::AudioProcessing> audio_processing, webrtc::AudioFrameProcessor * audio_frame_processor) Line 71 C++
    Conductor::InitializePeerConnection() Line 133 C++
    Conductor::ConnectToPeer(int peer_id) Line 450 C++
    MainWnd::OnDefaultAction() Line 348 C++
    MainWnd::OnMessage(unsigned int msg, unsigned __int64 wp, __int64 lp, __int64 * result) Line 392 C++
    MainWnd::WndProc(HWND__ * hwnd, unsigned int msg, unsigned __int64 wp, __int64 lp) Line 419 C++

    void WebRtcVoiceEngine::Init() {
      RTC_DCHECK_RUN_ON(&worker_thread_checker_);
      RTC_LOG(LS_INFO) << "WebRtcVoiceEngine::Init";
      // TaskQueue expects to be created/destroyed on the same thread.
      low_priority_worker_queue_.reset(
          new rtc::TaskQueue(task_queue_factory_->CreateTaskQueue(
              "rtc-low-prio", webrtc::TaskQueueFactory::Priority::LOW)));
      // Load our audio codec lists.
      RTC_LOG(LS_VERBOSE) << "Supported send codecs in order of preference:";
      send_codecs_ = CollectCodecs(encoder_factory_->GetSupportedEncoders());
      for (const AudioCodec& codec : send_codecs_) {
        RTC_LOG(LS_VERBOSE) << ToString(codec);
      }
      RTC_LOG(LS_VERBOSE) << "Supported recv codecs in order of preference:";
      recv_codecs_ = CollectCodecs(decoder_factory_->GetSupportedDecoders());
      for (const AudioCodec& codec : recv_codecs_) {
        RTC_LOG(LS_VERBOSE) << ToString(codec);
      }
      // ************* (the rest of the function is omitted)
    }

CollectCodecs(encoder_factory_->GetSupportedEncoders());

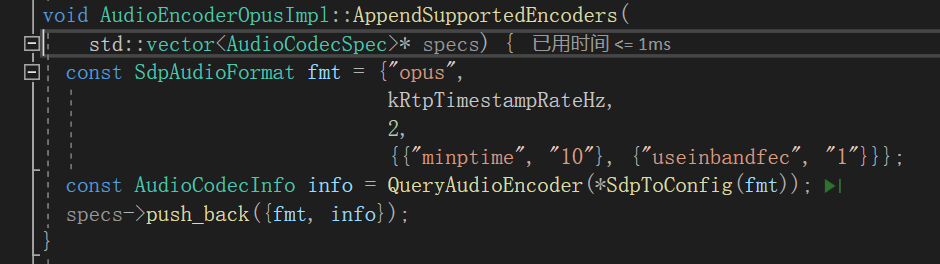

AudioEncoderOpusImpl::AppendSupportedEncoders

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\audio_coding\codecs\opus\audio_encoder_opus.cc

WebRtcVoiceEngine::CollectCodecs

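Collects the codec information: each AudioCodecSpec reported by the factory is mapped to an AudioCodec with a payload type, and CN and telephone-event entries are then appended for the supported clock rates.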

    std::vector<AudioCodec> WebRtcVoiceEngine::CollectCodecs(
        const std::vector<webrtc::AudioCodecSpec>& specs) const {
      PayloadTypeMapper mapper;
      std::vector<AudioCodec> out;
      // Only generate CN payload types for these clockrates:
      std::map<int, bool, std::greater<int>> generate_cn = {
          {8000, false}, {16000, false}, {32000, false}};
      // Only generate telephone-event payload types for these clockrates:
      std::map<int, bool, std::greater<int>> generate_dtmf = {
          {8000, false}, {16000, false}, {32000, false}, {48000, false}};
      auto map_format = [&mapper](const webrtc::SdpAudioFormat& format,
                                  std::vector<AudioCodec>* out) {
        absl::optional<AudioCodec> opt_codec = mapper.ToAudioCodec(format);
        if (opt_codec) {
          if (out) {
            out->push_back(*opt_codec);
          }
        } else {
          RTC_LOG(LS_ERROR) << "Unable to assign payload type to format: "
                            << rtc::ToString(format);
        }
        return opt_codec;
      };
      for (const auto& spec : specs) {
        // We need to do some extra stuff before adding the main codecs to out.
        absl::optional<AudioCodec> opt_codec = map_format(spec.format, nullptr);
        if (opt_codec) {
          AudioCodec& codec = *opt_codec;
          if (spec.info.supports_network_adaption) {
            codec.AddFeedbackParam(
                FeedbackParam(kRtcpFbParamTransportCc, kParamValueEmpty));
          }
          if (spec.info.allow_comfort_noise) {
            // Generate a CN entry if the decoder allows it and we support the
            // clockrate.
            auto cn = generate_cn.find(spec.format.clockrate_hz);
            if (cn != generate_cn.end()) {
              cn->second = true;
            }
          }
          // Generate a telephone-event entry if we support the clockrate.
          auto dtmf = generate_dtmf.find(spec.format.clockrate_hz);
          if (dtmf != generate_dtmf.end()) {
            dtmf->second = true;
          }
          out.push_back(codec);
          if (codec.name == kOpusCodecName && audio_red_for_opus_trial_enabled_) {
            map_format({kRedCodecName, 48000, 2}, &out);
          }
        }
      }
      // Add CN codecs after "proper" audio codecs.
      for (const auto& cn : generate_cn) {
        if (cn.second) {
          map_format({kCnCodecName, cn.first, 1}, &out);
        }
      }
      // Add telephone-event codecs last.
      for (const auto& dtmf : generate_dtmf) {
        if (dtmf.second) {
          map_format({kDtmfCodecName, dtmf.first, 1}, &out);
        }
      }
      return out;
    }
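The ordering that CollectCodecs produces can be seen in a stripped-down sketch. The code below keeps only the two `std::map<int, bool, std::greater<int>>` tables and the three append phases; `Spec` and `CollectCodecNames` are hypothetical names for this example, payload type mapping and feedback parameters are left out, and the input values in the usage note are illustrative rather than taken from the real factories.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <vector>

// Hypothetical, simplified stand-in for webrtc::AudioCodecSpec.
struct Spec {
  std::string name;
  int clockrate_hz;
  bool allow_comfort_noise;
};

// Returns "name/clockrate" strings in the order the codecs would be listed:
// the main codecs first, then CN entries, then telephone-event entries.
std::vector<std::string> CollectCodecNames(const std::vector<Spec>& specs) {
  // std::greater makes iteration go from the highest clock rate to the lowest.
  std::map<int, bool, std::greater<int>> generate_cn = {
      {8000, false}, {16000, false}, {32000, false}};
  std::map<int, bool, std::greater<int>> generate_dtmf = {
      {8000, false}, {16000, false}, {32000, false}, {48000, false}};
  std::vector<std::string> out;
  for (const Spec& spec : specs) {
    assert(spec.clockrate_hz > 0);
    if (spec.allow_comfort_noise) {
      auto cn = generate_cn.find(spec.clockrate_hz);
      if (cn != generate_cn.end()) cn->second = true;
    }
    auto dtmf = generate_dtmf.find(spec.clockrate_hz);
    if (dtmf != generate_dtmf.end()) dtmf->second = true;
    out.push_back(spec.name + "/" + std::to_string(spec.clockrate_hz));
  }
  for (const auto& cn : generate_cn) {
    if (cn.second) out.push_back("CN/" + std::to_string(cn.first));
  }
  for (const auto& dtmf : generate_dtmf) {
    if (dtmf.second) {
      out.push_back("telephone-event/" + std::to_string(dtmf.first));
    }
  }
  return out;
}
```

With an input of opus at 48000 Hz (no comfort noise), ISAC at 16000 Hz, and PCMU at 8000 Hz (both with comfort noise), the result lists the three codecs, then CN/16000 and CN/8000, then telephone-event at 48000, 16000, and 8000. No CN entry is produced for 48000 Hz because that clock rate is not in the CN table.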

PayloadTypeMapper::PayloadTypeMapper

Stores the mapping from audio formats to payload types.

    PayloadTypeMapper::PayloadTypeMapper()
        // RFC 3551 reserves payload type numbers in the range 96-127 exclusively
        // for dynamic assignment. Once those are used up, it is recommended that
        // payload types unassigned by the RFC are used for dynamic payload type
        // mapping, before any static payload ids. At this point, we only support
        // mapping within the exclusive range.
        : next_unused_payload_type_(96),
          max_payload_type_(127),
          mappings_(
              {// Static payload type assignments according to RFC 3551.
               {{kPcmuCodecName, 8000, 1}, 0},
               {{"GSM", 8000, 1}, 3},
               {{"G723", 8000, 1}, 4},
               {{"DVI4", 8000, 1}, 5},
               {{"DVI4", 16000, 1}, 6},
               {{"LPC", 8000, 1}, 7},
               {{kPcmaCodecName, 8000, 1}, 8},
               {{kG722CodecName, 8000, 1}, 9},
               {{kL16CodecName, 44100, 2}, 10},
               {{kL16CodecName, 44100, 1}, 11},
               {{"QCELP", 8000, 1}, 12},
               {{kCnCodecName, 8000, 1}, 13},
               // RFC 4566 is a bit ambiguous on the contents of the "encoding
               // parameters" field, which, for audio, encodes the number of
               // channels. It is "optional and may be omitted if the number of
               // channels is one". Does that necessarily imply that an omitted
               // encoding parameter means one channel? Since RFC 3551 doesn't
               // specify a value for this parameter for MPA, I've included both 0
               // and 1 here, to increase the chances it will be correctly used if
               // someone implements an MPEG audio encoder/decoder.
               {{"MPA", 90000, 0}, 14},
               {{"MPA", 90000, 1}, 14},
               {{"G728", 8000, 1}, 15},
               {{"DVI4", 11025, 1}, 16},
               {{"DVI4", 22050, 1}, 17},
               {{"G729", 8000, 1}, 18},
               // Payload type assignments currently used by WebRTC.
               // Includes data to reduce collisions (and thus reassignments)
               {{kGoogleRtpDataCodecName, 0, 0}, kGoogleRtpDataCodecPlType},
               {{kIlbcCodecName, 8000, 1}, 102},
               {{kIsacCodecName, 16000, 1}, 103},
               {{kIsacCodecName, 32000, 1}, 104},
               {{kCnCodecName, 16000, 1}, 105},
               {{kCnCodecName, 32000, 1}, 106},
               {{kOpusCodecName,
                 48000,
                 2,
                 {{kCodecParamMinPTime, "10"},
                  {kCodecParamUseInbandFec, kParamValueTrue}}},
                111},
               // TODO(solenberg): Remove the hard coded 16k,32k,48k DTMF once we
               // assign payload types dynamically for send side as well.
               {{kDtmfCodecName, 48000, 1}, 110},
               {{kDtmfCodecName, 32000, 1}, 112},
               {{kDtmfCodecName, 16000, 1}, 113},
               {{kDtmfCodecName, 8000, 1}, 126}}) {
      // TODO(ossu): Try to keep this as change-proof as possible until we're able
      // to remove the payload type constants from everywhere in the code.
      for (const auto& mapping : mappings_) {
        used_payload_types_.insert(mapping.second);
      }
    }
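The idea behind the constructor (a table of preassigned payload types plus a cursor into the dynamic range 96-127) can be sketched as follows. This is a minimal illustration under stated assumptions, not the WebRTC implementation: `Format`, `SimplePayloadTypeMapper`, and `GetMappingFor` are hypothetical names for this example, only three preassigned entries are included, and `std::optional` is used where the WebRTC code uses `absl::optional`.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <set>
#include <string>
#include <tuple>

// Hypothetical, simplified stand-in for webrtc::SdpAudioFormat.
struct Format {
  std::string name;
  int clockrate_hz;
  int num_channels;
  bool operator<(const Format& o) const {
    return std::tie(name, clockrate_hz, num_channels) <
           std::tie(o.name, o.clockrate_hz, o.num_channels);
  }
};

class SimplePayloadTypeMapper {
 public:
  SimplePayloadTypeMapper() {
    // A few preassigned entries; the real table is much longer.
    mappings_.emplace(Format{"PCMU", 8000, 1}, 0);
    mappings_.emplace(Format{"PCMA", 8000, 1}, 8);
    mappings_.emplace(Format{"opus", 48000, 2}, 111);
    for (const auto& mapping : mappings_) {
      used_payload_types_.insert(mapping.second);
    }
  }

  // Returns the known payload type for the format, or assigns the next free
  // one from the dynamic range. Returns nullopt once the range is exhausted.
  std::optional<int> GetMappingFor(const Format& format) {
    auto it = mappings_.find(format);
    if (it != mappings_.end()) return it->second;
    for (; next_unused_ <= kMaxPayloadType; ++next_unused_) {
      if (used_payload_types_.count(next_unused_) == 0) {
        const int payload_type = next_unused_++;
        assert(payload_type >= 96);
        used_payload_types_.insert(payload_type);
        mappings_.emplace(format, payload_type);
        return payload_type;
      }
    }
    return std::nullopt;
  }

 private:
  static constexpr int kMaxPayloadType = 127;
  int next_unused_ = 96;
  std::map<Format, int> mappings_;
  std::set<int> used_payload_types_;
};
```

Recording every preassigned value in `used_payload_types_` is what lets the dynamic assignment skip numbers such as 111 that sit inside the 96-127 range but are already taken.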

Summary