Preface

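There are two AudioState classes: the external one, webrtc::AudioState, which is the public interface, and the internal one, webrtc::internal::AudioState, which is the implementation that actually gets created.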
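To make the external/internal split concrete, here is a minimal C++ sketch of the same interface/implementation pattern. The names `demo::AudioState`, `demo::internal::AudioState` and the `mixer_name` field are hypothetical simplifications. `std::unique_ptr` stands in for WebRTC's `rtc::scoped_refptr`. The sketch only illustrates how an abstract public class can hand out an object of a concrete class that lives in a nested `internal` namespace.

```cpp
#include <cassert>
#include <memory>
#include <string>

namespace demo {

// "External" AudioState: the abstract interface that callers program against.
class AudioState {
 public:
  struct Config {
    std::string mixer_name;  // hypothetical; the real Config holds mixer/APM/ADM pointers
  };
  virtual ~AudioState() = default;
  virtual const Config& config() const = 0;

  // Factory: callers never name the concrete type.
  static std::unique_ptr<AudioState> Create(const Config& config);
};

namespace internal {

// "Internal" AudioState: the concrete implementation, hidden in a nested namespace.
class AudioState : public demo::AudioState {
 public:
  explicit AudioState(const Config& config) : config_(config) {}
  const Config& config() const override { return config_; }

 private:
  const Config config_;
};

}  // namespace internal

// The factory returns the internal class through the external interface.
std::unique_ptr<AudioState> AudioState::Create(const Config& config) {
  return std::make_unique<internal::AudioState>(config);
}

}  // namespace demo
```

Callers only ever see `demo::AudioState`, so the implementation can change without touching call sites.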

Code analysis

WebRtcVoiceEngine::Init

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\media\engine\webrtc_voice_engine.cc

void WebRtcVoiceEngine::Init() {
  RTC_DCHECK_RUN_ON(&worker_thread_checker_);
  RTC_LOG(LS_INFO) << "WebRtcVoiceEngine::Init";

  // 1. TaskQueue expects to be created/destroyed on the same thread.
  low_priority_worker_queue_.reset(
      new rtc::TaskQueue(task_queue_factory_->CreateTaskQueue(
          "rtc-low-prio", webrtc::TaskQueueFactory::Priority::LOW)));

  // 2. Collect the audio encoders.
  RTC_LOG(LS_VERBOSE) << "Supported send codecs in order of preference:";
  send_codecs_ = CollectCodecs(encoder_factory_->GetSupportedEncoders());
  for (const AudioCodec& codec : send_codecs_) {
    RTC_LOG(LS_VERBOSE) << ToString(codec);
  }

  // 3. Collect the audio decoders.
  RTC_LOG(LS_VERBOSE) << "Supported recv codecs in order of preference:";
  recv_codecs_ = CollectCodecs(decoder_factory_->GetSupportedDecoders());
  for (const AudioCodec& codec : recv_codecs_) {
    RTC_LOG(LS_VERBOSE) << ToString(codec);
  }

  // 4. Create the ADM.
#if defined(WEBRTC_INCLUDE_INTERNAL_AUDIO_DEVICE)
  // No ADM supplied? Create a default one.
  if (!adm_) {
    adm_ = webrtc::AudioDeviceModule::Create(
        webrtc::AudioDeviceModule::kPlatformDefaultAudio, task_queue_factory_);
  }
#endif  // WEBRTC_INCLUDE_INTERNAL_AUDIO_DEVICE
  RTC_CHECK(adm());

  // 5. Initialize the ADM.
  webrtc::adm_helpers::Init(adm());

  // 6. Set up AudioState. <- the step discussed in this article
  {
    webrtc::AudioState::Config config;
    if (audio_mixer_) {
      config.audio_mixer = audio_mixer_;
    } else {
      config.audio_mixer = webrtc::AudioMixerImpl::Create();
    }
    config.audio_processing = apm_;
    config.audio_device_module = adm_;
    if (audio_frame_processor_)
      config.async_audio_processing_factory =
          new rtc::RefCountedObject<webrtc::AsyncAudioProcessing::Factory>(
              *audio_frame_processor_, *task_queue_factory_);
    audio_state_ = webrtc::AudioState::Create(config);
  }

  // 7. Connect the ADM to our audio path.
  // Register the callback: once audio data is captured, it is delivered
  // through audio_transport().
  adm()->RegisterAudioCallback(audio_state()->audio_transport());

  // 8. Set default engine options.
  {
    AudioOptions options;
    options.echo_cancellation = true;
    options.auto_gain_control = true;
#if defined(WEBRTC_IOS)
    // On iOS, VPIO provides built-in NS.
    options.noise_suppression = false;
    options.typing_detection = false;
#else
    options.noise_suppression = true;
    options.typing_detection = true;
#endif
    options.experimental_ns = false;
    options.highpass_filter = true;
    options.stereo_swapping = false;
    options.audio_jitter_buffer_max_packets = 200;
    options.audio_jitter_buffer_fast_accelerate = false;
    options.audio_jitter_buffer_min_delay_ms = 0;
    options.audio_jitter_buffer_enable_rtx_handling = false;
    options.experimental_agc = false;
    options.residual_echo_detector = true;
    bool error = ApplyOptions(options);
    RTC_DCHECK(error);
  }

  initialized_ = true;
}
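Step 7 of `Init` gives the ADM a pointer to the transport owned by the AudioState, so every captured buffer flows into the audio pipeline. The sketch below models that callback contract under heavy simplification. `SimpleAudioTransport`, `FakeAudioDevice` and `CountingTransport` are hypothetical names. The real `webrtc::AudioTransport::RecordedDataIsAvailable` takes many more parameters (channel count, sample rate, delay, and so on).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, heavily simplified version of the ADM -> transport contract.
class SimpleAudioTransport {
 public:
  virtual ~SimpleAudioTransport() = default;
  virtual void RecordedDataIsAvailable(const std::int16_t* samples,
                                       std::size_t count) = 0;
};

// Hypothetical device: mirrors the idea of adm()->RegisterAudioCallback(...).
class FakeAudioDevice {
 public:
  // Keeps a non-owning pointer; the transport must outlive the device's use of it.
  void RegisterAudioCallback(SimpleAudioTransport* transport) {
    transport_ = transport;
  }

  // Simulates the capture thread delivering one buffer of samples.
  void SimulateCapture(const std::vector<std::int16_t>& buffer) {
    if (transport_ != nullptr) {
      transport_->RecordedDataIsAvailable(buffer.data(), buffer.size());
    }
  }

 private:
  SimpleAudioTransport* transport_ = nullptr;
};

// Stand-in for the transport the AudioState owns (AudioTransportImpl in WebRTC).
class CountingTransport : public SimpleAudioTransport {
 public:
  void RecordedDataIsAvailable(const std::int16_t*, std::size_t count) override {
    ++callbacks;
    total_samples += count;
  }
  int callbacks = 0;
  std::size_t total_samples = 0;
};
```

Before registration, captured audio is simply dropped. Afterwards, each buffer reaches the transport, for example 480 samples for 10 ms of 48 kHz mono audio.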

—》

// 6. Set up AudioState. <- the step discussed in this article
{
  webrtc::AudioState::Config config;
  if (audio_mixer_) {
    config.audio_mixer = audio_mixer_;
  } else {
    config.audio_mixer = webrtc::AudioMixerImpl::Create();
  }
  config.audio_processing = apm_;
  config.audio_device_module = adm_;
  if (audio_frame_processor_)
    config.async_audio_processing_factory =
        new rtc::RefCountedObject<webrtc::AsyncAudioProcessing::Factory>(
            *audio_frame_processor_, *task_queue_factory_);
  audio_state_ = webrtc::AudioState::Create(config);
}
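Step 6 fills a config with an injected-or-default mixer and an optional async processing factory. Below is a minimal sketch of that selection logic. It assumes hypothetical `Mixer` and `AudioStateConfig` types, and uses `std::shared_ptr` in place of WebRTC's ref-counted pointers. The `has_async_processing` flag only stands in for `async_audio_processing_factory` being set.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for webrtc::AudioMixer.
struct Mixer {
  std::string name;
};

// Hypothetical, trimmed-down stand-in for webrtc::AudioState::Config.
struct AudioStateConfig {
  std::shared_ptr<Mixer> audio_mixer;
  bool has_async_processing = false;  // stands in for async_audio_processing_factory
};

// Mirrors step 6: prefer the injected mixer, otherwise create a default one
// (WebRTC calls webrtc::AudioMixerImpl::Create() here). The async processing
// part is only set when a frame processor was supplied.
AudioStateConfig BuildConfig(std::shared_ptr<Mixer> injected_mixer,
                             bool has_frame_processor) {
  AudioStateConfig config;
  if (injected_mixer) {
    config.audio_mixer = std::move(injected_mixer);
  } else {
    config.audio_mixer = std::make_shared<Mixer>(Mixer{"default"});
  }
  config.has_async_processing = has_frame_processor;
  return config;
}
```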

Current call stack

—》

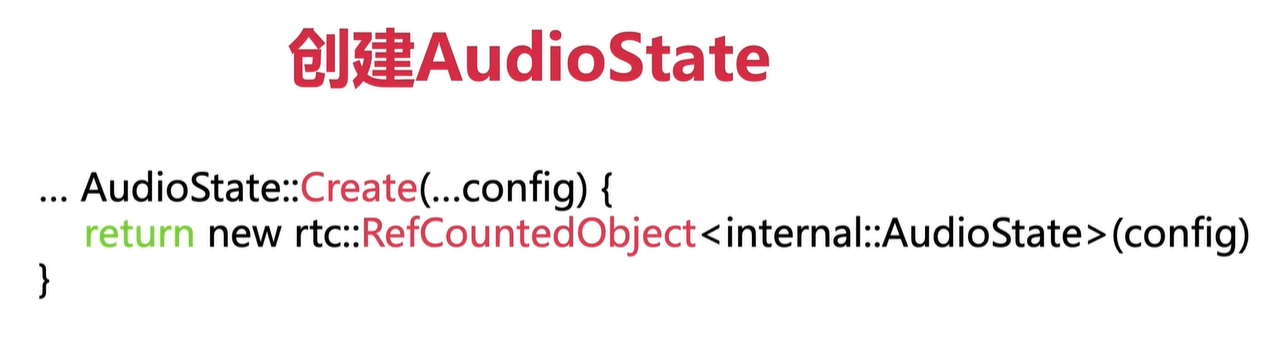

AudioState::Create

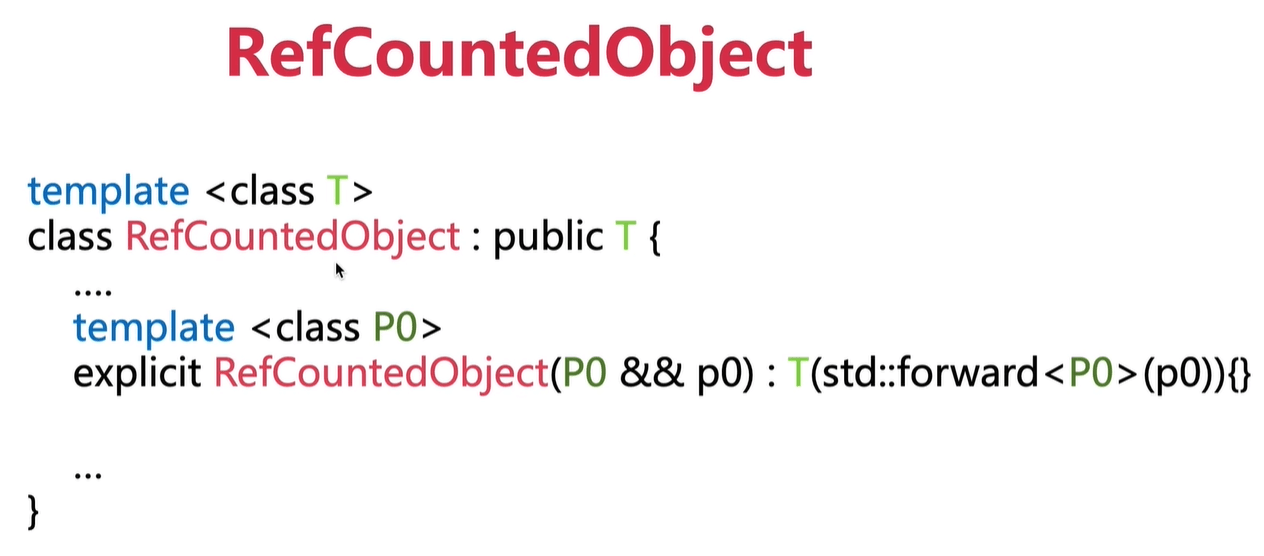

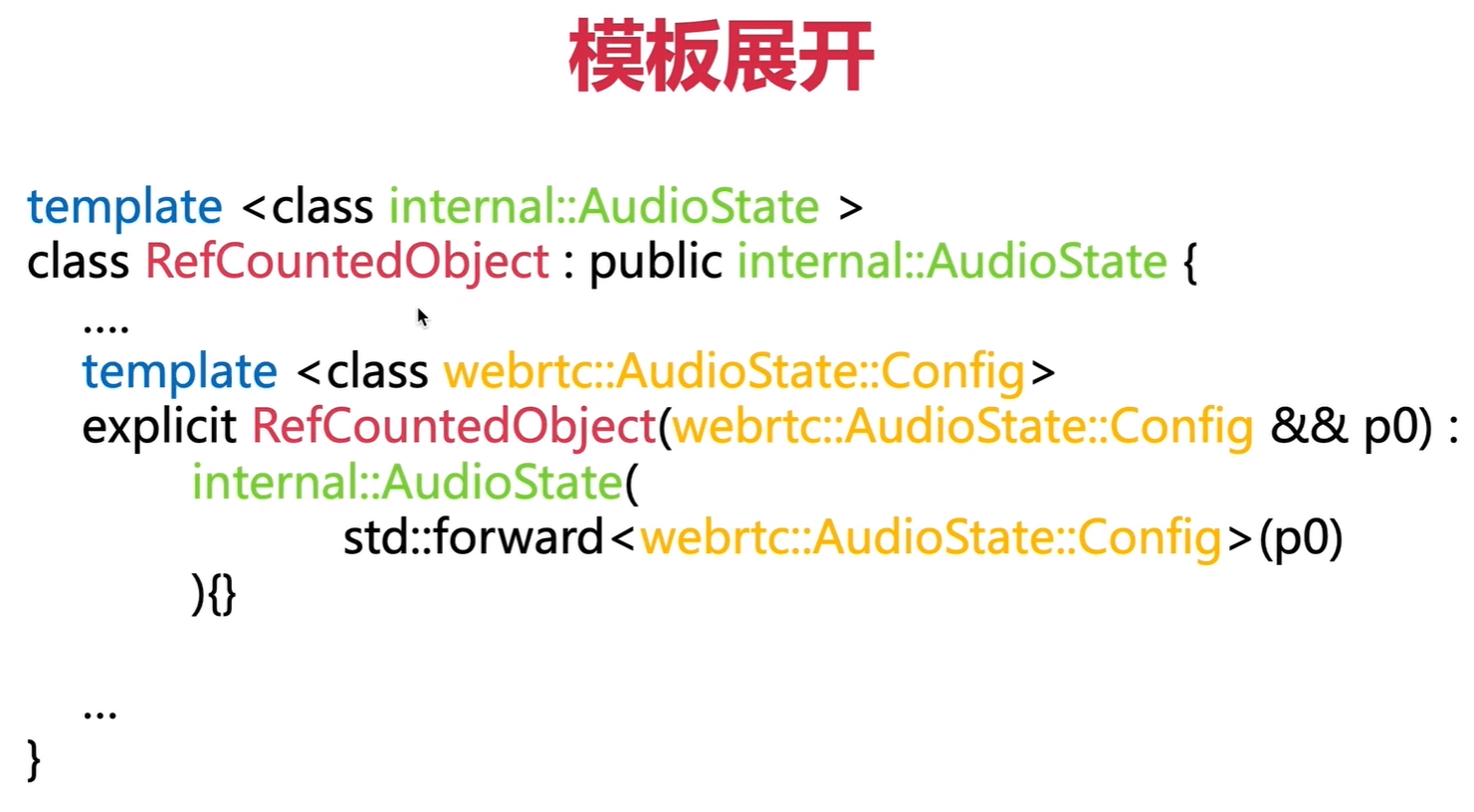

rtc::scoped_refptr<AudioState> AudioState::Create(
    const AudioState::Config& config) {
  return new rtc::RefCountedObject<internal::AudioState>(config);
}
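`AudioState::Create` wraps the internal class in `rtc::RefCountedObject` and returns it as a `scoped_refptr` to the public interface. The following is a simplified, hypothetical re-implementation of that intrusive reference-counting idea, not WebRTC's actual code. For example, the real interface's `Release()` returns a status value. It shows that the object is destroyed only when the last reference goes away. `AudioStateLike`, `AudioStateImpl` and `CreateAudioState` are illustrative names.

```cpp
#include <atomic>
#include <cassert>
#include <utility>

// Hypothetical ref-count interface (the real Release() returns a status).
class RefCountInterface {
 public:
  virtual void AddRef() const = 0;
  virtual void Release() const = 0;

 protected:
  virtual ~RefCountInterface() = default;
};

// Adds an intrusive reference count to any T derived from RefCountInterface.
template <typename T>
class RefCountedObject : public T {
 public:
  template <typename... Args>
  explicit RefCountedObject(Args&&... args) : T(std::forward<Args>(args)...) {}
  void AddRef() const override { count_.fetch_add(1); }
  void Release() const override {
    if (count_.fetch_sub(1) == 1) delete this;  // last reference gone
  }

 protected:
  ~RefCountedObject() override = default;

 private:
  mutable std::atomic<int> count_{0};
};

// Minimal smart pointer that calls AddRef/Release.
template <typename T>
class scoped_refptr {
 public:
  scoped_refptr(T* p = nullptr) : ptr_(p) { if (ptr_) ptr_->AddRef(); }
  scoped_refptr(const scoped_refptr& o) : scoped_refptr(o.ptr_) {}
  scoped_refptr& operator=(scoped_refptr o) { std::swap(ptr_, o.ptr_); return *this; }
  ~scoped_refptr() { if (ptr_) ptr_->Release(); }
  T* operator->() const { return ptr_; }
  T* get() const { return ptr_; }

 private:
  T* ptr_;
};

int g_impl_destroyed = 0;  // counts AudioStateImpl destructions (for the demo)

// Hypothetical interface/implementation pair standing in for
// webrtc::AudioState and webrtc::internal::AudioState.
class AudioStateLike : public RefCountInterface {
 public:
  virtual int sample_rate() const = 0;
};

class AudioStateImpl : public AudioStateLike {
 public:
  explicit AudioStateImpl(int rate) : rate_(rate) {}
  ~AudioStateImpl() override { ++g_impl_destroyed; }
  int sample_rate() const override { return rate_; }

 private:
  int rate_;
};

// Mirrors AudioState::Create: wrap the concrete class, hand back the interface.
scoped_refptr<AudioStateLike> CreateAudioState(int rate) {
  return new RefCountedObject<AudioStateImpl>(rate);
}
```

Because the count lives inside the object, the raw pointer returned by `new` can be converted to a smart pointer of the base interface type without a separate control block.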

internal::AudioState::AudioState

namespace webrtc {
namespace internal {

AudioState::AudioState(const AudioState::Config& config)
    : config_(config),
      audio_transport_(config_.audio_mixer,
                       config_.audio_processing.get(),
                       config_.async_audio_processing_factory.get()) {
  process_thread_checker_.Detach();
  RTC_DCHECK(config_.audio_mixer);
  RTC_DCHECK(config_.audio_device_module);
}
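The initializer list builds `audio_transport_` from `config_`, the member copy, not from the `config` parameter, and passes raw pointers obtained with `.get()`. C++ initializes members in declaration order, so this presumably relies on `config_` being declared before `audio_transport_` in the class. A small sketch of that pattern, using hypothetical `Config`, `Transport` and `State` types rather than the WebRTC classes:

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-ins; not WebRTC types.
struct Mixer { std::string name; };
struct Processing { int id = 0; };

struct Config {
  std::shared_ptr<Mixer> audio_mixer;
  std::shared_ptr<Processing> audio_processing;  // a scoped_refptr in WebRTC
};

// Plays the role of AudioTransportImpl: shares the mixer, borrows the APM.
class Transport {
 public:
  Transport(std::shared_ptr<Mixer> mixer, Processing* apm)
      : mixer_(std::move(mixer)), apm_(apm) {}
  Mixer* mixer() const { return mixer_.get(); }
  Processing* apm() const { return apm_; }

 private:
  std::shared_ptr<Mixer> mixer_;
  Processing* apm_;  // non-owning; kept alive by State::config_
};

class State {
 public:
  explicit State(const Config& config)
      : config_(config),  // runs first because config_ is declared first
        transport_(config_.audio_mixer, config_.audio_processing.get()) {
    assert(config_.audio_mixer);  // analogous to RTC_DCHECK(config_.audio_mixer)
  }
  const Config& config() const { return config_; }
  const Transport& transport() const { return transport_; }

 private:
  // Declaration order == initialization order: transport_'s initializer
  // reads config_, so config_ must be declared before it.
  const Config config_;
  Transport transport_;
};
```

The borrowed raw pointer stays valid for the object's lifetime because `config_` keeps its own reference to the processing object.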