Two Opus encoders

For mono or stereo audio, WebRTC uses webrtc_opus; for audio with more than two channels, it uses webrtc_multiopus.

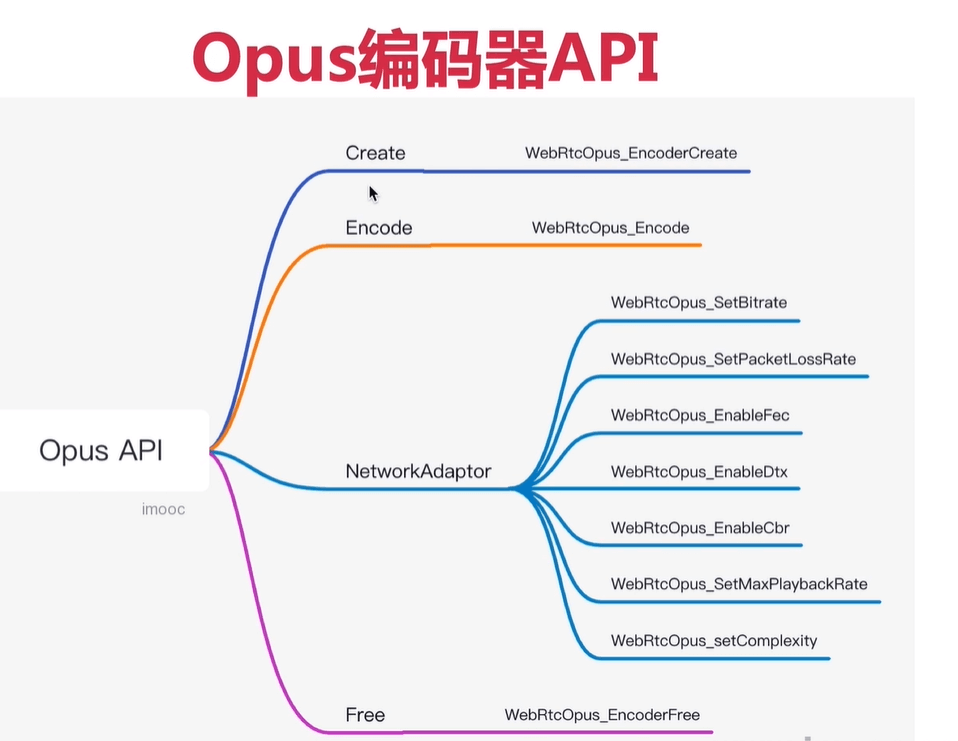

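Opus encoder API

The API notes below are taken from 《webrtc音频QOS方法二(opus编码器自适应网络参数调整功能)》 ("WebRTC audio QoS, method 2: adaptive network parameter tuning in the Opus encoder").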

https://blog.csdn.net/CrystalShaw/article/details/106940151

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\audio_coding\codecs\opus\opus_interface.h

1、WebRtcOpus_SetBitRate

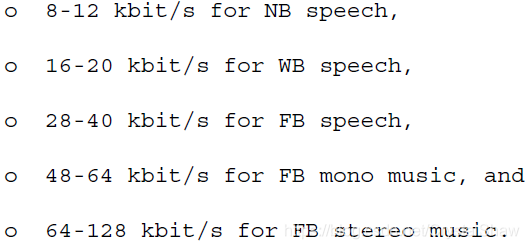

Opus支持码率从6 kbit/s到510 kbit/s的切换功能,以适应这种网络状态。以20ms单帧数据编码为例,下面是各种配置的Opus的比特率最佳点。<br />
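The sketch below makes that range concrete. It clamps a requested bitrate into the 6 to 510 kbit/s range and converts the result into bytes per 20 ms frame. The constants and the helpers `ClampOpusBitrate` and `BytesPerFrame` are hypothetical names of my own, not WebRTC's API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical constants named after the range quoted above (6-510 kbit/s).
constexpr int kOpusMinBitrateBps = 6000;
constexpr int kOpusMaxBitrateBps = 510000;

// Hypothetical helper: clamp a requested target bitrate into the Opus range.
int ClampOpusBitrate(int requested_bps) {
  return std::max(kOpusMinBitrateBps,
                  std::min(requested_bps, kOpusMaxBitrateBps));
}

// Bytes produced by one frame at a constant bitrate:
// bits = bitrate_bps * frame_ms / 1000, bytes = bits / 8 (truncated).
int BytesPerFrame(int bitrate_bps, int frame_ms) {
  assert(bitrate_bps >= 0 && frame_ms > 0);
  const std::int64_t bits = std::int64_t{bitrate_bps} * frame_ms / 1000;
  return static_cast<int>(bits / 8);
}
```

At 510 kbit/s, a 20 ms frame comes to 1275 bytes, which is the largest frame size RFC 6716 allows. At 6 kbit/s, the same frame is only 15 bytes.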

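2. WebRtcOpus_SetPacketLossRate

The packet loss rate is configured at runtime so that the amount of Opus FEC redundancy can be adjusted dynamically. The Opus encoder has a built-in in-band FEC mechanism that improves resilience to packet loss. Roughly, it is an asymmetric redundancy scheme: some key information is encoded a second time and sent again. In practice, a lower-bitrate copy of the previous frame travels inside the current packet.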

3. WebRtcOpus_EnableFec/DisableFec

Enables or disables in-band FEC. Reading through the Opus source shows that only the SILK encoder supports in-band FEC. The call stack is:

opus_encode_native->silk_Encode->silk_encode_frame_Fxx->silk_encode_frame_FLP->silk_LBRR_encode_FLP

CELT does not support in-band FEC. My guess is that CELT instead improves its resilience to packet loss by changing the reference frame length.
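A toy model helps make the in-band FEC idea concrete. The sketch below assumes, as described above, that packet n carries the primary encoding of frame n plus a lower-bitrate copy of frame n-1. `RecoverFrames`, `CountLost`, and `FrameSource` are hypothetical names. The model ignores bitrate, decoded quality, and the encoder's own decision about when to emit redundancy.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Where the decoder gets each frame from in this toy model.
enum class FrameSource { kPrimary, kFecCopy, kLost };

// packet_lost[i] == true means packet i never arrived. Packet i is assumed to
// carry frame i (primary) and a redundant copy of frame i - 1.
std::vector<FrameSource> RecoverFrames(const std::vector<bool>& packet_lost,
                                       bool fec_enabled) {
  const std::size_t n = packet_lost.size();
  std::vector<FrameSource> frames(n, FrameSource::kLost);
  for (std::size_t i = 0; i < n; ++i) {
    if (!packet_lost[i]) {
      frames[i] = FrameSource::kPrimary;  // Own packet arrived.
    } else if (fec_enabled && i + 1 < n && !packet_lost[i + 1]) {
      frames[i] = FrameSource::kFecCopy;  // Rebuilt from the next packet.
    }
    // Otherwise the frame stays kLost and a real decoder would run PLC.
  }
  return frames;
}

std::size_t CountLost(const std::vector<FrameSource>& frames) {
  std::size_t lost = 0;
  for (FrameSource s : frames) {
    if (s == FrameSource::kLost) ++lost;
  }
  return lost;
}
```

Take the loss pattern {ok, lost, ok, lost, lost, ok}. FEC recovers frames 1 and 4 from the packets that follow them. Frame 3 is still lost, because packet 4, which would have carried its copy, was lost too. Only one previous frame is protected, so burst losses still fall back to concealment.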

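4. WebRtcOpus_EnableDtx/DisableDtx

DTX stands for Discontinuous Transmission. In a VoIP call, unlike with music, sound is not continuous: speech comes in bursts separated by pauses. Encoding those pauses as normal audio wastes bandwidth, so Opus supports DTX. When no significant speech is detected, the encoder only sends a silence-indication packet periodically (every 400 ms). In principle, the receiver could then feed comfort-noise packets to the audio renderer. Opus does not support CNG, however, so it cannot insert comfort-noise packets and feeds silence packets to the renderer instead.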
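As a rough illustration of the bandwidth saving, the sketch below counts the packets sent during a silent stretch. It is a simplified model under my own assumptions:

- With DTX, one small packet is sent per 400 ms.
- Without DTX, one packet is sent per frame.
- The frames the encoder still sends before entering DTX are ignored.

The function names are hypothetical.

```cpp
#include <cassert>

// Keep-alive interval during DTX, as quoted above.
constexpr int kDtxIntervalMs = 400;

// Without DTX every frame of the silent stretch is encoded and sent.
int PacketsWithoutDtx(int silence_ms, int frame_ms) {
  assert(silence_ms >= 0 && frame_ms > 0);
  return silence_ms / frame_ms;
}

// With DTX (simplified): one packet at t = 0, 400, 800, ... inside the
// stretch, i.e. ceil(silence_ms / 400).
int PacketsWithDtx(int silence_ms) {
  assert(silence_ms >= 0);
  return (silence_ms + kDtxIntervalMs - 1) / kDtxIntervalMs;
}
```

For 2 s of silence with 20 ms frames, that is 100 packets without DTX versus 5 with it.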

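5. WebRtcOpus_EnableCbr/DisableCbr

Opus supports both constant bitrate (CBR) and variable bitrate (VBR) encoding. Streaming media typically uses CBR, while VoIP typically uses VBR.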

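6. WebRtcOpus_SetMaxPlaybackRate

Adjusts the encoder's bandwidth parameter according to the given sampling rate (the maximum playback rate). In effect, it caps the audio bandwidth the encoder will code.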
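The mapping below is a sketch of how I understand the maximum playback rate to translate into a bandwidth cap (Opus's `OPUS_SET_MAX_BANDWIDTH` setting). The thresholds follow the Opus bandwidth classes. Treat the function name, the string return type, and the exact cut-off points as assumptions to check against opus_interface.cc.

```cpp
#include <cassert>
#include <string>

// Maps a maximum playback rate to the widest Opus bandwidth class worth
// coding. Returns the class name as a string for readability; the real code
// would pass an OPUS_BANDWIDTH_* constant to the encoder instead.
std::string MaxBandwidthForPlaybackRate(int max_playback_rate_hz) {
  assert(max_playback_rate_hz > 0);
  if (max_playback_rate_hz <= 8000) return "NARROWBAND";      // 4 kHz audio
  if (max_playback_rate_hz <= 12000) return "MEDIUMBAND";     // 6 kHz audio
  if (max_playback_rate_hz <= 16000) return "WIDEBAND";       // 8 kHz audio
  if (max_playback_rate_hz <= 24000) return "SUPERWIDEBAND";  // 12 kHz audio
  return "FULLBAND";                                           // 20 kHz audio
}
```

A peer that can only play back 16 kHz audio gains nothing from fullband coding, so capping the bandwidth leaves more bits for the frequencies it can actually reproduce.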

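7. WebRtcOpus_SetComplexity

The value ranges from 0 to 10. Higher values mean higher computational complexity and better audio quality. In WebRTC, only Android, iOS, and ARM builds support switching complexity; on Windows the complexity is always the default value of 9.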
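On platforms where complexity switching is enabled, the comment in RecreateEncoderInstance (later in this post) mentions a hysteresis window around a bitrate threshold. Here is a minimal sketch of such a selection, assuming a hypothetical `ComplexityConfig` whose threshold, window, and complexity values are illustrative only. Outside the window the bitrate decides. Inside it the function returns `std::nullopt`, meaning "no change", which is why the caller falls back with `value_or`.

```cpp
#include <cassert>
#include <optional>

// Hypothetical settings; every value here is an illustrative assumption.
struct ComplexityConfig {
  int complexity = 5;           // used at higher bitrates
  int low_rate_complexity = 9;  // used at lower bitrates
  int threshold_bps = 12500;    // switching point
  int window_bps = 1500;        // half-width of the hysteresis window
};

// Returns the complexity for bitrate_bps, or std::nullopt when the bitrate is
// inside [threshold - window, threshold + window] (keep the current value).
std::optional<int> NewComplexity(const ComplexityConfig& c, int bitrate_bps) {
  assert(c.window_bps >= 0);
  if (bitrate_bps >= c.threshold_bps - c.window_bps &&
      bitrate_bps <= c.threshold_bps + c.window_bps) {
    return std::nullopt;
  }
  return bitrate_bps <= c.threshold_bps ? c.low_rate_complexity : c.complexity;
}
```

The window keeps the encoder from flipping complexity back and forth while the bitrate hovers near the threshold.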

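8. WebRtcOpus_SetForceChannels

Opus supports switching between mono and stereo. This setting forces the encoder to produce mono or stereo packets regardless of the input format. If the incoming stream is stereo and the decoder is mono, the decoder averages the left and right channels and outputs mono.

If the incoming stream is mono and the decoder is stereo, the decoder outputs the same samples on both channels. VoIP generally transmits mono, while music uses stereo. This mono/stereo switching mainly improves resilience on poor networks in music scenarios.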
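The channel handling described above can be illustrated on interleaved 16-bit PCM (L, R, L, R, ...). This is a standalone sketch of the described averaging and duplication, with hypothetical function names. It is not the Opus decoder's internal code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Stereo -> mono: average each L/R pair.
std::vector<std::int16_t> StereoToMono(
    const std::vector<std::int16_t>& interleaved) {
  assert(interleaved.size() % 2 == 0);
  std::vector<std::int16_t> mono(interleaved.size() / 2);
  for (std::size_t i = 0; i < mono.size(); ++i) {
    // Sum in 32 bits so that two large samples cannot overflow int16.
    const std::int32_t sum =
        std::int32_t{interleaved[2 * i]} + interleaved[2 * i + 1];
    mono[i] = static_cast<std::int16_t>(sum / 2);
  }
  return mono;
}

// Mono -> stereo: copy each sample to both the left and right channel.
std::vector<std::int16_t> MonoToStereo(const std::vector<std::int16_t>& mono) {
  std::vector<std::int16_t> stereo(mono.size() * 2);
  for (std::size_t i = 0; i < mono.size(); ++i) {
    stereo[2 * i] = mono[i];      // left
    stereo[2 * i + 1] = mono[i];  // right gets the same sample
  }
  return stereo;
}
```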

9. WebRtcOpus_EncoderCreate: the application parameter

```cpp
#define OPUS_APPLICATION_VOIP 2048
#define OPUS_APPLICATION_AUDIO 2049
#define OPUS_APPLICATION_RESTRICTED_LOWDELAY 2051
```

There are three application modes: VoIP, audio (music), and restricted low delay. VoIP mode mainly uses SILK, and music mode mainly uses CELT. Low-delay mode gives up some of the VoIP-mode optimizations in exchange for a slightly lower delay.
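The sketch below traces how the pieces of this post fit together:

- SdpToConfig (shown later) selects kVoip for mono and kAudio otherwise.
- RecreateEncoderInstance passes that choice on as 0 or 1.
- WebRtcOpus_EncoderCreate maps 0 and 1 to the constants above.

The helper names are hypothetical. Note that RESTRICTED_LOWDELAY is never selected on this path.

```cpp
#include <cassert>

// Values of the Opus #defines quoted above.
constexpr int kOpusApplicationVoip = 2048;
constexpr int kOpusApplicationAudio = 2049;
constexpr int kOpusApplicationRestrictedLowDelay = 2051;  // never chosen here

enum class ApplicationMode { kVoip, kAudio };

// Step 1 (SdpToConfig): mono -> kVoip, anything else -> kAudio.
ApplicationMode ModeForChannels(int num_channels) {
  assert(num_channels >= 1);
  return num_channels == 1 ? ApplicationMode::kVoip : ApplicationMode::kAudio;
}

// Step 2 (RecreateEncoderInstance): kVoip -> 0, otherwise 1.
int WebRtcApplicationFlag(ApplicationMode mode) {
  return mode == ApplicationMode::kVoip ? 0 : 1;
}

// Step 3 (WebRtcOpus_EncoderCreate): 0 -> VOIP, 1 -> AUDIO, else error (-1).
int OpusApplicationForFlag(int flag) {
  switch (flag) {
    case 0:
      return kOpusApplicationVoip;
    case 1:
      return kOpusApplicationAudio;
    default:
      return -1;
  }
}
```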

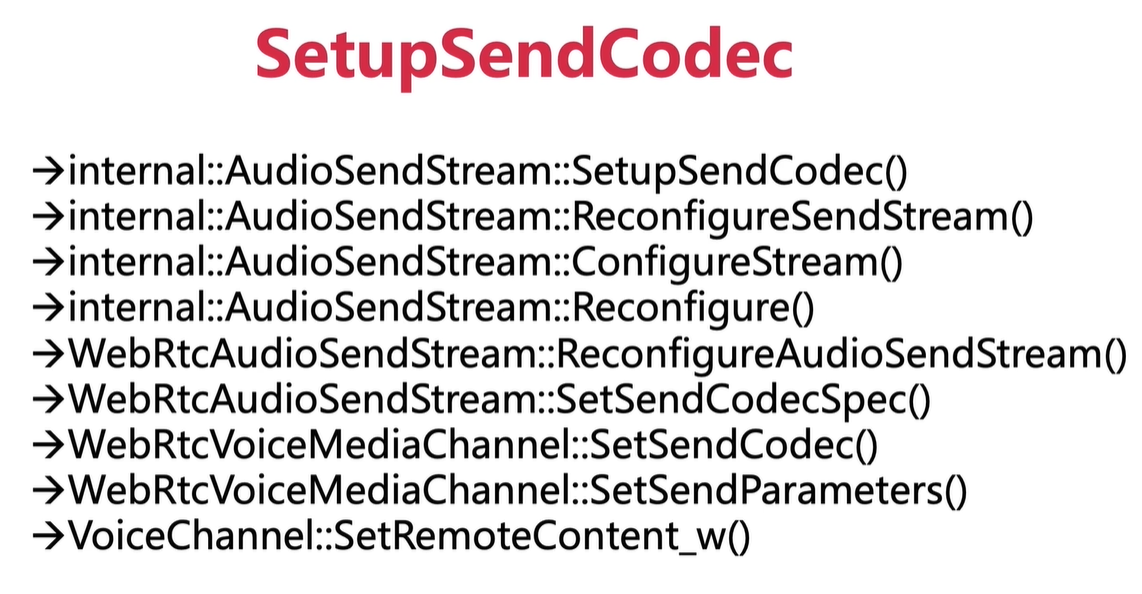

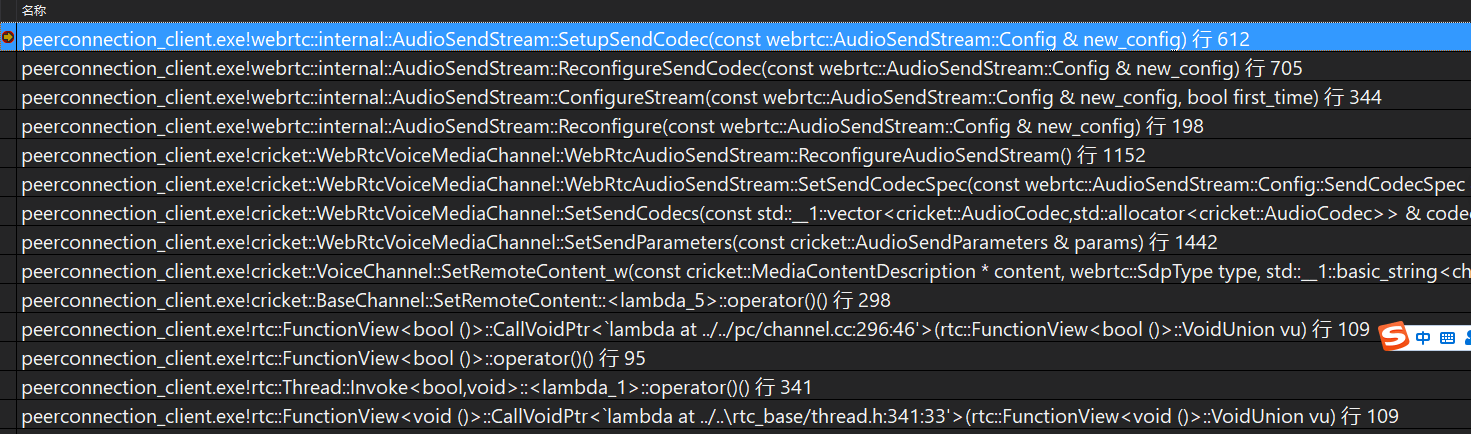

SetupSendCodec call flow

Code analysis

AudioSendStream::SetupSendCodec

```cpp
bool AudioSendStream::SetupSendCodec(const Config& new_config) {
  RTC_DCHECK(new_config.send_codec_spec);
  const auto& spec = *new_config.send_codec_spec;

  RTC_DCHECK(new_config.encoder_factory);
  std::unique_ptr<AudioEncoder> encoder =
      new_config.encoder_factory->MakeAudioEncoder(
          spec.payload_type, spec.format, new_config.codec_pair_id);

  if (!encoder) {
    RTC_DLOG(LS_ERROR) << "Unable to create encoder for "
                       << rtc::ToString(spec.format);
    return false;
  }

  // If a bitrate has been specified for the codec, use it over the
  // codec's default.
  if (spec.target_bitrate_bps) {
    encoder->OnReceivedTargetAudioBitrate(*spec.target_bitrate_bps);
  }

  // Enable ANA if configured (currently only used by Opus).
  if (new_config.audio_network_adaptor_config) {
    if (encoder->EnableAudioNetworkAdaptor(
            *new_config.audio_network_adaptor_config, event_log_)) {
      RTC_LOG(LS_INFO) << "Audio network adaptor enabled on SSRC "
                       << new_config.rtp.ssrc;
    } else {
      RTC_LOG(LS_INFO) << "Failed to enable Audio network adaptor on SSRC "
                       << new_config.rtp.ssrc;
    }
  }

  // Wrap the encoder in an AudioEncoderCNG, if VAD is enabled.
  if (spec.cng_payload_type) {
    AudioEncoderCngConfig cng_config;
    cng_config.num_channels = encoder->NumChannels();
    cng_config.payload_type = *spec.cng_payload_type;
    cng_config.speech_encoder = std::move(encoder);
    cng_config.vad_mode = Vad::kVadNormal;
    encoder = CreateComfortNoiseEncoder(std::move(cng_config));

    RegisterCngPayloadType(*spec.cng_payload_type,
                           new_config.send_codec_spec->format.clockrate_hz);
  }

  // Wrap the encoder in a RED encoder, if RED is enabled.
  if (spec.red_payload_type) {
    AudioEncoderCopyRed::Config red_config;
    red_config.payload_type = *spec.red_payload_type;
    red_config.speech_encoder = std::move(encoder);
    encoder = std::make_unique<AudioEncoderCopyRed>(std::move(red_config));
  }

  // Set currently known overhead (used in ANA, opus only).
  // If overhead changes later, it will be updated in UpdateOverheadForEncoder.
  {
    MutexLock lock(&overhead_per_packet_lock_);
    size_t overhead = GetPerPacketOverheadBytes();
    if (overhead > 0) {
      encoder->OnReceivedOverhead(overhead);
    }
  }

  StoreEncoderProperties(encoder->SampleRateHz(), encoder->NumChannels());
  channel_send_->SetEncoder(new_config.send_codec_spec->payload_type,
                            std::move(encoder));

  return true;
}
```
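SetupSendCodec builds the final encoder by layering wrappers. If a CNG payload type is set, the CNG wrapper takes the codec encoder as its speech_encoder. The RED wrapper then wraps whatever encoder exists at that point. The sketch below reproduces just that ownership chain with hypothetical, stripped-down types (`Encoder`, `FakeOpusEncoder`, `WrappingEncoder`) to show the resulting nesting order. It is not WebRTC's class hierarchy.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical, stripped-down stand-in for AudioEncoder.
struct Encoder {
  virtual ~Encoder() = default;
  virtual std::string Describe() const = 0;
};

// Stand-in for the codec encoder produced by the factory.
struct FakeOpusEncoder : Encoder {
  std::string Describe() const override { return "Opus"; }
};

// Stand-in for a wrapper (CNG or RED) that owns the encoder it wraps.
struct WrappingEncoder : Encoder {
  WrappingEncoder(std::string name, std::unique_ptr<Encoder> inner)
      : name_(std::move(name)), inner_(std::move(inner)) {
    assert(inner_ != nullptr);
  }
  std::string Describe() const override {
    return name_ + "(" + inner_->Describe() + ")";
  }
  std::string name_;
  std::unique_ptr<Encoder> inner_;
};

// Same order as SetupSendCodec: CNG wraps the codec first, then RED wraps
// whatever encoder exists at that point.
std::unique_ptr<Encoder> BuildSendEncoder(bool cng_enabled, bool red_enabled) {
  std::unique_ptr<Encoder> encoder = std::make_unique<FakeOpusEncoder>();
  if (cng_enabled) {
    encoder = std::make_unique<WrappingEncoder>("CNG", std::move(encoder));
  }
  if (red_enabled) {
    encoder = std::make_unique<WrappingEncoder>("RED", std::move(encoder));
  }
  return encoder;
}
```

With both enabled, the outermost layer is RED, so RED sees the already CNG-processed output, and the channel only ever holds a single top-level encoder.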

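Stepping through in the debugger confirms that Opus is the codec selected here.

Once the encoder parameters are available, MakeAudioEncoder is called to create the Opus encoder:

→

MakeAudioEncoder

→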

```cpp
std::unique_ptr<AudioEncoder> MakeAudioEncoder(
    int payload_type,
    const SdpAudioFormat& format,
    absl::optional<AudioCodecPairId> codec_pair_id) override {
  // Create the audio encoder by recursing over the template parameter pack.
  return Helper<Ts...>::MakeAudioEncoder(payload_type, format, codec_pair_id);
}
```

→

```cpp
static std::unique_ptr<AudioEncoder> MakeAudioEncoder(
    int payload_type,
    const SdpAudioFormat& format,
    absl::optional<AudioCodecPairId> codec_pair_id) {
  auto opt_config = T::SdpToConfig(format);
  if (opt_config) {
    return T::MakeAudioEncoder(*opt_config, payload_type, codec_pair_id);
  } else {
    return Helper<Ts...>::MakeAudioEncoder(payload_type, format,
                                           codec_pair_id);
  }
}
```
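The Helper recursion tries each encoder type in the parameter pack in order and returns the first one whose SdpToConfig accepts the format. Below is a self-contained sketch of the same pattern with two hypothetical encoder types (`OpusTraits`, `PcmuTraits`) and a trimmed signature (no payload type or codec pair id). The empty `Helper<>` specialization is the recursion's base case. I assume it corresponds to the factory returning nullptr when no type matches.

```cpp
#include <cassert>
#include <memory>
#include <optional>
#include <string>
#include <utility>

// Hypothetical stand-ins for SdpAudioFormat and AudioEncoder.
struct Format {
  std::string name;
};

struct Encoder {
  explicit Encoder(std::string codec) : codec_name(std::move(codec)) {
    assert(!codec_name.empty());
  }
  std::string codec_name;
};

// Two hypothetical encoder types exposing the static interface Helper uses.
struct OpusTraits {
  struct Config {};
  static std::optional<Config> SdpToConfig(const Format& format) {
    if (format.name == "opus") return Config{};
    return std::nullopt;
  }
  static std::unique_ptr<Encoder> MakeAudioEncoder(const Config&) {
    return std::make_unique<Encoder>("opus");
  }
};

struct PcmuTraits {
  struct Config {};
  static std::optional<Config> SdpToConfig(const Format& format) {
    if (format.name == "PCMU") return Config{};
    return std::nullopt;
  }
  static std::unique_ptr<Encoder> MakeAudioEncoder(const Config&) {
    return std::make_unique<Encoder>("PCMU");
  }
};

// Primary template, only declared; the specializations do the work.
template <typename... Ts>
struct Helper;

// Base case: the pack is exhausted and no type accepted the format.
template <>
struct Helper<> {
  static std::unique_ptr<Encoder> MakeAudioEncoder(const Format&) {
    return nullptr;
  }
};

// Recursive case: try T, then fall through to the remaining types.
template <typename T, typename... Ts>
struct Helper<T, Ts...> {
  static std::unique_ptr<Encoder> MakeAudioEncoder(const Format& format) {
    auto opt_config = T::SdpToConfig(format);
    if (opt_config) {
      return T::MakeAudioEncoder(*opt_config);
    }
    return Helper<Ts...>::MakeAudioEncoder(format);
  }
};

// Factory entry point, analogous to the override that calls Helper<Ts...>.
template <typename... Ts>
std::unique_ptr<Encoder> MakeEncoder(const Format& format) {
  return Helper<Ts...>::MakeAudioEncoder(format);
}
```

Because the first type whose SdpToConfig succeeds wins, the order of the template arguments determines which encoder handles a format that several types could accept.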

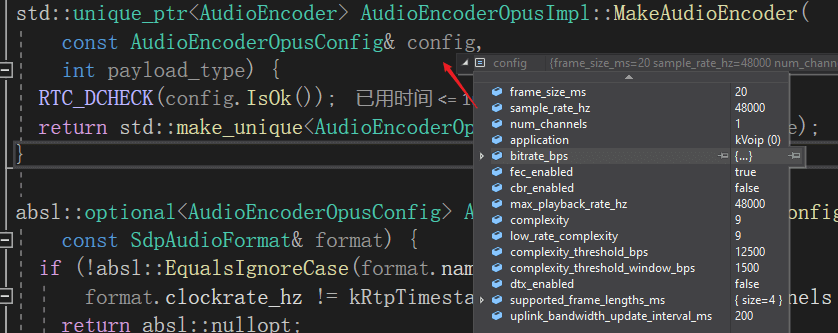

AudioEncoderOpusImpl::MakeAudioEncoder

H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\modules\audio_coding\codecs\opus\audio_encoder_opus.cc

```cpp
std::unique_ptr<AudioEncoder> AudioEncoderOpusImpl::MakeAudioEncoder(
    const AudioEncoderOpusConfig& config,
    int payload_type) {
  RTC_DCHECK(config.IsOk());
  return std::make_unique<AudioEncoderOpusImpl>(config, payload_type);
}
```

Here each audio frame is 20 ms long. Opus supports frame sizes of up to 120 ms, meaning a single encoded packet can carry at most 120 ms of audio.
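As a worked example of what a 20 ms frame means in samples: RecreateEncoderInstance (below) reserves `Num10msFramesPerPacket() * SamplesPer10msFrame()` samples for its input buffer. The sketch below recomputes that product with hypothetical free functions of the same names. It assumes interleaved samples, i.e. sample_rate / 100 samples per channel for every 10 ms.

```cpp
#include <cassert>

// Samples in one 10 ms block across all channels (interleaved).
int SamplesPer10msFrame(int sample_rate_hz, int num_channels) {
  assert(sample_rate_hz % 100 == 0 && num_channels >= 1);
  return sample_rate_hz / 100 * num_channels;
}

// Number of 10 ms blocks in one packet of frame_size_ms.
int Num10msFramesPerPacket(int frame_size_ms) {
  assert(frame_size_ms > 0 && frame_size_ms % 10 == 0);
  return frame_size_ms / 10;
}

// Total interleaved samples buffered before one packet is encoded.
int SamplesPerPacket(int sample_rate_hz, int num_channels, int frame_size_ms) {
  return Num10msFramesPerPacket(frame_size_ms) *
         SamplesPer10msFrame(sample_rate_hz, num_channels);
}
```

At 48 kHz mono, a 20 ms packet holds 960 samples, and the 120 ms maximum holds 5760.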

→

```cpp
AudioEncoderOpusImpl::AudioEncoderOpusImpl(const AudioEncoderOpusConfig& config,
                                           int payload_type)
    : AudioEncoderOpusImpl(
          config,
          payload_type,
          [this](const std::string& config_string, RtcEventLog* event_log) {
            return DefaultAudioNetworkAdaptorCreator(config_string, event_log);
          },
          // We choose 5sec as initial time constant due to empirical data.
          std::make_unique<SmoothingFilterImpl>(5000)) {}
```

→

```cpp
AudioEncoderOpusImpl::AudioEncoderOpusImpl(
    const AudioEncoderOpusConfig& config,
    int payload_type,
    const AudioNetworkAdaptorCreator& audio_network_adaptor_creator,
    std::unique_ptr<SmoothingFilter> bitrate_smoother)
    : payload_type_(payload_type),
      send_side_bwe_with_overhead_(
          !webrtc::field_trial::IsDisabled("WebRTC-SendSideBwe-WithOverhead")),
      use_stable_target_for_adaptation_(!webrtc::field_trial::IsDisabled(
          "WebRTC-Audio-StableTargetAdaptation")),
      adjust_bandwidth_(
          webrtc::field_trial::IsEnabled("WebRTC-AdjustOpusBandwidth")),
      bitrate_changed_(true),
      bitrate_multipliers_(GetBitrateMultipliers()),
      packet_loss_rate_(0.0),
      inst_(nullptr),
      packet_loss_fraction_smoother_(new PacketLossFractionSmoother()),
      audio_network_adaptor_creator_(audio_network_adaptor_creator),
      bitrate_smoother_(std::move(bitrate_smoother)),
      consecutive_dtx_frames_(0) {
  RTC_DCHECK(0 <= payload_type && payload_type <= 127);

  // Sanity check of the redundant payload type field that we want to get rid
  // of. See https://bugs.chromium.org/p/webrtc/issues/detail?id=7847
  RTC_CHECK(config.payload_type == -1 || config.payload_type == payload_type);

  RTC_CHECK(RecreateEncoderInstance(config));
  SetProjectedPacketLossRate(packet_loss_rate_);
}
```

RecreateEncoderInstance is where the encoder is actually created.

→

AudioEncoderOpusImpl::RecreateEncoderInstance

```cpp
// If the given config is OK, recreate the Opus encoder instance with those
// settings, save the config, and return true. Otherwise, do nothing and return
// false.
bool AudioEncoderOpusImpl::RecreateEncoderInstance(
    const AudioEncoderOpusConfig& config) {
  if (!config.IsOk())
    return false;
  config_ = config;
  if (inst_)
    RTC_CHECK_EQ(0, WebRtcOpus_EncoderFree(inst_));
  input_buffer_.clear();
  input_buffer_.reserve(Num10msFramesPerPacket() * SamplesPer10msFrame());
  RTC_CHECK_EQ(0, WebRtcOpus_EncoderCreate(
                      &inst_, config.num_channels,
                      config.application ==
                              AudioEncoderOpusConfig::ApplicationMode::kVoip
                          ? 0
                          : 1,
                      config.sample_rate_hz));
  const int bitrate = GetBitrateBps(config);
  RTC_CHECK_EQ(0, WebRtcOpus_SetBitRate(inst_, bitrate));
  RTC_LOG(LS_VERBOSE) << "Set Opus bitrate to " << bitrate << " bps.";
  if (config.fec_enabled) {
    RTC_CHECK_EQ(0, WebRtcOpus_EnableFec(inst_));
  } else {
    RTC_CHECK_EQ(0, WebRtcOpus_DisableFec(inst_));
  }
  RTC_CHECK_EQ(
      0, WebRtcOpus_SetMaxPlaybackRate(inst_, config.max_playback_rate_hz));
  // Use the default complexity if the start bitrate is within the hysteresis
  // window.
  complexity_ = GetNewComplexity(config).value_or(config.complexity);
  RTC_CHECK_EQ(0, WebRtcOpus_SetComplexity(inst_, complexity_));
  bitrate_changed_ = true;
  if (config.dtx_enabled) {
    RTC_CHECK_EQ(0, WebRtcOpus_EnableDtx(inst_));
  } else {  // DTX is not enabled in this session (dtx_enabled is false).
    RTC_CHECK_EQ(0, WebRtcOpus_DisableDtx(inst_));
  }
  // packet_loss_rate_ is a fraction (0.0 at this point): * 100 converts it to
  // a percentage and + .5 rounds it to the nearest integer.
  RTC_CHECK_EQ(0,
               WebRtcOpus_SetPacketLossRate(
                   inst_, static_cast<int32_t>(packet_loss_rate_ * 100 + .5)));
  if (config.cbr_enabled) {
    RTC_CHECK_EQ(0, WebRtcOpus_EnableCbr(inst_));
  } else {  // CBR is not enabled in this session (cbr_enabled is false).
    RTC_CHECK_EQ(0, WebRtcOpus_DisableCbr(inst_));
  }
  num_channels_to_encode_ = NumChannels();
  next_frame_length_ms_ = config_.frame_size_ms;
  return true;
}
```
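The packet loss expression is easy to misread, so here it is in isolation, wrapped in a hypothetical function. The member holds a loss fraction, `* 100` turns it into a percentage, and adding `.5` before the integer cast rounds to the nearest whole percent (valid for non-negative values).

```cpp
#include <cassert>
#include <cstdint>

// Same expression as in RecreateEncoderInstance, for a loss fraction 0.0-1.0.
std::int32_t LossFractionToPercent(double packet_loss_rate) {
  assert(packet_loss_rate >= 0.0 && packet_loss_rate <= 1.0);
  return static_cast<std::int32_t>(packet_loss_rate * 100 + .5);
}
```

The constructor initializes packet_loss_rate_ to 0.0, so the encoder starts with a packet loss setting of 0%.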

WebRtcOpus_EncoderCreate

```cpp
int16_t WebRtcOpus_EncoderCreate(OpusEncInst** inst,
                                 size_t channels,
                                 int32_t application,
                                 int sample_rate_hz) {
  int opus_app;
  if (!inst)
    return -1;

  switch (application) {
    case 0:  // This is the path taken in this session (VoIP).
      opus_app = OPUS_APPLICATION_VOIP;
      break;
    case 1:
      opus_app = OPUS_APPLICATION_AUDIO;
      break;
    default:
      return -1;
  }

  OpusEncInst* state =
      reinterpret_cast<OpusEncInst*>(calloc(1, sizeof(OpusEncInst)));
  RTC_DCHECK(state);

  int error;
  state->encoder = opus_encoder_create(
      sample_rate_hz, static_cast<int>(channels), opus_app, &error);

  if (error != OPUS_OK || (!state->encoder && !state->multistream_encoder)) {
    WebRtcOpus_EncoderFree(state);
    return -1;
  }

  state->in_dtx_mode = 0;
  state->channels = channels;
  state->sample_rate_hz = sample_rate_hz;
  state->smooth_energy_non_active_frames = 0.0f;
  state->avoid_noise_pumping_during_dtx =
      webrtc::field_trial::IsEnabled(kAvoidNoisePumpingDuringDtxFieldTrial);

  *inst = state;
  return 0;
}
```

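The function allocates an OpusEncInst with calloc and calls the Opus library's opus_encoder_create. It stores the new encoder in the instance's encoder field and initializes the remaining fields. The instance is handed back through *inst; the function itself returns 0 on success and -1 on failure.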

At the very end, AudioSendStream::SetupSendCodec calls

channel_send_->SetEncoder(new_config.send_codec_spec->payload_type, std::move(encoder));

ChannelSend::SetEncoder

```cpp
void ChannelSend::SetEncoder(int payload_type,
                             std::unique_ptr<AudioEncoder> encoder) {
  RTC_DCHECK_RUN_ON(&worker_thread_checker_);
  RTC_DCHECK_GE(payload_type, 0);
  RTC_DCHECK_LE(payload_type, 127);

  // The RTP/RTCP module needs to know the RTP timestamp rate (i.e. clockrate)
  // as well as some other things, so we collect this info and send it along.
  rtp_rtcp_->RegisterSendPayloadFrequency(payload_type,
                                          encoder->RtpTimestampRateHz());
  rtp_sender_audio_->RegisterAudioPayload("audio", payload_type,
                                          encoder->RtpTimestampRateHz(),
                                          encoder->NumChannels(), 0);

  audio_coding_->SetEncoder(std::move(encoder));
}
```

→

```cpp
// Utility method for simply replacing the existing encoder with a new one.
void SetEncoder(std::unique_ptr<AudioEncoder> new_encoder) {
  ModifyEncoder([&](std::unique_ptr<AudioEncoder>* encoder) {
    *encoder = std::move(new_encoder);
  });
}
```
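The SetEncoder utility is a thin wrapper around ModifyEncoder: callers receive a pointer to the owned encoder slot and may replace or mutate it. The sketch below shows that pattern with a hypothetical `EncoderHolder` class. I assume the real implementation runs the callback under a lock; here std::mutex and std::function stand in for whatever WebRTC actually uses.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical stand-in for AudioEncoder.
struct Encoder {
  explicit Encoder(std::string n) : name(std::move(n)) {}
  std::string name;
};

class EncoderHolder {
 public:
  // Runs `modifier` with exclusive access to the owned encoder slot.
  void ModifyEncoder(
      const std::function<void(std::unique_ptr<Encoder>*)>& modifier) {
    assert(modifier);
    std::lock_guard<std::mutex> lock(mutex_);
    modifier(&encoder_);
  }

  // Same shape as the utility above: replace the encoder via ModifyEncoder.
  void SetEncoder(std::unique_ptr<Encoder> new_encoder) {
    ModifyEncoder([&](std::unique_ptr<Encoder>* encoder) {
      *encoder = std::move(new_encoder);
    });
  }

  std::string CurrentName() {
    std::lock_guard<std::mutex> lock(mutex_);
    return encoder_ ? encoder_->name : std::string();
  }

 private:
  std::mutex mutex_;
  std::unique_ptr<Encoder> encoder_;
};
```

Routing every change through one locked entry point means "replace the encoder" and "tweak the current encoder" share the same synchronization, rather than each caller managing the lock itself.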

问题

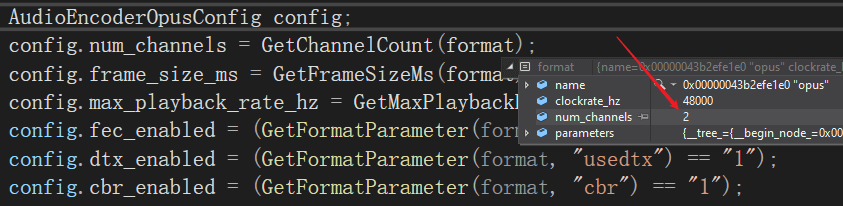

为什么用户传入的通道是2, 创建的时候却为1了呢?

这时候需要进入static std::unique_ptr

```cpp
absl::optional<AudioEncoderOpusConfig> AudioEncoderOpusImpl::SdpToConfig(
    const SdpAudioFormat& format) {
  if (!absl::EqualsIgnoreCase(format.name, "opus") ||
      format.clockrate_hz != kRtpTimestampRateHz || format.num_channels != 2) {
    return absl::nullopt;
  }

  AudioEncoderOpusConfig config;
  config.num_channels = GetChannelCount(format);
  // Returns AudioEncoderOpusConfig::kDefaultFrameSizeMs (20) by default.
  config.frame_size_ms = GetFrameSizeMs(format);
  config.max_playback_rate_hz = GetMaxPlaybackRate(format);
  config.fec_enabled = (GetFormatParameter(format, "useinbandfec") == "1");
  config.dtx_enabled = (GetFormatParameter(format, "usedtx") == "1");
  config.cbr_enabled = (GetFormatParameter(format, "cbr") == "1");
  config.bitrate_bps =
      CalculateBitrate(config.max_playback_rate_hz, config.num_channels,
                       GetFormatParameter(format, "maxaveragebitrate"));
  config.application = config.num_channels == 1
                           ? AudioEncoderOpusConfig::ApplicationMode::kVoip
                           : AudioEncoderOpusConfig::ApplicationMode::kAudio;

  constexpr int kMinANAFrameLength = kANASupportedFrameLengths[0];
  constexpr int kMaxANAFrameLength =
      kANASupportedFrameLengths[arraysize(kANASupportedFrameLengths) - 1];

  // For now, minptime and maxptime are only used with ANA. If ptime is outside
  // of this range, it will get adjusted once ANA takes hold. Ideally, we'd know
  // if ANA was to be used when setting up the config, and adjust accordingly.
  const int min_frame_length_ms =
      GetFormatParameter<int>(format, "minptime").value_or(kMinANAFrameLength);
  const int max_frame_length_ms =
      GetFormatParameter<int>(format, "maxptime").value_or(kMaxANAFrameLength);

  FindSupportedFrameLengths(min_frame_length_ms, max_frame_length_ms,
                            &config.supported_frame_lengths_ms);
  RTC_DCHECK(config.IsOk());
  return config;
}
```

The line config.num_channels = GetChannelCount(format); is where the channel count is determined.

```cpp
int GetChannelCount(const SdpAudioFormat& format) {
  const auto param = GetFormatParameter(format, "stereo");
  if (param == "1") {
    return 2;
  } else {
    return 1;
  }
}
```

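Because the format has no stereo parameter, GetChannelCount returns 1. To encode in stereo, the SDP must carry the stereo=1 parameter.

The format's num_channels field is not used to determine the channel count here; SdpToConfig only checks that it equals 2. That is expected. RFC 7587 requires Opus to always be signaled as opus/48000/2 in the rtpmap line, whatever the actual number of channels, so the real channel count has to come from the stereo parameter.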
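The stereo check can be reproduced with a stand-in for SdpAudioFormat, here a hypothetical `SdpFormat` struct with an fmtp parameter map. The format's own num_channels stays 2 in every case and does not affect the result; only the stereo parameter does.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for SdpAudioFormat: rtpmap fields plus fmtp params.
struct SdpFormat {
  std::string name;
  int clockrate_hz;
  int num_channels;
  std::map<std::string, std::string> parameters;
};

// Mirrors GetChannelCount: only "stereo=1" yields 2 channels.
int ChannelCountFromFmtp(const SdpFormat& format) {
  // SdpToConfig has already rejected anything other than num_channels == 2.
  assert(format.num_channels == 2);
  const auto it = format.parameters.find("stereo");
  return (it != format.parameters.end() && it->second == "1") ? 2 : 1;
}
```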

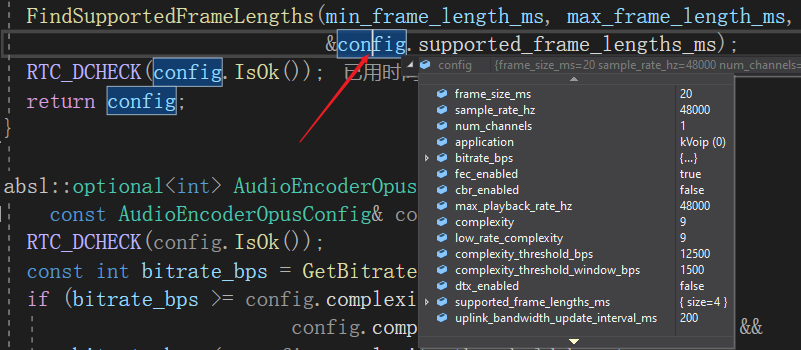

Finally, the figure above shows the config values printed by AudioEncoderOpusImpl::SdpToConfig.