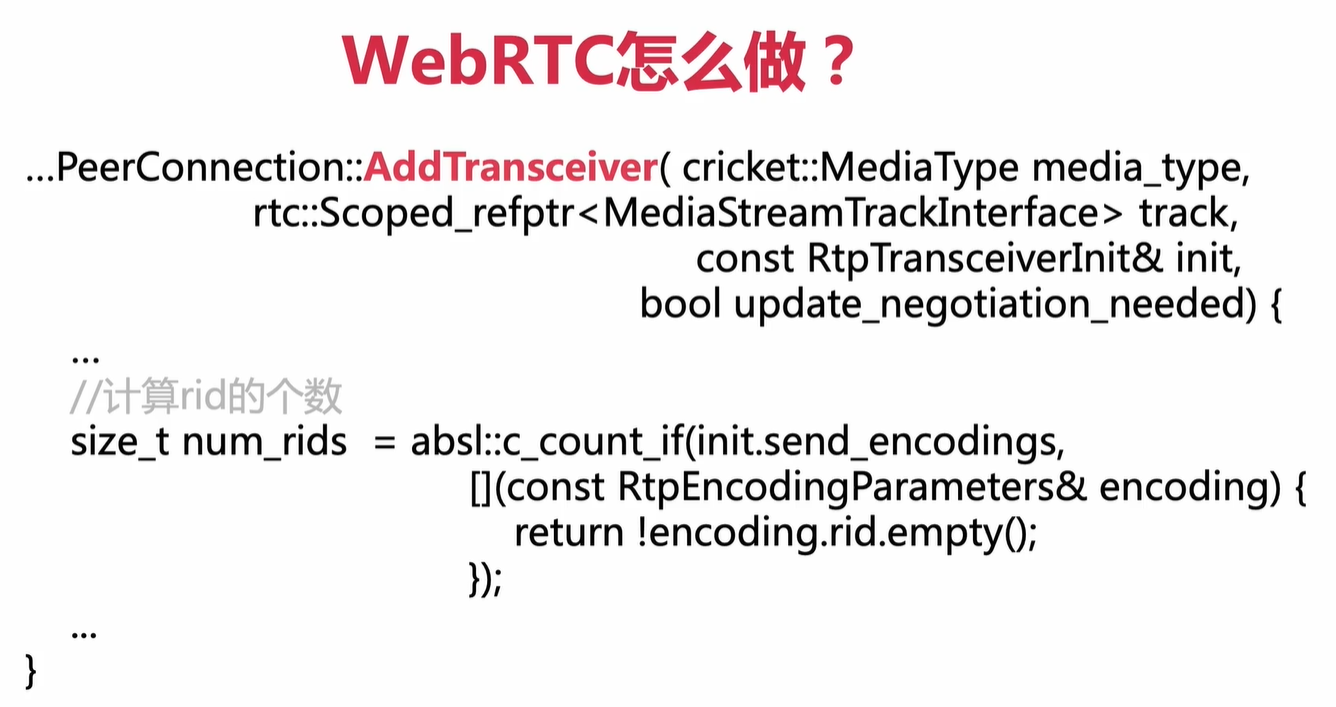

AddTransceiver

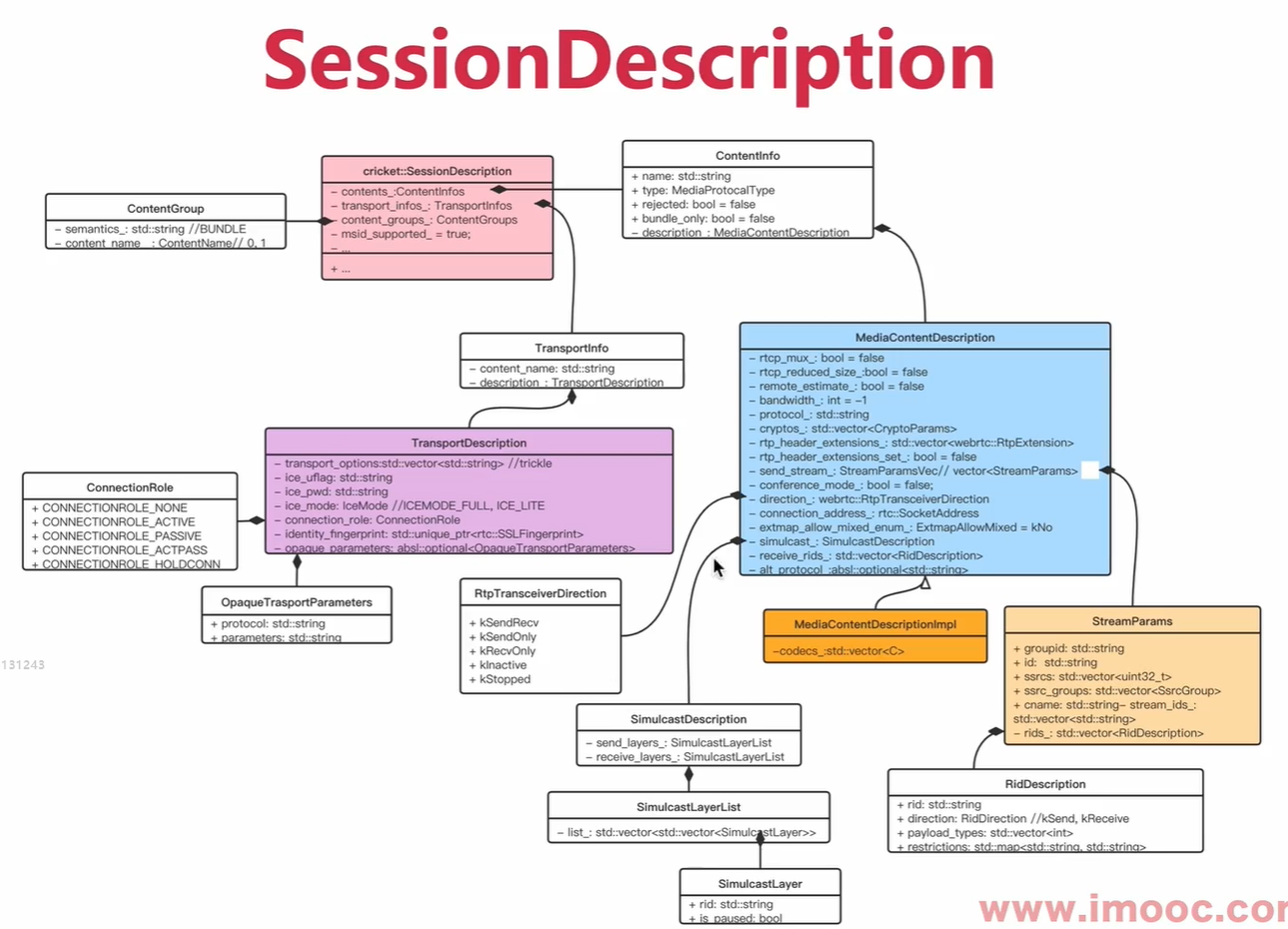

SessionDescription

In SimulcastDescription:

send_layers: how many simulcast streams are sent

receive_layers: how many simulcast streams are received

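If Simulcast is enabled for a stream, that stream is sent as three streams (layers). Their information is stored in `rids_` of StreamParams, and each entry is a RidDescription.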
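To make the relationship between a stream and its RIDs concrete, here is a minimal sketch. `RidDescriptionSketch`, `StreamParamsSketch`, and `MakeSimulcastStream` are hypothetical, simplified stand-ins written for this note, not the real WebRTC classes (which carry more fields), and the layer names `f`, `h`, `q` in the usage note are only an example.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified stand-ins for WebRTC's RidDescription and
// StreamParams. They only model the "one entry per simulcast layer" idea.
enum class RidDirection { kSend, kReceive };

struct RidDescriptionSketch {
  std::string rid;         // Layer identifier, e.g. "f", "h", "q".
  RidDirection direction;  // Whether this layer is sent or received.
};

struct StreamParamsSketch {
  std::vector<RidDescriptionSketch> rids;  // One entry per simulcast layer.

  // A stream is treated as simulcast when it has more than one RID.
  bool has_simulcast() const { return rids.size() > 1; }
};

// Builds stream parameters with one send RID per requested layer name.
StreamParamsSketch MakeSimulcastStream(const std::vector<std::string>& names) {
  assert(!names.empty());
  StreamParamsSketch params;
  for (const std::string& name : names) {
    params.rids.push_back({name, RidDirection::kSend});
  }
  return params;
}
```

Calling `MakeSimulcastStream({"f", "h", "q"})` yields a stream with three RID entries, matching the three-layer case described above.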

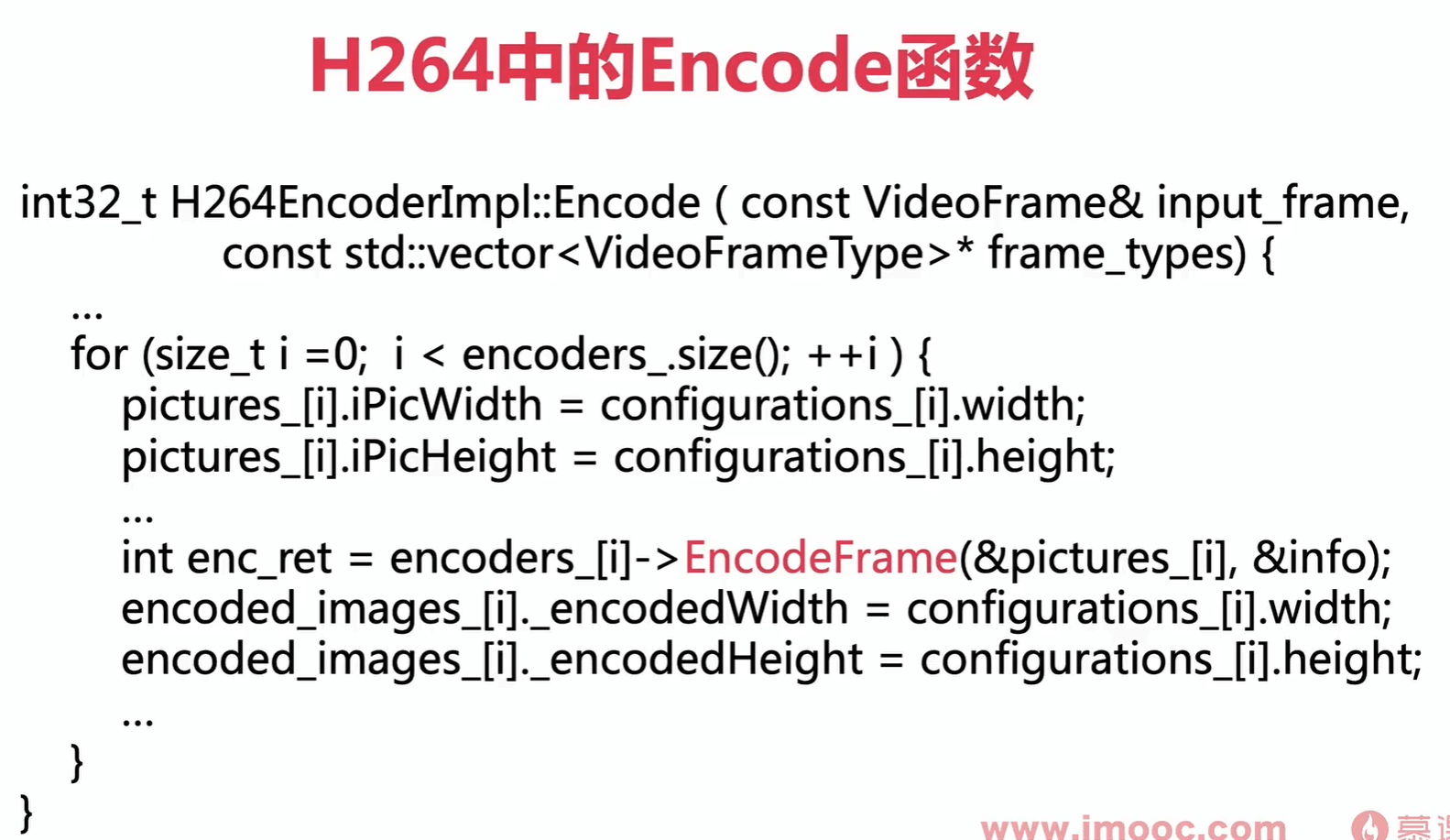

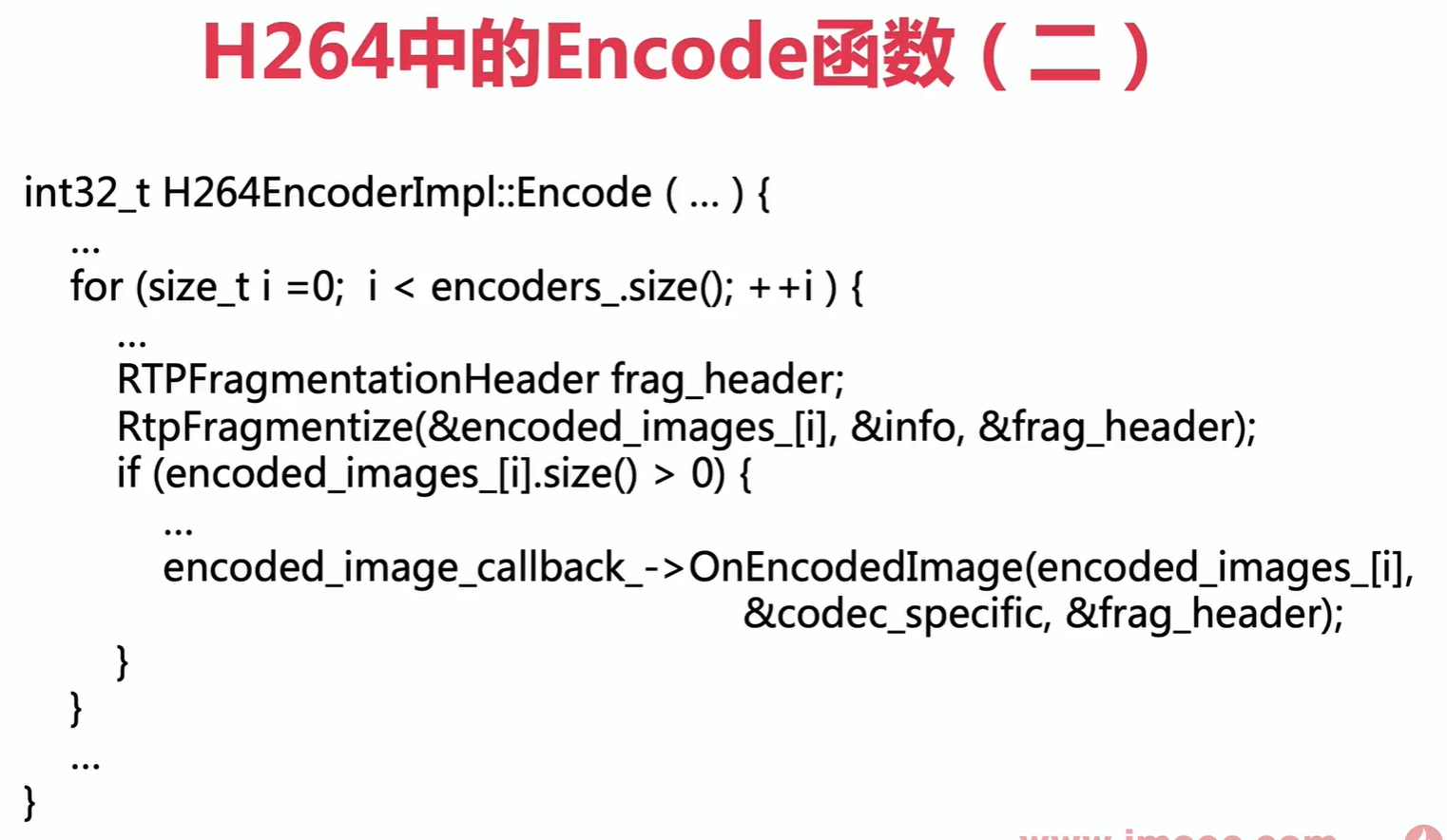

The Encode function in H264

H264EncoderImpl::Encode

The length of `encoders_` is 1 by default; with Simulcast enabled it is 3.

`OnEncodedImage` passes the encoded data up to the application layer.

```cpp
int32_t H264EncoderImpl::Encode(
    const VideoFrame& input_frame,
    const std::vector<VideoFrameType>* frame_types) {
  if (encoders_.empty()) {
    ReportError();
    return WEBRTC_VIDEO_CODEC_UNINITIALIZED;
  }
  if (!encoded_image_callback_) {
    RTC_LOG(LS_WARNING)
        << "InitEncode() has been called, but a callback function "
           "has not been set with RegisterEncodeCompleteCallback()";
    ReportError();
    return WEBRTC_VIDEO_CODEC_UNINITIALIZED;
  }

  rtc::scoped_refptr<const I420BufferInterface> frame_buffer =
      input_frame.video_frame_buffer()->ToI420();

  bool send_key_frame = false;
  for (size_t i = 0; i < configurations_.size(); ++i) {
    if (configurations_[i].key_frame_request && configurations_[i].sending) {
      send_key_frame = true;
      break;
    }
  }

  if (!send_key_frame && frame_types) {
    for (size_t i = 0; i < configurations_.size(); ++i) {
      const size_t simulcast_idx =
          static_cast<size_t>(configurations_[i].simulcast_idx);
      if (configurations_[i].sending && simulcast_idx < frame_types->size() &&
          (*frame_types)[simulcast_idx] == VideoFrameType::kVideoFrameKey) {
        send_key_frame = true;
        break;
      }
    }
  }

  RTC_DCHECK_EQ(configurations_[0].width, frame_buffer->width());
  RTC_DCHECK_EQ(configurations_[0].height, frame_buffer->height());

  // Encode image for each layer.
  for (size_t i = 0; i < encoders_.size(); ++i) {
    // EncodeFrame input.
    pictures_[i] = {0};
    pictures_[i].iPicWidth = configurations_[i].width;
    pictures_[i].iPicHeight = configurations_[i].height;
    pictures_[i].iColorFormat = EVideoFormatType::videoFormatI420;
    pictures_[i].uiTimeStamp = input_frame.ntp_time_ms();
    // Downscale images on second and ongoing layers.
    if (i == 0) {
      pictures_[i].iStride[0] = frame_buffer->StrideY();
      pictures_[i].iStride[1] = frame_buffer->StrideU();
      pictures_[i].iStride[2] = frame_buffer->StrideV();
      pictures_[i].pData[0] = const_cast<uint8_t*>(frame_buffer->DataY());
      pictures_[i].pData[1] = const_cast<uint8_t*>(frame_buffer->DataU());
      pictures_[i].pData[2] = const_cast<uint8_t*>(frame_buffer->DataV());
    } else {
      pictures_[i].iStride[0] = downscaled_buffers_[i - 1]->StrideY();
      pictures_[i].iStride[1] = downscaled_buffers_[i - 1]->StrideU();
      pictures_[i].iStride[2] = downscaled_buffers_[i - 1]->StrideV();
      pictures_[i].pData[0] =
          const_cast<uint8_t*>(downscaled_buffers_[i - 1]->DataY());
      pictures_[i].pData[1] =
          const_cast<uint8_t*>(downscaled_buffers_[i - 1]->DataU());
      pictures_[i].pData[2] =
          const_cast<uint8_t*>(downscaled_buffers_[i - 1]->DataV());
      // Scale the image down a number of times by downsampling factor.
      libyuv::I420Scale(pictures_[i - 1].pData[0], pictures_[i - 1].iStride[0],
                        pictures_[i - 1].pData[1], pictures_[i - 1].iStride[1],
                        pictures_[i - 1].pData[2], pictures_[i - 1].iStride[2],
                        configurations_[i - 1].width,
                        configurations_[i - 1].height, pictures_[i].pData[0],
                        pictures_[i].iStride[0], pictures_[i].pData[1],
                        pictures_[i].iStride[1], pictures_[i].pData[2],
                        pictures_[i].iStride[2], configurations_[i].width,
                        configurations_[i].height, libyuv::kFilterBilinear);
    }

    if (!configurations_[i].sending) {
      continue;
    }
    if (frame_types != nullptr) {
      // Skip frame?
      if ((*frame_types)[i] == VideoFrameType::kEmptyFrame) {
        continue;
      }
    }
    if (send_key_frame) {
      // API doc says ForceIntraFrame(false) does nothing, but calling this
      // function forces a key frame regardless of the |bIDR| argument's value.
      // (If every frame is a key frame we get lag/delays.)
      encoders_[i]->ForceIntraFrame(true);
      configurations_[i].key_frame_request = false;
    }
    // EncodeFrame output.
    SFrameBSInfo info;
    memset(&info, 0, sizeof(SFrameBSInfo));

    // Encode!
    int enc_ret = encoders_[i]->EncodeFrame(&pictures_[i], &info);
    if (enc_ret != 0) {
      RTC_LOG(LS_ERROR)
          << "OpenH264 frame encoding failed, EncodeFrame returned " << enc_ret
          << ".";
      ReportError();
      return WEBRTC_VIDEO_CODEC_ERROR;
    }

    encoded_images_[i]._encodedWidth = configurations_[i].width;
    encoded_images_[i]._encodedHeight = configurations_[i].height;
    encoded_images_[i].SetTimestamp(input_frame.timestamp());
    encoded_images_[i]._frameType = ConvertToVideoFrameType(info.eFrameType);
    encoded_images_[i].SetSpatialIndex(configurations_[i].simulcast_idx);

    // Split encoded image up into fragments. This also updates
    // |encoded_image_|.
    RtpFragmentize(&encoded_images_[i], &info);

    // Encoder can skip frames to save bandwidth in which case
    // |encoded_images_[i]._length| == 0.
    if (encoded_images_[i].size() > 0) {
      // Parse QP.
      h264_bitstream_parser_.ParseBitstream(encoded_images_[i]);
      encoded_images_[i].qp_ =
          h264_bitstream_parser_.GetLastSliceQp().value_or(-1);

      // Deliver encoded image.
      CodecSpecificInfo codec_specific;
      codec_specific.codecType = kVideoCodecH264;
      codec_specific.codecSpecific.H264.packetization_mode =
          packetization_mode_;
      codec_specific.codecSpecific.H264.temporal_idx = kNoTemporalIdx;
      codec_specific.codecSpecific.H264.idr_frame =
          info.eFrameType == videoFrameTypeIDR;
      codec_specific.codecSpecific.H264.base_layer_sync = false;
      if (configurations_[i].num_temporal_layers > 1) {
        const uint8_t tid = info.sLayerInfo[0].uiTemporalId;
        codec_specific.codecSpecific.H264.temporal_idx = tid;
        codec_specific.codecSpecific.H264.base_layer_sync =
            tid > 0 && tid < tl0sync_limit_[i];
        if (codec_specific.codecSpecific.H264.base_layer_sync) {
          tl0sync_limit_[i] = tid;
        }
        if (tid == 0) {
          tl0sync_limit_[i] = configurations_[i].num_temporal_layers;
        }
      }
      encoded_image_callback_->OnEncodedImage(encoded_images_[i],
                                              &codec_specific);
    }
  }
  return WEBRTC_VIDEO_CODEC_OK;
}
```
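The per-layer loop in `Encode` feeds layer 0 with the original frame and derives the input of every later layer by downscaling the previous layer. The sketch below isolates just that chaining. It is an illustration under assumptions, not the WebRTC implementation: `Plane`, `HalveByBoxFilter`, and `BuildLayerInputs` are hypothetical names, it handles a single plane instead of I420, and it swaps `libyuv::I420Scale` with bilinear filtering for a fixed 2x2 box average.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-plane image; the real encoder works on I420 buffers.
struct Plane {
  int width;
  int height;
  std::vector<uint8_t> data;  // Row-major, stride equal to width.
};

// Halves a plane by averaging each 2x2 block. This is a stand-in for
// libyuv::I420Scale, which supports arbitrary target sizes.
Plane HalveByBoxFilter(const Plane& src) {
  assert(src.width % 2 == 0 && src.height % 2 == 0);
  Plane dst{src.width / 2, src.height / 2, {}};
  dst.data.resize(static_cast<size_t>(dst.width) * dst.height);
  for (int y = 0; y < dst.height; ++y) {
    for (int x = 0; x < dst.width; ++x) {
      const size_t top = static_cast<size_t>(2 * y) * src.width + 2 * x;
      const size_t bottom = top + src.width;
      const int sum = src.data[top] + src.data[top + 1] +
                      src.data[bottom] + src.data[bottom + 1];
      // Rounded average of the four source pixels.
      dst.data[static_cast<size_t>(y) * dst.width + x] =
          static_cast<uint8_t>((sum + 2) / 4);
    }
  }
  return dst;
}

// Builds the per-layer inputs: layer 0 is the source itself, and layer i is
// derived from layer i - 1, mirroring the chained downscale in the loop.
std::vector<Plane> BuildLayerInputs(const Plane& source, size_t num_layers) {
  assert(num_layers >= 1);
  std::vector<Plane> layers;
  layers.push_back(source);
  for (size_t i = 1; i < num_layers; ++i) {
    layers.push_back(HalveByBoxFilter(layers[i - 1]));
  }
  return layers;
}
```

Chaining from the previous layer rather than from the source means each scale step touches fewer pixels than the one before it, at the cost of accumulating filtering error across layers.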

The vpx_codec_encode function in VP8

Source file: `H:\webrtc-20210315\webrtc-20210315\webrtc\webrtc-checkout\src\third_party\libvpx\source\libvpx\vpx\src\vpx_encoder.c`

```c
vpx_codec_err_t vpx_codec_encode(vpx_codec_ctx_t *ctx, const vpx_image_t *img,
                                 vpx_codec_pts_t pts, unsigned long duration,
                                 vpx_enc_frame_flags_t flags,
                                 unsigned long deadline) {
  vpx_codec_err_t res = VPX_CODEC_OK;

  if (!ctx || (img && !duration))
    res = VPX_CODEC_INVALID_PARAM;
  else if (!ctx->iface || !ctx->priv)
    res = VPX_CODEC_ERROR;
  else if (!(ctx->iface->caps & VPX_CODEC_CAP_ENCODER))
    res = VPX_CODEC_INCAPABLE;
  else {
    unsigned int num_enc = ctx->priv->enc.total_encoders;

    /* Execute in a normalized floating point environment, if the platform
     * requires it.
     */
    FLOATING_POINT_INIT();

    if (num_enc == 1)
      res = ctx->iface->enc.encode(get_alg_priv(ctx), img, pts, duration, flags,
                                   deadline);
    else {
      /* Multi-resolution encoding:
       * Encode multi-levels in reverse order. For example,
       * if mr_total_resolutions = 3, first encode level 2,
       * then encode level 1, and finally encode level 0.
       */
      int i;

      ctx += num_enc - 1;
      if (img) img += num_enc - 1;

      for (i = num_enc - 1; i >= 0; i--) {
        if ((res = ctx->iface->enc.encode(get_alg_priv(ctx), img, pts, duration,
                                          flags, deadline)))
          break;

        ctx--;
        if (img) img--;
      }
      ctx++;
    }

    FLOATING_POINT_RESTORE();
  }

  return SAVE_STATUS(ctx, res);
}
```
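The multi-resolution branch of `vpx_codec_encode` walks the encoder levels from the highest index down to 0 and stops at the first error. The following sketch reproduces only that control flow so the ordering is easy to see. `MultiResResult` and `EncodeLevelsInReverse` are hypothetical names, and each level's encode call is simulated by a precomputed status code (0 meaning success) rather than a real libvpx call.

```cpp
#include <cassert>
#include <vector>

// Hypothetical result type: the final status plus the levels that were
// visited, in visiting order.
struct MultiResResult {
  int status;
  std::vector<int> visited;
};

// Simulates the reverse-order multi-resolution loop. level_status[i] is the
// status the (simulated) encoder for level i would return; 0 means success.
MultiResResult EncodeLevelsInReverse(const std::vector<int>& level_status) {
  assert(!level_status.empty());
  MultiResResult result{0, {}};
  for (int i = static_cast<int>(level_status.size()) - 1; i >= 0; --i) {
    result.visited.push_back(i);
    result.status = level_status[i];
    if (result.status != 0) {
      break;  // Stop at the first failing level, as the original loop does.
    }
  }
  return result;
}
```

With three levels that all succeed, the levels are visited as 2, 1, 0. If level 1 fails, level 0 is never encoded and the failing status is returned.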

Simulcast also needs the help of an SFU server, which forwards the different streams to PC clients according to their network conditions.
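One way an SFU might make that choice is to pick, for each receiver, the highest-bitrate layer that fits within the receiver's estimated bandwidth. The sketch below shows that policy only; it is an assumption for illustration, not how any particular SFU works. `SimulcastLayer`, `SelectLayerForReceiver`, and the bitrates in the usage note are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical description of one simulcast layer as seen by the SFU.
struct SimulcastLayer {
  int simulcast_idx;     // Index of the layer within the simulcast set.
  uint32_t bitrate_bps;  // Approximate bitrate of the layer.
};

// Returns the simulcast_idx of the highest-bitrate layer that fits within
// available_bps. If no layer fits, falls back to the lowest-bitrate layer so
// the receiver still gets some video.
int SelectLayerForReceiver(const std::vector<SimulcastLayer>& layers,
                           uint32_t available_bps) {
  assert(!layers.empty());
  size_t lowest = 0;    // Position of the lowest-bitrate layer.
  size_t best = 0;      // Position of the best fitting layer, if any.
  bool found = false;
  for (size_t i = 0; i < layers.size(); ++i) {
    if (layers[i].bitrate_bps < layers[lowest].bitrate_bps) {
      lowest = i;
    }
    if (layers[i].bitrate_bps <= available_bps &&
        (!found || layers[i].bitrate_bps > layers[best].bitrate_bps)) {
      best = i;
      found = true;
    }
  }
  return layers[found ? best : lowest].simulcast_idx;
}
```

For example, with layers of 150 kbps, 500 kbps, and 1500 kbps, a receiver estimated at 800 kbps would get the 500 kbps layer, while a receiver estimated at 100 kbps would fall back to the 150 kbps layer.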