NACK call stack

Diagram of data being delivered to the decoder

9-7 Channel-Stream and codecs (important)

10-18 Video decoding and rendering (key topic)

When RtpDemuxer::OnRtpPacket receives an RTX packet, it calls RtxReceiveStream::OnRtpPacket; otherwise it calls RtpVideoStreamReceiver::OnRtpPacket directly.

Once RtxReceiveStream::OnRtpPacket has finished processing the packet, it also calls RtpVideoStreamReceiver::OnRtpPacket.
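
The RTX path described above can be pictured with a small sketch. This is not WebRTC's implementation: SimplePacket and UnwrapRtx are hypothetical names, and the media SSRC and payload type are passed in as assumed, already negotiated values. The only protocol fact it relies on is that an RTX payload begins with the two-byte original sequence number (RFC 4588).

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical, simplified packet type for illustration only. The real
// WebRTC classes (RtpPacketReceived, RtxReceiveStream) carry far more state.
struct SimplePacket {
  uint32_t ssrc = 0;
  uint8_t payload_type = 0;
  uint16_t sequence_number = 0;
  std::vector<uint8_t> payload;
};

// Restores the original media packet from an RTX packet.
// Per RFC 4588, the RTX payload starts with the 2-byte original sequence
// number (OSN) in network byte order, followed by the original payload.
// Returns false if the payload is too short to contain the OSN.
bool UnwrapRtx(const SimplePacket& rtx,
               uint32_t media_ssrc,          // assumed: negotiated media SSRC
               uint8_t media_payload_type,   // assumed: associated payload type
               SimplePacket* media) {
  if (rtx.payload.size() < 2) {
    return false;  // e.g. an RTX padding packet with no OSN
  }
  media->ssrc = media_ssrc;
  media->payload_type = media_payload_type;
  media->sequence_number =
      static_cast<uint16_t>((rtx.payload[0] << 8) | rtx.payload[1]);
  media->payload.assign(rtx.payload.begin() + 2, rtx.payload.end());
  return true;
}
```

After unwrapping, the restored packet can follow the same path as a packet that arrived directly, which is why both branches end at RtpVideoStreamReceiver::OnRtpPacket.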

OnReceivedPacket

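Description

Normally, received RTP is protected by both FEC and NACK. To keep FEC from interfering with the NACK analysis, FEC/RED is disabled here.

The packets analyzed here are video RTP packets.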

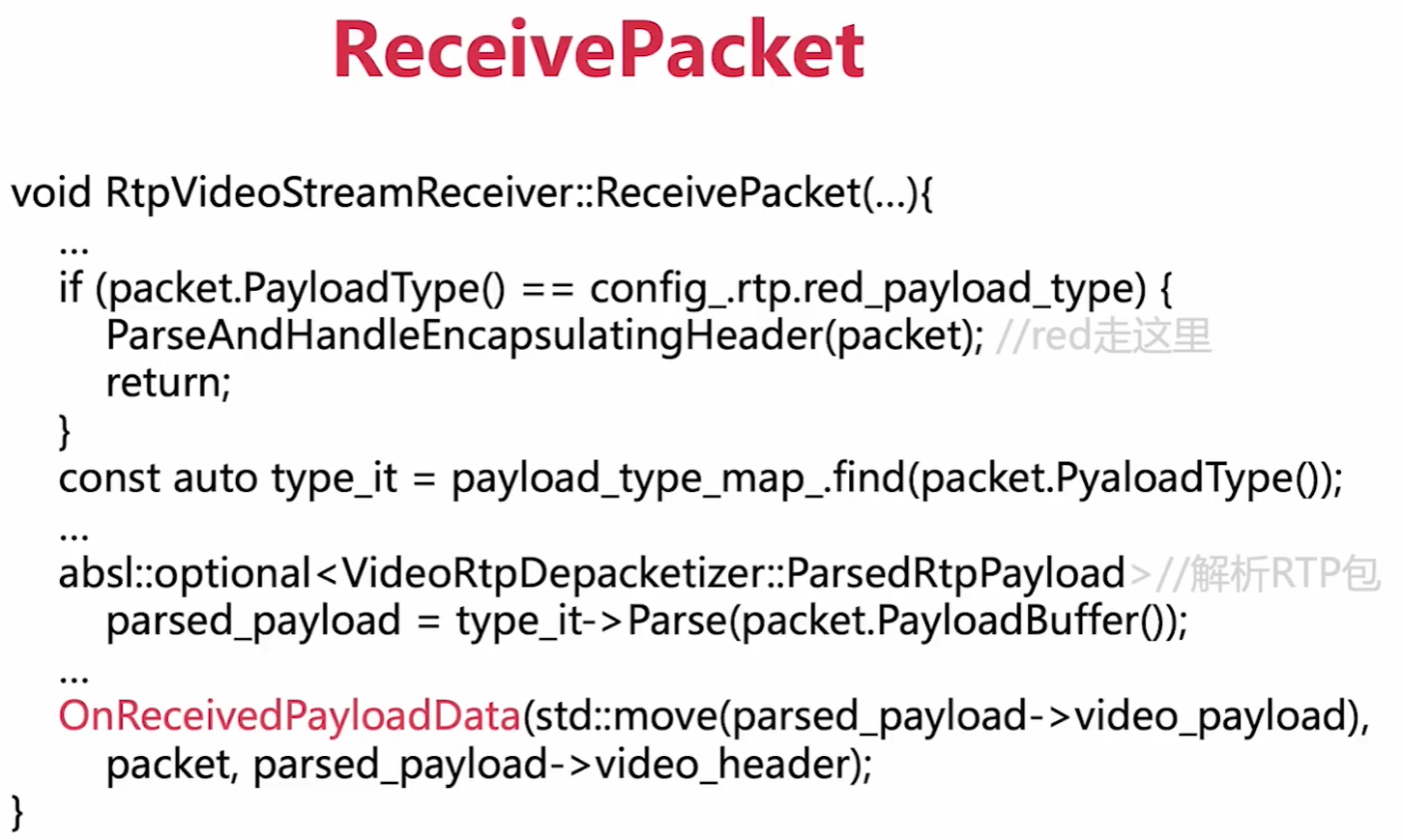

Source code

    void RtpVideoStreamReceiver::ReceivePacket(const RtpPacketReceived& packet) {
      if (packet.payload_size() == 0) {
        // Padding or keep-alive packet.
        // TODO(nisse): Could drop empty packets earlier, but need to figure out how
        // they should be counted in stats.
        NotifyReceiverOfEmptyPacket(packet.SequenceNumber());
        return;
      }
      if (packet.PayloadType() == config_.rtp.red_payload_type) {
        ParseAndHandleEncapsulatingHeader(packet);
        return;
      }

      const auto type_it = payload_type_map_.find(packet.PayloadType());
      if (type_it == payload_type_map_.end()) {
        return;
      }
      absl::optional<VideoRtpDepacketizer::ParsedRtpPayload> parsed_payload =
          type_it->second->Parse(packet.PayloadBuffer());
      if (parsed_payload == absl::nullopt) {
        RTC_LOG(LS_WARNING) << "Failed parsing payload.";
        return;
      }

      OnReceivedPayloadData(std::move(parsed_payload->video_payload), packet,
                            parsed_payload->video_header);
    }

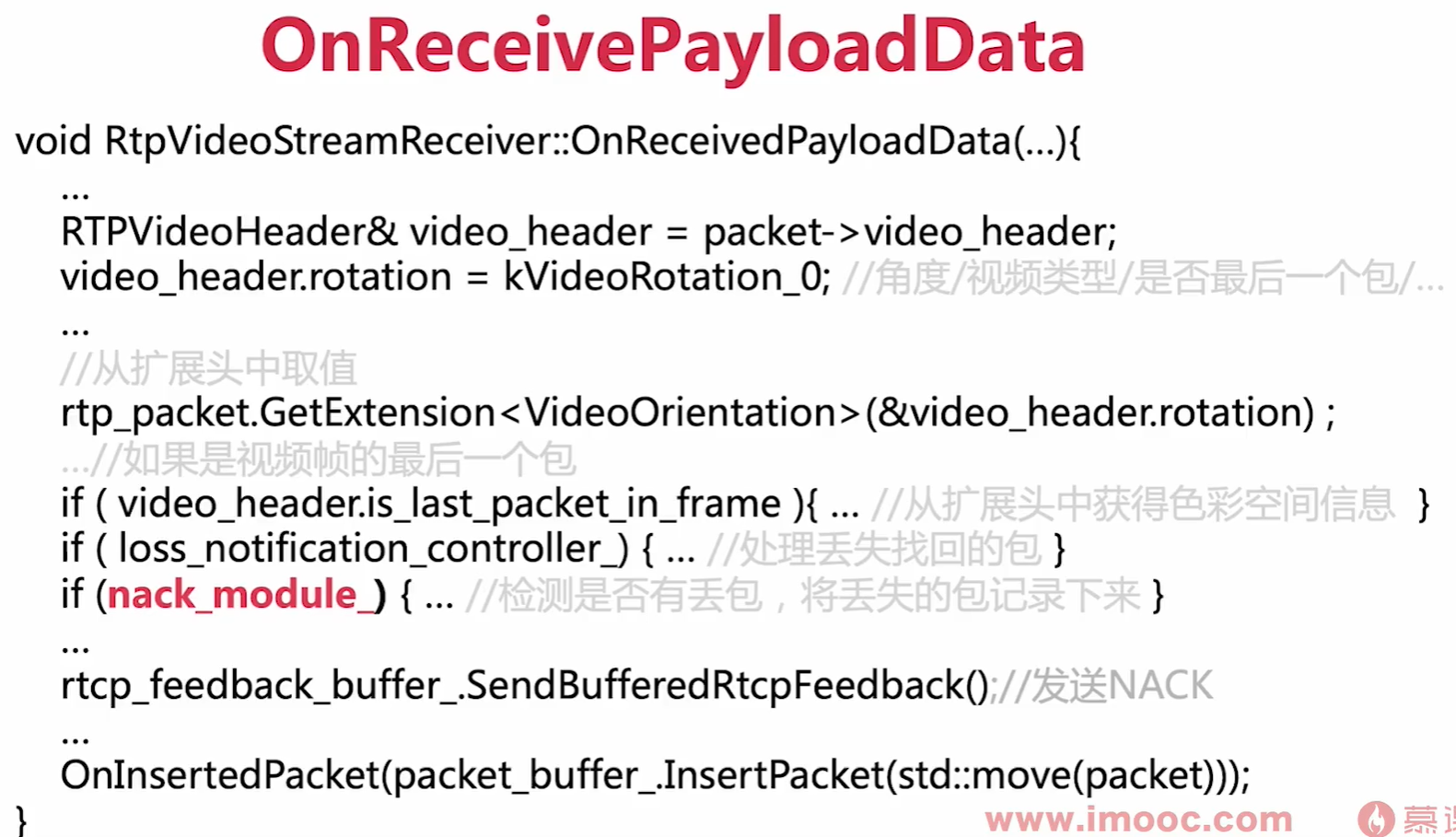

OnReceivedPayloadData

Description

RtpVideoStreamReceiver::OnReceivedPayloadData

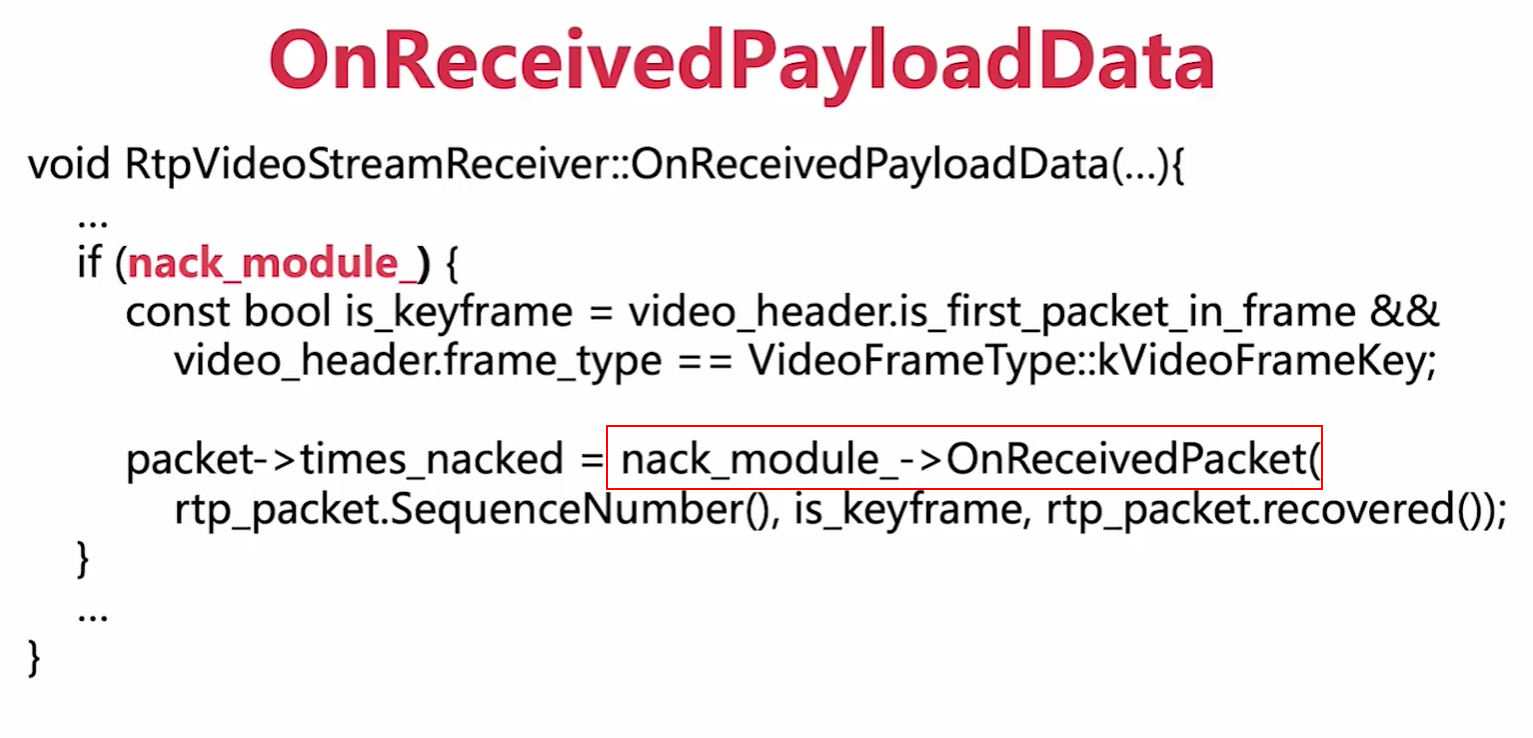

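The function consists mainly of three if branches, all of them fairly complex and important. This section covers the third branch, which detects packet loss.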
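
To make the loss-detection idea concrete, here is a minimal sketch of how gaps in RTP sequence numbers could be tracked. It is a simplified stand-in, not WebRTC's NACK module: SimpleNackTracker and IsNewer are hypothetical names, and the sketch ignores retransmission timing, key-frame handling, list size limits, and the ambiguous case where two sequence numbers are exactly half the range apart.

```cpp
#include <cassert>
#include <cstdint>
#include <set>

// True if sequence number `a` is newer than `b`, allowing for 16-bit
// wraparound (e.g. 1 is newer than 65534).
inline bool IsNewer(uint16_t a, uint16_t b) {
  return a != b && static_cast<uint16_t>(a - b) < 0x8000;
}

// Hypothetical tracker that records which sequence numbers are missing.
class SimpleNackTracker {
 public:
  void OnReceivedPacket(uint16_t seq) {
    if (!initialized_) {
      newest_ = seq;
      initialized_ = true;
      return;
    }
    if (seq == newest_) {
      return;  // duplicate of the newest packet
    }
    if (IsNewer(newest_, seq)) {
      // Late or retransmitted packet: it is no longer missing.
      missing_.erase(seq);
      return;
    }
    // Every sequence number between the previous newest and this packet
    // was skipped, so mark each one as missing.
    for (uint16_t s = static_cast<uint16_t>(newest_ + 1); s != seq; ++s) {
      missing_.insert(s);
    }
    newest_ = seq;
  }

  const std::set<uint16_t>& missing() const { return missing_; }

 private:
  bool initialized_ = false;
  uint16_t newest_ = 0;
  std::set<uint16_t> missing_;
};
```

In a real receiver the missing list would drive NACK feedback to the sender, and a retransmitted packet arriving later would clear its entry, as the erase call does here.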

Source code

    void RtpVideoStreamReceiver::OnReceivedPayloadData(
        rtc::CopyOnWriteBuffer codec_payload,
        const RtpPacketReceived& rtp_packet,
        const RTPVideoHeader& video) {
      RTC_DCHECK_RUN_ON(&worker_task_checker_);
      auto packet = std::make_unique<video_coding::PacketBuffer::Packet>(
          rtp_packet, video, clock_->TimeInMilliseconds());

      // Try to extrapolate absolute capture time if it is missing.
      packet->packet_info.set_absolute_capture_time(
          absolute_capture_time_receiver_.OnReceivePacket(
              AbsoluteCaptureTimeReceiver::GetSource(packet->packet_info.ssrc(),
                                                     packet->packet_info.csrcs()),
              packet->packet_info.rtp_timestamp(),
              // Assume frequency is the same one for all video frames.
              kVideoPayloadTypeFrequency,
              packet->packet_info.absolute_capture_time()));

      RTPVideoHeader& video_header = packet->video_header;
      video_header.rotation = kVideoRotation_0;
      video_header.content_type = VideoContentType::UNSPECIFIED;
      video_header.video_timing.flags = VideoSendTiming::kInvalid;
      video_header.is_last_packet_in_frame |= rtp_packet.Marker();

      if (const auto* vp9_header =
              absl::get_if<RTPVideoHeaderVP9>(&video_header.video_type_header)) {
        video_header.is_last_packet_in_frame |= vp9_header->end_of_frame;
        video_header.is_first_packet_in_frame |= vp9_header->beginning_of_frame;
      }

      rtp_packet.GetExtension<VideoOrientation>(&video_header.rotation);
      rtp_packet.GetExtension<VideoContentTypeExtension>(
          &video_header.content_type);
      rtp_packet.GetExtension<VideoTimingExtension>(&video_header.video_timing);
      if (forced_playout_delay_max_ms_ && forced_playout_delay_min_ms_) {
        video_header.playout_delay.max_ms = *forced_playout_delay_max_ms_;
        video_header.playout_delay.min_ms = *forced_playout_delay_min_ms_;
      } else {
        rtp_packet.GetExtension<PlayoutDelayLimits>(&video_header.playout_delay);
      }

      ParseGenericDependenciesResult generic_descriptor_state =
          ParseGenericDependenciesExtension(rtp_packet, &video_header);
      if (generic_descriptor_state == kDropPacket)
        return;

      // Color space should only be transmitted in the last packet of a frame,
      // therefore, neglect it otherwise so that last_color_space_ is not reset by
      // mistake.
      if (video_header.is_last_packet_in_frame) {
        video_header.color_space = rtp_packet.GetExtension<ColorSpaceExtension>();
        if (video_header.color_space ||
            video_header.frame_type == VideoFrameType::kVideoFrameKey) {
          // Store color space since it's only transmitted when changed or for key
          // frames. Color space will be cleared if a key frame is transmitted
          // without color space information.
          last_color_space_ = video_header.color_space;
        } else if (last_color_space_) {
          video_header.color_space = last_color_space_;
        }
      }

      if (loss_notification_controller_) {
        if (rtp_packet.recovered()) {
          // TODO(bugs.webrtc.org/10336): Implement support for reordering.
          RTC_LOG(LS_INFO)
              << "LossNotificationController does not support reordering.";
        } else if (generic_descriptor_state == kNoGenericDescriptor) {
          RTC_LOG(LS_WARNING) << "LossNotificationController requires generic "
                                 "frame descriptor, but it is missing.";
        } else {
          if (video_header.is_first_packet_in_frame) {
            RTC_DCHECK(video_header.generic);
            LossNotificationController::FrameDetails frame;
            frame.is_keyframe =
                video_header.frame_type == VideoFrameType::kVideoFrameKey;
            frame.frame_id = video_header.generic->frame_id;
            frame.frame_dependencies = video_header.generic->dependencies;
            loss_notification_controller_->OnReceivedPacket(
                rtp_packet.SequenceNumber(), &frame);
          } else {
            loss_notification_controller_->OnReceivedPacket(
                rtp_packet.SequenceNumber(), nullptr);
          }
        }
      }

      if (nack_module_) {
        const bool is_keyframe =
            video_header.is_first_packet_in_frame &&
            video_header.frame_type == VideoFrameType::kVideoFrameKey;

        packet->times_nacked = nack_module_->OnReceivedPacket(
            rtp_packet.SequenceNumber(), is_keyframe, rtp_packet.recovered());
      } else {
        packet->times_nacked = -1;
      }

      if (codec_payload.size() == 0) {
        NotifyReceiverOfEmptyPacket(packet->seq_num);
        rtcp_feedback_buffer_.SendBufferedRtcpFeedback();
        return;
      }

      if (packet->codec() == kVideoCodecH264) {
        // Only when we start to receive packets will we know what payload type
        // that will be used. When we know the payload type insert the correct
        // sps/pps into the tracker.
        if (packet->payload_type != last_payload_type_) {
          last_payload_type_ = packet->payload_type;
          InsertSpsPpsIntoTracker(packet->payload_type);
        }

        video_coding::H264SpsPpsTracker::FixedBitstream fixed =
            tracker_.CopyAndFixBitstream(
                rtc::MakeArrayView(codec_payload.cdata(), codec_payload.size()),
                &packet->video_header);

        switch (fixed.action) {
          case video_coding::H264SpsPpsTracker::kRequestKeyframe:
            rtcp_feedback_buffer_.RequestKeyFrame();
            rtcp_feedback_buffer_.SendBufferedRtcpFeedback();
            ABSL_FALLTHROUGH_INTENDED;
          case video_coding::H264SpsPpsTracker::kDrop:
            return;
          case video_coding::H264SpsPpsTracker::kInsert:
            packet->video_payload = std::move(fixed.bitstream);
            break;
        }

      } else {
        packet->video_payload = std::move(codec_payload);
      }

      rtcp_feedback_buffer_.SendBufferedRtcpFeedback();
      frame_counter_.Add(packet->timestamp);
      OnInsertedPacket(packet_buffer_.InsertPacket(std::move(packet)));
    }
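
The color-space handling in the source above is easy to misread, so the following sketch isolates just that rule. It assumes the caller only invokes it for the last packet of a frame, models a color space as a plain integer, and uses hypothetical names (ColorSpaceCache, Resolve, kNoColorSpace) that do not exist in WebRTC.

```cpp
#include <cassert>

// Hypothetical sentinel meaning "no color space information".
constexpr int kNoColorSpace = -1;

// Mirrors the caching rule from the source: a received color space, or any
// key frame, overwrites the cache; otherwise the cached value is reused.
struct ColorSpaceCache {
  int last = kNoColorSpace;

  // `received` is the color space carried by the packet (or kNoColorSpace).
  // Returns the color space that should be attached to the frame.
  int Resolve(int received, bool is_key_frame) {
    if (received != kNoColorSpace || is_key_frame) {
      // A key frame without color space information clears the cache.
      last = received;
      return received;
    }
    return last;  // may still be kNoColorSpace if nothing was cached
  }
};
```

The point of the rule is that the sender transmits the color space only when it changes or on key frames, so the receiver has to remember the last value for the delta frames in between.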