X-volution: On the Unification of Convolution and Self-attention

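Original document for this note: https://www.yuque.com/lart/papers/zpdmek

Today, SJTU and Huawei HiSilicon proposed X-volution, the new "king of convolution". It unifies convolution and self-attention in one module, drawing on the local feature extraction of convolution and the global modeling ability of self-attention at the same time. More importantly, it decouples training from inference through structural re-parameterization: a multi-branch structure is used for training, and at inference time it is equivalently converted into a single dynamic convolution.

Quoted from "The new 'king of convolution' X-volution | Efficiently integrating convolution and self-attention, SJTU and Huawei HiSilicon propose X-volution": https://mp.weixin.qq.com/s/yE9nwWjAUjAkmDSpnVuzKA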

Reading the paper through its abstract

Convolution and self-attention are acting as two fundamental building blocks in deep neural networks, where the former extracts local image features in a linear way while the latter non-locally encodes high-order contextual relationships.

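This is a concise summary of how the two computations differ: convolution extracts local features linearly, while self-attention computes non-local contextual relationships.

Though essentially complementary to each other (i.e., first-/high-order), state-of-the-art architectures (i.e., CNNs or transformers) lack a principled way to simultaneously apply both operations in a single computational module, due to their heterogeneous computing pattern and excessive burden of global dot-product for visual tasks.

Because the two operations follow different computing patterns, and because global dot products make self-attention very expensive for visual tasks, existing architectures lack a principled way to apply these two complementary operations within a single computational unit. Are the authors going to reduce the cost of self-attention?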

In this work, we theoretically derive a global self-attention approximation scheme, which approximates self-attention via the convolution operation on transformed features.

Sure enough, the self-attention operation is simplified.

Based on the approximate scheme, we establish a multi-branch elementary module composed of both convolution and self-attention operation, capable of unifying both local and non-local feature interaction.

Importantly, once trained, this multi-branch module could be conditionally converted into a single standard convolution operation via structural re-parameterization, rendering a pure convolution styled operator named X-volution, ready to be plugged into any modern networks as an atomic operation.

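Self-attention is combined with convolution; after the approximation scheme and the re-parameterization are applied, the whole becomes a multi-branch convolutional structure: X-volution.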

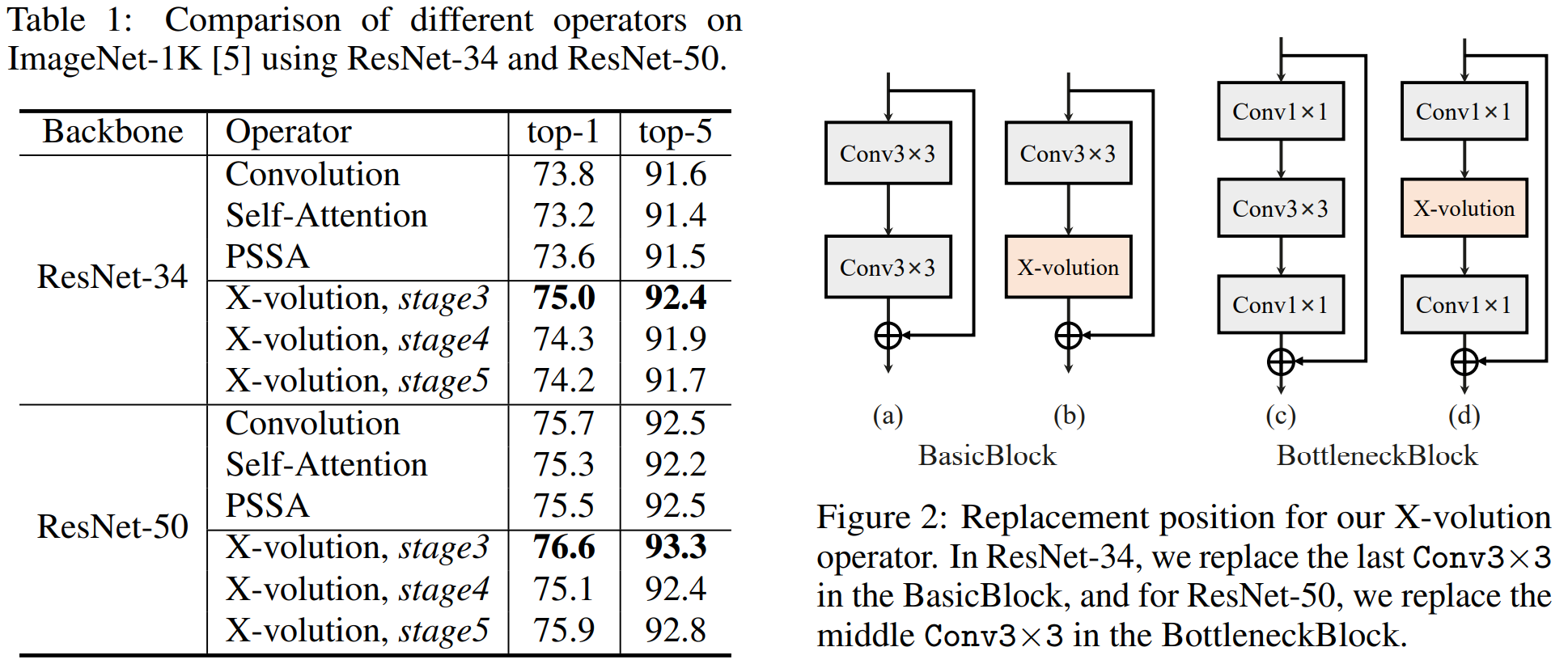

Extensive experiments demonstrate that the proposed X-volution achieves highly competitive visual understanding improvements (+1.2% top-1 accuracy on ImageNet classification, +1.7 box AP and +1.5 mask AP on COCO detection and segmentation).

Taken as a whole, the abstract says that the paper unifies self-attention with convolution through a specific approximation scheme.

In other words, the key question is how to make this approximation both effective and efficient.

Main method

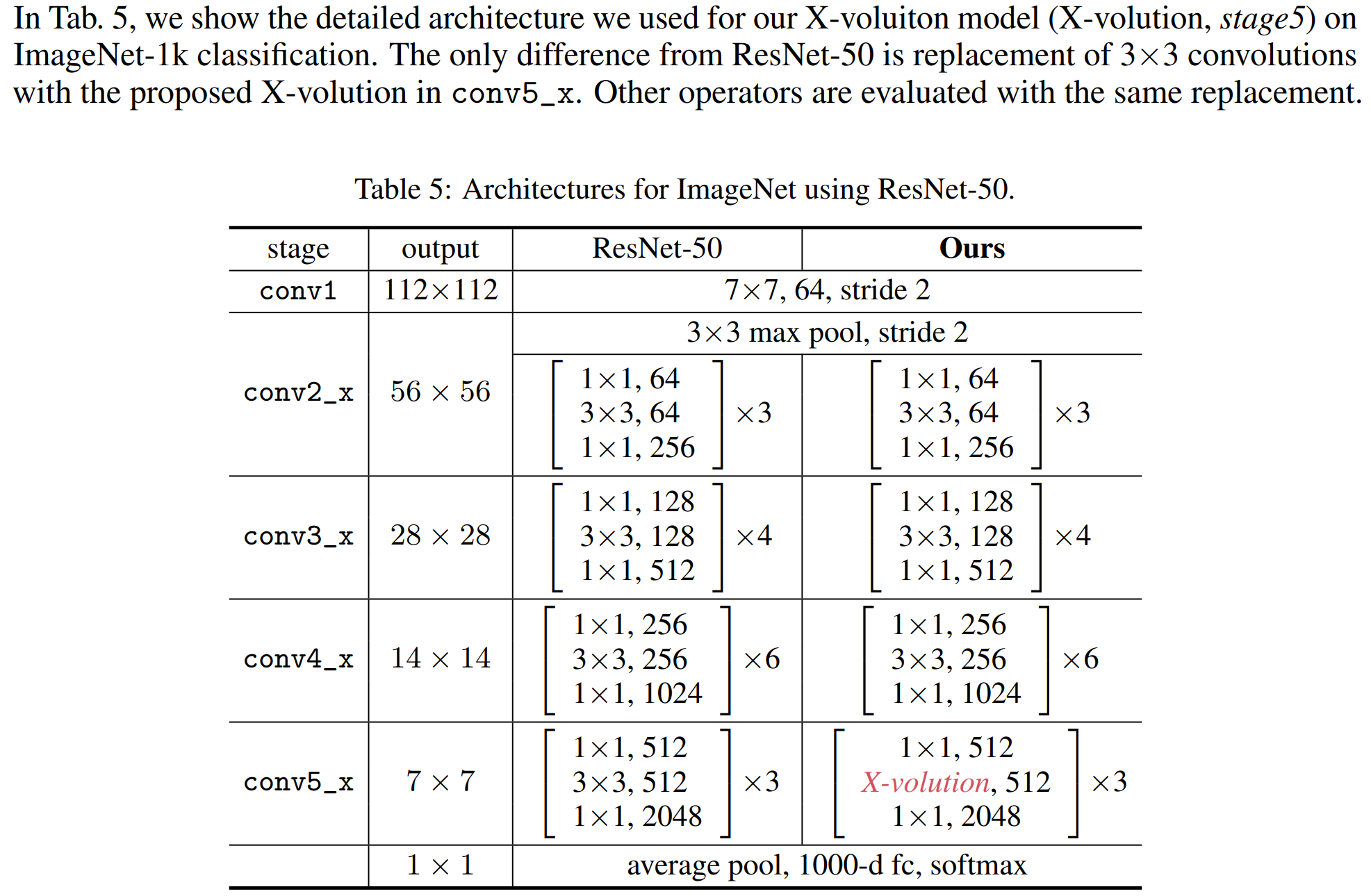

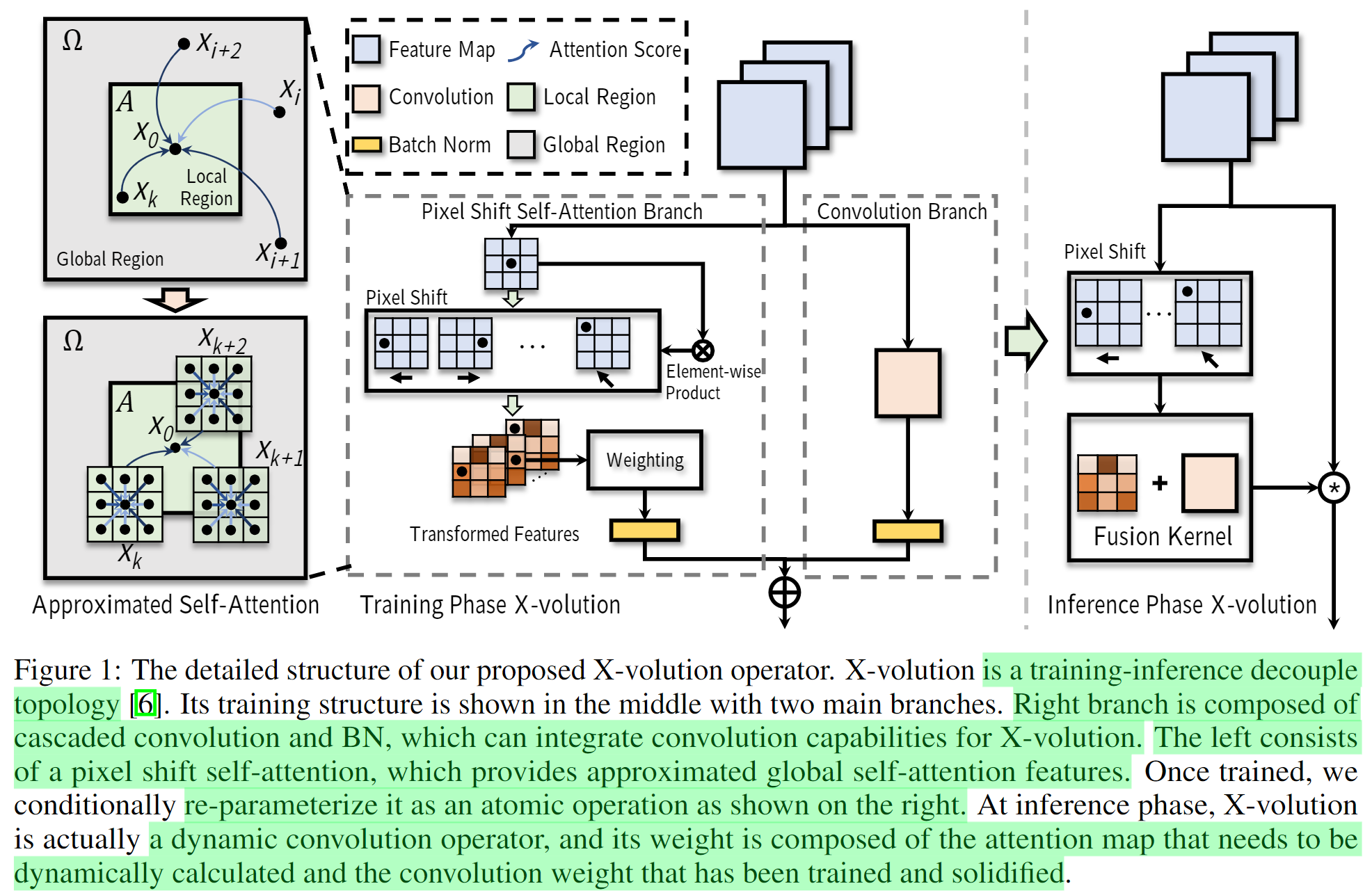

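The structure on the right is the re-parameterized one used at inference. For the model details, focus on the structure on the left, which is the training-time model.

The core of the left structure is a two-branch design:

- The right branch consists of cascaded convolution and BN layers (a structure that can be fused into a single convolution layer at inference). It lets the model use convolution to enhance local information.

- The left branch is built on self-attention. It consists of a unit made of a (spatial) pixel shift operation (see "Five implementation strategies for Spatial-Shift-Operation in PyTorch") and convolution, followed by a BN layer, and is used to approximate global self-attention.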
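The pixel shift mentioned for the left branch can be sketched in a few lines. This is a minimal NumPy illustration under stated assumptions, not the paper's implementation: the function name `spatial_shift`, the `(C, H, W)` layout, and the zero filling at the borders are all choices made for this sketch.

```python
import numpy as np


def spatial_shift(x, di, dj):
    """Shift a (C, H, W) feature map so that out[:, i, j] = x[:, i + di, j + dj].

    Positions whose source pixel falls outside the map are filled with zeros
    (an assumption of this sketch; other border rules are possible).
    """
    _, h, w = x.shape
    pad_i, pad_j = abs(di), abs(dj)
    padded = np.pad(x, ((0, 0), (pad_i, pad_i), (pad_j, pad_j)))
    # In padded coordinates, original pixel (i, j) sits at (i + pad_i, j + pad_j).
    return padded[:, pad_i + di : pad_i + di + h, pad_j + dj : pad_j + dj + w]


x = np.arange(9, dtype=np.float64).reshape(1, 3, 3)
shifted = spatial_shift(x, di=0, dj=1)  # every pixel now holds its right-hand neighbor
```

After the shift, a plain element-wise operation between `x` and `shifted` pairs each pixel with a fixed neighbor, which is what makes shifts convenient as a building block.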

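Fusing Conv and BN layers at inference is a common way to speed up inference. The BN parameters are fixed at inference time, so there is no longer any need to compute the mean and standard deviation across the batch as in training. BN can therefore be treated as a $1 \times 1$ convolution shared across the batch, with the number of groups equal to the number of input channels (which also equals the number of output channels), and this makes it easy to merge with the preceding layer. In practice, of course, the code can be built directly from the formula obtained by merging the two computations.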
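As a rough illustration of the merged formula, the sketch below folds inference-time BN statistics into the preceding convolution. It assumes a $1 \times 1$ convolution applied to flattened pixels so that the convolution is a plain matrix product; the helper name `fuse_conv1x1_bn` and all variable names are hypothetical. The same per-output-channel scaling applies to a $K \times K$ kernel.

```python
import numpy as np


def fuse_conv1x1_bn(weight, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold inference-time BN into the preceding 1x1 convolution.

    weight: (C_out, C_in); bias, gamma, beta, mean, var: (C_out,).
    Returns a fused (weight, bias) pair with the same shapes.
    """
    scale = gamma / np.sqrt(var + eps)          # per-output-channel BN scale
    fused_weight = weight * scale[:, None]      # scale every output row
    fused_bias = (bias - mean) * scale + beta   # shift absorbed into the bias
    return fused_weight, fused_bias


rng = np.random.default_rng(0)
c_in, c_out, n_pixels, eps = 4, 3, 10, 1e-5
x = rng.normal(size=(c_in, n_pixels))           # flattened feature map
weight = rng.normal(size=(c_out, c_in))
bias = rng.normal(size=c_out)
gamma, beta = rng.normal(size=c_out), rng.normal(size=c_out)
mean, var = rng.normal(size=c_out), rng.uniform(0.5, 2.0, size=c_out)

# Reference path: convolution followed by BN with fixed statistics.
y = weight @ x + bias[:, None]
y_bn = (y - mean[:, None]) / np.sqrt(var[:, None] + eps) * gamma[:, None] + beta[:, None]

# Fused path: a single convolution.
fw, fb = fuse_conv1x1_bn(weight, bias, gamma, beta, mean, var, eps)
y_fused = fw @ x + fb[:, None]
```

The two paths agree up to floating-point error, which is why the fused layer can replace the Conv + BN pair at inference.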

To describe more clearly how attention and convolution are combined, the rest of this note follows the line of reasoning in the paper.

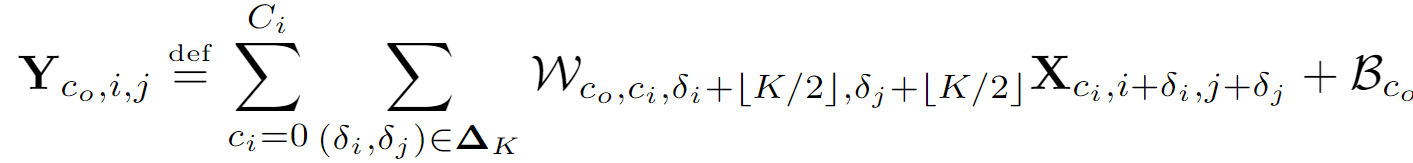

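Convolution

For an input $X$, convolution uses a spatially shared weight matrix $W$ (whose parameters are independent of the input) to aggregate the features in a local window $\Delta_K$ of size $K \times K$, and uses a bias $B$ for adjustment. In the formula, $(\delta_i, \delta_j)$ is the offset relative to the center of the local region being computed and can be positive or negative; when indexing into $W$, the maximum distance from the kernel center, $\lfloor K/2 \rfloor$, is added so that the offset becomes a valid index of $W$.

This can be viewed as a first-order linear weighting operation.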
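The indexing described above can be made concrete with a direct, unoptimized implementation. This is only a sketch under assumptions chosen for illustration (stride 1, odd kernel size, zero padding, a `(C, H, W)` layout, and the name `conv2d_naive`); it loops over the signed offsets and adds `K // 2` to turn them into kernel indices.

```python
import numpy as np


def conv2d_naive(x, weight, bias):
    """x: (C_in, H, W), weight: (C_out, C_in, K, K), bias: (C_out,).

    Stride 1, odd K, zero padding, so the output is (C_out, H, W).
    """
    c_out, _, k, _ = weight.shape
    _, h, w = x.shape
    r = k // 2                                   # largest offset from the kernel center
    padded = np.pad(x, ((0, 0), (r, r), (r, r)))
    y = np.zeros((c_out, h, w))
    for di in range(-r, r + 1):                  # signed offsets, positive and negative
        for dj in range(-r, r + 1):
            # Neighbor at offset (di, dj) for every output position at once.
            neighbor = padded[:, r + di : r + di + h, r + dj : r + dj + w]
            # Offsets are moved by r so that they index the kernel from 0.
            y += np.einsum("oc,chw->ohw", weight[:, :, di + r, dj + r], neighbor)
    return y + bias[:, None, None]


x = np.arange(2 * 3 * 3, dtype=np.float64).reshape(2, 3, 3)

# Identity kernel: a single 1 at the kernel center of each channel.
w_id = np.zeros((2, 2, 3, 3))
w_id[0, 0, 1, 1] = 1.0
w_id[1, 1, 1, 1] = 1.0
y_id = conv2d_naive(x, w_id, bias=np.zeros(2))

# Kernel that reads the right-hand neighbor (offset (0, +1) maps to index (1, 2)).
w_right = np.zeros((2, 2, 3, 3))
w_right[0, 0, 1, 2] = 1.0
y_right = conv2d_naive(x, w_right, bias=np.zeros(2))
```

The weights never look at the content of `x`, which is the sense in which the operation is first-order and input-independent.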

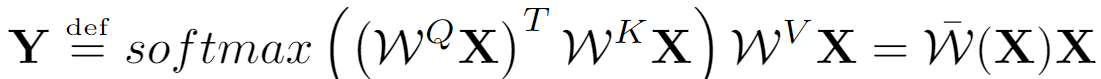

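Self-attention

Note that what is discussed here is the original global self-attention mechanism, not the various simplified versions proposed later.

Self-attention computes global similarity relations on linearly transformed $X$, which yields a dynamic weight for the input $X$ that is not shared across positions (its parameters depend on the input). In the form given in the paper, the final equivalent weight in the paper's formula can be viewed as "a dynamic (element-wise content-dependent) and spatially varying convolutional filter".

This can be viewed as a high-order global operation.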
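To make "dynamic weight" concrete, here is a minimal single-head global self-attention in NumPy. The layout (positions as columns of $X$, one output row per position), the function names, and the omission of the usual scaling factor are assumptions of this sketch rather than details taken from the paper.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)      # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def global_self_attention(x, w_q, w_k, w_v):
    """x: (C, L) with one column per position; w_q, w_k, w_v: (D, C).

    Returns the (L, D) output and the (L, L) attention weights.
    """
    q, k, v = w_q @ x, w_k @ x, w_v @ x          # each (D, L)
    attn = softmax(q.T @ k, axis=-1)             # (L, L): one weight row per query position
    return attn @ v.T, attn


rng = np.random.default_rng(0)
c, d, seq_len = 4, 3, 5
x = rng.normal(size=(c, seq_len))
w_q, w_k, w_v = (rng.normal(size=(d, c)) for _ in range(3))

y, attn = global_self_attention(x, w_q, w_k, w_v)
# The projections are fixed, yet the aggregation weights change with the input.
_, attn_scaled = global_self_attention(2.0 * x, w_q, w_k, w_v)
```

Unlike a convolution kernel, `attn` is recomputed from the input and differs for every query position, which is why the operation is described as dynamic and spatially varying.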

Note, however, that the paper's formula actually has a problem.

Following the paper's setup, let the input sequence be $X \in \mathbb{R}^{C \times L}$ with sequence length $L$, and let the operation contain independent, spatially shared linear embedding weights $W^Q, W^K, W^V \in \mathbb{R}^{D \times C}$ (following the usual convention, the three have the same shape). The shape that the last term of the paper's formula ought to have then does not match the shape it actually has. So a sensible form would be:

$$
Y \in \mathbb{R}^{L \times D} \triangleq
\text{softmax}(\underbrace{(W^QX)^{\top}}_{L \times D}
\underbrace{W^KX}_{D \times L})
\underbrace{(W^VX)^{\top}}_{L \times D}
$$

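This cannot be made equivalent to the final form of the formula in the paper (the shape of Y does not match that of X), unless the equivalence is meant conceptually rather than as a formula derivation.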
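The shape mismatch can be checked with a few lines of plain Python. This is only a sketch under assumed toy sizes (D = 2 channels, L = 3 positions); the helpers `matmul`, `transpose`, `softmax_rows`, and `shape` and the identity projections are placeholders of mine, not anything from the paper.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax_rows(m):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def shape(m):
    return (len(m), len(m[0]))

# Assumed toy input: D x L, one column per position.
X = [[1.0, 0.0, 2.0],
     [0.5, 1.0, -1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
W_q = W_k = W_v = identity  # placeholder D x D projections

logits = matmul(transpose(matmul(W_q, X)), matmul(W_k, X))   # (L x D)(D x L) -> L x L
Y = matmul(softmax_rows(logits), transpose(matmul(W_v, X)))  # (L x L)(L x D) -> L x D

print(shape(X), shape(logits), shape(Y))
```

With these sizes X is 2 x 3 while Y comes out 3 x 2, which is the inconsistency noted above.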

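A more reasonable formulation would write the process in a shape-consistent form. But even though such a form matches in shape, it still cannot yield the final weight in the paper's formula unless the softmax is removed.

If the softmax is removed, the expression can be rearranged so that the paper's final weight appears, and that looks quite suitable :>.

Note, however, that the authors do not build the structure on the actual form of self-attention, and the later derivation is not based on the softmax form either. So the logits stay logits throughout 😅.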

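Approximating global self-attention

To reduce the complexity of computing dynamic weights in self-attention, the authors focus on how those dynamic weights are computed, and simplify that computation with off-the-shelf operators such as convolution or element-wise product.

In its final form, the authors in effect express self-attention as a convolution by combining spatial element shifts with element-wise products.

Note that this operation is not mathematically equivalent to the attention computation; it is equivalent only conceptually.

Then again, why must an operator keep the form of self-attention at all? What makes self-attention effective should be the logic of its computation, not its form. And why did I keep expecting the derivation to start from self-attention? I think I now see where this unease comes from: the authors set out to approximate self-attention, yet they build the derivation on a construction that is only conceptually equivalent to it, which does not quite hold up. This differs from earlier work such as lambda network (LambdaNetworks: Modeling long-range Interactions without Attention), which states up front that it is a new form that no longer follows the original computation of self-attention, so its later modifications are natural and beyond reproach. This paper lacks that kind of setup and transition in its earlier sections, which raises expectations it does not meet. Put differently, if one stays with the actual computation structure of self-attention, it is hard to simplify it into this paper's framework.

So, to follow the paper's derivation, we need to set aside the form of self-attention itself and start from its core idea: the output at a given position is obtained by aggregating global information according to that position's similarity to the global context.

Self-attention is an aggregation based on similarity. Convolution is an aggregation based on preset weights. (Only ordinary convolution is considered here, not dynamic weights.)

So the newly constructed form in this paper contains both ingredients: similarity computation (dot products of feature vectors) and aggregation (a weighted aggregation of global features, using the similarities given by those dot products).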
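The contrast between the two kinds of aggregation can be made concrete on a 1D toy signal. This is a minimal sketch, assuming scalar features and raw (softmax-free) products as similarities; the function names `conv1d_same` and `similarity_aggregate` are hypothetical.

```python
def conv1d_same(x, kernel):
    """Aggregate each position's local window with preset weights (zero padding)."""
    r = len(kernel) // 2
    padded = [0.0] * r + list(x) + [0.0] * r
    return [sum(w * padded[i + k] for k, w in enumerate(kernel))
            for i in range(len(x))]

def similarity_aggregate(x):
    """Aggregate all positions, weighting x[j] by its similarity x[i] * x[j] to x[i]."""
    return [sum((xi * xj) * xj for xj in x) for xi in x]

x = [1.0, 2.0, 3.0]
print(conv1d_same(x, [0.25, 0.5, 0.25]))  # weights fixed in advance, local window
print(similarity_aggregate(x))            # weights computed from the input, global
```

In the first function the weights never look at the input; in the second they are recomputed from the input at every position, which is the property the paper's construction tries to keep while restricting the range.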

Next, let us walk through the authors' derivation.

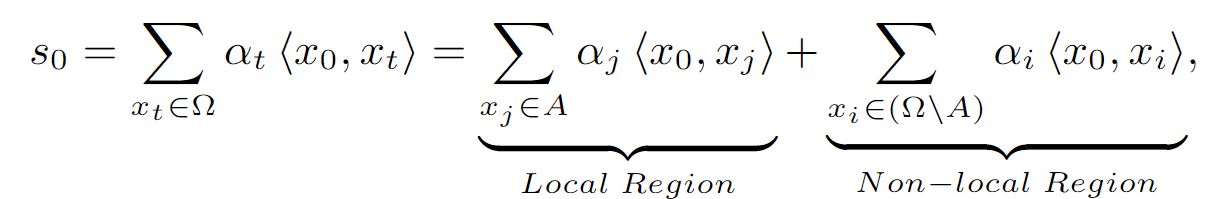

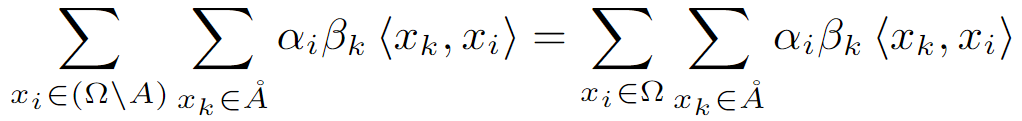

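First, the paper writes out the attention logits at a given input position.

From that expression, "attention logits" here does not mean the attention logits map of the original self-attention (i.e. Q^TK) but rather Q^TKV^T.

Given the products involved, the coefficient term here is a vector: it is built from a vector element of the input X and the corresponding elements of the three weight matrices applied to the input, and all of these operations are element-wise multiplications.

The similarity computation used here roughly corresponds to the QK computation. In the QK computation, the weight w applied to each input feature is shared across all positions but not across channels. Here, however, the query and key weights are pulled out of the similarity computation and folded into the transform of "V", which amounts to computing the similarity directly on the raw input.

The global aggregation is then split into two parts: one restricted, like a convolution, to a local region inside a given window, and one covering the remaining non-local region outside the window.

Because images have a strong markovian property [Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials], a given position can be approximated as a linear combination of its local neighborhood, with one linear weight per neighbor. The non-local term of the previous expression can then be rewritten by substituting this approximation.

In the rewritten expression, the feature at the current position is locally approximated and replaced by a combination of features inside the given window.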
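The substitution relies only on the linearity of the inner product: the similarity between the approximated feature and a far position equals the weighted sum of the neighbors' similarities to that position. A small numeric check, where `neighbors`, `beta`, and `x_far` are made-up toy values rather than quantities from the paper:

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

neighbors = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]  # features inside the local window
beta = [0.5, 0.25, 0.25]                          # hypothetical linear weights
x_far = [2.0, -1.0]                               # a position outside the window

# Local approximation of the reference feature: a weighted sum of its neighbors.
x0_approx = [sum(b * n[c] for b, n in zip(beta, neighbors)) for c in range(2)]

# Similarity of the approximated feature to the far position ...
lhs = dot(x0_approx, x_far)
# ... equals the weighted sum of each neighbor's similarity to the far position.
rhs = sum(b * dot(n, x_far) for b, n in zip(beta, neighbors))

print(x0_approx, lhs, rhs)
```

Both sides agree, so the non-local term can be expressed through the neighbors alone.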

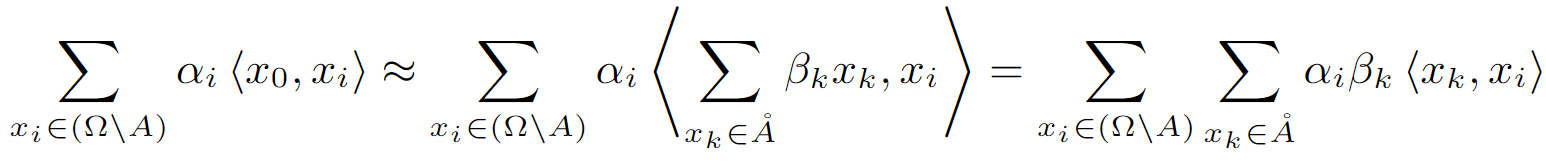

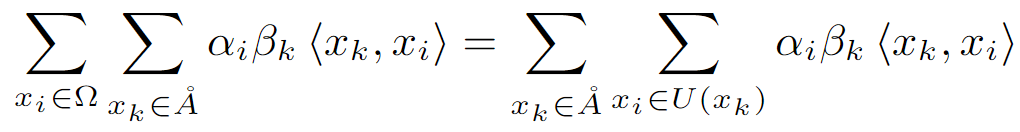

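Without loss of generality, the coefficients of the "local region" that was excluded from the non-local computation are set to 0, which fills in the hole.

Given the markovian property of images, the authors assume that the interaction between the reference point (and the positions near it) and positions far from the reference point is very weak. This allows a further simplification: the positions being summed over are restricted to the local regions around the reference point's neighbors.

This feels a bit like a second-order approximation: the similarity between a position and a larger neighborhood is approximated through the similarities between that position's local neighbors and their own neighborhoods (that is, the attention results of the neighboring pixels). In other words, only the two most relevant levels of relationship are kept.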

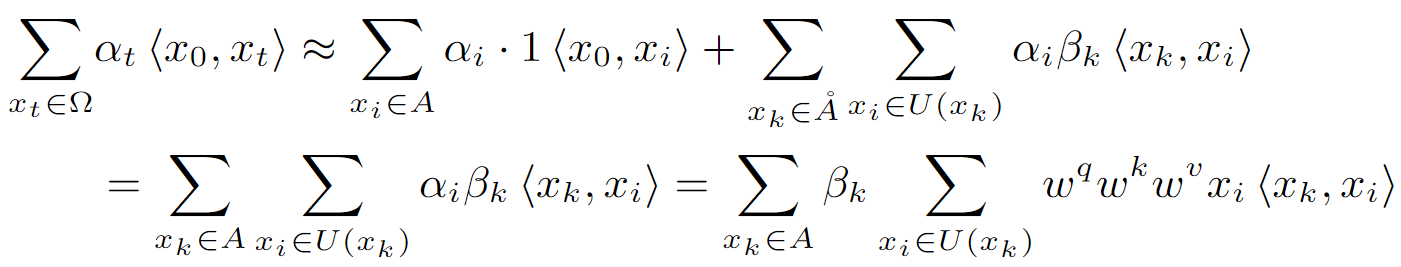

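Substituting everything back into the original expression gives the final form of the attention logits.

The way the terms are combined is a little confusing at first. Where did the ring on top of the region symbol go? The ring-topped symbol denotes the region with its center point excluded. The local term exactly fills in that excluded center point, so the ring is dropped. The formula then reads as follows: the local term, "the weighted sum of the relationships between the center of the local region and its neighbors", plus the non-local term, "the weighted sum, over the points of the local region other than its center, of each point's weighted sum of relationships with its own neighbors", gives "the weighted sum, over all points of the local region centered at the reference point, of each point's weighted sum of relationships with its own neighbors".

Does merging the two terms make the earlier split into "local" and "non-local" look redundant? Would the derivation not hold just as well if everything were treated globally from the start?

The split is in fact necessary, because the approximation is applied only to the non-local term. The two terms can be merged directly only because the window used for the local approximation has the same size as the window of the local term. If the sizes differed, they could not be fused this way.

Through this aggregation over local neighborhoods, the global relationship is approximated. This is Pixel Shift Self-Attention (PSSA).

Next comes the centerpiece of the paper: how to implement the form derived above using spatial shifts and convolution.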

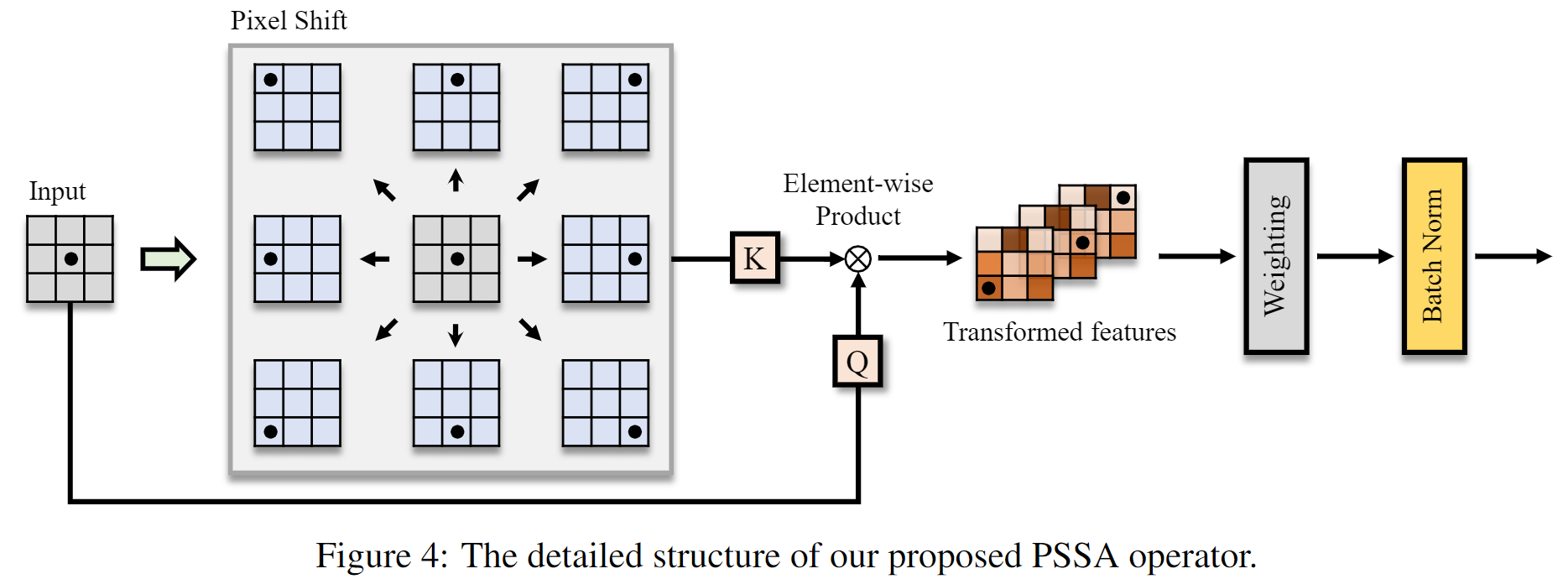

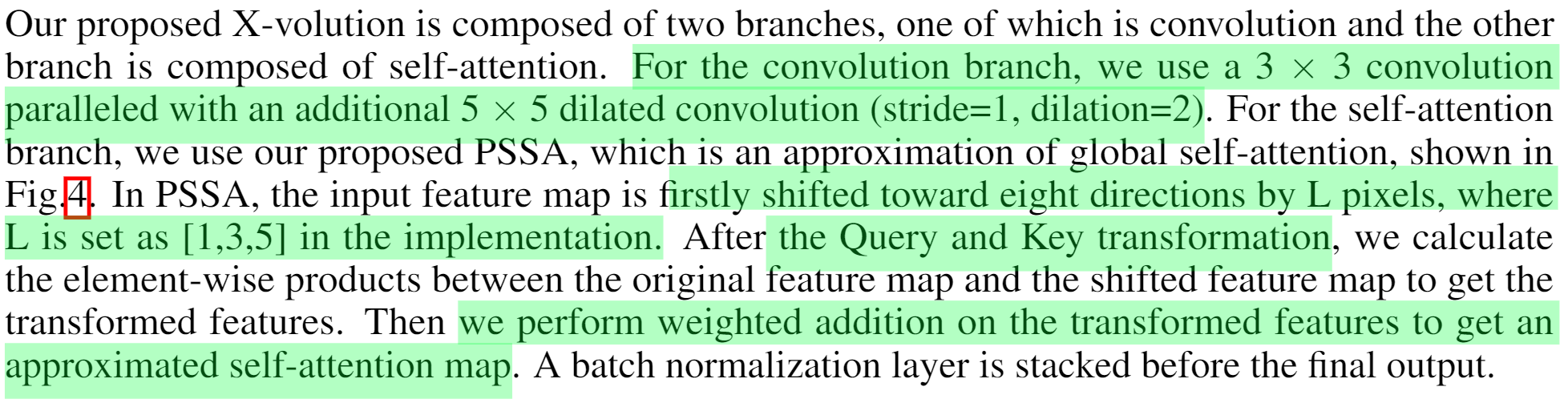

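How Pixel Shift Self-Attention (PSSA) is implemented

The figure above shows the detailed PSSA procedure from the appendix. The description in the main text is very easy to misread (such poor writing :<).

First, the feature map is spatially shifted by L pixels along each of the eight given directions, and the shifted features are then passed through the Key transform.

Then the Query-transformed original feature and the Key-transformed features are multiplied element-wise to obtain the transformed features.

In effect, the shift-product operation builds contextual relationships between neighboring points, and through hierarchical stacking the contextual information gradually propagates to the global range.

Finally, a convolution performs a weighted sum over this neighborhood information to produce an approximate self-attention map.

Since the spatial shift, the element-wise product, and the weighted summation all have the same order of complexity, the PSSA built from them is an operator of that complexity as well.

In short, with this strategy self-attention is converted into a standard convolution applied to transformed features. With multiple layers stacked, the structure can effectively approximate global self-attention through the propagation of contextual relationships.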
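The shift, multiply, and weighted-sum steps described above can be sketched on a single-channel feature map. This is a heavily simplified illustration, not the authors' implementation: the Query and Key transforms are reduced to hypothetical scalars `w_q` and `w_k`, the aggregating convolution to one weight per direction (`agg`), and borders are zero-padded by assumption.

```python
# Eight unit offsets (dy, dx) around a pixel.
DIRECTIONS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

def shift(fmap, dy, dx):
    """out[y][x] = fmap[y + dy][x + dx], with zeros where the source falls outside."""
    h, w = len(fmap), len(fmap[0])
    return [[fmap[y + dy][x + dx] if 0 <= y + dy < h and 0 <= x + dx < w else 0.0
             for x in range(w)]
            for y in range(h)]

def pssa_sketch(fmap, w_q, w_k, agg, step=1):
    """Shift, multiply element-wise, then take a weighted sum over the 8 directions."""
    h, w = len(fmap), len(fmap[0])
    query = [[w_q * v for v in row] for row in fmap]      # query transform (scalar here)
    out = [[0.0] * w for _ in range(h)]
    for (dy, dx), a in zip(DIRECTIONS, agg):
        shifted = shift(fmap, dy * step, dx * step)       # spatial shift by `step` pixels
        for y in range(h):
            for x in range(w):
                key = w_k * shifted[y][x]                 # key transform (scalar here)
                out[y][x] += a * query[y][x] * key        # product, then weighted sum
    return out

ones = [[1.0] * 3 for _ in range(3)]
result = pssa_sketch(ones, w_q=1.0, w_k=1.0, agg=[1.0] * 8)
for row in result:
    print(row)
```

On an all-ones 3 x 3 map with unit weights, each output simply counts the in-bounds neighbors (3 at corners, 5 on edges, 8 at the center). In this sketch every pixel does a fixed amount of work, eight multiply-adds, so the cost grows linearly with the number of pixels.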

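Wait. At this point we should ask: why can the earlier formula be realized by this shift-plus-transform scheme?

In the final formula, the attention process can be split into two cascaded steps, similarity computation and local integration: first compute similarities within each small local region, then integrate the results of all these small regions to get the final output.

- The similarity computation within each small local region can be implemented directly with spatial shifts and multiplication. Shifting in a given direction and multiplying computes the similarity between two points that lie along that direction at a distance equal to the shift. With the (shared?) weights (the key and query transforms), the feature map of each direction is processed separately. This yields the similarity between each position and every point in its adjacent local region.

- Here the weighting clearly happens before the correlation (the multiplication) is computed, which is inconsistent with the design in the earlier formula (I really do not know what the authors were doing).

- After the similarities are computed from the query-transformed original feature and the key-transformed shifted features, the first aggregation is due (the sum whose coefficient is omitted later in the derivation). Normally this step would merge the results of the different shifts to obtain a locally aggregated result. Then comes the second weighted aggregation, where a convolution is applied on the feature map to integrate local features (I think the convolution parameters can be seen as the outer aggregation weights in the formula).

- The two weighted aggregations together approximate the aggregation of global information. The resulting value can be viewed as a dynamically weighted result over the positions inside the local region. The authors note that in practice PSSA is usually used in a hierarchically stacked manner, and that the weighting operation can be omitted in the stacked structure because hierarchical stacking already implies the weighting of neighboring pixels. So presumably the first aggregation is dropped and a single aggregation does the job.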

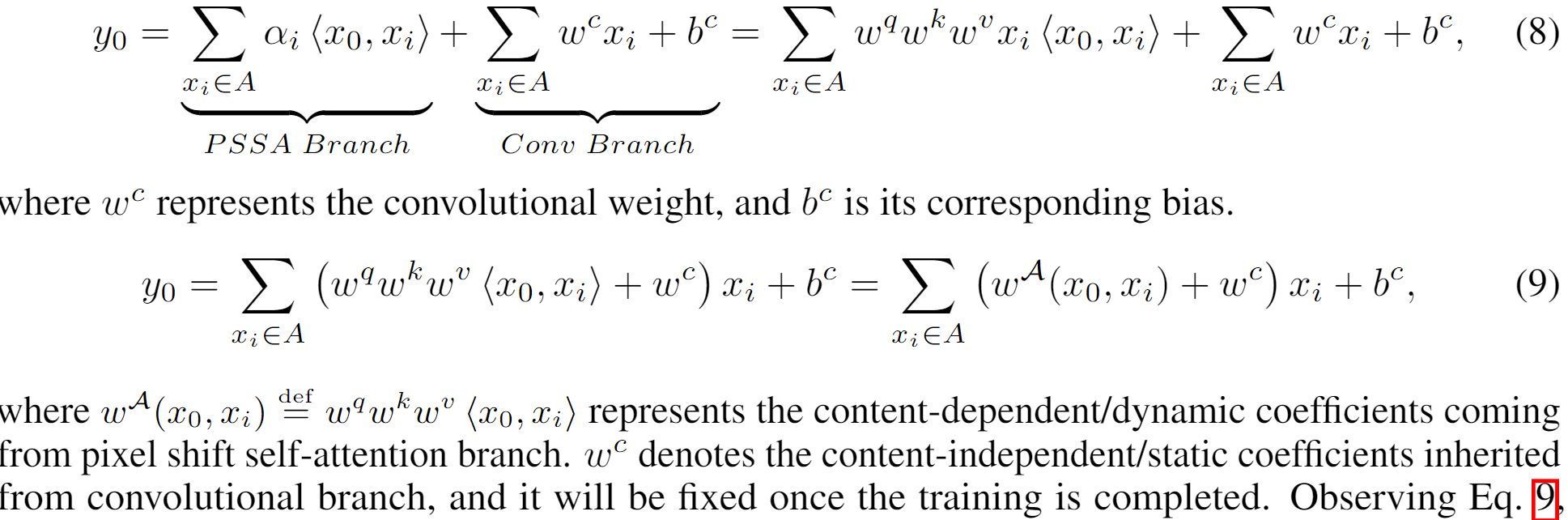

Unifying convolution and self-attention

The authors' summary of why convolution and self-attention are complementary is very incisive, so it is excerpted here: The convolution employs the inductive bias of locality and isotropy endowing it the capability of translation equivariance [1]. However, the local inherent instinct makes convolution failed to establish the long-term relationship which is necessary to formulate a Turing complete atomic operator [4, 23]. In contrast to convolution, self-attention discards mentioned inductive bias, so-called low-bias, and strives to discover natural patterns from a data-set without explicit model assumption. The low-bias principle gives self-attention the freedom to explore complex relationships (e.g., long-term dependency, anisotropic semantics, strong local correlations in CNNs [7], e.t.c.), resulting in the scheme usually requires pre-training on extra oversize data-sets (e.g., JFT-300M, ImageNet21K). In addition, self-attention is difficult to optimize, requiring a longer training cycle and complex tricks [31, 20, 38, 2]. Witnessing this, several works [1, 36] propose that convolution should be introduced into the self-attention mechanism to improve its robustness and performance. In short, different model assumptions are adopted to make convolution and self-attention complement each other in terms of optimization characteristics (i.e., well-condition/ill-condition), attention scope (i.e., local/long-term), and content dependence (content-dependent/independent) e.t.c..

To summarize: different model assumptions make convolution and self-attention complementary, for example in optimization characteristics (well-conditioned vs. ill-conditioned), attention scope (local vs. long-range), and content dependence (content-dependent vs. content-independent).

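To unify the two, existing methods have made some attempts, such as Cvt: Introducing convolutions to vision transformers and AANet: Attention augmented convolutional networks. Their strategies are too coarse, however: they simply stack the two operations in layers or concatenate them, which makes it hard to obtain a single atomic operation (applying convolution and self-attention within the same module) and leaves the architecture less standardized. The very different computation patterns of the two operations pose many obstacles, but with the approximation above, both can be implemented in one unified pattern, namely convolution. Seen from another angle, convolution can conversely be regarded as a spatial inductive bias form of self-attention. On this basis, the authors combine the two into the multi-branch structure shown in Figure 1, which benefits from both at once.

Accordingly, the paper gives a formula for this structure in the training stage.

As for the correlation computation in that formula: because spatial shifts are used to model the relationships between neighborhoods, the local form shown in the formula only reflects the computation after shifting.

With the correlations already computed and the convolution operation, the self-attention computation is turned into a local dynamic convolution.

Through re-parameterization, this structure can be merged at inference time into a single dynamic convolution operator, named X-volution.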
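The merge works because both branches are linear in the same local window of the input, so their kernels can simply be added position by position. A 1D sketch of that idea, assuming a static 3-tap kernel for the convolution branch and a hypothetical shift-product rule for the dynamic branch (neither is taken from the paper):

```python
def apply_kernels(x, kernels):
    """Per-position (dynamic) convolution: position i uses its own kernel kernels[i]."""
    r = len(kernels[0]) // 2
    padded = [0.0] * r + list(x) + [0.0] * r
    return [sum(w * padded[i + k] for k, w in enumerate(kern))
            for i, kern in enumerate(kernels)]

x = [1.0, 2.0, 3.0, 4.0]
padded = [0.0] + x + [0.0]

static = [0.5, 1.0, 0.5]  # convolution branch: the same kernel at every position
# Hypothetical attention branch: each kernel entry is the product of the
# center feature with one of its shifted neighbors, so it depends on the input.
dynamic = [[x[i] * padded[i + k] for k in range(3)] for i in range(len(x))]

# Training-style view: run the two branches separately and add their outputs.
conv_out = apply_kernels(x, [static] * len(x))
attn_out = apply_kernels(x, dynamic)
two_branch = [c + a for c, a in zip(conv_out, attn_out)]

# Inference-style view: add the kernels first, then run one dynamic convolution.
merged = [[s + d for s, d in zip(static, dyn)] for dyn in dynamic]
single = apply_kernels(x, merged)

print(two_branch)
print(single)
```

The two printed lists match, which is the sense in which the multi-branch module collapses into one dynamic convolution.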

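Experimental results

Thoughts

The method is somewhat over-packaged, but the idea is indeed interesting: approximating global self-attention as an overall aggregation of the relationships between each point in a local range and that point's own local range.

The presentation of the method, however, is not clear.

What bothers me most is that the formulas and the method do not correspond closely; after a long time I still could not match them to the method description. I do not know who these formulas were written for.

An extremely unpleasant reading experience.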