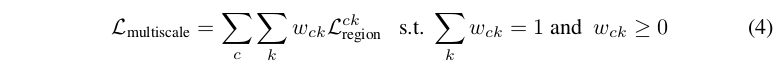

The key contribution of the paper is a loss function that enables region-level supervision.

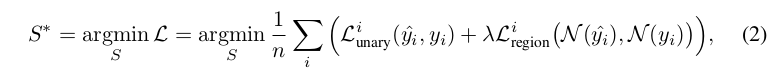

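This is the objective function with the region loss added. N denotes the set of neighboring pixels, the hatted y denotes the prediction, and the plain y denotes the ground truth.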

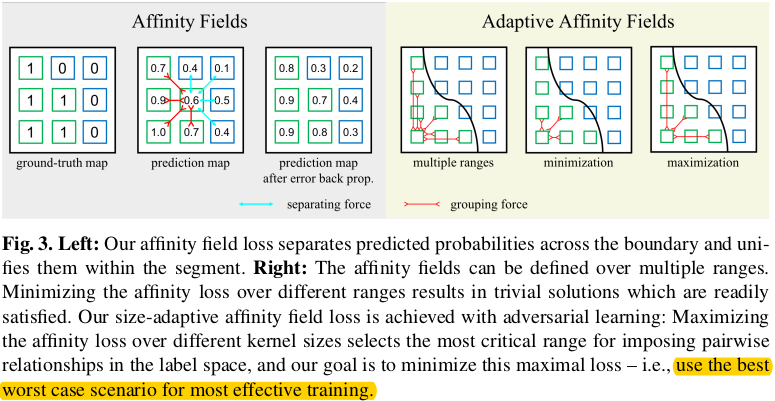

Affinity Field Loss Function

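A first form of region supervision can be stated as follows: for each pixel i and each of its neighboring pixels j, compute the cross-entropy between their predictions, and handle the pair differently depending on the ground truth at that location (that is, distinguish boundary regions from non-boundary regions).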

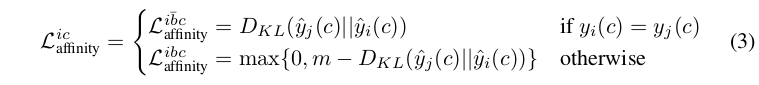
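The pairwise supervision described above can be sketched in NumPy. This is only an illustration under stated assumptions, not the paper's implementation: the pairwise measure is written here as a KL divergence between Bernoulli distributions (the text above calls it cross-entropy), the boundary term is written as a hinge with a hypothetical `margin`, and the names `bernoulli_kl`, `affinity_loss`, and `offset` are made up for this example.

```python
import numpy as np

def bernoulli_kl(p, q, eps=1e-8):
    """Elementwise KL divergence between Bernoulli(p) and Bernoulli(q)."""
    p = np.clip(p, eps, 1.0 - eps)
    q = np.clip(q, eps, 1.0 - eps)
    return p * np.log(p / q) + (1.0 - p) * np.log((1.0 - p) / (1.0 - q))

def affinity_loss(prob, label, offset=(0, 1), margin=3.0):
    """Pairwise loss between each pixel i and its neighbor j = i + offset.

    prob:   (C, H, W) per-class probabilities (the predictions).
    label:  (H, W) integer ground-truth labels.
    offset: non-negative (dy, dx) displacement from i to j (assumed form).
    margin: hinge margin for boundary pairs (assumed value).
    Returns (non_boundary_loss, boundary_loss), each averaged over its pairs.
    """
    dy, dx = offset
    H, W = label.shape
    # Two cropped views so that view_i[y, x] and view_j[y, x] are neighbors.
    p_i = prob[:, :H - dy, :W - dx]
    p_j = prob[:, dy:, dx:]
    same = label[:H - dy, :W - dx] == label[dy:, dx:]
    kl = bernoulli_kl(p_j, p_i).sum(axis=0)  # sum over class channels
    # Non-boundary pairs (same label): pull the two predictions together.
    non_boundary = float(kl[same].mean()) if same.any() else 0.0
    # Boundary pairs (different labels): push them apart up to the margin.
    diff = ~same
    boundary = float(np.maximum(0.0, margin - kl[diff]).mean()) if diff.any() else 0.0
    return non_boundary, boundary
```

With a uniform prediction every pair has zero divergence, so the non-boundary term is zero while each boundary pair is penalized by the full margin; a prediction that matches the labels exactly drives both terms to zero.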

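The experimental settings are as follows:

The effect of the constraint is as follows:

The left side of this figure shows how such a loss constrains the predictions. It also exposes a limitation of the method: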

Region-wise supervision often requires a preset kernel size for CNNs, where pairwise pixel relationships are measured in the same fashion across all pixel locations. However, we cannot expect one kernel size fits all categories, since the ideal kernel size for each category varies with the average object size and the object shape complexity.

Adaptive Kernel Sizes from Adversarial Learning

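The paper therefore goes on to propose a size-adaptive affinity region loss:

The region loss here is the one defined in Eq. 2. For a given class channel c and a kernel of size k, the corresponding region loss is weighted by w_ck. In effect, different classes and different kernel sizes receive different weights. For each class, the weights w_ck over all kernel sizes sum to 1.

One caveat, however: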
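The weighting scheme can be illustrated with a short NumPy sketch. It assumes the weights are produced by a softmax over the kernel-size axis, which is one simple way to make them non-negative and sum to 1 per class; the function names and the `(C, K)` array layout are hypothetical.

```python
import numpy as np

def size_weights(logits):
    """Softmax over the kernel-size axis: each class's weights sum to 1."""
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def multiscale_region_loss(region_loss, logits):
    """Weighted sum of per-class, per-kernel-size region losses.

    region_loss: (C, K) array, loss for class c with kernel size k.
    logits:      (C, K) array of unnormalized size weights (assumed form).
    """
    w = size_weights(logits)
    return float((w * region_loss).sum())
```

With all logits equal, every kernel size gets the same weight, so the result is the per-class mean over kernel sizes, summed over classes.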

If we just minimize the affinity loss with size weighting w included, w would likely fall into a trivial solution. As illustrated in Fig 3 right, the affinity loss would be minimum if the smallest kernels are highly weighted for non-boundary terms and the largest kernels for boundary terms, since nearby pixels are more likely to belong to the same object and far-away pixels to different objects. Unary predictions based on the image would naturally have such statistics, nullifying any potential effect from our pairwise affinity supervision.

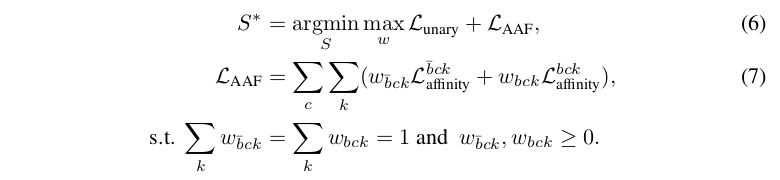

In other words, directly minimizing the affinity loss together with the size weights w is likely to drive w to a trivial solution. As Fig. 3 shows, the loss is smallest when the smallest kernels get most of the weight for the non-boundary term and the largest kernels get most of the weight for the boundary term, because nearby pixels tend to belong to the same object and distant pixels to different objects. Unary predictions from the image already have these statistics, so the pairwise affinity supervision would end up having no real effect.

To optimize the size weighting without trivializing the affinity loss, we need to push the selection of kernel sizes to the extreme. Intuitively, we need to enforce pixels in the same segment to have the same label prediction as far as possible, and likewise to enforce pixels in different segments to have different predictions as close as possible. We use the best worst case scenario for most effective training. We formulate the adaptive kernel size selection process as optimizing a two-player minimax game: While the segmenter should always attempt to minimize the total loss, the weighting for different kernel sizes in the loss should attempt to maximize the total loss in order to capture the most critical neighbourhood sizes.

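That is, to optimize the size weights without trivializing the affinity loss, the choice of kernel size has to be pushed to the extreme. Intuitively, pixels in the same segment should share a label prediction over as large a distance as possible, and pixels in different segments should have different predictions even when they are as close together as possible. Training uses the best worst-case scenario. Adaptive kernel-size selection is formulated as a two-player minimax game: the segmenter always tries to minimize the total loss, while the weights over kernel sizes try to maximize it, so as to capture the most critical neighborhood sizes. This makes the loss more targeted and supervises errors more strongly.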
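The "maximizing" player of this game can be illustrated with a toy NumPy example. It holds the per-kernel-size losses fixed and only runs gradient ascent on the size weights, which are parameterized by a softmax over hypothetical logits; in real training the segmenter would be updated at the same time to minimize the same total. The function names, step count, and learning rate are all assumptions for this sketch.

```python
import numpy as np

def softmax(a):
    e = np.exp(a - a.max())
    return e / e.sum()

def ascend_size_weights(losses, steps=200, lr=0.5):
    """Gradient ascent on softmax logits to maximize sum_k w_k * loss_k.

    losses: per-kernel-size losses, treated as constants here.
    Returns the final weights, which are non-negative and sum to 1.
    """
    losses = np.asarray(losses, dtype=float)
    a = np.zeros_like(losses)
    for _ in range(steps):
        w = softmax(a)
        total = float(w @ losses)
        # d(total)/d(a_k) = w_k * (loss_k - total) for a softmax parameterization.
        a += lr * w * (losses - total)
    return softmax(a)
```

The ascent shifts weight toward the kernel size whose loss is currently largest, which is the sense in which the weights "capture the most critical neighbourhood sizes".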

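Formally, we have:

Combined with Eq. 3, the region loss here can be split into a boundary term and a non-boundary term, as follows:

The two terms are kept separate because the ideal kernel size is assumed to differ between the two kinds of region.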
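As a reading aid, the description above (a minimax over the segmenter S and the size weights w, per-class and per-kernel-size weights that sum to 1, and separate boundary and non-boundary terms) corresponds to an objective of roughly the following shape. The symbol names, with b marking the boundary term and b̄ the non-boundary term, are assumptions of this sketch and should be checked against the paper's own equations.

$$
\begin{aligned}
S^* &= \arg\min_{S} \max_{w} \; \mathcal{L}_{\text{unary}} + \mathcal{L}_{\text{AAF}}, \\
\mathcal{L}_{\text{AAF}} &= \sum_{c} \sum_{k} \left( w_{\bar{b}ck}\, \mathcal{L}^{\bar{b}ck}_{\text{affinity}} + w_{bck}\, \mathcal{L}^{bck}_{\text{affinity}} \right), \\
\text{s.t.} \quad & \sum_{k} w_{\bar{b}ck} = \sum_{k} w_{bck} = 1, \qquad w_{\bar{b}ck} \ge 0, \; w_{bck} \ge 0 .
\end{aligned}
$$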

Other details

- Learning rate decay: polynomial decay, (1-iter/max_iter)^0.9

- Initial learning rate: 0.001

- Training iterations: The training iterations for all experiments is 30K on VOC dataset and 90K on Cityscapes dataset while the performance can be further improved by increasing the iteration number.

- Momentum and weight decay are set to 0.9 and 0.0005, respectively.

- For data augmentation, we adopt random mirroring and random resizing between 0.5 and 2 for all datasets.

- On the ground truth and the feature map being at different scales during supervision: We do not upscale the logits (prediction map) back to the input image resolution, instead, we follow [DeepLab]’s setting by downsampling the ground-truth labels for training (output stride = 8).

- PSPNet shows that larger “cropsize” and “batchsize” can yield better performance. In their implementation, “cropsize” can be up to 720 × 720 and “batchsize” to 16 using 16 GPUs.

- To speed up the experiments for validation on VOC, we downsize “cropsize” to 336 × 336 and “batchsize” to 8 so that a single GTX Titan X GPU is sufficient for training. We set “cropsize” to 480 × 480 during inference.

- For testing on PASCAL VOC 2012 and all experiments on Cityscapes dataset, we use 4-GPUs to train the network. On VOC dataset, we set the “batchsize” to 16 and set “cropsize” to 480 × 480. On Cityscapes, we set the “batchsize” to 8 and “cropsize” to 720 × 720.

- For inference, we boost the performance by averaging scores from left-right flipped and multi-scale inputs (scales = {0.5, 0.75, 1, 1.25, 1.5, 1.75}).
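The polynomial learning-rate decay listed above can be written as a small function. This is a minimal sketch using the values from the list (initial rate 0.001, power 0.9, 30K iterations on VOC); the function name `poly_lr` is hypothetical.

```python
def poly_lr(base_lr, it, max_iter, power=0.9):
    """Polynomial decay: base_lr * (1 - it / max_iter) ** power."""
    return base_lr * (1.0 - it / max_iter) ** power

# Learning rate at a few points of a 30K-iteration run.
schedule = [poly_lr(0.001, it, 30000) for it in (0, 15000, 30000)]
```

The rate starts at the initial value, decreases monotonically, and reaches zero at the final iteration.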

Summary

We propose adaptive affinity fields (AAF) for semantic segmentation, which incorporate geometric regularities into segmentation models, and learn local relations with adaptive ranges through adversarial training. Compared to other alternatives, our AAF model is 1) effective (encoding rich structural relations), 2) efficient (introducing no inference overhead), and 3) robust (not sensitive to domain changes). Our approach achieves competitive performance on standard benchmarks and also generalizes well on unseen data. It provides a novel perspective towards structure modeling in deep learning.

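In short, the paper proposes a semantic segmentation method based on adaptive affinity fields (AAF), which builds geometric regularities into the segmentation model and learns local relations with adaptive ranges through adversarial training.

Compared with the alternatives, the AAF model is:

- Effective (it encodes rich structural relations)

- Efficient (it adds no inference overhead)

- Robust (it is not sensitive to domain changes)

The method achieves competitive performance on standard benchmarks and generalizes well to unseen data. It offers a new perspective on structure modeling in deep learning.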