偶然间从arxiv上翻到的论文,可以看做是对之前工作Hierarchical multi-scale attention for semantic segmentation的一个扩展。

从摘要读论文

Aggregating information from features across different layers is an essential operation for dense prediction models.

本文重点关注与跨层的特征集成的问题。

Despite its limited expressiveness, feature concatenation dominates the choice of aggregation operations.

虽说是特征拼接,但是大多数还会跟一些复杂的卷积结构。

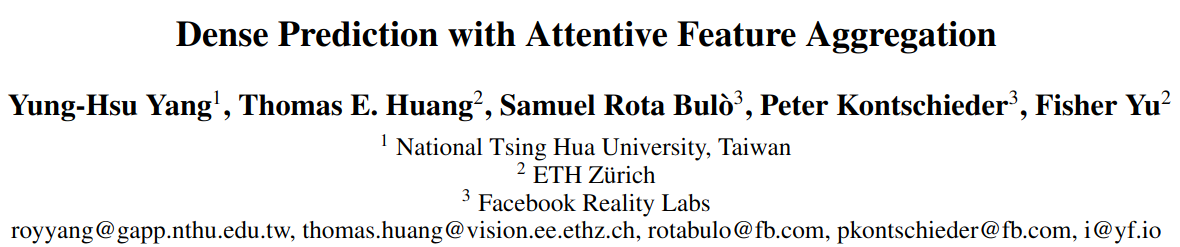

In this paper, we introduce Attentive Feature Aggregation (AFA) to fuse different network layers with more expressive non-linear operations. AFA exploits both spatial and channel attention to compute weighted average of the layer activations.

核心模块之AFA。使用空间和通道注意力来加权求和不同层的特征。从而构建一种非线性的集成操作。

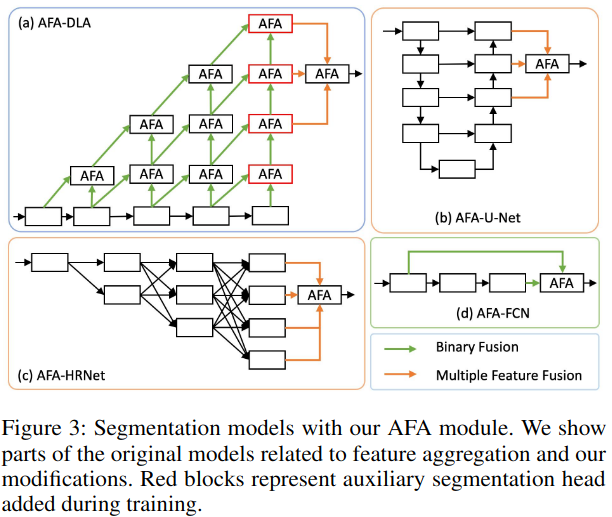

Inspired by neural volume rendering, we extend AFA with Scale-Space Rendering (SSR) to perform late fusion of multi-scale predictions.

这里提到一个很有趣的点,是用来融合多尺度预测的结构。结构的设计借鉴了神经体渲染的想法(我不太了解这个方面)。

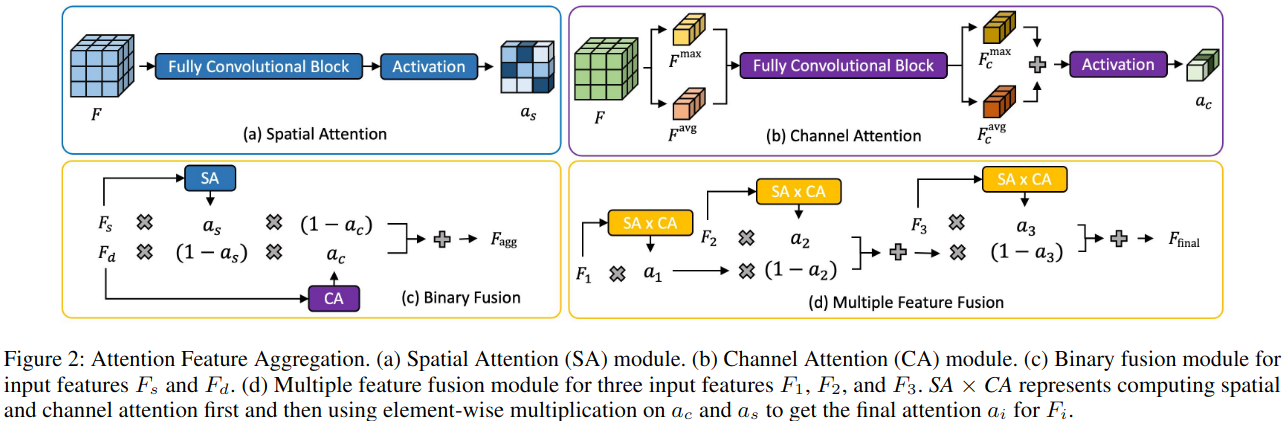

AFA is applicable to a wide range of existing network designs.

由于AFA本身就是一个模型通用的模块,所以可以很容易的迁移到不同的模型中来实现特征的跨层集成。

Our experiments show consistent and significant improvements on challenging semantic segmentation benchmarks, including Cityscapes, BDD100K, and Mapillary Vistas, at negligible computational and parameter overhead. In particular, AFA im-proves the performance of the Deep Layer Aggregation (DLA) model by nearly 6% mIoU on Cityscapes. Our experimental analyses show that AFA learns to progressively refine segmentation maps and to improve boundary details, leading to new state-of-the-art results on boundary detection benchmarks on BSDS500 and NYUDv2.

尝试了分割任务和边缘检测任务。

主要内容

- We propose Attentive Feature Aggregation (AFA) as a non-linear feature fusion operation to replace the prevailing tensor concatenation or summation strategies.

- Our attention module uses both spatial and channel attention to learn and predict the importance of each input signal during fusion. Aggregation is accomplished by computing a linear combination of the input features at each spatial location, weighted by their relevance.

- Compared to linear fusion operations, our AFA module can take into consideration complex feature interactions and attend to different feature levels depending on their importance.

- AFA introduces negligible computation and parameter overhead and can be easily used to replace fusion operations in existing methods, such as skip connections.

- Unlike linear aggregation, our AFA module leverages extracted spatial and channel information to efficiently select the essential features and to increase the receptive field at the same time.

- Inspired by neural volume rendering [Volume rendering, Nerf: Representing scenes as neural radiance fields for view synthesis], we propose Scale-Space Rendering (SSR) as a novel attention computation mechanism to fuse multi-scale predictions.

- We treat those predictions as sampled data in scale-space and design a coarse-to-fine attention concept to render final predictions. (这个想法很有意思。把最终预测的获取看做是一个从尺度空间中采样不同尺度的预测来渲染最终预测的问题)

- Repeated use of attention layers may lead to numerical instability or vanishing gradients. We extend the above-mentioned attention mechanism to fuse the dense predictions from multi-scale inputs more effectively.

- Our solution resembles a volume rendering scheme applied to the scale space. This scheme provides a hierarchical, coarse-to-fine strategy to combine features, leveraging a scale-specific attention mechanism. We will also show that our approach generalizes the hierarchical multi-scale attention method [Hierarchical multi-scale attention for semantic segmentation].

Attentive Feature Aggregation (AFA)

这里设计了两种整合形式,一种适用于双输入,另一种适合用于多输入递进式集成。核心都是基于空间注意力和通道注意力。注意,这里的计算都是两两集成的形式,所以都是算出一个注意力后,使用sigmoid来构造相对权重。

对于双输入形式,空间注意力由较浅层特征计算,因为其包含着丰富的空间信息。而通道注意力由较深层特征计算,因为其包含着更复杂的通道特征。对于多输入形式(图中仅仅展示了三层,实际上可以引入更多层的输入),通道和空间注意力完全由当前层输入计算,并且如果有靠前计算的一级的化,该注意力会用来对当前和之前的输出作加权。另外集成的顺序原文中如此描述“a feature with higher priority will have gone through a higher number of aggregations”,我的理解是,应该就是从深到浅层的一个过程。

提出的集成模块可以用于许多结构中,例如DLA、UNet、HRNet和FCN中。Scale-Space Rendering (SSR)

这里提出的SSR是一个更加类似于模型集成的策略。

其通过计算针对不同尺度下预测的输出的相对权重来对多尺度推理进行集成。所以,这里涉及到两个问题:

- SSR如何学习?论文中并没有提到。但是按照上图中的说法,训练使用两个尺度的输入,说明这是可以训练SSR的。由于是个会预测参数的可学习的结构,对于每个输入会自动预测一个注意力参数。通过这些不同尺度输入下对应计算得到的参数从而获得最终针对多个尺度的加权比重。

- 不同大小的预测最后会整合到哪个尺度?这一点论文中没有提。但是按照上图使用基于原始输入的相对尺寸的表述来看,最终应该还是会集成到1.0倍原始输入尺度上(与hierarchical multi-scale attention中的设计形式应该是一致的)。

表达形式

为了表达对多尺度预测的集成的问题,作者首先将关注的重点放在单个像素上。并且假设模型为目标像素在k个不同的尺度上提供了预测。

对于第个尺度的预测可以表示为

。由此,在尺度空间中针对目标像素的特征表征可以定义为

。进一步,这里假设

表示尺度

%22%20aria-hidden%3D%22true%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fsvg%3E#card=math&code=i&id=wLYqp)比尺度

更加粗糙。

于是目标像素就可以想象成在尺度空间中移动的光线,从尺度朝向尺度

。

基于这样的想法,重新设计在提出的多特征融合机制中的原始的分层注意力,并且模拟volume-rendering equation,这里的volume由尺度空间隐式给出。

为此,除了位于尺度的特征表征

,假设模型还会针对目标像素预测一个标量

。在volume rendering的语境下,粒子将会穿过尺度

的概率,在给定一些非负标量函数

时,就可以表示为

。

于是可以将尺度注意力表达为粒子到达尺度

并停留在这里的概率(每一次都满足伯努利分布,非留即走,前面都走,就当前次留了下来):

表示针对各个尺度的目标像素预测的标量参数。

最终,按照volume rendering equation,针对目标像素多尺度预测融合得到的最终预测,由不同尺度的注意力参数来加权求和获得。这也反映了对于目标像素获得的最终特征,是在驱动下融合所有尺度的特征表达获得的。

综合上下文的分析,这里的设计中应该是最终是要将所有尺度集成到1上的。

提出的SSR可以看作是Hierarchical Multi-Scale Attention (HMA) [Hierarchical multi-scale attention for semantic segmentation, https://github.com/NVIDIA/semantic-segmentation]的一种一般化形式。通过设置%20%5Ctriangleq%20%5Clog(1%20%2B%20e%5E%7Byi%7D)%3C%2Ftitle%3E%0A%3Cdefs%20aria-hidden%3D%22true%22%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-3D5%22%20d%3D%22M409%20688Q413%20694%20421%20694H429H442Q448%20688%20448%20686Q448%20679%20418%20563Q411%20535%20404%20504T392%20458L388%20442Q388%20441%20397%20441T429%20435T477%20418Q521%20397%20550%20357T579%20260T548%20151T471%2065T374%2011T279%20-10H275L251%20-105Q245%20-128%20238%20-160Q230%20-192%20227%20-198T215%20-205H209Q189%20-205%20189%20-198Q189%20-193%20211%20-103L234%20-11Q234%20-10%20226%20-10Q221%20-10%20206%20-8T161%206T107%2036T62%2089T43%20171Q43%20231%2076%20284T157%20370T254%20422T342%20441Q347%20441%20348%20445L378%20567Q409%20686%20409%20688ZM122%20150Q122%20116%20134%2091T167%2053T203%2035T237%2027H244L337%20404Q333%20404%20326%20403T297%20395T255%20379T211%20350T170%20304Q152%20276%20137%20237Q122%20191%20122%20150ZM500%20282Q500%20320%20484%20347T444%20385T405%20400T381%20404H378L332%20217L284%2029Q284%2027%20285%2027Q293%2027%20317%2033T357%2047Q400%2066%20431%20100T475%20170T494%20234T500%20282Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-28%22%20d%3D%22M94%20250Q94%20319%20104%20381T127%20488T164%20576T202%20643T244%20695T277%20729T302%20750H315H319Q333%20750%20333%20741Q333%20738%20316%20720T275%20667T226%20581T184%20443T167%20250T184%2058T225%20-81T274%20-167T316%20-220T333%20-241Q333%20-250%20318%20-250H315H302L274%20-226Q180%20-141%20137%20-14T94%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-79%22%20d%3D%22M21%20287Q21%20301%2036%20335T84%20406T158%20442Q199%20442%20224%20419T250%20355Q248%20336%20247%20334Q247%20331%20231%20288T198%20191T182%20105Q182%2062%20196%2045T238%2027Q261%2027%20281%2038T312%2061T339%2094Q339%2095%20344%20114T358%20173T377%20247Q415%20397%20419%20404Q432%20431%20462%20431Q475%20431%20483%20424T494%20412T496%20403Q496%20390%20447%20193T391%20-23Q363%20-106%20294%20-155T156%20-205Q111%20-205%2077%20-183T43%20-117Q43%20-95%2050%20-80T69%20-58T89%20-48T106%20-45Q150%20-45%20150%20-87Q150%20-107%20138%20-122T115%20-142T102%20-147L99%20-148Q101%20-153%20118%20-160T152%20-167H160Q177%20-167%20186%20-165Q219%20-156%20247%20-127T290%20-65T313%20-9T321%2021L315%2017Q309%2013%20296%206T270%20-6Q250%20-11%20231%20-11Q185%20-11%20150%2011T104%2082Q103%2089%20103%20113Q103%20170%20138%20262T173%20379Q173%20380%20173%20381Q173%20390%20173%20393T169%20400T158%20404H154Q131%20404%20112%20385T82%20344T65%20302T57%20280Q55%20278%2041%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-69%22%20d%3D%22M184%20600Q184%20624%20203%20642T247%20661Q265%20661%20277%20649T290%20619Q290%20596%20270%20577T226%20557Q211%20557%20198%20567T184%20600ZM21%20287Q21%20295%2030%20318T54%20369T98%20420T158%20442Q197%20442%20223%20419T250%20357Q250%20340%20236%20301T196%20196T154%2083Q149%2061%20149%2051Q149%2026%20166%2026Q175%2026%20185%2029T208%2043T235%2078T260%20137Q263%20149%20265%20151T282%20153Q302%20153%20302%20143Q302%20135%20293%20112T268%2061T223%2011T161%20-11Q129%20-11%20102%2010T74%2074Q74%2091%2079%20106T122%20220Q160%20321%20166%20341T173%20380Q173%20404%20156%20404H154Q124%20404%2099%20371T61%20287Q60%20286%2059%20284T58%20281T56%20279T53%20278T49%20278T41%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-29%22%20d%3D%22M60%20749L64%20750Q69%20750%2074%20750H86L114%20726Q208%20641%20251%20514T294%20250Q294%20182%20284%20119T261%2012T224%20-76T186%20-143T145%20-194T113%20-227T90%20-246Q87%20-249%2086%20-250H74Q66%20-250%2063%20-250T58%20-247T55%20-238Q56%20-237%2066%20-225Q221%20-64%20221%20250T66%20725Q56%20737%2055%20738Q55%20746%2060%20749Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJAMS-225C%22%20d%3D%22M192%20482H190Q187%20483%20185%20484T181%20488T177%20493T175%20501Q175%20506%20178%20512Q184%20523%20278%20687T375%20853Q379%20857%20383%20857Q385%20857%20387%20858T390%20859Q397%20859%20403%20853Q405%20851%20499%20687T600%20512Q603%20506%20603%20501Q603%20488%20587%20482H192ZM548%20523L389%20798Q388%20798%20309%20661T230%20523T389%20522T548%20523ZM56%20347Q56%20360%2070%20367H708Q723%20359%20723%20347Q723%20336%20709%20328L390%20327H72Q56%20332%2056%20347ZM56%20153Q56%20168%2072%20173H709Q723%20163%20723%20153Q723%20140%20708%20133H70Q56%20140%2056%20153Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-6C%22%20d%3D%22M42%2046H56Q95%2046%20103%2060V68Q103%2077%20103%2091T103%20124T104%20167T104%20217T104%20272T104%20329Q104%20366%20104%20407T104%20482T104%20542T103%20586T103%20603Q100%20622%2089%20628T44%20637H26V660Q26%20683%2028%20683L38%20684Q48%20685%2067%20686T104%20688Q121%20689%20141%20690T171%20693T182%20694H185V379Q185%2062%20186%2060Q190%2052%20198%2049Q219%2046%20247%2046H263V0H255L232%201Q209%202%20183%202T145%203T107%203T57%201L34%200H26V46H42Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-6F%22%20d%3D%22M28%20214Q28%20309%2093%20378T250%20448Q340%20448%20405%20380T471%20215Q471%20120%20407%2055T250%20-10Q153%20-10%2091%2057T28%20214ZM250%2030Q372%2030%20372%20193V225V250Q372%20272%20371%20288T364%20326T348%20362T317%20390T268%20410Q263%20411%20252%20411Q222%20411%20195%20399Q152%20377%20139%20338T126%20246V226Q126%20130%20145%2091Q177%2030%20250%2030Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-67%22%20d%3D%22M329%20409Q373%20453%20429%20453Q459%20453%20472%20434T485%20396Q485%20382%20476%20371T449%20360Q416%20360%20412%20390Q410%20404%20415%20411Q415%20412%20416%20414V415Q388%20412%20363%20393Q355%20388%20355%20386Q355%20385%20359%20381T368%20369T379%20351T388%20325T392%20292Q392%20230%20343%20187T222%20143Q172%20143%20123%20171Q112%20153%20112%20133Q112%2098%20138%2081Q147%2075%20155%2075T227%2073Q311%2072%20335%2067Q396%2058%20431%2026Q470%20-13%20470%20-72Q470%20-139%20392%20-175Q332%20-206%20250%20-206Q167%20-206%20107%20-175Q29%20-140%2029%20-75Q29%20-39%2050%20-15T92%2018L103%2024Q67%2055%2067%20108Q67%20155%2096%20193Q52%20237%2052%20292Q52%20355%20102%20398T223%20442Q274%20442%20318%20416L329%20409ZM299%20343Q294%20371%20273%20387T221%20404Q192%20404%20171%20388T145%20343Q142%20326%20142%20292Q142%20248%20149%20227T179%20192Q196%20182%20222%20182Q244%20182%20260%20189T283%20207T294%20227T299%20242Q302%20258%20302%20292T299%20343ZM403%20-75Q403%20-50%20389%20-34T348%20-11T299%20-2T245%200H218Q151%200%20138%20-6Q118%20-15%20107%20-34T95%20-74Q95%20-84%20101%20-97T122%20-127T170%20-155T250%20-167Q319%20-167%20361%20-139T403%20-75Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-31%22%20d%3D%22M213%20578L200%20573Q186%20568%20160%20563T102%20556H83V602H102Q149%20604%20189%20617T245%20641T273%20663Q275%20666%20285%20666Q294%20666%20302%20660V361L303%2061Q310%2054%20315%2052T339%2048T401%2046H427V0H416Q395%203%20257%203Q121%203%20100%200H88V46H114Q136%2046%20152%2046T177%2047T193%2050T201%2052T207%2057T213%2061V578Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2B%22%20d%3D%22M56%20237T56%20250T70%20270H369V420L370%20570Q380%20583%20389%20583Q402%20583%20409%20568V270H707Q722%20262%20722%20250T707%20230H409V-68Q401%20-82%20391%20-82H389H387Q375%20-82%20369%20-68V230H70Q56%20237%2056%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-65%22%20d%3D%22M39%20168Q39%20225%2058%20272T107%20350T174%20402T244%20433T307%20442H310Q355%20442%20388%20420T421%20355Q421%20265%20310%20237Q261%20224%20176%20223Q139%20223%20138%20221Q138%20219%20132%20186T125%20128Q125%2081%20146%2054T209%2026T302%2045T394%20111Q403%20121%20406%20121Q410%20121%20419%20112T429%2098T420%2082T390%2055T344%2024T281%20-1T205%20-11Q126%20-11%2083%2042T39%20168ZM373%20353Q367%20405%20305%20405Q272%20405%20244%20391T199%20357T170%20316T154%20280T149%20261Q149%20260%20169%20260Q282%20260%20327%20284T373%20353Z%22%3E%3C%2Fpath%3E%0A%3C%2Fdefs%3E%0A%3Cg%20stroke%3D%22currentColor%22%20fill%3D%22currentColor%22%20stroke-width%3D%220%22%20transform%3D%22matrix(1%200%200%20-1%200%200)%22%20aria-hidden%3D%22true%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-3D5%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%22596%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(986%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22693%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%221820%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJAMS-225C%22%20x%3D%222488%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(3544%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-6C%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-6F%22%20x%3D%22278%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-67%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%224823%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%225213%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2B%22%20x%3D%225936%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(6936%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-65%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(466%2C412)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22604%22%20y%3D%22-304%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%228119%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fsvg%3E#card=math&code=%5Cphi%28y_i%29%20%5Ctriangleq%20%5Clog%281%20%2B%20e%5E%7By_i%7D%29&id=u7zLF),并固定

,就可以获得后者的形式了。此时有:

从这里的形式来看,这里有两处令人疑惑的地方:

- 形式不太对。原本的hierarchical multi-scale attention使用的是sigmoid来集成不同的尺度。这里与sigmoid并不一致。

- 按照这里的形式,并结合空间注意力(sigmoid)的级联关系,可以看出输出是在

的位置,也就是其它层的信息按照层序号递减的形式逐步集成起来。这倒是和下图的形式大致类似。

输入是被再次放缩后才送入模型的。而这里最终输出的尺寸是对应于1.0倍原始输入尺寸的。 所以,假设按照尺度编号从k到1集成特征,并在1层输出结果。 由于本文中构造的注意力是基于不选择当前层的概率(穿过当前层),则对应上图的形式,总的形式为:

可以看到,对于第一层的注意力权重就是直接sigmoid的输出结果。而对于第k层的输出,则是对各层sigmoid输出取补并类乘而获得。

的选择

的选择

实验中使用绝对值函数:。这受启发于更好的保留经过注意力机制的梯度流的分析,因为作者们发现现存的注意力机制可能会遭受梯度消失的问题。

前面整理的注意力系数的形式:

考虑第层系数

关于可学习参数

的导数:

当考虑两个尺度的时候,即时:

左上角计算的是第1层的注意力系数关于第1层的参数的导数,右上角则是第1层关于第2层的导数。可以看到,如果的时候,梯度会消失,不管

是多少。

所以为了避免梯度消失的问题,这里需要对进行仔细的设定。当选择绝对值函数的时候,这里的Jacobian矩阵将不会在

且

的情况出现消失的问题。

但是这里如果取了绝对值函数,求导数是+-1,这依然会有梯度消失的问题诶?

考虑HMA中的情况,按照作者给出的形式,此时有:%20%3D%20%5Cfrac%7B%5Cpartial%20%5Clog(1%2Be%5E%7By_i%7D)%7D%7B%5Cpartial%20y_i%7D%20%3D%20%5Cfrac%7Be%5E%7By_i%7D%7D%7B1%2Be%5E%7By_i%7D%7D%20%3D%201%20-%20%5Cfrac%7B1%7D%7B1%2Be%5E%7By_i%7D%7D%20%3D%201%20-%20e%5E%7B-%5Clog(1%2Be%5E%7By_i%7D)%7D%20%3D%201%20-%20a_i%2C%20%5C%5C%0Aa_2%20%3D%200.%3C%2Ftitle%3E%0A%3Cdefs%20aria-hidden%3D%22true%22%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-3D5%22%20d%3D%22M409%20688Q413%20694%20421%20694H429H442Q448%20688%20448%20686Q448%20679%20418%20563Q411%20535%20404%20504T392%20458L388%20442Q388%20441%20397%20441T429%20435T477%20418Q521%20397%20550%20357T579%20260T548%20151T471%2065T374%2011T279%20-10H275L251%20-105Q245%20-128%20238%20-160Q230%20-192%20227%20-198T215%20-205H209Q189%20-205%20189%20-198Q189%20-193%20211%20-103L234%20-11Q234%20-10%20226%20-10Q221%20-10%20206%20-8T161%206T107%2036T62%2089T43%20171Q43%20231%2076%20284T157%20370T254%20422T342%20441Q347%20441%20348%20445L378%20567Q409%20686%20409%20688ZM122%20150Q122%20116%20134%2091T167%2053T203%2035T237%2027H244L337%20404Q333%20404%20326%20403T297%20395T255%20379T211%20350T170%20304Q152%20276%20137%20237Q122%20191%20122%20150ZM500%20282Q500%20320%20484%20347T444%20385T405%20400T381%20404H378L332%20217L284%2029Q284%2027%20285%2027Q293%2027%20317%2033T357%2047Q400%2066%20431%20100T475%20170T494%20234T500%20282Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2032%22%20d%3D%22M79%2043Q73%2043%2052%2049T30%2061Q30%2068%2085%20293T146%20528Q161%20560%20198%20560Q218%20560%20240%20545T262%20501Q262%20496%20260%20486Q259%20479%20173%20263T84%2045T79%2043Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-28%22%20d%3D%22M94%20250Q94%20319%20104%20381T127%20488T164%20576T202%20643T244%20695T277%20729T302%20750H315H319Q333%20750%20333%20741Q333%20738%20316%20720T275%20667T226%20581T184%20443T167%20250T184%2058T225%20-81T274%20-167T316%20-220T333%20-241Q333%20-250%20318%20-250H315H302L274%20-226Q180%20-141%20137%20-14T94%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-79%22%20d%3D%22M21%20287Q21%20301%2036%20335T84%20406T158%20442Q199%20442%20224%20419T250%20355Q248%20336%20247%20334Q247%20331%20231%20288T198%20191T182%20105Q182%2062%20196%2045T238%2027Q261%2027%20281%2038T312%2061T339%2094Q339%2095%20344%20114T358%20173T377%20247Q415%20397%20419%20404Q432%20431%20462%20431Q475%20431%20483%20424T494%20412T496%20403Q496%20390%20447%20193T391%20-23Q363%20-106%20294%20-155T156%20-205Q111%20-205%2077%20-183T43%20-117Q43%20-95%2050%20-80T69%20-58T89%20-48T106%20-45Q150%20-45%20150%20-87Q150%20-107%20138%20-122T115%20-142T102%20-147L99%20-148Q101%20-153%20118%20-160T152%20-167H160Q177%20-167%20186%20-165Q219%20-156%20247%20-127T290%20-65T313%20-9T321%2021L315%2017Q309%2013%20296%206T270%20-6Q250%20-11%20231%20-11Q185%20-11%20150%2011T104%2082Q103%2089%20103%20113Q103%20170%20138%20262T173%20379Q173%20380%20173%20381Q173%20390%20173%20393T169%20400T158%20404H154Q131%20404%20112%20385T82%20344T65%20302T57%20280Q55%20278%2041%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-69%22%20d%3D%22M184%20600Q184%20624%20203%20642T247%20661Q265%20661%20277%20649T290%20619Q290%20596%20270%20577T226%20557Q211%20557%20198%20567T184%20600ZM21%20287Q21%20295%2030%20318T54%20369T98%20420T158%20442Q197%20442%20223%20419T250%20357Q250%20340%20236%20301T196%20196T154%2083Q149%2061%20149%2051Q149%2026%20166%2026Q175%2026%20185%2029T208%2043T235%2078T260%20137Q263%20149%20265%20151T282%20153Q302%20153%20302%20143Q302%20135%20293%20112T268%2061T223%2011T161%20-11Q129%20-11%20102%2010T74%2074Q74%2091%2079%20106T122%20220Q160%20321%20166%20341T173%20380Q173%20404%20156%20404H154Q124%20404%2099%20371T61%20287Q60%20286%2059%20284T58%20281T56%20279T53%20278T49%20278T41%20278H27Q21%20284%2021%20287Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-29%22%20d%3D%22M60%20749L64%20750Q69%20750%2074%20750H86L114%20726Q208%20641%20251%20514T294%20250Q294%20182%20284%20119T261%2012T224%20-76T186%20-143T145%20-194T113%20-227T90%20-246Q87%20-249%2086%20-250H74Q66%20-250%2063%20-250T58%20-247T55%20-238Q56%20-237%2066%20-225Q221%20-64%20221%20250T66%20725Q56%20737%2055%20738Q55%20746%2060%20749Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-3D%22%20d%3D%22M56%20347Q56%20360%2070%20367H707Q722%20359%20722%20347Q722%20336%20708%20328L390%20327H72Q56%20332%2056%20347ZM56%20153Q56%20168%2072%20173H708Q722%20163%20722%20153Q722%20140%20707%20133H70Q56%20140%2056%20153Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2202%22%20d%3D%22M202%20508Q179%20508%20169%20520T158%20547Q158%20557%20164%20577T185%20624T230%20675T301%20710L333%20715H345Q378%20715%20384%20714Q447%20703%20489%20661T549%20568T566%20457Q566%20362%20519%20240T402%2053Q321%20-22%20223%20-22Q123%20-22%2073%2056Q42%20102%2042%20148V159Q42%20276%20129%20370T322%20465Q383%20465%20414%20434T455%20367L458%20378Q478%20461%20478%20515Q478%20603%20437%20639T344%20676Q266%20676%20223%20612Q264%20606%20264%20572Q264%20547%20246%20528T202%20508ZM430%20306Q430%20372%20401%20400T333%20428Q270%20428%20222%20382Q197%20354%20183%20323T150%20221Q132%20149%20132%20116Q132%2021%20232%2021Q244%2021%20250%2022Q327%2035%20374%20112Q389%20137%20409%20196T430%20306Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-6C%22%20d%3D%22M42%2046H56Q95%2046%20103%2060V68Q103%2077%20103%2091T103%20124T104%20167T104%20217T104%20272T104%20329Q104%20366%20104%20407T104%20482T104%20542T103%20586T103%20603Q100%20622%2089%20628T44%20637H26V660Q26%20683%2028%20683L38%20684Q48%20685%2067%20686T104%20688Q121%20689%20141%20690T171%20693T182%20694H185V379Q185%2062%20186%2060Q190%2052%20198%2049Q219%2046%20247%2046H263V0H255L232%201Q209%202%20183%202T145%203T107%203T57%201L34%200H26V46H42Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-6F%22%20d%3D%22M28%20214Q28%20309%2093%20378T250%20448Q340%20448%20405%20380T471%20215Q471%20120%20407%2055T250%20-10Q153%20-10%2091%2057T28%20214ZM250%2030Q372%2030%20372%20193V225V250Q372%20272%20371%20288T364%20326T348%20362T317%20390T268%20410Q263%20411%20252%20411Q222%20411%20195%20399Q152%20377%20139%20338T126%20246V226Q126%20130%20145%2091Q177%2030%20250%2030Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-67%22%20d%3D%22M329%20409Q373%20453%20429%20453Q459%20453%20472%20434T485%20396Q485%20382%20476%20371T449%20360Q416%20360%20412%20390Q410%20404%20415%20411Q415%20412%20416%20414V415Q388%20412%20363%20393Q355%20388%20355%20386Q355%20385%20359%20381T368%20369T379%20351T388%20325T392%20292Q392%20230%20343%20187T222%20143Q172%20143%20123%20171Q112%20153%20112%20133Q112%2098%20138%2081Q147%2075%20155%2075T227%2073Q311%2072%20335%2067Q396%2058%20431%2026Q470%20-13%20470%20-72Q470%20-139%20392%20-175Q332%20-206%20250%20-206Q167%20-206%20107%20-175Q29%20-140%2029%20-75Q29%20-39%2050%20-15T92%2018L103%2024Q67%2055%2067%20108Q67%20155%2096%20193Q52%20237%2052%20292Q52%20355%20102%20398T223%20442Q274%20442%20318%20416L329%20409ZM299%20343Q294%20371%20273%20387T221%20404Q192%20404%20171%20388T145%20343Q142%20326%20142%20292Q142%20248%20149%20227T179%20192Q196%20182%20222%20182Q244%20182%20260%20189T283%20207T294%20227T299%20242Q302%20258%20302%20292T299%20343ZM403%20-75Q403%20-50%20389%20-34T348%20-11T299%20-2T245%200H218Q151%200%20138%20-6Q118%20-15%20107%20-34T95%20-74Q95%20-84%20101%20-97T122%20-127T170%20-155T250%20-167Q319%20-167%20361%20-139T403%20-75Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-31%22%20d%3D%22M213%20578L200%20573Q186%20568%20160%20563T102%20556H83V602H102Q149%20604%20189%20617T245%20641T273%20663Q275%20666%20285%20666Q294%20666%20302%20660V361L303%2061Q310%2054%20315%2052T339%2048T401%2046H427V0H416Q395%203%20257%203Q121%203%20100%200H88V46H114Q136%2046%20152%2046T177%2047T193%2050T201%2052T207%2057T213%2061V578Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2B%22%20d%3D%22M56%20237T56%20250T70%20270H369V420L370%20570Q380%20583%20389%20583Q402%20583%20409%20568V270H707Q722%20262%20722%20250T707%20230H409V-68Q401%20-82%20391%20-82H389H387Q375%20-82%20369%20-68V230H70Q56%20237%2056%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-65%22%20d%3D%22M39%20168Q39%20225%2058%20272T107%20350T174%20402T244%20433T307%20442H310Q355%20442%20388%20420T421%20355Q421%20265%20310%20237Q261%20224%20176%20223Q139%20223%20138%20221Q138%20219%20132%20186T125%20128Q125%2081%20146%2054T209%2026T302%2045T394%20111Q403%20121%20406%20121Q410%20121%20419%20112T429%2098T420%2082T390%2055T344%2024T281%20-1T205%20-11Q126%20-11%2083%2042T39%20168ZM373%20353Q367%20405%20305%20405Q272%20405%20244%20391T199%20357T170%20316T154%20280T149%20261Q149%20260%20169%20260Q282%20260%20327%20284T373%20353Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2212%22%20d%3D%22M84%20237T84%20250T98%20270H679Q694%20262%20694%20250T679%20230H98Q84%20237%2084%20250Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMATHI-61%22%20d%3D%22M33%20157Q33%20258%20109%20349T280%20441Q331%20441%20370%20392Q386%20422%20416%20422Q429%20422%20439%20414T449%20394Q449%20381%20412%20234T374%2068Q374%2043%20381%2035T402%2026Q411%2027%20422%2035Q443%2055%20463%20131Q469%20151%20473%20152Q475%20153%20483%20153H487Q506%20153%20506%20144Q506%20138%20501%20117T481%2063T449%2013Q436%200%20417%20-8Q409%20-10%20393%20-10Q359%20-10%20336%205T306%2036L300%2051Q299%2052%20296%2050Q294%2048%20292%2046Q233%20-10%20172%20-10Q117%20-10%2075%2030T33%20157ZM351%20328Q351%20334%20346%20350T323%20385T277%20405Q242%20405%20210%20374T160%20293Q131%20214%20119%20129Q119%20126%20119%20118T118%20106Q118%2061%20136%2044T179%2026Q217%2026%20254%2059T298%20110Q300%20114%20325%20217T351%20328Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2C%22%20d%3D%22M78%2035T78%2060T94%20103T137%20121Q165%20121%20187%2096T210%208Q210%20-27%20201%20-60T180%20-117T154%20-158T130%20-185T117%20-194Q113%20-194%20104%20-185T95%20-172Q95%20-168%20106%20-156T131%20-126T157%20-76T173%20-3V9L172%208Q170%207%20167%206T161%203T152%201T140%200Q113%200%2096%2017Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-32%22%20d%3D%22M109%20429Q82%20429%2066%20447T50%20491Q50%20562%20103%20614T235%20666Q326%20666%20387%20610T449%20465Q449%20422%20429%20383T381%20315T301%20241Q265%20210%20201%20149L142%2093L218%2092Q375%2092%20385%2097Q392%2099%20409%20186V189H449V186Q448%20183%20436%2095T421%203V0H50V19V31Q50%2038%2056%2046T86%2081Q115%20113%20136%20137Q145%20147%20170%20174T204%20211T233%20244T261%20278T284%20308T305%20340T320%20369T333%20401T340%20431T343%20464Q343%20527%20309%20573T212%20619Q179%20619%20154%20602T119%20569T109%20550Q109%20549%20114%20549Q132%20549%20151%20535T170%20489Q170%20464%20154%20447T109%20429Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-30%22%20d%3D%22M96%20585Q152%20666%20249%20666Q297%20666%20345%20640T423%20548Q460%20465%20460%20320Q460%20165%20417%2083Q397%2041%20362%2016T301%20-15T250%20-22Q224%20-22%20198%20-16T137%2016T82%2083Q39%20165%2039%20320Q39%20494%2096%20585ZM321%20597Q291%20629%20250%20629Q208%20629%20178%20597Q153%20571%20145%20525T137%20333Q137%20175%20145%20125T181%2046Q209%2016%20250%2016Q290%2016%20318%2046Q347%2076%20354%20130T362%20333Q362%20478%20354%20524T321%20597Z%22%3E%3C%2Fpath%3E%0A%3Cpath%20stroke-width%3D%221%22%20id%3D%22E1-MJMAIN-2E%22%20d%3D%22M78%2060Q78%2084%2095%20102T138%20120Q162%20120%20180%20104T199%2061Q199%2036%20182%2018T139%200T96%2017T78%2060Z%22%3E%3C%2Fpath%3E%0A%3C%2Fdefs%3E%0A%3Cg%20stroke%3D%22currentColor%22%20fill%3D%22currentColor%22%20stroke-width%3D%220%22%20transform%3D%22matrix(1%200%200%20-1%200%200)%22%20aria-hidden%3D%22true%22%3E%0A%3Cg%20transform%3D%22translate(5192%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-3D5%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-2032%22%20x%3D%22843%22%20y%3D%22583%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%22891%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(1280%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22693%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%222115%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%222782%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(3561%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(397%2C0)%22%3E%0A%3Crect%20stroke%3D%22none%22%20width%3D%225818%22%20height%3D%2260%22%20x%3D%220%22%20y%3D%22220%22%3E%3C%2Frect%3E%0A%3Cg%20transform%3D%22translate(60%2C770)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2202%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(734%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-6C%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-6F%22%20x%3D%22278%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-67%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%222013%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%222403%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2B%22%20x%3D%223125%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(4126%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-65%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(466%2C362)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22604%22%20y%3D%22-304%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%225308%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(2208%2C-736)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2202%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(567%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22693%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%2210175%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(10953%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(397%2C0)%22%3E%0A%3Crect%20stroke%3D%22none%22%20width%3D%223025%22%20height%3D%2260%22%20x%3D%220%22%20y%3D%22220%22%3E%3C%2Frect%3E%0A%3Cg%20transform%3D%22translate(921%2C676)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-65%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(466%2C362)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22604%22%20y%3D%22-304%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(60%2C-687)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2B%22%20x%3D%22722%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(1723%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-65%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(466%2C288)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22604%22%20y%3D%22-304%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%2214775%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%2215831%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%2216554%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(17332%2C0)%22%3E%0A%3Cg%20transform%3D%22translate(342%2C0)%22%3E%0A%3Crect%20stroke%3D%22none%22%20width%3D%223025%22%20height%3D%2260%22%20x%3D%220%22%20y%3D%22220%22%3E%3C%2Frect%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%221262%22%20y%3D%22676%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(60%2C-687)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2B%22%20x%3D%22722%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(1723%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-65%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(466%2C288)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22604%22%20y%3D%22-304%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%2221098%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%2222154%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%2222877%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(23878%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-65%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(466%2C412)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(717%2C0)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-6C%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-6F%22%20x%3D%22278%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-67%22%20x%3D%22779%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-28%22%20x%3D%222293%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%222683%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-2B%22%20x%3D%223183%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(2801%2C0)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-65%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(329%2C265)%22%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-79%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.574)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22490%22%20y%3D%22-318%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-29%22%20x%3D%225288%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%2228737%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-31%22%20x%3D%2229793%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2212%22%20x%3D%2230516%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(31517%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-61%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMATHI-69%22%20x%3D%22748%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2C%22%20x%3D%2232391%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3Cg%20transform%3D%22translate(19979%2C-2039)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMATHI-61%22%20x%3D%220%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20transform%3D%22scale(0.707)%22%20xlink%3Ahref%3D%22%23E1-MJMAIN-32%22%20x%3D%22748%22%20y%3D%22-213%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-3D%22%20x%3D%221261%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3Cg%20transform%3D%22translate(2317%2C0)%22%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-30%22%3E%3C%2Fuse%3E%0A%20%3Cuse%20xlink%3Ahref%3D%22%23E1-MJMAIN-2E%22%20x%3D%22500%22%20y%3D%220%22%3E%3C%2Fuse%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fg%3E%0A%3C%2Fsvg%3E#card=math&code=%5Cphi%20%27%28y_i%29%20%3D%20%5Cfrac%7B%5Cpartial%20%5Clog%281%2Be%5E%7By_i%7D%29%7D%7B%5Cpartial%20y_i%7D%20%3D%20%5Cfrac%7Be%5E%7By_i%7D%7D%7B1%2Be%5E%7By_i%7D%7D%20%3D%201%20-%20%5Cfrac%7B1%7D%7B1%2Be%5E%7By_i%7D%7D%20%3D%201%20-%20e%5E%7B-%5Clog%281%2Be%5E%7By_i%7D%29%7D%20%3D%201%20-%20a_i%2C%20%5C%5C%0Aa_2%20%3D%200.&id=Ssugt)分支2不参与注意力计算。

当时会出现梯度消失。

而按照我前面的形式,则有:

同样也会出现消失的问题。

链接

- 论文:https://arxiv.org/abs/2111.00770

- 代码:http://vis.xyz/pub/dla-afa

- 本文的思想来源于NeRF,可以看看NeRF的介绍再来看着SSR的设计。

- 关于体绘制的一些资料:

- 非常丰富全面的一份中文CG学习材料:GPU 编程与 CG 语言之阳春白雪下里巴人(GPU Programming And Cg Language Primer)

- 21年出现在知网上的一份小综述:基于神经辐射场的视点合成算法综述