说在开头

这篇文章的代码用了一些很有用的trick,其中我觉得最重要的就是多尺度训练。这确实是一个在一定程度上可以缓解多尺度问题的方法(之后再从知乎上扒一扒,记得有个问题问的就是类似的内容)。

在我自己的一份工作里,将其训练策略迁移过来,实现了非常大的性能提升。一些实验结果可见:

主要工作

本文主要是在解决这样的两个问题:

- reduce the impact of inconsistency between features of different levels

- assign larger weights to those truly important pixels

分别提出了不同的组件来处理:

- CFM&CFD:

- First, to mitigate the discrepancy between features, we design cross feature module (CFM), which fuses features of different levels by element-wise multiplication. Different from addition and concatenation, CFM takes a selective fusion strategy, where redundant information will be suppressed to avoid the contamination between features and important features will complement each other. Compared with traditional fusion methods, CFM is able to remove background noises and sharpen boundaries.

- Second, due to downsampling, high level features may suffer from information loss and distortion, which can not be solved by CFM. Therefore, we develop the cascaded feedback decoder (CFD) to refine these features iteratively. CFD contains multiple sub-decoders, each of which contains both bottom-up and top-down processes.

- For bottom-up process, multi-level features are aggregated by CFM gradually.

- For top-down process, aggregated features are feedback into previous features to refine them.

PPA:

- We propose the pixel position aware loss (PPA) to improve the commonly used binary cross entropy loss which treats all pixels equally. In fact, pixels located at boundaries or elongated areas are more difficult and discriminating. Paying more attention to these hard pixels can further enhance model generalization. PPA loss assigns different weights to different pixels, which extends binary cross entropy. The weight of each pixel is determined by its surrounding pixels. Hard pixels will get larger weights and easy pixels will get smaller ones.

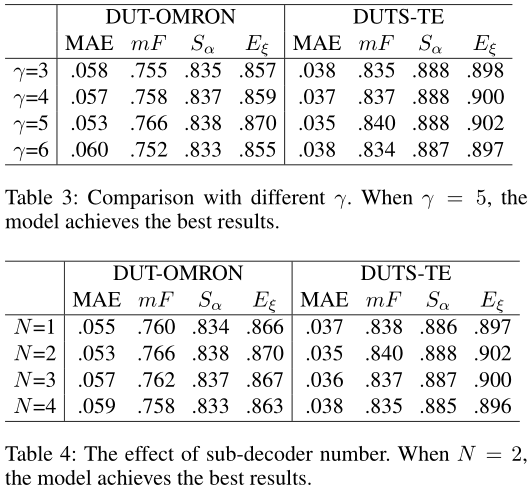

主要结构

从图上看,结构很直观。通过这里的CFM结构,作者想要实现:By multiple feature crossings, fl and fh will gradually absorb useful information from each other to complement themselves, i.e., noises of fl will be

suppressed and boundaries of fh will be sharpened.

这里也使用了多次级联的双向解码器的策略:

- We propose the pixel position aware loss (PPA) to improve the commonly used binary cross entropy loss which treats all pixels equally. In fact, pixels located at boundaries or elongated areas are more difficult and discriminating. Paying more attention to these hard pixels can further enhance model generalization. PPA loss assigns different weights to different pixels, which extends binary cross entropy. The weight of each pixel is determined by its surrounding pixels. Hard pixels will get larger weights and easy pixels will get smaller ones.

关于级联:

Cascaded feedback decoder (CFD) is built upon CFM which refines the multi-level features and generate saliency maps iteratively.

关于双向:

In fact, features of different levels may have missing or redundant parts because of downsamplings and noises. Even with CFM, these parts are still difficult to identify and restore, which may hurt the final performance. Considering the output saliency map is relatively complete and approximate to ground truth, we propose to propagate the features of the last convolution layer back to features of previous layers to correct and refine them.

损失函数

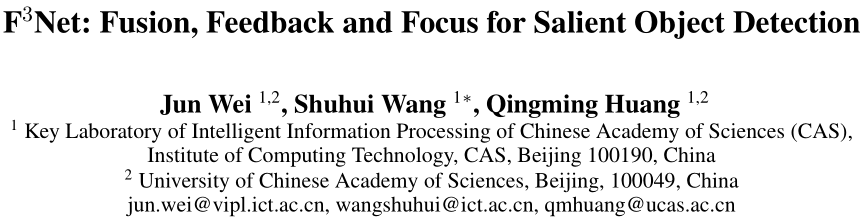

这里指出了BCE的三个缺点:

- 像素级损失:First, it calculates the loss for each pixel independently and ignores the global structure of the image.

- 易受大的区域的引导:Second, in pictures where the background is dominant, loss of foreground pixels will be diluted.

- 平等对待每个像素:Third, it treats all pixels equally. In fact, pixels located on cluttered or elongated areas (e.g., pole and horn) are prone to wrong predictions and deserve more attention and pixels located areas, like sky and grass, deserveless attention.

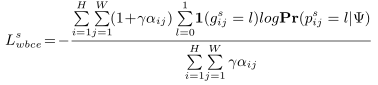

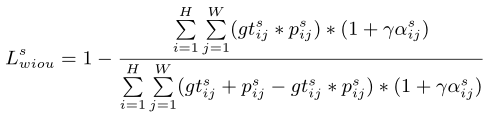

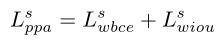

最终使用位置重加权的方式,结合了像素级损失BEL和区域级损失IOU来进行监督:

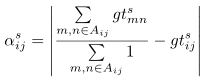

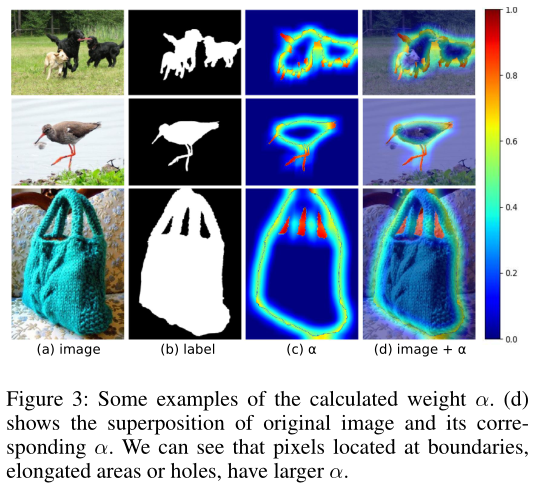

这里的其他不必细谈,主要是这个权重参数alpha。它使用了当前位置周围的窗口内的像素的真值,来评估当前像素是否处于较难的位置,可视化结果如下:

可以看到,对于较难的区域,确实可以实现不错的关注。整体损失如下:

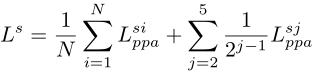

这里也使用了深监督策略,哥哥监督位置如前面的网络结构图所示。这里的Lsi表示中间各个级联解码器的预测损失,而后面的Lsj表示最终一个解码器里的各个层级的预测损失。

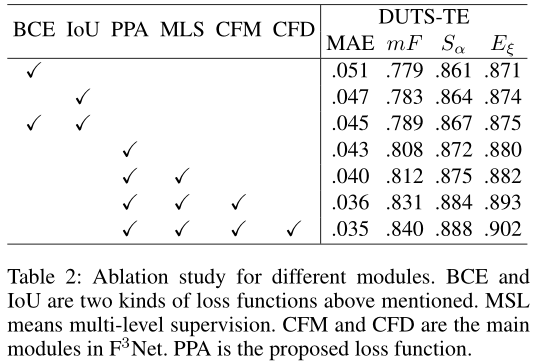

实验细节

- DUTS-TR is used to train F3Net and other above mentioned datasets are used to evaluate F3Net.

- For data augmentation, we use horizontal flip, random crop and multi-scale input images.

- ResNet-50 (He et al. 2016), pre-trained on ImageNet, is used as the backbone network. Maximum learning rate is set to 0.005 for ResNet-50 backbone and 0.05 for other parts.

- Warm-up and linear decay strategies are used to adjust the learning rate.

- The whole network is trained end-to-end, using stochastic gradient descent (SGD). Momentum and weight decay are set to 0.9 and 0.0005, respectively.

- Batchsize is set to 32 and maximum epoch is set to 32.

- We use Pytorch 1.3 to implement our model. An RTX 2080Ti GPU is used for acceleration.

- During testing, we resized each image to 352 x 352 and then feed it to F3Net to predict saliency maps without any post-processing.