Pascal VOC

    wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtrainval_06-Nov-2007.tar
    wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCtest_06-Nov-2007.tar
    wget http://host.robots.ox.ac.uk/pascal/VOC/voc2007/VOCdevkit_08-Jun-2007.tar
    tar xvf VOCtrainval_06-Nov-2007.tar
    tar xvf VOCtest_06-Nov-2007.tar
    tar xvf VOCdevkit_08-Jun-2007.tar

Directory structure

The VOC dataset is commonly used in two releases, VOC2007 and VOC2012, both covering 20 object classes:

    VOC_CLASSES = (  # always index 0
        'aeroplane', 'bicycle', 'bird', 'boat',
        'bottle', 'bus', 'car', 'cat', 'chair',
        'cow', 'diningtable', 'dog', 'horse',
        'motorbike', 'person', 'pottedplant',
        'sheep', 'sofa', 'train', 'tvmonitor')
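Detection code usually works with integer labels rather than class names. A minimal sketch of a name/index lookup, assuming labels follow the tuple order and start at 0; some frameworks instead reserve index 0 for a background class, so treat the numbering here as an assumption. `CLASS_TO_INDEX` and `INDEX_TO_CLASS` are hypothetical names:

```python
# The 20 VOC class names, in the order listed above.
VOC_CLASSES = (
    'aeroplane', 'bicycle', 'bird', 'boat',
    'bottle', 'bus', 'car', 'cat', 'chair',
    'cow', 'diningtable', 'dog', 'horse',
    'motorbike', 'person', 'pottedplant',
    'sheep', 'sofa', 'train', 'tvmonitor',
)

# Assumption: labels follow tuple order and start at 0 (no background class).
CLASS_TO_INDEX = {name: i for i, name in enumerate(VOC_CLASSES)}
INDEX_TO_CLASS = {i: name for name, i in CLASS_TO_INDEX.items()}

print(len(VOC_CLASSES))        # 20
print(CLASS_TO_INDEX['dog'])   # 11
print(INDEX_TO_CLASS[14])      # person
```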

Taking VOC2007 as an example, the overall file layout is as follows:

    VOC2007/
    ├── Annotations
    │   ├── 000001.xml
    │   ├── 000002.xml
    │   ├── 000003.xml
    │   └── ...
    │
    ├── ImageSets
    │   ├── Layout
    │   │   ├── test.txt
    │   │   ├── train.txt
    │   │   ├── trainval.txt
    │   │   └── val.txt
    │   ├── Main
    │   │   ├── test.txt
    │   │   ├── train.txt
    │   │   └── val.txt
    │   └── Segmentation
    │       ├── test.txt
    │       ├── train.txt
    │       ├── trainval.txt
    │       └── val.txt
    │
    ├── JPEGImages
    │   ├── 000001.jpg
    │   ├── 000002.jpg
    │   ├── 000003.jpg
    │   └── ...
    │
    ├── SegmentationClass
    └── SegmentationObject

Annotation format

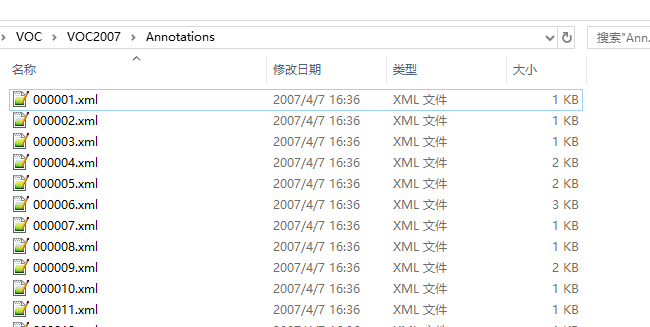

- Annotations: this folder holds the XML files generated by the annotation process.

Taking 000001.xml as an example:

    <annotation>
        <folder>VOC2007</folder>
        <filename>000001.jpg</filename>
        <source>
            <database>The VOC2007 Database</database>
            <annotation>PASCAL VOC2007</annotation>
            <image>flickr</image>
            <flickrid>341012865</flickrid>
        </source>
        <owner>
            <flickrid>Fried Camels</flickrid>
            <name>Jinky the Fruit Bat</name>
        </owner>
        <size>
            <width>353</width>
            <height>500</height>
            <depth>3</depth>
        </size>
        <segmented>0</segmented>
        <object>
            <name>dog</name>
            <pose>Left</pose>
            <truncated>1</truncated>
            <difficult>0</difficult>
            <bndbox>
                <xmin>48</xmin>
                <ymin>240</ymin>
                <xmax>195</xmax>
                <ymax>371</ymax>
            </bndbox>
        </object>
        <object>
            <name>person</name>
            <pose>Left</pose>
            <truncated>1</truncated>
            <difficult>0</difficult>
            <bndbox>
                <xmin>8</xmin>
                <ymin>12</ymin>
                <xmax>352</xmax>
                <ymax>498</ymax>
            </bndbox>
        </object>
    </annotation>
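An annotation like the one above can be read with Python's standard `xml.etree.ElementTree` module. The following is a minimal sketch rather than a reference loader: `parse_voc_annotation` and `SAMPLE_XML` are hypothetical names, and the sample is a trimmed copy of 000001.xml that keeps only the fields the function reads.

```python
import xml.etree.ElementTree as ET

# A trimmed copy of the 000001.xml annotation shown above.
SAMPLE_XML = """
<annotation>
  <filename>000001.jpg</filename>
  <size><width>353</width><height>500</height><depth>3</depth></size>
  <object>
    <name>dog</name>
    <difficult>0</difficult>
    <bndbox><xmin>48</xmin><ymin>240</ymin><xmax>195</xmax><ymax>371</ymax></bndbox>
  </object>
  <object>
    <name>person</name>
    <difficult>0</difficult>
    <bndbox><xmin>8</xmin><ymin>12</ymin><xmax>352</xmax><ymax>498</ymax></bndbox>
  </object>
</annotation>
"""


def parse_voc_annotation(xml_text):
    """Return the file name, image size and object boxes from one VOC XML string."""
    root = ET.fromstring(xml_text.strip())
    size = root.find('size')
    objects = []
    for obj in root.findall('object'):
        box = obj.find('bndbox')
        objects.append({
            'name': obj.find('name').text,
            'difficult': int(obj.find('difficult').text),
            # Boxes are stored as corner coordinates: xmin, ymin, xmax, ymax.
            'bbox': [int(box.find(tag).text)
                     for tag in ('xmin', 'ymin', 'xmax', 'ymax')],
        })
    return {
        'filename': root.find('filename').text,
        'width': int(size.find('width').text),
        'height': int(size.find('height').text),
        'objects': objects,
    }


record = parse_voc_annotation(SAMPLE_XML)
print(record['filename'], record['width'], record['height'])
for obj in record['objects']:
    print(obj['name'], obj['bbox'])
```

To read a file on disk instead of a string, `ET.parse(path).getroot()` can replace the `ET.fromstring` call.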

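- JPEGImages: this folder holds the original image files.

(Note: the Annotations and JPEGImages folders contain the same number of files, and the file names correspond one to one; only the extensions differ.)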

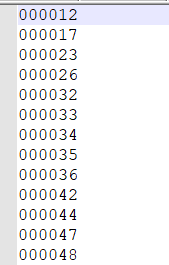

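- ImageSets: this folder holds txt files, including the per-class image lists. The files that matter most are train.txt, test.txt and val.txt under ImageSets/Main, which split the data into training, test and validation sets. Each file lists image file names without the extension, as you can see by opening one.

Take train.txt as an example.

- SegmentationClass and SegmentationObject: segmentation-related folders, not used for object detection.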

The VOC2007 dataset contains 9,963 images in total: 2,501 for training, 4,952 for testing and 2,510 for validation.
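Because the split files list bare image names and the Annotations and JPEGImages folders share those names, one split file is enough to locate every image and its annotation. A minimal sketch, assuming the directory layout described above; `load_split` is a hypothetical helper, and the demo writes a throwaway train.txt with placeholder ids so it runs without downloading the dataset:

```python
import os
import tempfile


def load_split(voc_root, split):
    """Read ImageSets/Main/<split>.txt and pair each id with its image and XML path."""
    list_path = os.path.join(voc_root, 'ImageSets', 'Main', split + '.txt')
    with open(list_path) as f:
        image_ids = [line.strip() for line in f if line.strip()]
    return [
        (
            image_id,
            os.path.join(voc_root, 'JPEGImages', image_id + '.jpg'),
            os.path.join(voc_root, 'Annotations', image_id + '.xml'),
        )
        for image_id in image_ids
    ]


# Demo on a temporary directory with placeholder ids (not the real split).
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, 'ImageSets', 'Main'))
    with open(os.path.join(root, 'ImageSets', 'Main', 'train.txt'), 'w') as f:
        f.write('000001\n000002\n')
    samples = load_split(root, 'train')

print(len(samples))
print(samples[0][0])
```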

COCO

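About the dataset

- Dataset name: COCO (Common Objects in Context), a large-scale image dataset

- Publisher: Microsoft

- Homepage: http://cocodataset.org/#

- Download: https://hyper.ai/datasets/4909

- Overview: COCO is a large-scale image dataset designed for object detection, segmentation, person keypoint detection, stuff segmentation and caption generation in computer vision. It targets scene understanding: the images are drawn mainly from complex everyday scenes, and the objects in them are localized with precise segmentation masks.

COCO has the following features: object segmentation, recognition in context, superpixel stuff segmentation, 330K images (more than 200K of them labeled), 1.5 million object instances, 80 object categories, 91 stuff categories, and 250,000 people with keypoints.

Microsoft released the COCO dataset in 2014, and it has since become a standard benchmark for image captioning.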

Directory structure

    COCO/
    ├── annotations
    │   ├── captions_train2014.json
    │   ├── captions_val2014.json
    │   ├── image_info_test2014.json
    │   ├── instances_minival2014.json
    │   ├── instances_train2014.json
    │   ├── instances_val2014.json
    │   ├── instances_valminusminival2014.json
    │   ├── person_keypoints_minival2014.json
    │   ├── person_keypoints_train2014.json
    │   ├── person_keypoints_val2014.json
    │   └── person_keypoints_valminusminival2014.json
    │
    ├── train2014
    │   ├── COCO_train2014_000000000009.jpg
    │   ├── COCO_train2014_000000000025.jpg
    │   ├── COCO_train2014_000000000030.jpg
    │   ├── COCO_train2014_000000000034.jpg
    │   ├── COCO_train2014_000000000036.jpg
    │   ├── COCO_train2014_000000000049.jpg
    │   ├── COCO_train2014_000000000061.jpg
    │   └── ...
    │
    └── val2014
        ├── COCO_val2014_000000000042.jpg
        ├── COCO_val2014_000000000073.jpg
        ├── COCO_val2014_000000000074.jpg
        ├── COCO_val2014_000000000133.jpg
        ├── COCO_val2014_000000000136.jpg
        ├── COCO_val2014_000000000139.jpg
        └── ...
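The image file names in the listing above appear to follow the pattern `COCO_<split>_<12-digit zero-padded id>.jpg`. A minimal sketch of converting between an integer image id and such a name; both helper names are hypothetical, and the pattern is inferred from the listing rather than taken from an official specification:

```python
def coco2014_file_name(split, image_id):
    """Build a COCO 2014 style file name; split is e.g. 'train2014' or 'val2014'."""
    return 'COCO_{}_{:012d}.jpg'.format(split, image_id)


def coco2014_image_id(file_name):
    """Recover the integer image id from a COCO 2014 style file name."""
    stem = file_name.rsplit('.', 1)[0]   # drop the extension
    return int(stem.rsplit('_', 1)[1])   # keep the digits after the last underscore


print(coco2014_file_name('train2014', 9))
print(coco2014_image_id('COCO_val2014_000000000042.jpg'))
```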

Annotation format

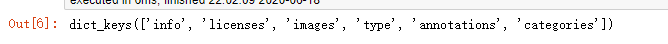

Taking the instances_minival2014.json file as an example:

Main fields

info

    "info": {
        "description": "This is stable 1.0 version of the 2014 MS COCO dataset.",
        "url": "http:\/\/mscoco.org",
        "version": "1.0",
        "year": 2014,
        "contributor": "Microsoft COCO group"
    }

licenses

    "licenses": [
        {
            "url": str,   # license URL
            "id": int,    # license id
            "name": str,  # license name
        },
        ....
        {
            "url": "http:\/\/creativecommons.org\/licenses\/by-nc-sa\/2.0\/",
            "id": 1,
            "name": "Attribution-NonCommercial-ShareAlike License"
        }
    ]

images

    "images": [
        {
            "license": int,             # id of the license that applies to the image
            "url": str,                 # URL of the COCO image
            "file_name": str,           # file name
            "height": int,              # image height
            "width": int,               # image width
            "date_captured": datetime,  # date the image was captured
            "id": int,                  # image id, may start from 0
        },
        {
            "license": 4,
            "url": "http:\/\/farm7.staticflickr.com\/6116\/6255196340_da26cf2c9e_z.jpg",
            "file_name": "COCO_val2014_000000397133.jpg",
            "height": 427,
            "width": 640,
            "date_captured": "2013-11-14 17:02:52",
            "id": 397133
        },
        ...
    ]

type

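annotations

    "annotations": [
        {
            "segmentation": RLE or [polygon],  # segmentation mask data
            "area": float,                     # area of the object region, in pixels
            "iscrowd": 0 or 1,                 # 1 if the annotation marks a crowd of objects (mask stored as RLE), 0 for a single object (polygon); defaults to 0
            "image_id": int,                   # id of the image the annotation belongs to
            "bbox": [x, y, width, height],     # bounding box: top-left corner (x, y) plus width and height
            "category_id": int,                # category id
            "id": int,                         # annotation id
        },
        {
            "segmentation": [[510.66,423.01,......510.03,423.01,510.45,423.01]],
            "area": 702.10575,
            "iscrowd": 0,
            "image_id": 289343,
            "bbox": [473.07,395.93,38.65,28.67],
            "category_id": 18,
            "id": 1768
        },
        ....
    ]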

categories

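Information about each category:

    "categories": [
        {
            "supercategory": str,  # the broader group the category belongs to; e.g. pugs and spitzes both fall under the canine group
            "id": int,             # category id
            "name": str,           # category name
        },
        .....
        {
            "supercategory": "person",
            "id": 1,
            "name": "person"
        }
    ]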

Pascal VOC to COCO
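The core of the conversion is the box format: VOC stores corner coordinates (xmin, ymin, xmax, ymax), while COCO stores [x, y, width, height]. A minimal sketch of both directions; the helper names are hypothetical, and the `inclusive` flag is an assumption that mirrors the +1 pixel convention used by the script below:

```python
def voc_to_coco_bbox(xmin, ymin, xmax, ymax, inclusive=True):
    """Convert VOC corner coordinates to a COCO [x, y, width, height] box.

    With inclusive=True the max corner counts as part of the box,
    so one pixel is added to the width and the height.
    """
    extra = 1 if inclusive else 0
    return [xmin, ymin, xmax - xmin + extra, ymax - ymin + extra]


def coco_to_voc_bbox(bbox, inclusive=True):
    """Convert a COCO [x, y, width, height] box back to VOC corners."""
    x, y, w, h = bbox
    extra = 1 if inclusive else 0
    return [x, y, x + w - extra, y + h - extra]


# The dog box from 000001.xml: xmin=48, ymin=240, xmax=195, ymax=371.
dog = voc_to_coco_bbox(48, 240, 195, 371)
print(dog)                    # [48, 240, 148, 132]
print(coco_to_voc_bbox(dog))  # [48, 240, 195, 371]
```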

    # pip install mmcv
    # Note: mmcv.dump is part of the mmcv 1.x API; mmcv 2.x moved file I/O to mmengine (mmengine.dump).
    import os.path as osp
    import xml.etree.ElementTree as ET
    from glob import glob

    import mmcv
    from PIL import Image
    from tqdm import tqdm


    # Edit the class list to match your own data.
    def underwater_classes():
        return ['holothurian', 'echinus', 'scallop', 'starfish']


    # Map each class name to a category id, starting from 1.
    label_ids = {name: i + 1 for i, name in enumerate(underwater_classes())}


    def get_segmentation(points):
        # points is [x, y, w, h]; return the four box corners as a flat polygon.
        return [points[0], points[1], points[2] + points[0], points[1],
                points[2] + points[0], points[3] + points[1], points[0], points[3] + points[1]]


    def parse_xml(xml_path, img_id, anno_id):
        # Convert every <object> in one VOC XML file into a COCO annotation dict.
        tree = ET.parse(xml_path)
        root = tree.getroot()
        annotation = []
        for obj in root.findall('object'):
            name = obj.find('name').text
            if name == 'waterweeds':  # this class is skipped
                continue
            category_id = label_ids[name]
            bnd_box = obj.find('bndbox')
            xmin = int(bnd_box.find('xmin').text)
            ymin = int(bnd_box.find('ymin').text)
            xmax = int(bnd_box.find('xmax').text)
            ymax = int(bnd_box.find('ymax').text)
            # VOC corners are inclusive, hence the +1.
            w = xmax - xmin + 1
            h = ymax - ymin + 1
            area = w * h
            segmentation = get_segmentation([xmin, ymin, w, h])
            annotation.append({
                # COCO expects a list of polygons, so the single polygon is wrapped in a list.
                "segmentation": [segmentation],
                "area": area,
                "iscrowd": 0,
                "image_id": img_id,
                "bbox": [xmin, ymin, w, h],
                "category_id": category_id,
                "id": anno_id,
                "ignore": 0})
            anno_id += 1
        return annotation, anno_id


    def cvt_annotations(img_path, xml_path, out_file):
        images = []
        annotations = []
        img_id = 1
        anno_id = 1
        for img_file in tqdm(glob(img_path + '/*.jpg')):
            w, h = Image.open(img_file).size
            img_name = osp.basename(img_file)
            img = {"file_name": img_name, "height": int(h), "width": int(w), "id": img_id}
            images.append(img)

            # The XML file shares the image's base name.
            xml_file_name = osp.splitext(img_name)[0] + '.xml'
            xml_file_path = osp.join(xml_path, xml_file_name)
            annos, anno_id = parse_xml(xml_file_path, img_id, anno_id)
            annotations.extend(annos)
            img_id += 1

        categories = []
        for k, v in label_ids.items():
            categories.append({"name": k, "id": v})
        final_result = {"images": images, "annotations": annotations, "categories": categories}
        mmcv.dump(final_result, out_file)
        return annotations


    def main():
        xml_path = 'F:/jupyter/Underwater_detection/data/train/box'  # folder containing the XML files
        img_path = 'F:/jupyter/Underwater_detection/data/train/image'  # folder containing the images
        print('processing {} ...'.format("xml format annotations"))
        # The last argument is where the result is saved.
        cvt_annotations(img_path, xml_path, 'F:/jupyter/Underwater_detection/data/train/annotations/train.json')
        print('Done!')


    if __name__ == '__main__':
        main()
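After a conversion like this, it can be worth checking that the ids in the output are consistent before training on it. A minimal sketch of such a check, assuming only the three top-level lists produced by the script; `check_coco` is a hypothetical helper and the two demo dicts are invented:

```python
def check_coco(coco):
    """Return a list of problems found in a COCO-style dict (empty if none)."""
    problems = []
    image_ids = {img['id'] for img in coco['images']}
    category_ids = {cat['id'] for cat in coco['categories']}
    seen_ann_ids = set()
    for ann in coco['annotations']:
        ann_id = ann['id']
        if ann_id in seen_ann_ids:
            problems.append('duplicate annotation id {}'.format(ann_id))
        seen_ann_ids.add(ann_id)
        if ann['image_id'] not in image_ids:
            problems.append('annotation {} refers to unknown image {}'.format(
                ann_id, ann['image_id']))
        if ann['category_id'] not in category_ids:
            problems.append('annotation {} refers to unknown category {}'.format(
                ann_id, ann['category_id']))
        _, _, w, h = ann['bbox']
        if w <= 0 or h <= 0:
            problems.append('annotation {} has an empty box'.format(ann_id))
    return problems


good = {
    'images': [{'id': 1, 'file_name': 'a.jpg', 'height': 10, 'width': 10}],
    'categories': [{'id': 1, 'name': 'holothurian'}],
    'annotations': [{'id': 1, 'image_id': 1, 'category_id': 1, 'bbox': [0, 0, 5, 5]}],
}
bad = {
    'images': good['images'],
    'categories': good['categories'],
    'annotations': [{'id': 1, 'image_id': 2, 'category_id': 1, 'bbox': [0, 0, 0, 5]}],
}

print(check_coco(good))
print(check_coco(bad))
```

To check a real output file, load it with `json.load` and pass the resulting dict to the function.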