Using the VOC2007 dataset as a demonstration

Environment setup

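    # Clone the repository (pick one; the Gitee mirror usually downloads faster)
    git clone https://github.com/FLyingLSJ/yolov5.git
    # git clone https://gitee.com/Flying_2016/yolov5.git
    conda create -n yolo python=3.7  # create a virtual environment
    conda activate yolo
    cd yolov5
    # install dependencies, using the Tsinghua PyPI mirror
    pip install -qr requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple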

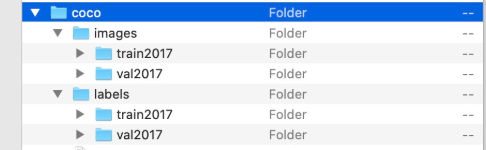

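Preparing the data

The dataset directory is structured as follows:

The images folder holds the pictures for the training and validation sets (this walkthrough uses VOC2007), divided according to the official VOC split.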

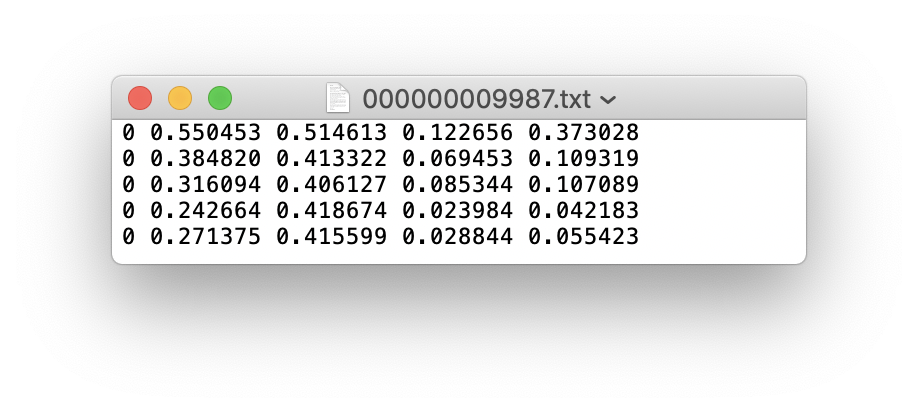
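A minimal sketch of how the images side of this layout could be produced from an extracted VOC2007 folder. It assumes the standard VOC directory layout (JPEGImages/ plus ImageSets/Main/<split>.txt); the function name `build_split` and the output folder names are illustrative choices, not something taken from the YOLOv5 repository.

```python
import shutil
from pathlib import Path


def build_split(voc_root, out_root, split, subset):
    """Copy the images listed in ImageSets/Main/<split>.txt into out_root/images/<subset>.

    Also creates the matching out_root/labels/<subset> folder, which stays
    empty here; the label txt files are written in a later step.
    """
    voc_root, out_root = Path(voc_root), Path(out_root)
    image_dir = out_root / "images" / subset
    label_dir = out_root / "labels" / subset
    image_dir.mkdir(parents=True, exist_ok=True)
    label_dir.mkdir(parents=True, exist_ok=True)

    # Each split file lists one image id per line, e.g. "000005".
    ids = (voc_root / "ImageSets" / "Main" / f"{split}.txt").read_text().split()
    for image_id in ids:
        shutil.copy(voc_root / "JPEGImages" / f"{image_id}.jpg",
                    image_dir / f"{image_id}.jpg")
    return ids
```

For example, `build_split("VOCdevkit/VOC2007", "data", "trainval", "train")` would fill data/images/train. Which official list feeds which subset is up to you; that mapping is an assumption of this sketch.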

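The labels folder holds the annotation files (.txt) for the training and validation sets. Each line describes one object as `class_index x_center y_center width height`, with the box coordinates normalized by the image width and height.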
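VOC ships its annotations as XML with pixel corner coordinates, so they have to be converted into the normalized center format. The following is a hedged sketch of that conversion using only the standard library. The helper names are made up for illustration, and it deliberately ignores details such as the `difficult` flag and VOC's 1-based pixel convention.

```python
import xml.etree.ElementTree as ET

VOC_CLASSES = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car',
               'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike',
               'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']


def voc_box_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax):
    """Convert pixel corner coordinates to normalized (x_center, y_center, w, h)."""
    x_center = (xmin + xmax) / 2.0 / img_w
    y_center = (ymin + ymax) / 2.0 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return x_center, y_center, width, height


def voc_xml_to_yolo_lines(xml_text):
    """Return one 'class x y w h' line per known object in a VOC annotation."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = int(size.find("width").text)
    img_h = int(size.find("height").text)

    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text
        if name not in VOC_CLASSES:
            continue  # skip classes outside the 20 VOC categories
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (float(box.find(k).text)
                                  for k in ("xmin", "ymin", "xmax", "ymax"))
        x, y, w, h = voc_box_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax)
        lines.append(f"{VOC_CLASSES.index(name)} {x:.6f} {y:.6f} {w:.6f} {h:.6f}")
    return lines
```

The class index is simply the position of the name in the `names` list of the dataset config, so the list used here has to be in the same order as the one in voc.yaml.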

Note: every image file name must match its label file name one to one.
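A quick way to catch mismatched names before training is to look for images with no label file. The sketch below assumes the label for an image lives at the same path with the `images` folder swapped for `labels` and the extension swapped for `.txt`, which matches the layout described here. The function names are hypothetical.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def label_path_for(image_path):
    """Map .../images/<subset>/name.jpg to .../labels/<subset>/name.txt.

    Only the last 'images' path component is replaced; a ValueError is
    raised if the path has no such component.
    """
    parts = list(Path(image_path).parts)
    idx = len(parts) - 1 - parts[::-1].index("images")
    parts[idx] = "labels"
    return Path(*parts).with_suffix(".txt")


def find_unpaired(images_dir):
    """Return the names of images in images_dir that have no label file."""
    missing = []
    for img in sorted(Path(images_dir).iterdir()):
        if img.suffix.lower() in IMAGE_SUFFIXES and not label_path_for(img).exists():
            missing.append(img.name)
    return missing
```

Running `find_unpaired("data/images/train")` should return an empty list for a correctly prepared VOC split, since every VOC image contains at least one annotated object.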

YOLOv5 and YOLOv3 differ in how the dataset files are organized.

Once the directories are in place, edit the voc.yaml configuration file in the data folder.

    # PASCAL VOC dataset http://host.robots.ox.ac.uk/pascal/VOC/
    # Download command: bash ./data/get_voc.sh
    # Train command: python train.py --data voc.yaml
    # Default dataset location is next to /yolov5:
    #   /parent_folder
    #     /VOC
    #     /yolov5

    # train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]
    train: /openbayes/home/yolov5/data/images/train  # 16551 images  # change to your own path
    val: /openbayes/home/yolov5/data/images/val/  # 4952 images

    # number of classes
    nc: 20

    # class names
    names: ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car', 'cat', 'chair', 'cow', 'diningtable', 'dog',
            'horse', 'motorbike', 'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']
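When adapting this file to another dataset, an easy mistake is letting `nc` drift out of sync with the length of `names`. Here is a small standard-library check, offered as a sketch: it assumes `names` is written as a bracketed list of quoted strings, as in the file above, and it is not a general YAML parser. `check_class_count` is a hypothetical helper.

```python
import ast
import re


def check_class_count(yaml_text):
    """Return (nc, number_of_names, whether_they_match) for a dataset config."""
    nc = int(re.search(r"^nc:\s*(\d+)", yaml_text, re.MULTILINE).group(1))
    # The quoted, bracketed list is also a valid Python literal, so
    # ast.literal_eval can read it even when it spans several lines.
    names_src = re.search(r"^names:\s*(\[.*?\])", yaml_text,
                          re.MULTILINE | re.DOTALL).group(1)
    names = ast.literal_eval(names_src)
    return nc, len(names), nc == len(names)
```

Calling it on the text of data/voc.yaml, for instance `check_class_count(open("data/voc.yaml").read())`, gives a quick sanity check before launching a long training run.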

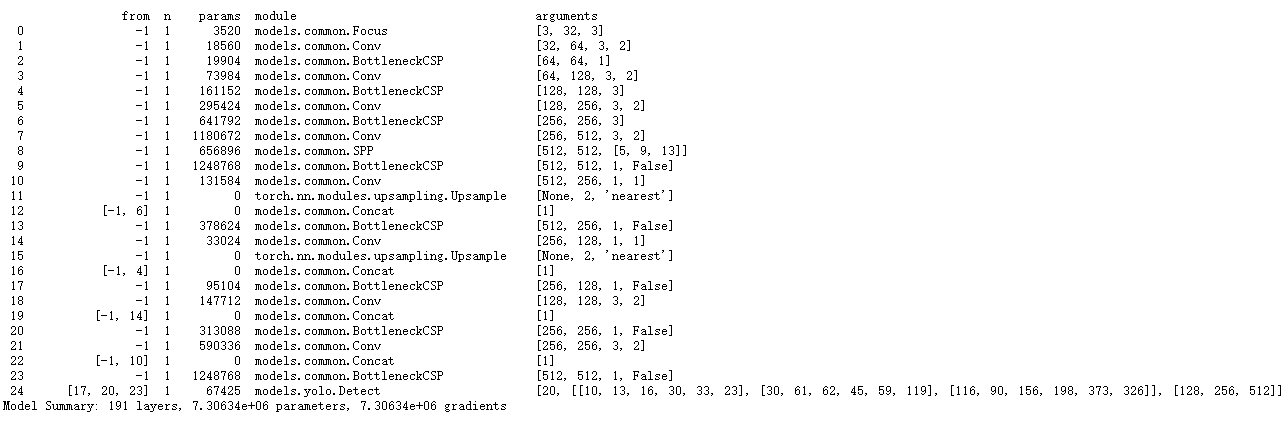

Choosing a model

The model configuration files live in the models folder. We use yolov5s.yaml and start training from the pretrained weights.

    # parameters
    nc: 20  # number of classes
    depth_multiple: 0.33  # model depth multiple
    width_multiple: 0.50  # layer channel multiple

    # anchors
    anchors:
      - [10,13, 16,30, 33,23]  # P3/8
      - [30,61, 62,45, 59,119]  # P4/16
      - [116,90, 156,198, 373,326]  # P5/32

    # YOLOv5 backbone
    backbone:
      # [from, number, module, args]
      [[-1, 1, Focus, [64, 3]],  # 0-P1/2
       [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
       [-1, 3, BottleneckCSP, [128]],
       [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
       [-1, 9, BottleneckCSP, [256]],
       [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
       [-1, 9, BottleneckCSP, [512]],
       [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
       [-1, 1, SPP, [1024, [5, 9, 13]]],
       [-1, 3, BottleneckCSP, [1024, False]],  # 9
      ]

    # YOLOv5 head
    head:
      [[-1, 1, Conv, [512, 1, 1]],
       [-1, 1, nn.Upsample, [None, 2, 'nearest']],
       [[-1, 6], 1, Concat, [1]],  # cat backbone P4
       [-1, 3, BottleneckCSP, [512, False]],  # 13

       [-1, 1, Conv, [256, 1, 1]],
       [-1, 1, nn.Upsample, [None, 2, 'nearest']],
       [[-1, 4], 1, Concat, [1]],  # cat backbone P3
       [-1, 3, BottleneckCSP, [256, False]],  # 17 (P3/8-small)

       [-1, 1, Conv, [256, 3, 2]],
       [[-1, 14], 1, Concat, [1]],  # cat head P4
       [-1, 3, BottleneckCSP, [512, False]],  # 20 (P4/16-medium)

       [-1, 1, Conv, [512, 3, 2]],
       [[-1, 10], 1, Concat, [1]],  # cat head P5
       [-1, 3, BottleneckCSP, [1024, False]],  # 23 (P5/32-large)

       [[17, 20, 23], 1, Detect, [nc, anchors]],  # Detect(P3, P4, P5)
      ]
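The numbers in each row are nominal values: `depth_multiple` scales the repeat count (the second entry) and `width_multiple` scales the output channels (the first argument). The sketch below reflects my understanding of how the repository's model parser applies them, rounding channels up to a multiple of 8; it is written from memory for illustration and is not copied from the YOLOv5 source. The `P3/8`-style comments denote the stride of each feature level, which fixes the detection grid size for a given input resolution.

```python
import math


def scaled_depth(n, depth_multiple):
    """Repeat count after scaling; layers with n == 1 are left alone."""
    return max(round(n * depth_multiple), 1) if n > 1 else n


def scaled_width(channels, width_multiple, divisor=8):
    """Output channels after scaling, rounded up to a multiple of divisor."""
    return math.ceil(channels * width_multiple / divisor) * divisor


def grid_size(img_size, stride):
    """Side length of the detection grid at a given stride."""
    return img_size // stride


# yolov5s values from the config above
for n in (1, 3, 9):
    print(f"number={n} -> {scaled_depth(n, 0.33)} repeats")
for c in (64, 128, 256, 512, 1024):
    print(f"channels={c} -> {scaled_width(c, 0.50)}")
for stride in (8, 16, 32):
    print(f"P/{stride} at 640 px -> {grid_size(640, stride)} x {grid_size(640, stride)} grid")
```

With the yolov5s multiples, a block listed with 9 repeats becomes 3, one with 3 repeats becomes 1, and every channel count is halved, which is why the same layer table can describe both the small and the larger model variants.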

Training

    python train.py --img 640 --batch 32 --epochs 30 --data ./data/voc.yaml --cfg ./models/yolov5s.yaml --weights 'weights/yolov5s.pt'

Training for 30 epochs took roughly 40 minutes.

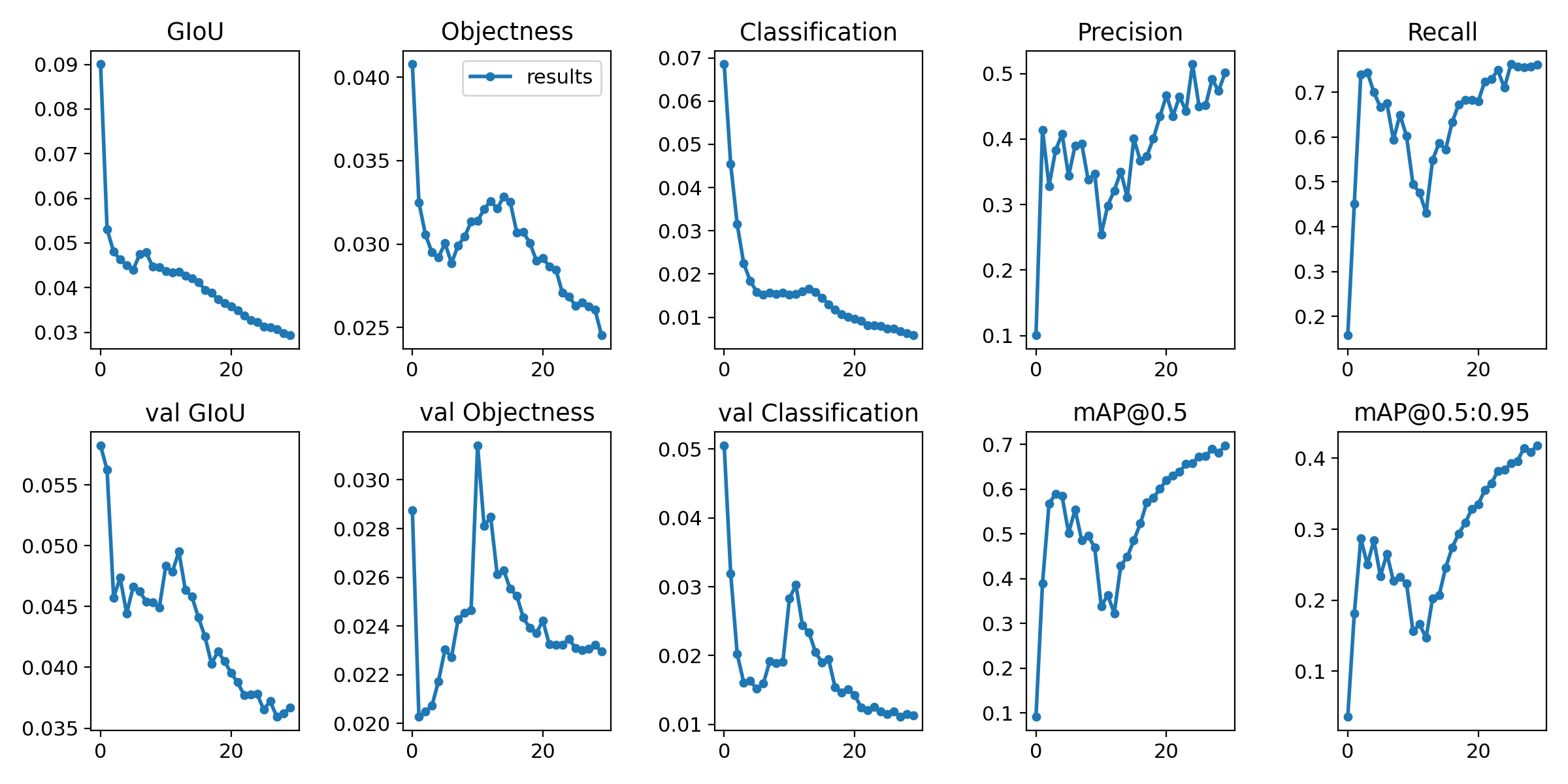

Testing

    python test.py --weights tf_log/exp0/weights/best.pt --data data/voc.yaml --img 640

This reports mAP and other metrics on the test set.

Visualization

The training metrics can be inspected in the runs/exp0 folder.