Concept

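cogroup is similar to a full outer join, except that the values for each key are first grouped within each RDD. Every key that appears in either RDD shows up exactly once in the result, paired with one collection of values per input RDD.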

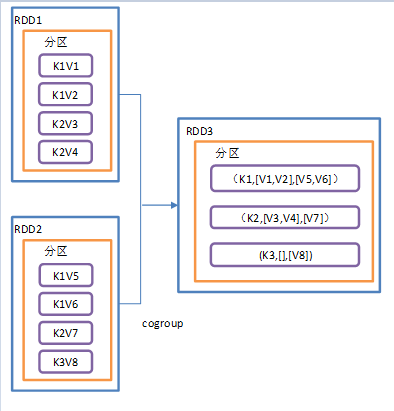

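Purpose: when called on RDDs of type (K, V) and (K, W), it returns an RDD of type (K, (Iterable[V], Iterable[W])). If a key is missing from one of the RDDs, the collection for that side is empty.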
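The grouping rule described above can be modelled on ordinary in-memory collections, without Spark. This is only a minimal sketch of the semantics: `cogroupLocal` is a hypothetical helper written for illustration, not a Spark API, and it ignores partitioning and shuffling entirely.

```scala
object CogroupSketch {
  // Hypothetical helper (not part of Spark): models cogroup on local sequences.
  def cogroupLocal[K, V, W](left: Seq[(K, V)], right: Seq[(K, W)]): Map[K, (Seq[V], Seq[W])] = {
    // Group each side by key independently, keeping only the values.
    val leftByKey: Map[K, Seq[V]] =
      left.groupBy(_._1).map { case (k, pairs) => (k, pairs.map(_._2)) }
    val rightByKey: Map[K, Seq[W]] =
      right.groupBy(_._1).map { case (k, pairs) => (k, pairs.map(_._2)) }

    // Every key from either side appears once; a missing side becomes an empty Seq.
    val allKeys: Set[K] = leftByKey.keySet ++ rightByKey.keySet
    allKeys.map { k =>
      (k, (leftByKey.getOrElse(k, Seq.empty[V]), rightByKey.getOrElse(k, Seq.empty[W])))
    }.toMap
  }

  def main(args: Array[String]): Unit = {
    val left = Seq((1, "a"), (2, "b"), (3, "c"))
    val right = Seq((1, 4), (2, 5), (4, 6), (2, 8))
    // Sort by key only to make the printed order stable.
    cogroupLocal(left, right).toSeq.sortBy(_._1).foreach(println)
  }
}
```

Keys 3 and 4 each occur in only one input, so one side of their result is an empty collection rather than being dropped.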

Example

    import org.apache.spark.rdd.RDD
    import org.apache.spark.{SparkConf, SparkContext}

    object demo {
      def main(args: Array[String]): Unit = {
        // Run locally, using all available cores
        val conf: SparkConf = new SparkConf().setAppName("SparkCoreTest").setMaster("local[*]")
        val sc: SparkContext = new SparkContext(conf)

        // Create the first pair RDD
        val rdd: RDD[(Int, String)] = sc.makeRDD(Array((1, "a"), (2, "b"), (3, "c")))

        // Create the second pair RDD (key 2 appears twice, key 3 is absent)
        val rdd1: RDD[(Int, Int)] = sc.makeRDD(Array((1, 4), (2, 5), (4, 6), (2, 8)))

        // Group the values of both RDDs by key
        val newRDD: RDD[(Int, (Iterable[String], Iterable[Int]))] = rdd.cogroup(rdd1)
        newRDD.collect().foreach(println)

        /* Output (the order of the rows may vary):
        (1,(CompactBuffer(a),CompactBuffer(4)))
        (2,(CompactBuffer(b),CompactBuffer(5, 8)))
        (3,(CompactBuffer(c),CompactBuffer()))
        (4,(CompactBuffer(),CompactBuffer(6)))
        */

        // Release the SparkContext
        sc.stop()
      }
    }
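To make the comparison with a full outer join concrete, the sketch below expands one cogrouped entry into the rows a full outer join would produce for that key. It uses plain Scala collections rather than Spark, and `toFullOuterRows` is a hypothetical helper named for this illustration only. The input values are taken from the example output above.

```scala
object CogroupVsFullJoin {
  // Hypothetical helper: turns one cogrouped entry into full-outer-join rows.
  def toFullOuterRows[K, V, W](key: K, vs: Iterable[V], ws: Iterable[W]): Seq[(K, (Option[V], Option[W]))] = {
    if (vs.isEmpty) {
      // Key exists only on the right side.
      ws.map(w => (key, (Option.empty[V], Some(w)))).toSeq
    } else if (ws.isEmpty) {
      // Key exists only on the left side.
      vs.map(v => (key, (Some(v), Option.empty[W]))).toSeq
    } else {
      // Key exists on both sides: one row per (v, w) combination.
      (for (v <- vs; w <- ws) yield (key, (Some(v), Some(w)))).toSeq
    }
  }

  def main(args: Array[String]): Unit = {
    // The cogrouped entries from the example, written as local collections.
    val cogrouped = Seq(
      (1, (Seq("a"), Seq(4))),
      (2, (Seq("b"), Seq(5, 8))),
      (3, (Seq("c"), Seq.empty[Int])),
      (4, (Seq.empty[String], Seq(6)))
    )
    cogrouped
      .flatMap { case (k, (vs, ws)) => toFullOuterRows(k, vs, ws) }
      .foreach(println)
  }
}
```

The difference shows up at key 2: cogroup keeps a single entry holding both values 5 and 8, while the join form repeats the key once per matching pair.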