爬取豆瓣电影排行榜

准备工作

首先引入包

from bs4 import BeautifulSoup #网页解析,获取数据import re #正则表达式,进行文字匹配import urllib.request,urllib.error #制定url,获取网页数据import xlwt #进行excel操作import sqlite3 #sqlite数据库操作

urllib的介绍

urllib是一个爬虫函数库,非常实用。在python2时,有urllib和urllib2.0,到了python3,将这两个urllib结合了。

可以进入http://httpbin.org/ 该测试界面,更能了解爬虫的作用

获取一个get请求

get请求通常为

import urllib.request #包的导入#urllib.request.urlopen() 打开指定网页,获取其中的信息#response.read().decode('utf-8') 对获取到的网页进行读的操作,并且转换成utf-8的形式response = urllib.request.urlopen("http://www.baidu.com")print(response.read().decode('utf-8'))#将会把百度页面的代码给爬下来

获取一个post请求

import urllib.parse #额外导入一个包,用来转换数据date = bytes(urllib.parse.urlencode({ #设置post数据"hello":"world"}),encoding="utf-8") #必须转换成utf-8才可以使用response = urllib.request.urlopen("http://httpbin.org/post",data=date)print(response.read().decode('utf-8'))

超时处理

有些时候难免会出现禁止爬虫,网速太慢的情况。会一直卡着,并且需要手动退出,此时我们需要添加个额外数值

try:response = urllib.request.urlopen("http://httpbin.org/get",timeout=1)#如果1秒后没有响应,会停止程序,并且报错print(response.read().decode('utf-8'))except urllib.error.URLError as e:print("timeout")

一些属性

response = urllib.request.urlopen("http://httpbin.org/get", timeout=1)print(response.status) # 获取状态码print(response.getheader("Server"))

定制请求头,不让网页发现我们是爬虫

url = "https://www.douban.com" #设置urlurl_s = "http://httpbin.org/post"header = { #设置请求头"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36 Edg/95.0.1020.40"}#使用Request方法request = urllib.request.Request(url=url, headers=header)response = urllib.request.urlopen(request)print(response.read().decode("utf-8"))#添加post数据data = bytes(urllib.parse.urlencode({'name': 'liao'}),encoding='utf-8')request_s = urllib.request.Request(url=url_s, headers=header, method="POST")response_s = urllib.request.urlopen(request_s)print(response_s.read().decode('utf-8'))

Bs4获取数据

我们使用bs4来进行演示

<!DOCTYPE html><html lang="en"><head><meta charset="UTF-8"><title>Title</title></head><body><div><ul><li id ="liao1">张三</li><li id = "l2">李四</li><li>王五</li><a href="" class="a1">里奥奥</a><span>test hahahah</span></ul></div><a href="" title="a2">baidu</a><div id="d1"><span>笑死我了,全是男同</span></div><p id="wuhu" class="qwq">wdnmd</p></body></html>

from bs4 import BeautifulSoupsoup = BeautifulSoup(open('test.html',encoding='utf-8'),'lxml')#1.根据标签名称查找print(soup.a) #查找第一个标签为a的内容print(soup.a.attrs) #展示标签的属性和属性值#bs4的一些函数#1.findprint(soup.find('a')) #查找第一个标签为a的内容print(soup.find('a',title="a2")) #查找第一个标签为a并且title=a2的内容print(soup.find('a',class_="a1")) #查找第一个标签为a并且class=1的内容#2.find_allprint(soup.find_all('a')) #查找所有的a标签,并且返回列表print(soup.find_all(['a','span'])) #查找所有的a和span标签print(soup.find_all('li',limit=2)) #查找li标签,前两个的内容#3.selectprint(soup.select('a')) #查找所有a标签,返回列表print(soup.select(('.a1'))) #查找class为a1的内容print(soup.select('#liao1')) #查找id为liao1的内容#属性选择器print(soup.select('li[id]')) #查找li中包含id属性的内容print(soup.select('li[id="l2"]')) #查找li中id为l2的内容#层级选择器#后代print(soup.select('div li')) #查找div后代有li的内容#子代print(soup.select('div > ul > li')) #查找div后ul后li的内容print(soup.select('a,li')) #查找a标签和li标签的所有对象#节点信息obj = soup.select('#d1')[0] #查找id为d1的属性的第一个内容print(obj) #打印htmlprint(obj.get_text()) #只打印字符内容#节点属性obj = soup.select('#wuhu')[0]print(soup.select('#wuhu'))print(obj.name) #打印该内容的属性值#获取节点的print(obj.attrs.get('class')) #打印class属性的值print(obj.get('class')) #打印class属性的值print(obj['class']) #打印class属性的值

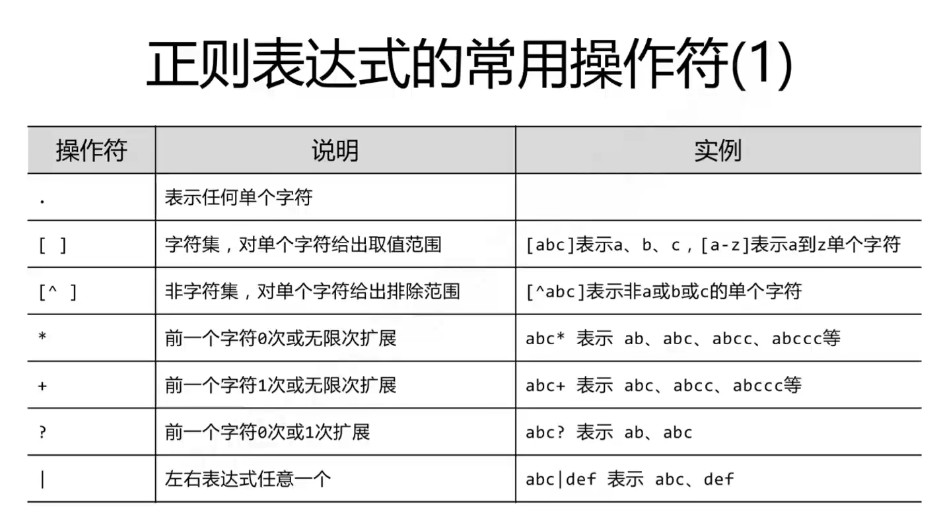

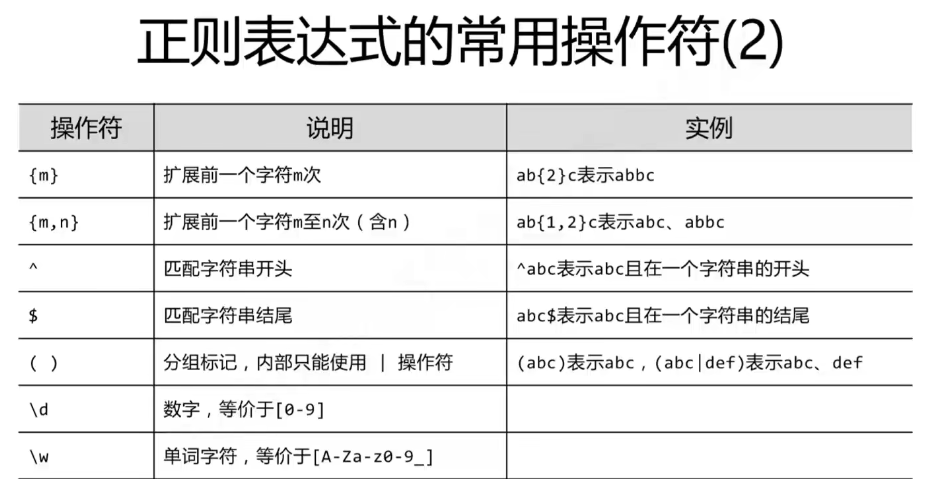

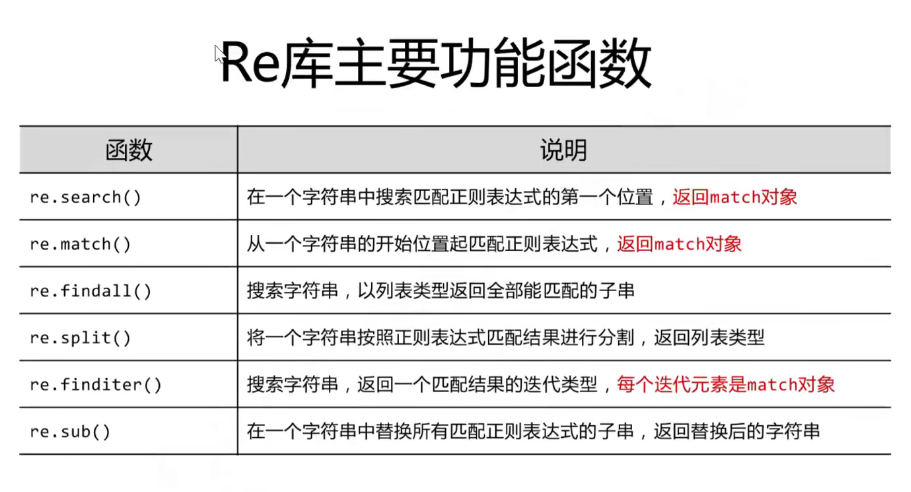

正则表达式

示例:

#正则表达式:字符串模式,判断字符串是否符合一定的规律import re# 创建模板对象pat = re.compile("AA") #此处的AA 是正则表达式 用来去验证其他的字符串m = pat.search("AABCAABADAA") #search方法,查找是否存在字符并且打印位置信息print(m) #<re.Match object; span=(3, 5), match='AA'>#没有模板对象n = re.search("ABC","ASDABC") #前面的字符串是规则,后面的是被校验的对象print(n)#findall 打印出相匹配的内容print(re.findall("a","asdcawerqaa")) #前面是规则,后面是被校验的字符串#查找所有的大写字母print(re.findall("[A-Z]","ASDCsdsaSADW"))#查找所有大写字母print(re.findall("[A-Z]+","ASDCsdsaSADW"))#查找不包含a的字母print(re.findall("[^a]","abcsadwcasabccx"))#sub 内容替换print(re.sub("a","A","asdcasADASD")) #找到a用A来替换第三个字符串的内容#建议在正则表达式中,被比较的字符串前面加上r,不用担心转义字符的问题

数据保存

保存到excel

将所打印的数据保存到excel文件中。

流程:创建workbook对象——创建工作表——添加数据——保存workbook

import xlwt #导入包workbook = xlwt.Workbook(encoding='utf-8') # 创建workbook对象worksheet = workbook.add_sheet('sheet1') # 创建工作表worksheet.write(0, 0, 'hello') # 写入数据,第一个为”行“,第二个为”列“for i in range(1, 10):for j in range(1, i + 1):worksheet.write(i-1, j-1, '%d*%d=%d' % (i, j, i * j))workbook.save('student.xls')

保存到sqlite

import sqlite3# 连接数据表conn = sqlite3.connect("test.db") # 在当前路径创建数据库print("成功打开数据库")c = conn.cursor() # 获取游标# 创建数据表sql1 = '''create table company(id int primary key not null,name text not null,age int not null,address char(50),salary real);'''c.execute(sql1) # 执行sql语句print("成功创建表")# 插入数据sql2 = '''insert into company(id,name,age,address,salary)values (1,"张三",32,"成都",8000)'''c.execute(sql2) # 执行sql语句# 查询数据sql3 = '''select id,name,address,salary from company;'''cursor = c.execute(sql3)for row in cursor:print(row[0])print(row[1])print(row[2])print(row[3])print("查询完毕")conn.commit() # 提交数据库操作conn.close() # 关闭数据库连接

代码示例

将数据保存在excel

# -*- coding = utf-8 -*-# @Time : 2022/1/2 19:38# @Author : LiAo# @File : spider_douban.py# @Software : PyCharmfrom bs4 import BeautifulSoup # 网页解析,获取数据import re # 正则表达式,进行文字匹配import urllib.request, urllib.error # 制定url,获取网页数据import xlwt # 进行excel操作import sqlite3 # sqlite数据库操作def main():baseurl = "https://movie.douban.com/top250?start="# 1.爬取网页datalist = getData(baseurl)# 3.保存数据savepath = "豆瓣电影top250.xls"saveData(datalist, savepath)askURL("https://movie.douban.com/top250?start=")# 影片的链接findLink = re.compile(r'<a href="(.*?)">') # 创建正则表达式对象# 影片的图片findImageSrc = re.compile(r'<img.*src="(.*?)"', re.S) # 让换行符包含在字符中# 影片的片名findTitle = re.compile(r'<span class="title">(.*)</span>')# 影片的评分findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')# 评价人数findJudge = re.compile(r'<span>(\d*)人评价</span>')# 找到概况findInq = re.compile(r'<span class="inq">(.*)</span>')# 影片信息findBd = re.compile(r'<p class="">(.*?)</p>', re.S)# 爬取网页def getData(baseurl):datalist = [] # 获取的网页的数据for i in range(0, 10): # 调用获取页面信息的函数!10次。url = baseurl + str(i * 25)html = askURL(url) # 保存获取到的网页源码# 逐一进行解析soup = BeautifulSoup(html, "html.parser")for item in soup.find_all('div', class_="item"): # 查找符合要求的字符串,形成列表# print(item)data = [] # 保存一部电影的信息item = str(item)links = re.findall(findLink, item)[0] # re库,通过正则查找字符串模式data.append(links)imgSrc = re.findall(findImageSrc, item)[0]data.append(imgSrc)titles = re.findall(findTitle, item) # 片名可能只有一个if len(titles) == 2:ctitle = titles[0] # 添加中文名data.append(ctitle)otitle = titles[1].replace("/", "") # 无关的符号data.append(otitle) # 添加中国名else:data.append(titles[0])data.append(" ") # 因为要加入到excel,要给外国名留空rating = re.findall(findRating, item)[0]data.append(rating)judge = re.findall(findJudge, item)[0]data.append(judge)inq = re.findall(findInq, item)if len(inq) != 0:inq = inq[0].replace("。", "") # 去掉句号data.append(inq)else:data.append(" ")bd = re.findall(findBd, item)[0]bd = re.sub(r'<br(\s+)?/>(\s+?)', " ", bd) # 去掉br/bd = re.sub(r'/', " ", bd)data.append(bd.strip()) # 去掉前后空格datalist.append(data) # 将处理好的data添加到datalistprint(datalist)return datalist# 得到指定一个URL的网页内容def askURL(url):header = { # 模拟浏览器头部信息,向网页服务器发送消息"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36 Edg/95.0.1020.40"}request = urllib.request.Request(url=url, headers=header)html = ""try:response = urllib.request.urlopen(request)html = response.read().decode('utf-8')# print(html)except urllib.error.URLError as e:if hasattr(e, 'code'):print(e.code)if hasattr(e, 'reason'):print(e.reason)return html# 保存数据def saveData(datalist, savePath):workbook = xlwt.Workbook(encoding='utf-8') # 创建workbook对象worksheet = workbook.add_sheet('豆瓣电影top250', cell_overwrite_ok=True) # 创建工作表col = ('电影详情连接', "图片连接", "中文名", "外国名", "评分", "评价数", "概况", "相关信息")for i in range(0, 8):worksheet.write(0, i, col[i])for i in range(0, 250):print("第%d条" % (i+1))data = datalist[i]for j in range(0, 8):worksheet.write(i + 1, j, data[j])workbook.save(savePath) # 保存到excelif __name__ == "__main__":main()print("爬取完毕")

保存到sqlite

# -*- coding = utf-8 -*-# @Time : 2022/1/2 19:38# @Author : LiAo# @File : spider_douban.py# @Software : PyCharmfrom bs4 import BeautifulSoup # 网页解析,获取数据import re # 正则表达式,进行文字匹配import urllib.request, urllib.error # 制定url,获取网页数据import xlwt # 进行excel操作import sqlite3 # sqlite数据库操作def main():baseurl = "https://movie.douban.com/top250?start="# 1.爬取网页datalist = getData(baseurl)# 3.保存数据# savepath = "豆瓣电影top250.xls"# saveData(datalist, savepath)# askURL("https://movie.douban.com/top250?start=")dbpath = "movie.db"saveData2DB(datalist, dbpath)# 影片的链接findLink = re.compile(r'<a href="(.*?)">') # 创建正则表达式对象# 影片的图片findImageSrc = re.compile(r'<img.*src="(.*?)"', re.S) # 让换行符包含在字符中# 影片的片名findTitle = re.compile(r'<span class="title">(.*)</span>')# 影片的评分findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')# 评价人数findJudge = re.compile(r'<span>(\d*)人评价</span>')# 找到概况findInq = re.compile(r'<span class="inq">(.*)</span>')# 影片信息findBd = re.compile(r'<p class="">(.*?)</p>', re.S)# 爬取网页def getData(baseurl):datalist = [] # 获取的网页的数据for i in range(0, 10): # 调用获取页面信息的函数!10次。url = baseurl + str(i * 25)html = askURL(url) # 保存获取到的网页源码# 逐一进行解析soup = BeautifulSoup(html, "html.parser")for item in soup.find_all('div', class_="item"): # 查找符合要求的字符串,形成列表# print(item)data = [] # 保存一部电影的信息item = str(item)links = re.findall(findLink, item)[0] # re库,通过正则查找字符串模式data.append(links)imgSrc = re.findall(findImageSrc, item)[0]data.append(imgSrc)titles = re.findall(findTitle, item) # 片名可能只有一个if len(titles) == 2:ctitle = titles[0] # 添加中文名data.append(ctitle)otitle = titles[1].replace("/", "") # 无关的符号data.append(otitle) # 添加中国名else:data.append(titles[0])data.append(" ") # 因为要加入到excel,要给外国名留空rating = re.findall(findRating, item)[0]data.append(rating)judge = re.findall(findJudge, item)[0]data.append(judge)inq = re.findall(findInq, item)if len(inq) != 0:inq = inq[0].replace("。", "") # 去掉句号data.append(inq)else:data.append(" ")bd = re.findall(findBd, item)[0]bd = re.sub(r'<br(\s+)?/>(\s+?)', " ", bd) # 去掉br/bd = re.sub(r'/', " ", bd)data.append(bd.strip()) # 去掉前后空格datalist.append(data) # 将处理好的data添加到datalistprint(datalist)return datalist# 得到指定一个URL的网页内容def askURL(url):header = { # 模拟浏览器头部信息,向网页服务器发送消息"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36 Edg/95.0.1020.40"}request = urllib.request.Request(url=url, headers=header)html = ""try:response = urllib.request.urlopen(request)html = response.read().decode('utf-8')# print(html)except urllib.error.URLError as e:if hasattr(e, 'code'):print(e.code)if hasattr(e, 'reason'):print(e.reason)return html# 保存数据def saveData(datalist, savePath):workbook = xlwt.Workbook(encoding='utf-8') # 创建workbook对象worksheet = workbook.add_sheet('豆瓣电影top250', cell_overwrite_ok=True) # 创建工作表col = ('电影详情连接', "图片连接", "中文名", "外国名", "评分", "评价数", "概况", "相关信息")for i in range(0, 8):worksheet.write(0, i, col[i])for i in range(0, 250):print("第%d条" % (i + 1))data = datalist[i]for j in range(0, 8):worksheet.write(i + 1, j, data[j])workbook.save(savePath) # 保存到exceldef saveData2DB(datalist, dbpath):init_db(dbpath)conn = sqlite3.connect(dbpath)cur = conn.cursor()for data in datalist:for index in range(len(data)):if index == 4 or index == 5:continuedata[index] = '"' + data[index] + '"'sql = '''insert into movie250(info_link,pic_link,cname,ename,score,rated,instroduction,info)values(%s)''' % ",".join(data) # 将data列表用,连接起来cur.execute(sql)conn.commit()cur.close()conn.close()def init_db(dbpath):sql = '''create table movie250(id integer primary key autoincrement,info_link text,pic_link text,cname varchar,ename varchar,score numeric,rated numeric ,instroduction text,info text);''' # 创建数据表conn = sqlite3.connect(dbpath)cursor = conn.cursor() # 获取游标cursor.execute(sql) # 执行语句conn.commit() # 提交数据库操作conn.close() # 保存并关闭数据库if __name__ == "__main__":main()# init_db("moivetest.db")print("爬取完毕")

详细注释版

# -*- coding = utf-8 -*-# @Time : 2022/1/2 19:38# @Author : LiAo# @File : spider_douban.py# @Software : PyCharmfrom bs4 import BeautifulSoup # 网页解析,获取数据import re # 正则表达式,进行文字匹配import urllib.request, urllib.error # 制定url,获取网页数据import xlwt # 进行excel操作import sqlite3 # sqlite数据库操作def main():#爬取的网页,这里末尾不能填写0。baseurl = "https://movie.douban.com/top250?start="datalist = getData(baseurl)# savepath = "豆瓣电影top250.xls"# saveData(datalist, savepath)# askURL("https://movie.douban.com/top250?start=")dbpath = "movie.db"saveData2DB(datalist, dbpath)# 影片的链接findLink = re.compile(r'<a href="(.*?)">') # 创建正则表达式对象# 影片的图片findImageSrc = re.compile(r'<img.*src="(.*?)"', re.S) # 让换行符包含在字符中# 影片的片名findTitle = re.compile(r'<span class="title">(.*)</span>')# 影片的评分findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')# 评价人数findJudge = re.compile(r'<span>(\d*)人评价</span>')# 找到概况findInq = re.compile(r'<span class="inq">(.*)</span>')# 影片信息findBd = re.compile(r'<p class="">(.*?)</p>', re.S)# 爬取网页def getData(baseurl):datalist = [] # 获取的网页的数据for i in range(0, 10): # 调用获取页面信息的函数!10次。url = baseurl + str(i * 25)html = askURL(url) # 保存获取到的网页源码# 逐一进行解析soup = BeautifulSoup(html, "html.parser")for item in soup.find_all('div', class_="item"): # 查找符合要求的字符串,形成列表# print(item)data = [] # 保存一部电影的信息item = str(item)links = re.findall(findLink, item)[0] # re库,通过正则查找字符串模式data.append(links)imgSrc = re.findall(findImageSrc, item)[0]data.append(imgSrc)titles = re.findall(findTitle, item) # 片名可能只有一个if len(titles) == 2:ctitle = titles[0] # 添加中文名data.append(ctitle)otitle = titles[1].replace("/", "") # 无关的符号data.append(otitle) # 添加中国名else:data.append(titles[0])data.append(" ") # 因为要加入到excel,要给外国名留空rating = re.findall(findRating, item)[0]data.append(rating)judge = re.findall(findJudge, item)[0]data.append(judge)inq = re.findall(findInq, item)if len(inq) != 0:inq = inq[0].replace("。", "") # 去掉句号data.append(inq)else:data.append(" ")bd = re.findall(findBd, item)[0]bd = re.sub(r'<br(\s+)?/>(\s+?)', " ", bd) # 去掉br/bd = re.sub(r'/', " ", bd)data.append(bd.strip()) # 去掉前后空格datalist.append(data) # 将处理好的data添加到datalistprint(datalist)return datalist# 得到指定一个URL的网页内容def askURL(url):header = { # 模拟浏览器头部信息,向网页服务器发送消息"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/95.0.4638.54 Safari/537.36 Edg/95.0.1020.40"}request = urllib.request.Request(url=url, headers=header)html = ""try:response = urllib.request.urlopen(request)html = response.read().decode('utf-8')# print(html)except urllib.error.URLError as e:if hasattr(e, 'code'):print(e.code)if hasattr(e, 'reason'):print(e.reason)return html# 保存数据def saveData(datalist, savePath):workbook = xlwt.Workbook(encoding='utf-8') # 创建workbook对象worksheet = workbook.add_sheet('豆瓣电影top250', cell_overwrite_ok=True) # 创建工作表col = ('电影详情连接', "图片连接", "中文名", "外国名", "评分", "评价数", "概况", "相关信息")for i in range(0, 8):worksheet.write(0, i, col[i])for i in range(0, 250):print("第%d条" % (i + 1))data = datalist[i]for j in range(0, 8):worksheet.write(i + 1, j, data[j])workbook.save(savePath) # 保存到exceldef saveData2DB(datalist, dbpath):init_db(dbpath)conn = sqlite3.connect(dbpath)cur = conn.cursor()for data in datalist:for index in range(len(data)):if index == 4 or index == 5:continuedata[index] = '"' + data[index] + '"'sql = '''insert into movie250(info_link,pic_link,cname,ename,score,rated,instroduction,info)values(%s)''' % ",".join(data) # 将data列表用,连接起来cur.execute(sql)conn.commit()cur.close()conn.close()def init_db(dbpath):sql = '''create table movie250(id integer primary key autoincrement,info_link text,pic_link text,cname varchar,ename varchar,score numeric,rated numeric ,instroduction text,info text);''' # 创建数据表conn = sqlite3.connect(dbpath)cursor = conn.cursor() # 获取游标cursor.execute(sql) # 执行语句conn.commit() # 提交数据库操作conn.close() # 保存并关闭数据库if __name__ == "__main__":main()# init_db("moivetest.db")print("爬取完毕")