- Topic: Transfer Learning, Domain Generalization, CV, Explicit Feature Distribution Alignment, Adversarial

- Paper title: Adversarial target-invariant representation learning for domain generalization

- Paper link: https://arxiv.org/pdf/1911.00804v2.pdf

1. Summary

This paper addresses a classification task with several labeled source domains and no target-domain data, which makes it a domain generalization (DG) problem. It uses adversarial training to transfer knowledge. DG is challenging because no target data is available, so the source and target distributions cannot be aligned directly as in domain adaptation. The only option is to align the distributions of the source domains with one another, and most DG methods take this route. Given N source domains, the paper sets up N discriminators, where the i-th discriminator judges whether a representation produced by the feature extractor comes from the i-th source domain. Every representation is passed through all N discriminators, which aligns the distributions of all source domains. To understand this paper more deeply, I should read more background material on domain generalization.

2. Thoughts on the paper

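This is a DG paper that proposes a way to align all source domains: with N source domains, it sets up N discriminators, where the i-th discriminator judges whether a representation output by the feature extractor comes from the i-th source domain, and every representation goes through all N discriminators. The method seems reasonable, but the discriminator stage is relatively expensive, because its cost scales with (number of source domains) × (number of samples). With many source domains the running time would grow considerably; with only two or three source domains, as in the paper's experiments, the cost is acceptable.

3. Other notes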

3.1 Problem addressed

This paper addresses the problem of aligning the distributions of all source domains in DG.

3.2 Model

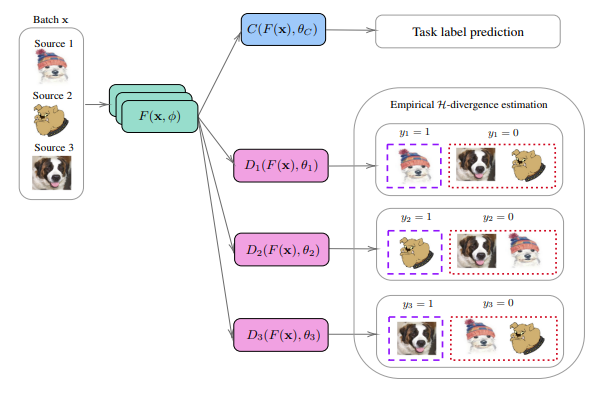

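As shown in the figure below, suppose there are three source domains. Three discriminators are then set up, and each discriminator evaluates every F(x, Φ), so that the distributions of all source domains end up aligned.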

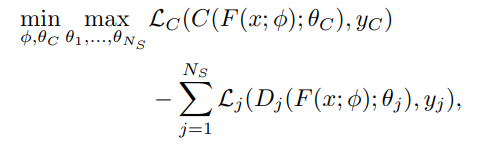
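To make the structure in the figure concrete, here is a minimal pure-Python sketch, assuming each discriminator D_i is a one-vs-rest binary classifier trained with binary cross-entropy. The helper names, the toy logistic discriminators, and the averaging over pairs are illustrative assumptions, not the paper's code. The double loop shows that every feature F(x, Φ) is scored by all N discriminators, so the discriminator cost grows with N × B for a batch of B samples.

```python
import math
from typing import Callable, List, Sequence

Feature = Sequence[float]
# Hypothetical type: a discriminator maps a feature F(x, Φ) to P(sample is from its own domain).
Discriminator = Callable[[Feature], float]


def one_vs_rest_targets(domain_ids: List[int], num_domains: int) -> List[List[int]]:
    """Row i = binary targets for discriminator i: 1 if the sample is from domain i, else 0."""
    return [[1 if d == i else 0 for d in domain_ids] for i in range(num_domains)]


def logistic_discriminator(weights: Sequence[float], bias: float) -> Discriminator:
    """Toy linear-logistic discriminator, standing in for a real neural network."""
    def d(z: Feature) -> float:
        s = sum(w * x for w, x in zip(weights, z)) + bias
        return 1.0 / (1.0 + math.exp(-s))
    return d


def domain_adversarial_loss(features: List[Feature], domain_ids: List[int],
                            discriminators: List[Discriminator]) -> float:
    """Mean one-vs-rest binary cross-entropy over all N x B (discriminator, sample) pairs.

    Assumed min-max roles: the discriminators are trained to minimize this value,
    while the feature extractor is trained to make it large (confusing every D_i).
    """
    eps = 1e-12  # guards against log(0)
    targets = one_vs_rest_targets(domain_ids, len(discriminators))
    total = 0.0
    for d_i, row in zip(discriminators, targets):  # N discriminators ...
        for z, y in zip(features, row):            # ... each judges every feature
            p = d_i(z)
            total -= y * math.log(p + eps) + (1 - y) * math.log(1.0 - p + eps)
    return total / (len(discriminators) * len(features))


# Three source domains, one 2-D feature per domain (toy values).
feats = [[0.1, 0.2], [0.3, -0.1], [1.0, 0.5]]
doms = [0, 1, 2]
# Discriminators that always output 0.5 carry no domain information.
uninformative = [logistic_discriminator([0.0, 0.0], 0.0) for _ in range(3)]
print(one_vs_rest_targets(doms, 3))                                   # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(round(domain_adversarial_loss(feats, doms, uninformative), 3))  # 0.693 (= ln 2)
```

When the source-domain features are perfectly aligned, no discriminator can do better than the uninformative ln 2 value, which is the state the feature extractor is pushed toward. In a real model the discriminators and the feature extractor would be neural networks trained in a min-max game; this sketch only shows how the targets and the loss are wired.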

Loss function (the first term is the classification loss on the sample labels; the second term is the domain-adversarial loss):

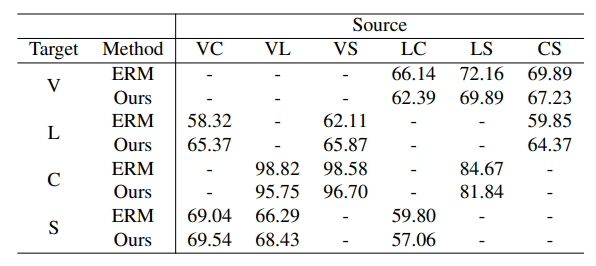
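For reference, one generic way to write an objective with this two-term structure is sketched below. It assumes a label classifier $C$, a trade-off weight $\lambda$, source domains $\mathcal{S}_1,\dots,\mathcal{S}_N$, and one-vs-rest cross-entropy losses for the discriminators $D_1,\dots,D_N$. These symbols are our own assumptions; the exact expression, including its normalization, is the one in the figure above.

$$
\min_{\Phi,\,C}\;\max_{D_1,\dots,D_N}\;
\sum_{j=1}^{N}\mathbb{E}_{(x,y)\sim\mathcal{S}_j}\Big[\ell_{\mathrm{CE}}\big(C(F(x,\Phi)),\,y\big)\Big]
\;-\;\lambda\sum_{i=1}^{N}\mathcal{L}_{D_i}(\Phi)
$$

$$
\mathcal{L}_{D_i}(\Phi) = -\,\mathbb{E}_{x\sim\mathcal{S}_i}\big[\log D_i(F(x,\Phi))\big]
\;-\;\mathbb{E}_{x\sim\bigcup_{k\neq i}\mathcal{S}_k}\big[\log\big(1-D_i(F(x,\Phi))\big)\big]
$$

The max over the $D_i$ drives each discriminator to minimize its own loss $\mathcal{L}_{D_i}$, while the min over $\Phi$ lowers the classification loss and raises every $\mathcal{L}_{D_i}$, pushing the source-domain feature distributions together.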

3.3 Experiments

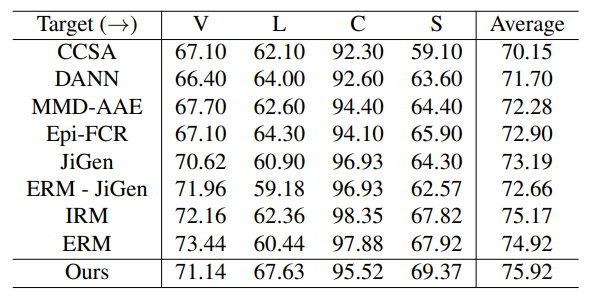

(1) Dataset: VLCS

(2) Dataset: VLCS

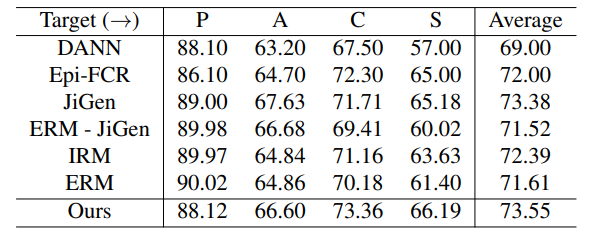

(3) Dataset: PACS