前期准备

首先,需要先导入[synonyms](https://github.com/huyingxi/Synonyms)库,synonyms可以用于自然语言理解的很多任务:文本对齐,推荐算法,相似度计算,语义偏移,关键字提取,概念提取,自动摘要,搜索引擎等。

pip install synonyms

初次安装会下载词向量文件比较大,可能会下载失败,官网又给一个解决办法,但笔者这里直接本地下载后,移动到 当前python环境/synonyms/data文件夹下(文件夹下会看见下载好的vocab.txt)完成安装

本地下载gitee地址:词向量文件gitee地址

synonyms的词向量在V3.12.0后做了一次升级,目前有40w+的词汇,会加载词向量会需要等待一定时间和内存消耗。

代码

读取哈工大停用词表

import jiebaimport synonymsimport randomfrom random import shufflerandom.seed(2019)# 停用词列表,默认使用哈工大停用词表f = open('./hit_stopwords.txt', 'r', encoding='utf-8')stop_words = list()for stop_word in f.readlines():stop_words.append(stop_word[:-1])

随机同义词替换

功能:替换一个语句中的n个单词为其同义词

输入:words为分词列表,n 控制替换个数

输出:新的分词列表

# 随机同义词替换def synonym_replacement(words, n):new_words = words.copy()random_word_list = list(set([word for word in words if word not in stop_words]))random.shuffle(random_word_list)num_replaced = 0for random_word in random_word_list:synonyms = get_synonyms(random_word)if len(synonyms) >= 1:synonym = random.choice(synonyms)new_words = [synonym if word == random_word else word for word in new_words]num_replaced += 1if num_replaced >= n:breaksentence = ' '.join(new_words)new_words = sentence.split(' ')return new_words# 获取同义词def get_synonyms(word):return synonyms.nearby(word)[0]

随机插入

功能:随机在语句中插入n个词

输入:words为分词列表,n 控制插入个数

输出:新的分词列表

# 随机插入def random_insertion(words, n):new_words = words.copy()for _ in range(n):add_word(new_words)return new_wordsdef add_word(new_words):synonyms = []counter = 0while len(synonyms) < 1:random_word = new_words[random.randint(0, len(new_words) - 1)]synonyms = get_synonyms(random_word)counter += 1if counter >= 10:returnrandom_synonym = random.choice(synonyms)random_idx = random.randint(0, len(new_words) - 1)new_words.insert(random_idx, random_synonym)

随机交换

功能:将句子中的两个单词随机交换n次

输入:words为分词列表,n 交换次数

输出:新的分词列表

# 随机交换def random_swap(words, n):new_words = words.copy()for _ in range(n):new_words = swap_word(new_words)return new_wordsdef swap_word(new_words):random_idx_1 = random.randint(0, len(new_words) - 1)random_idx_2 = random_idx_1counter = 0while random_idx_2 == random_idx_1:random_idx_2 = random.randint(0, len(new_words) - 1)counter += 1if counter > 3:return new_wordsnew_words[random_idx_1], new_words[random_idx_2] = new_words[random_idx_2], new_words[random_idx_1]return new_words

随机删除

功能:以概率p删除语句中的词

输入:words为分词列表,p为概率值

输出:新的分词列表

# 随机删除def random_deletion(words, p):if len(words) == 1:return wordsnew_words = []for word in words:r = random.uniform(0, 1)if r > p:new_words.append(word)if len(new_words) == 0:rand_int = random.randint(0, len(words) - 1)return [words[rand_int]]return new_words

EDA

将前面几个功能合并,

sentence:输入句子,字符串(str)形式

alpha_sr:随机替换同义词的比重,默认为0.1

alpha_ri:随机插入的比重,默认为0.1

alpha_rs:随机交换的比重,默认为0.1

p_rd:随机删除的概率值大小,认为0.1

num_aug:增强次数,默认为9词

# EDA函数def eda(sentence, alpha_sr=0.1, alpha_ri=0.1, alpha_rs=0.1, p_rd=0.1, num_aug=9):seg_list = jieba.cut(sentence)seg_list = " ".join(seg_list)words = list(seg_list.split())num_words = len(words)augmented_sentences = []num_new_per_technique = int(num_aug / 4) + 1n_sr = max(1, int(alpha_sr * num_words))n_ri = max(1, int(alpha_ri * num_words))n_rs = max(1, int(alpha_rs * num_words))# print(words, "\n")# 同义词替换srfor _ in range(num_new_per_technique):a_words = synonym_replacement(words, n_sr)augmented_sentences.append(' '.join(a_words))# 随机插入rifor _ in range(num_new_per_technique):a_words = random_insertion(words, n_ri)augmented_sentences.append(' '.join(a_words))# 随机交换rsfor _ in range(num_new_per_technique):a_words = random_swap(words, n_rs)augmented_sentences.append(' '.join(a_words))# 随机删除rdfor _ in range(num_new_per_technique):a_words = random_deletion(words, p_rd)augmented_sentences.append(' '.join(a_words))# print(augmented_sentences)shuffle(augmented_sentences)if num_aug >= 1:augmented_sentences = augmented_sentences[:num_aug]else:keep_prob = num_aug / len(augmented_sentences)augmented_sentences = [s for s in augmented_sentences if random.uniform(0, 1) < keep_prob]augmented_sentences.append(seg_list)return augmented_sentences

实践

以近期突发事件文本为例子

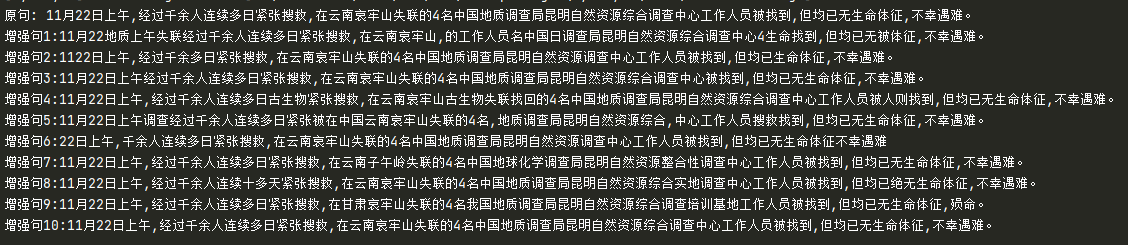

输入句子:“11月22日上午,经过千余人连续多日紧张搜救,在云南哀牢山失联的4名中国地质调查局昆明自然资源综合调查中心工作人员被找到,但均已无生命体征,不幸遇难。”

输出10组增强后的句子

if __name__ == '__main__':sentence = "11月22日上午,经过千余人连续多日紧张搜救,在云南哀牢山失联的4名中国地质调查局昆明自然资源综合调查中心工作人员被找到,但均已无生命体征,不幸遇难。"augmented_sentences = eda(sentence=sentence)print("原句:",sentence)for idx,sentence in enumerate(augmented_sentences):print("增强句{}:{}".format(idx+1,sentence))

结果如图所示

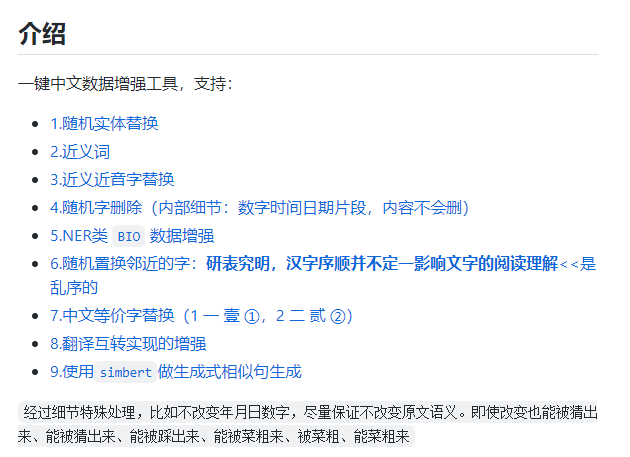

此外,针对中文的数据增强工具还有一个比较好用的工具nlpcda,关于nlpcda的内容将在后续进行实践

参考资料:

[1] An implement of the paper of EDA for Chinese corpus.中文语料的EDA数据增强工具

[2] EDA: Easy Data Augmentation Techniques for Boosting Performance on Text Classification Tasks