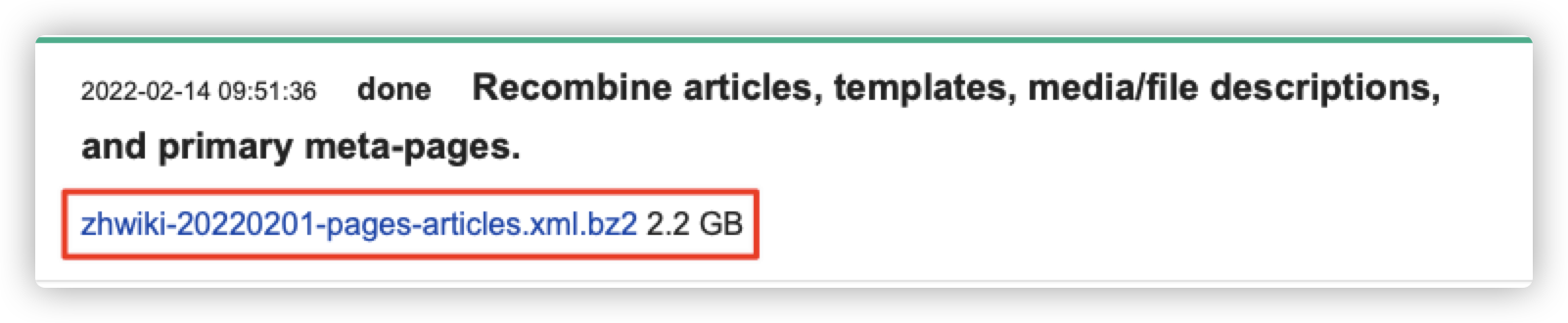

1、 下载wiki百科数据

维基百科-资料库下载

pages-articles.xml.bz2 为结尾的文件

2、 解析wiki百科文本数据

python3 wiki_to_txt.py zhwiki-20220201-pages-articles.xml.bz2

import loggingimport sysfrom gensim.corpora import WikiCorpusdef main():if len(sys.argv) != 2:print("Usage: python3 " + sys.argv[0] + " wiki_data_path")exit()logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)wiki_corpus = WikiCorpus(sys.argv[1], dictionary={})texts_num = 0with open("wiki_texts.txt", 'w', encoding='utf-8') as output:for text in wiki_corpus.get_texts():output.write(' '.join(text) + '\n')texts_num += 1if texts_num % 10000 == 0:logging.info("已處理 %d 篇文章" % texts_num)if __name__ == "__main__":main()

2022-02-24 10:30:07,609 : INFO : 已處理 10000 篇文章......2022-02-24 10:44:44,092 : INFO : 已處理 410000 篇文章2022-02-24 10:45:09,587 : INFO : finished iterating over Wikipedia corpus of 417371 documents with 96721989 positions (total 3964095 articles, 113681913 positions before pruning articles shorter than 50 words)

3、 繁体文本转简体

使用opencc 将文本数据繁体转简体

opencc -i wiki_texts.txt -o wiki_zh_tw.txt -c t2s.json

4、 分词处理(包含去除停用词)

使用jieba分词对简体中文文本数据做分词,分词后写入txt文件(用于gensim模型训练)

python3 segment.py

import jiebaimport loggingdef main():logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)# jieba custom setting.jieba.set_dictionary('jieba_dict/dict.txt.big')# load stopwords setstopword_set = set()with open('jieba_dict/stopwords.txt','r', encoding='utf-8') as stopwords:for stopword in stopwords:stopword_set.add(stopword.strip('\n'))output = open('wiki_seg.txt', 'w', encoding='utf-8')with open('wiki_zh_tw.txt', 'r', encoding='utf-8') as content :for texts_num, line in enumerate(content):line = line.strip('\n')words = jieba.cut(line, cut_all=False)for word in words:if word not in stopword_set:output.write(word + ' ')output.write('\n')if (texts_num + 1) % 10000 == 0:logging.info("已完成前 %d 行的斷詞" % (texts_num + 1))output.close()if __name__ == '__main__':main()

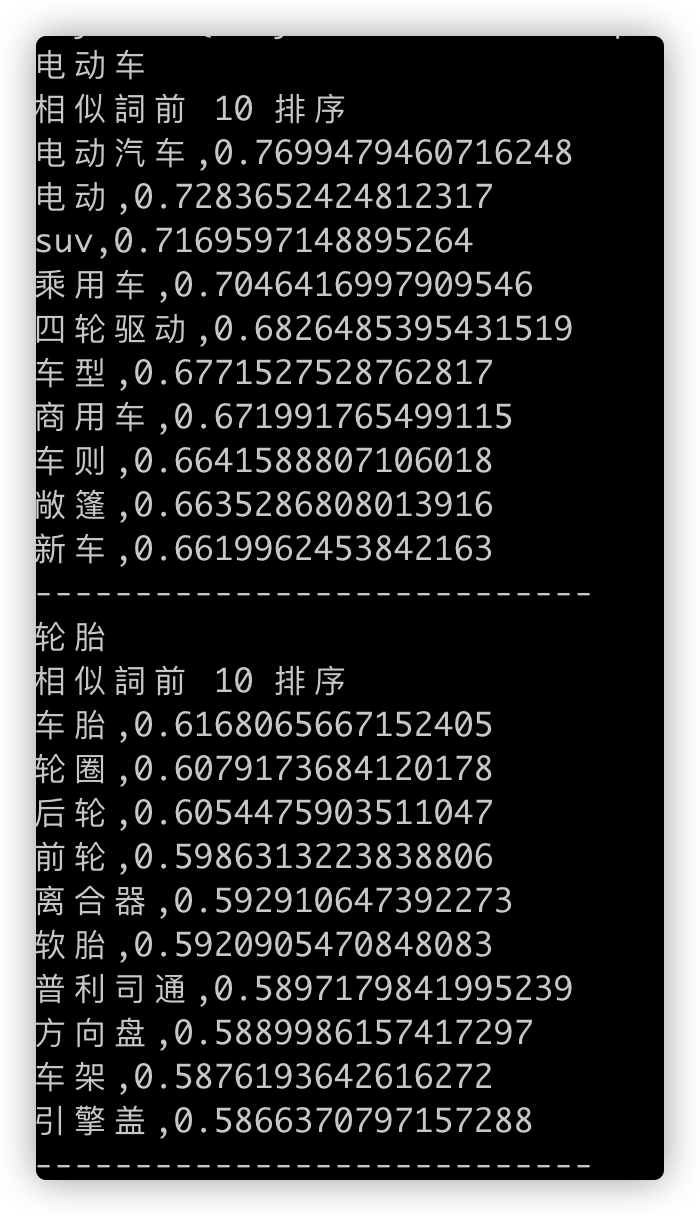

5、 模型训练和应用

python3 train.pypython3 demo.py

# train.pyimport loggingfrom gensim.models import word2vecdef main():logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)sentences = word2vec.LineSentence("wiki_seg.txt")# model = word2vec.Word2Vec(sentences, vector_size=250)model = word2vec.Word2Vec(sentences, sg=1, window=10, min_count=5, workers=6, vector_size=250)# 保存模型,供日後使用model.save("word2vec.model")# 模型讀取方式# model = word2vec.Word2Vec.load("your_model_name")if __name__ == "__main__":main()

# demo.pyfrom gensim.models import word2vecfrom gensim import modelsimport loggingdef main():logging.basicConfig(format='%(asctime)s : %(levelname)s : %(message)s', level=logging.INFO)model = models.Word2Vec.load('word2vec.model')print("提供 3 種測試模式\n")print("輸入一個詞,則去尋找前十個該詞的相似詞")print("輸入兩個詞,則去計算兩個詞的餘弦相似度")print("輸入三個詞,進行類比推理")while True:try:query = input()q_list = query.split()if len(q_list) == 1:print("相似詞前 10 排序")res = model.wv.most_similar(q_list[0], topn=10)for item in res:print(item[0] + "," + str(item[1]))elif len(q_list) == 2:print("計算 Cosine 相似度")res = model.wv.similarity(q_list[0], q_list[1])print(res)else:print("%s之於%s,如%s之於" % (q_list[0], q_list[2], q_list[1]))res = model.wv.most_similar([q_list[0], q_list[1]], [q_list[2]], topn=100)for item in res:print(item[0] + "," + str(item[1]))print("----------------------------")except Exception as e:print(repr(e))if __name__ == "__main__":main()

6、效果图

数据及代码:https://github.com/SeafyLiang/machine_learning_study/tree/master/nlp_study/gensim_word2vec