Anatomy of a Flink Cluster

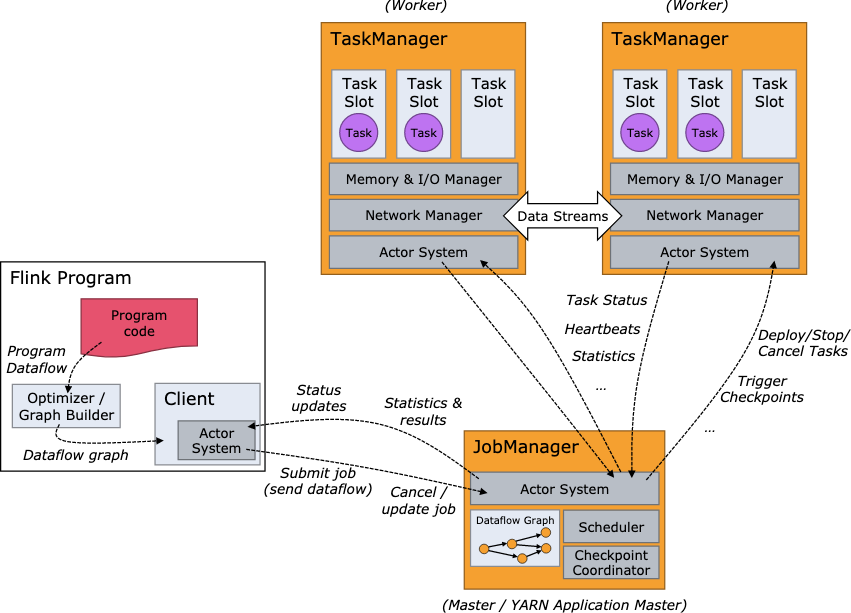

- The Flink runtime consists of two types of processes:

- a JobManager

- one or more TaskManagers.

- Client向JobManager发送dataflow,之后有两种选择

- detached mode: disconnect

- attached mode:stay connected

client可以是Java /Scala program,也可以是command line process

JobManager

负责schedule tasks, coordinates checkpoints, and coordinates recovery on failures

主要有三个不同的组件ResourceManager

- 负责资源申请/销毁,管理slots

- Flink实现了多种不同环境的task slots,如YARN、Mesos和Kubernetes等

- Dispatcher

- provides a REST interface to submit Flink applications for execution and starts a new JobMaster for each submitted job.

- It also runs the Flink WebUI to provide information about job executions.

JobMaster

taskmanager中最小的资源调度单元是task slot

- task slot数量代表任务的并行度

- 多个operators可能在一个slot中执行

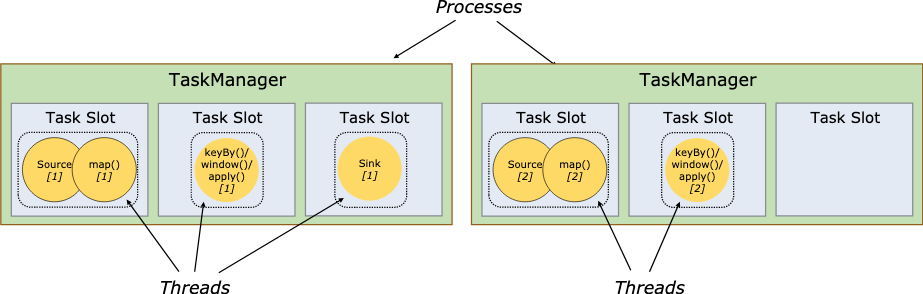

Tasks and Operator Chains

- Chaining operators together into tasks : 把多个operator整合为一个任务(如source-map)

- 避免线程(任务)间数据交互的开销,提升总体的吞吐量,降低延迟

Task Slots and Resources

- 一个TaskManager就是一个JVM进程,可以执行多个子任务

- taskslots数量控制并行度

- slot只管控内存,不隔离cpu?

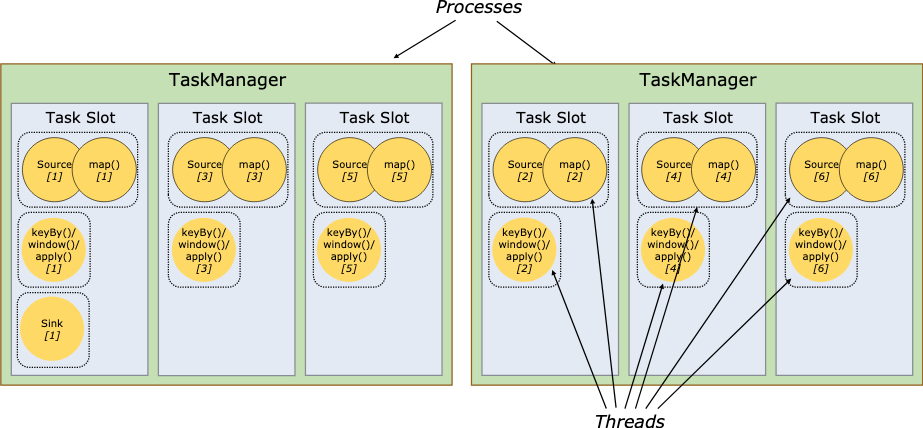

- flink允许子任务共享slot,只要他们是同一个job(允许是不同任务的子任务)

- one slot may hold an entire pipeline of the job。好处是:

- 更好地优化资源使用

- 没有太看明白这里英文

- Without slot sharing, the non-intensive source/map() subtasks would block as many resources as the resource intensive window subtasks.

- With slot sharing, increasing the base parallelism in our example from two to six yields full utilization of the slotted resources, while making sure that the heavy subtasks are fairly distributed among the TaskManagers.

同一时刻一个slot对应一个线程

考虑先后的话,一个slot可以执行多个subtask的计算线程。

Flink Application Execution

flink任务可以被提交到以下三种集群

- Flink Session Cluster

- 预先存在,长时间运行。

- 提交任务时向RecourceMangaer申请slot,job结束slot回收

- 所有job共享这一个集群,存在资源竞争

- 一个TaskManager crash,所有运行在之类TaskManager上的任务失败

- JobManager出问题,运行在这个集群上的所有任务都会受影响。

- 可以避免任务提交时,临时申请资源,启动TaskManager的开销

- Flink Job Cluster

- cluster manager (like YARN or Kubernetes) is used to spin up a cluster for each submitted job and this cluster is available to that job only

- 生命周期与Job紧密绑定

- JobManager有问题,仅影响当前任务

- 启动进程、申请资源有一定耗时

- Flink Application Cluster

- 生命周期与flink application绑定

- Flink集群只执行来自这个application的job

- In a Flink Application Cluster, the ResourceManager and Dispatcher are scoped to a single Flink Application, which provides a better separation of concerns than the Flink Session Cluster.