前提

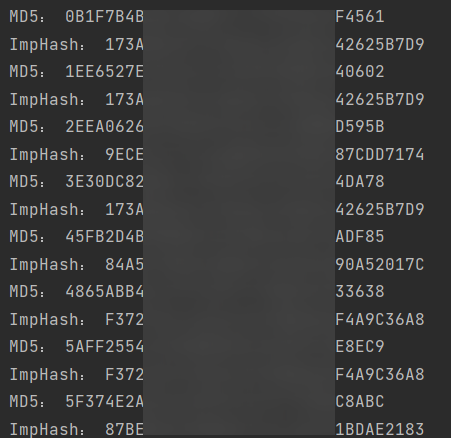

突然收到比较多数量的PE文件分析要去,通过文件大小和ImpHash进行分类,避免重复分析😣

🐍Python🐍

逻辑

- 遍历文件夹

- 获取文件MD5

- 获取ImpHash

代码

#coding=utf-8import osimport hashlibimport pefiledef GetFileMD5(filename):if not os.path.isfile(filename):print(filename)returnstrMD5 = hashlib.md5()f = open(filename, 'rb')while True:fContent = f.read()if not fContent:breakstrMD5.update(fContent)f.close()return strMD5.hexdigest().upper()def EnumFile(dir):for home, dirs, files in os.walk(dir):for dir in dirs:print(dir)for filename in files:pathFile = os.path.join(home, filename)nameNew = GetFileMD5(pathFile)#print(pathFile)print("MD5:", nameNew)ImpHash = GetImpHash(pathFile)print("ImpHash:", ImpHash)#os.rename(pathFile, nameNew)def GetImpHash(filename):file = pefile.PE(filename)return file.get_imphash().upper()path = "路径"if __name__ == "__main__":EnumFile(path)

升级

后因为要可视化成图表,新写了另一个Python:

数据处理 | pandas处理数据后输出为Excel表格