While reading DeblurGAN-v2: Deblurring (Orders-of-Magnitude) Faster and Better, I came across this passage:

To take advantage of both global and local features, we propose to use a double-scale discriminator, consisting of one local branch that operates on patch levels like [13] did, and the other global branch that feeds the full input image.

Yet in its open-source code, both discriminators are fed the full image, which left me feeling misled.

I then looked into the PatchGAN part of the paper it cites, [13] Image-to-image translation with conditional adversarial networks:

Isola et al. [13] propose to use a PatchGAN discriminator which operates on the images patches of size 70 × 70, that proves to produce sharper results than the standard “global” discriminator that operates on the full image.

PatchGAN judges 70×70 patches in order to preserve fine, high-frequency detail. Where the 70×70 figure comes from was not obvious to me.

This post explains the PatchGAN used in pix2pix.

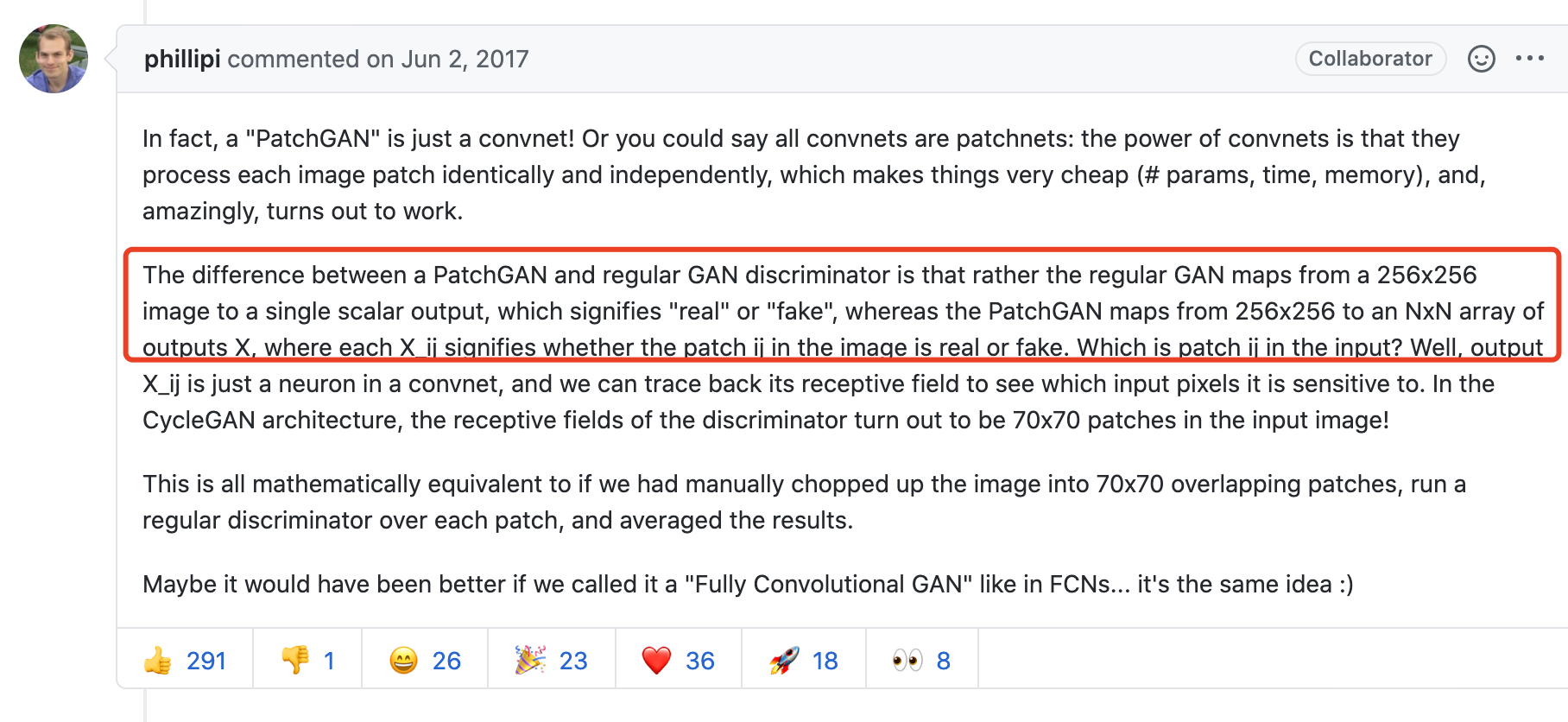

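A regular discriminator maps an image to a single real/fake judgement, whereas PatchGAN maps an image to an M×N matrix. Each entry of that matrix has a 70×70 receptive field, so each entry effectively scores one 70×70 patch of the input.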
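To make the M×N matrix concrete, the sketch below applies the standard convolution output-size formula, out = floor((in + 2p − k) / s) + 1, to the five 4×4 convolutions of the discriminator (strides 2, 2, 2, 1, 1). The 256×256 input and the helper names `conv_out` and `patch_map_size` are illustrative assumptions. Padding 1 is what the pix2pix repository uses; padding 2 is what `int(np.ceil((kw-1)/2))` evaluates to in the `NLayerDiscriminator` code later in this post.

```python
def conv_out(size, k, s, p):
    """Spatial output size of a Conv2d layer (dilation 1)."""
    return (size + 2 * p - k) // s + 1


def patch_map_size(input_size, padding, ksize=4, strides=(2, 2, 2, 1, 1)):
    """Side length of the PatchGAN score map for a square input.

    strides follows NLayerDiscriminator with n_layers=3: three stride-2 convs,
    one stride-1 conv, then the final 1-channel stride-1 conv.
    """
    size = input_size
    for s in strides:
        size = conv_out(size, ksize, s, padding)
    return size


print(patch_map_size(256, padding=1))  # 30 -> a 30x30 grid of patch scores
print(patch_map_size(256, padding=2))  # 35 -> a 35x35 grid of patch scores
```

Either way the discriminator returns a grid of scores rather than a single number; padding changes how many patches there are, not how large each one is.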

Where 70×70 comes from:

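```python
def f(output_size, ksize, stride):
    """Input size a conv layer (no padding) needs to produce `output_size` outputs."""
    return (output_size - 1) * stride + ksize


# Walk backwards from a single output value of the final layer
last_layer = f(output_size=1, ksize=4, stride=1)              # Receptive field: 4
fourth_layer = f(output_size=last_layer, ksize=4, stride=1)   # Receptive field: 7
third_layer = f(output_size=fourth_layer, ksize=4, stride=2)  # Receptive field: 16
second_layer = f(output_size=third_layer, ksize=4, stride=2)  # Receptive field: 34
first_layer = f(output_size=second_layer, ksize=4, stride=2)  # Receptive field: 70

print(f'Receptive field at the last layer: {last_layer}')
print(f'Receptive field at the first layer: {first_layer}')
# Receptive field at the last layer: 4
# Receptive field at the first layer: 70
```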

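Here `ksize` is the convolution kernel size. `f` inverts the convolution output-size formula out = (in − ksize) / stride + 1, so applying it from a single output value back through all five layers gives the size of the input window that one output value depends on.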
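The code above walks backwards from one output value. The same number can be obtained walking forwards with the common "jump" recurrence: each layer widens the receptive field by (k − 1) times the product of the strides of all earlier layers. The helper name `receptive_field` is hypothetical; the layer list mirrors the kernel sizes and strides used above. The accumulated stride also gives the distance, in input pixels, between the patches seen by neighbouring outputs.

```python
def receptive_field(layers):
    """Return (receptive field, total stride) for a stack of conv layers.

    layers: list of (kernel_size, stride) tuples, ordered from input to output.
    """
    rf, jump = 1, 1  # start from a single input pixel with unit spacing
    for k, s in layers:
        rf += (k - 1) * jump  # widen the window by (k-1) steps of the current spacing
        jump *= s             # spacing between adjacent outputs, in input pixels
    return rf, jump


# PatchGAN (n_layers=3): three stride-2 convs, then two stride-1 convs, all 4x4
patchgan = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]
rf, jump = receptive_field(patchgan)
print(rf, jump)  # 70 8
```

Since adjacent scores look at 70×70 windows shifted by only 8 pixels, the patches overlap heavily (by 62 pixels) instead of tiling the image. Padding does not enter the recurrence; it only shifts where each window sits.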

PatchGAN code:

```python
import functools

import numpy as np
import torch.nn as nn


# Defines the PatchGAN discriminator with the specified arguments.
class NLayerDiscriminator(nn.Module):
    def __init__(self, input_nc=3, ndf=64, n_layers=3, norm_layer=nn.BatchNorm2d, use_sigmoid=False, use_parallel=True):
        super(NLayerDiscriminator, self).__init__()
        self.use_parallel = use_parallel  # stored but not used in this snippet
        # Use a conv bias only with InstanceNorm2d; BatchNorm2d has affine
        # parameters of its own, so a conv bias would be redundant.
        if type(norm_layer) == functools.partial:
            use_bias = norm_layer.func == nn.InstanceNorm2d
        else:
            use_bias = norm_layer == nn.InstanceNorm2d

        kw = 4
        padw = int(np.ceil((kw - 1) / 2))  # = 2 for a 4x4 kernel
        # First layer: 4x4 conv, stride 2, no normalization
        sequence = [
            nn.Conv2d(input_nc, ndf, kernel_size=kw, stride=2, padding=padw),
            nn.LeakyReLU(0.2, True)
        ]

        # n_layers - 1 further stride-2 convs, doubling channels (capped at 8 * ndf)
        nf_mult = 1
        for n in range(1, n_layers):
            nf_mult_prev = nf_mult
            nf_mult = min(2**n, 8)
            sequence += [
                nn.Conv2d(ndf * nf_mult_prev, ndf * nf_mult,
                          kernel_size=kw, stride=2, padding=padw, bias=use_bias),
                norm_layer(ndf * nf_mult),
                nn.LeakyReLU(0.2, True)
            ]

        # A stride-1 conv that enlarges the receptive field without downsampling
        nf_mult_prev = nf_mult
        nf_mult = min(2**n_layers, 8)
        sequence += [
            nn.Conv2d(ndf * nf_mult_prev, ndf * nf_mult,
                      kernel_size=kw, stride=1, padding=padw, bias=use_bias),
            norm_layer(ndf * nf_mult),
            nn.LeakyReLU(0.2, True)
        ]

        # Final stride-1 conv down to one channel: one score per patch
        sequence += [nn.Conv2d(ndf * nf_mult, 1, kernel_size=kw, stride=1, padding=padw)]

        if use_sigmoid:
            sequence += [nn.Sigmoid()]

        self.model = nn.Sequential(*sequence)

    def forward(self, input):
        # Output shape: (batch, 1, M, N), a grid of per-patch scores
        return self.model(input)
```
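Because `use_sigmoid=False` by default, the model returns a map of raw logits, one per patch. A common way to turn this into a single loss is to broadcast the real/fake label to the shape of the map and apply binary cross-entropy with logits, averaged over all patches; this is what the `vanilla` mode of `GANLoss` in the pytorch-CycleGAN-and-pix2pix repository does with `nn.BCEWithLogitsLoss`. The dependency-free sketch below reproduces that averaging on a nested list. The function names `bce_with_logits` and `patch_bce_with_logits` and the toy 2×2 map are illustrative assumptions, not part of the original code.

```python
import math


def bce_with_logits(logit, target):
    """Numerically stable binary cross-entropy for a single logit."""
    # Equivalent to -[t*log(sigmoid(x)) + (1-t)*log(1 - sigmoid(x))]
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def patch_bce_with_logits(score_map, target_is_real):
    """Average the per-patch BCE over an M x N map of discriminator logits."""
    target = 1.0 if target_is_real else 0.0  # same label for every patch
    losses = [bce_with_logits(x, target) for row in score_map for x in row]
    return sum(losses) / len(losses)


# Toy 2x2 map of logits: each entry judges one (overlapping) patch of the input
score_map = [[2.0, -1.0],
             [0.0, 3.0]]
print(round(patch_bce_with_logits(score_map, target_is_real=True), 4))  # 0.5455
```

Averaging gives every patch an equal say, so a generator cannot satisfy the discriminator by getting only the global layout right while leaving local texture blurry.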

References:

https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/39

https://blog.csdn.net/xiaoxifei/article/details/86506955

https://blog.csdn.net/weixin_35576881/article/details/88058040 (receptive field calculation)

https://medium.com/@sahiltinky94/understanding-patchgan-9f3c8380c207 (explains how the receptive field is computed)