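Three bare-metal Linux machines are enough to deploy KubeSphere, and the Kubernetes (K8s) environment is installed automatically along the way.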

1. Environment preparation

- 4 CPU cores, 8 GB RAM (master)

- 8 CPU cores, 16 GB RAM × 2 (workers)
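The machine sizes above can be checked on each node before installing. The sketch below compares a CPU count and a memory size against this guide's numbers (4 cores / 8 GB for the master, 8 cores / 16 GB per worker). `check_node_size` and `mem_gb` are hypothetical helpers, and the thresholds are simply the ones listed here, not official KubeSphere minimums.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helper: check_node_size ROLE CPUS MEM_GB
# Thresholds are the sizes used in this guide, not official KubeSphere minimums.
check_node_size() {
  local role=$1 cpus=$2 mem_gb=$3 need_cpus need_mem
  case "$role" in
    master) need_cpus=4; need_mem=8 ;;
    worker) need_cpus=8; need_mem=16 ;;
    *) echo "unknown role: $role" >&2; return 2 ;;
  esac
  if [ "$cpus" -ge "$need_cpus" ] && [ "$mem_gb" -ge "$need_mem" ]; then
    echo "OK: $role has ${cpus}c/${mem_gb}g"
  else
    echo "TOO SMALL: $role needs ${need_cpus}c/${need_mem}g, has ${cpus}c/${mem_gb}g"
    return 1
  fi
}

# Total memory in GiB, rounded up: MemTotal (in kB) is usually a little below
# the nominal size because the kernel reserves some memory.
mem_gb() {
  awk '/^MemTotal:/ {printf "%d\n", ($2 + 1048575) / 1048576}' /proc/meminfo
}

# Usage on each machine, e.g. a worker:
#   check_node_size worker "$(nproc)" "$(mem_gb)"
```

Rounding memory up avoids rejecting an "8 GB" VM whose kernel reports slightly less than 8 GiB.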

2. Steps

2.0 Environment preparation (run on all 3 machines)

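    # Set the hostname (e.g. on the master: hostnamectl set-hostname master)

    # Install the dependencies needed by KubeKey / Kubernetes
    yum install -y socat
    yum install -y conntrack
    yum install -y ebtables
    yum install -y ipset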
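After the installs, a quick way to confirm that the four tools are actually on `PATH` on each machine is sketched below. `missing_tools` is a hypothetical helper; it relies only on the shell builtin `command -v` and assumes you run it as root (so `/usr/sbin`, where `conntrack`, `ebtables` and `ipset` usually live, is on `PATH`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each command name that is NOT found on PATH.
# Returns 1 if anything is missing, 0 otherwise.
missing_tools() {
  local tool status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      status=1
    fi
  done
  return "$status"
}

# Usage on each node (as root):
#   missing_tools socat conntrack ebtables ipset || echo "install the tools listed above"
```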

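Docker registry mirror (accelerator) configuration. The mirror URL below is an Aliyun accelerator address; replace it with your own if you have one.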

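    sudo mkdir -p /etc/docker
    sudo tee /etc/docker/daemon.json <<-'EOF'
    {
      "registry-mirrors": ["https://vovncyjm.mirror.aliyuncs.com"],
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      },
      "storage-driver": "overlay2"
    }
    EOF
    sudo systemctl daemon-reload
    # daemon-reload only reloads systemd unit files. If Docker is already
    # installed, restart it so the new daemon.json takes effect:
    # sudo systemctl restart docker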
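Once Docker is running with this `daemon.json`, you can confirm that `native.cgroupdriver=systemd` took effect. The sketch below pulls the value of the `Cgroup Driver:` line out of `docker info` output. `cgroup_driver` is a hypothetical helper, and it assumes the human-readable `docker info` format, which prints a line such as ` Cgroup Driver: systemd`; `docker info --format '{{.CgroupDriver}}'` returns the same value directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker info` output on stdin and print the
# value of its "Cgroup Driver:" line (e.g. "systemd" or "cgroupfs").
cgroup_driver() {
  awk -F': *' '/Cgroup Driver:/ {print $2; exit}'
}

# Usage on a node where Docker is running:
#   driver=$(docker info 2>/dev/null | cgroup_driver)
#   [ "$driver" = "systemd" ] || echo "cgroup driver is '$driver', expected systemd"
```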

2.1 Download KubeKey

    # Use the China (cn) download zone
    export KKZONE=cn
    curl -sfL https://get-kk.kubesphere.io | VERSION=v1.1.1 sh -
    chmod +x kk

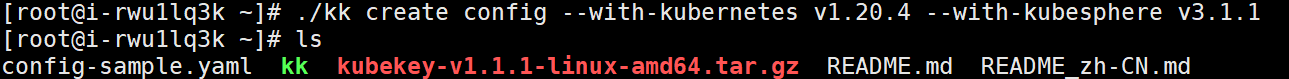

2.2 Create the cluster configuration file

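    ./kk create config --with-kubernetes v1.20.4 --with-kubesphere v3.1.1

This generates config-sample.yaml in the current directory. Edit its hosts and roleGroups for your own machines before creating the cluster (see the sample in the appendix).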

2.3 Create the cluster

    ./kk create cluster -f config-sample.yaml

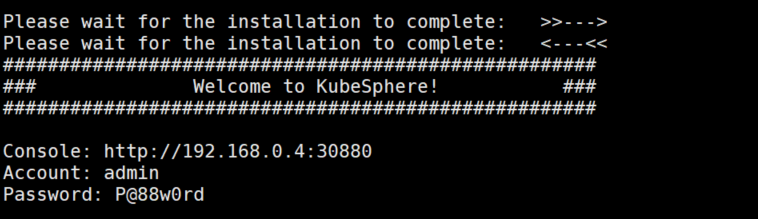
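When `./kk create cluster` finishes, it is worth checking that all three nodes (master, node1, node2) have joined and are `Ready` before following the KubeSphere installer. The sketch below reads `kubectl get nodes --no-headers` output (columns NAME, STATUS, ROLES, AGE, VERSION) and prints every node whose STATUS is not exactly `Ready`. `not_ready_nodes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
# Returns 1 if any such node is found, 0 otherwise.
not_ready_nodes() {
  awk '$2 != "Ready" {print $1; bad = 1} END {exit bad}'
}

# Usage on the master:
#   kubectl get nodes --no-headers | not_ready_nodes && echo "all nodes Ready"
```

Note that a status such as `Ready,SchedulingDisabled` is also reported, since it is not exactly `Ready`.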

2.4 Check installation progress

Follow the log of the ks-installer pod until the installation completes (Ctrl+C stops following):

    kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
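With the configuration in the appendix, the web console listens on NodePort 30880 (`console.port`). The sketch below extracts the console URL from the installer log text. It assumes the completion banner contains a line of the form `Console: http://<node-ip>:30880`, as KubeSphere 3.1 installers print; check your own log if the format differs. `console_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read ks-installer log text on stdin and print the URL
# from the first "Console: ..." line (assumed banner format, see above).
console_url() {
  awk '$1 == "Console:" {print $2; exit}'
}

# Usage (without -f, so the command returns once the current log is read):
#   kubectl logs -n kubesphere-system \
#     "$(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}')" \
#     | console_url
```

An empty result simply means the banner has not been printed yet.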

3. Appendix: config-sample.yaml

Replace the IP addresses with your machines' own internal IPs, and the SSH user and password with your own.

    apiVersion: kubekey.kubesphere.io/v1alpha1
    kind: Cluster
    metadata:
      name: sample
    spec:
      hosts:
      - {name: master, address: 192.168.0.4, internalAddress: 192.168.0.4, user: root, password: Gaoxi.123}
      - {name: node1, address: 192.168.0.3, internalAddress: 192.168.0.3, user: root, password: Gaoxi.123}
      - {name: node2, address: 192.168.0.2, internalAddress: 192.168.0.2, user: root, password: Gaoxi.123}
      roleGroups:
        etcd:
        - master
        master:
        - master
        worker:
        - node1
        - node2
      controlPlaneEndpoint:
        domain: lb.kubesphere.local
        address: ""
        port: 6443
      kubernetes:
        version: v1.20.4
        imageRepo: kubesphere
        clusterName: cluster.local
      network:
        plugin: calico
        kubePodsCIDR: 10.233.64.0/18
        kubeServiceCIDR: 10.233.0.0/18
      registry:
        registryMirrors: []
        insecureRegistries: []
      addons: []
    ---
    apiVersion: installer.kubesphere.io/v1alpha1
    kind: ClusterConfiguration
    metadata:
      name: ks-installer
      namespace: kubesphere-system
      labels:
        version: v3.1.1
    spec:
      persistence:
        storageClass: ""
      authentication:
        jwtSecret: ""
      zone: ""
      local_registry: ""
      etcd:
        monitoring: false
        endpointIps: localhost
        port: 2379
        tlsEnable: true
      common:
        redis:
          enabled: false
        redisVolumSize: 2Gi
        openldap:
          enabled: false
        openldapVolumeSize: 2Gi
        minioVolumeSize: 20Gi
        monitoring:
          endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
        es:
          elasticsearchMasterVolumeSize: 4Gi
          elasticsearchDataVolumeSize: 20Gi
          logMaxAge: 7
          elkPrefix: logstash
          basicAuth:
            enabled: false
            username: ""
            password: ""
          externalElasticsearchUrl: ""
          externalElasticsearchPort: ""
      console:
        enableMultiLogin: true
        port: 30880
      alerting:
        enabled: false
        # thanosruler:
        #   replicas: 1
        #   resources: {}
      auditing:
        enabled: false
      devops:
        enabled: false
        jenkinsMemoryLim: 2Gi
        jenkinsMemoryReq: 1500Mi
        jenkinsVolumeSize: 8Gi
        jenkinsJavaOpts_Xms: 512m
        jenkinsJavaOpts_Xmx: 512m
        jenkinsJavaOpts_MaxRAM: 2g
      events:
        enabled: false
        ruler:
          enabled: true
          replicas: 2
      logging:
        enabled: false
        logsidecar:
          enabled: true
          replicas: 2
      metrics_server:
        enabled: false
      monitoring:
        storageClass: ""
        prometheusMemoryRequest: 400Mi
        prometheusVolumeSize: 20Gi
      multicluster:
        clusterRole: none
      network:
        networkpolicy:
          enabled: false
        ippool:
          type: none
        topology:
          type: none
      openpitrix:
        store:
          enabled: false
      servicemesh:
        enabled: false
      kubeedge:
        enabled: false
        cloudCore:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          cloudhubPort: "10000"
          cloudhubQuicPort: "10001"
          cloudhubHttpsPort: "10002"
          cloudstreamPort: "10003"
          tunnelPort: "10004"
          cloudHub:
            advertiseAddress:
              - ""
            nodeLimit: "100"
          service:
            cloudhubNodePort: "30000"
            cloudhubQuicNodePort: "30001"
            cloudhubHttpsNodePort: "30002"
            cloudstreamNodePort: "30003"
            tunnelNodePort: "30004"
        edgeWatcher:
          nodeSelector: {"node-role.kubernetes.io/worker": ""}
          tolerations: []
          edgeWatcherAgent:
            nodeSelector: {"node-role.kubernetes.io/worker": ""}
            tolerations: []
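Since every machine needs its own line under `spec.hosts`, a small helper can print those entries in the same flow-mapping style as the sample above. `hosts_entry` is hypothetical; like the sample, it uses the same IP for `address` and `internalAddress`, and the values in the usage lines are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: hosts_entry NAME IP USER PASSWORD
# Prints one spec.hosts line for config-sample.yaml, using the same IP for
# address and internalAddress (as in the sample config). Quote the password
# yourself if it contains YAML special characters such as , { } or #.
hosts_entry() {
  printf '  - {name: %s, address: %s, internalAddress: %s, user: %s, password: %s}\n' \
    "$1" "$2" "$2" "$3" "$4"
}

# Usage (placeholder IPs and password; replace with your own):
#   hosts_entry master 10.0.0.10 root 'your-password'
#   hosts_entry node1  10.0.0.11 root 'your-password'
#   hosts_entry node2  10.0.0.12 root 'your-password'
```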