https://www.bilibili.com/medialist/play/watchlater/BV18b4y1k7no

This video explains how to implement the GCN formula in code.

DGL

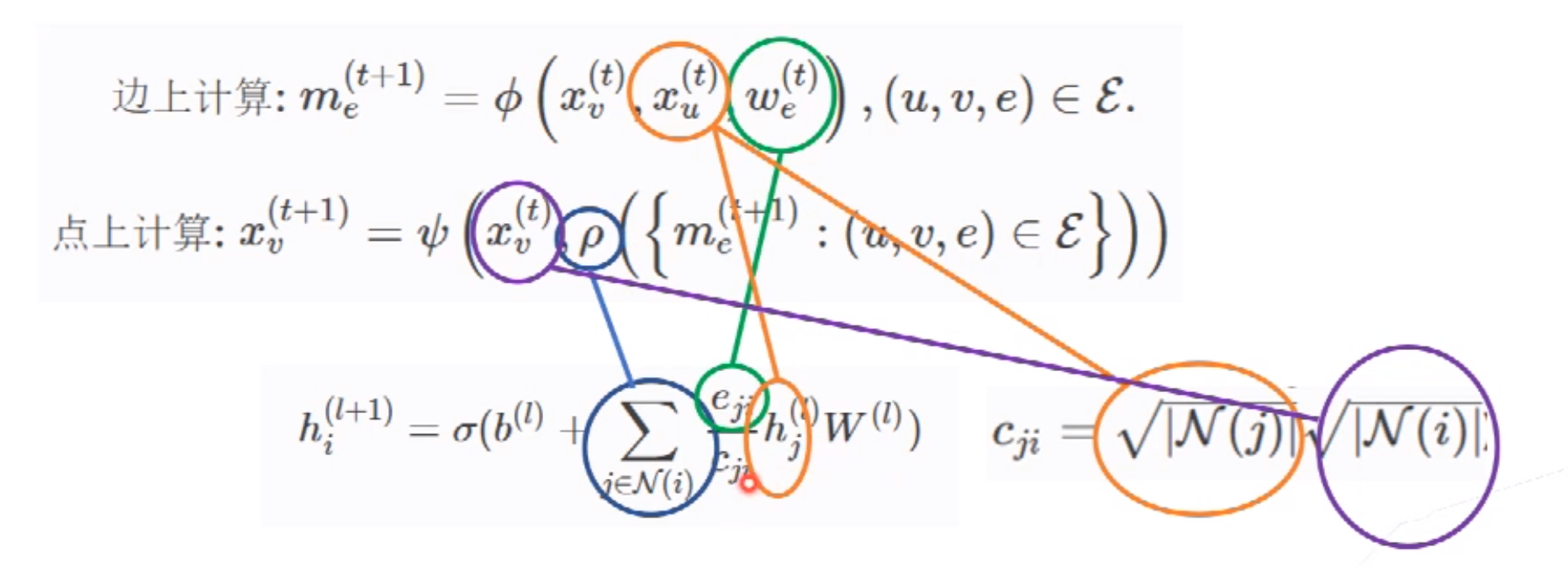

Message-passing paradigm

How is the feature of node v updated at step t+1? Suppose v has neighbors such as u; the steps below use neighbor u as the example.

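- At step t+1, collect the step-t features of node u, node v, and the edge e = (u, v) between them, and use them to build the message that u sends to v along e.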

- Aggregate the messages sent from all of v's neighbors, combine the result with v's own feature, and use that to update v.
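A toy NumPy sketch of one round of these two steps, assuming the simplest choices: the message is the source feature (optionally scaled by a scalar edge weight), messages are summed, and the update adds that sum to the node's own feature. `message_passing_step` is a hypothetical helper, not a DGL function; DGL expresses the same steps with `graph.update_all(message_func, reduce_func)`.

```python
import numpy as np

def message_passing_step(src, dst, h, edge_feat=None):
    """One round of message passing on a directed graph (toy sketch).

    src, dst:  edge endpoint lists (edge i goes src[i] -> dst[i])
    h:         node features at step t, shape (num_nodes, dim)
    edge_feat: optional per-edge scalar weights w_e (assumed, for illustration)
    """
    src = np.asarray(src)
    dst = np.asarray(dst)
    # Message: the source node's feature, optionally scaled by the edge feature
    msgs = h[src]
    if edge_feat is not None:
        msgs = msgs * np.asarray(edge_feat, dtype=float)[:, None]
    # Aggregate: sum all messages arriving at each destination node
    agg = np.zeros_like(h)
    np.add.at(agg, dst, msgs)
    # Update: combine the aggregate with the node's own feature (here: add)
    return h + agg

h = np.array([[1.0], [2.0], [3.0]])
# Edges 0 -> 2 and 1 -> 2: node 2 becomes 3 + 1 + 2 = 6
print(message_passing_step([0, 1], [2, 2], h).ravel())  # [1. 2. 6.]
```

Swapping the sum for a mean or max, or the addition for a small neural network, gives other GNN layers in the same framework.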

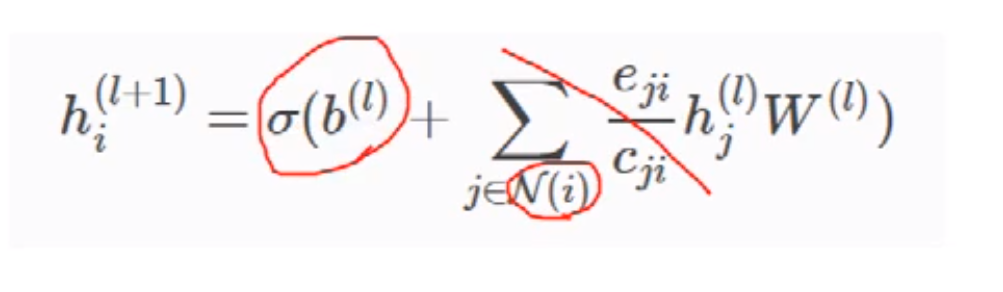

GraphConv
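For reference, with `norm='both'` and no `edge_weight`, the layer computes the formula below. This restates the one in the DGL `GraphConv` documentation; in the code further down, |N(j)| is the out-degree of source node j and |N(i)| the in-degree of destination node i, each clamped to at least 1.

$$
h_i^{(l+1)} = \sigma\left(b^{(l)} + \sum_{j \in \mathcal{N}(i)} \frac{1}{c_{ji}}\, h_j^{(l)} W^{(l)}\right),
\qquad c_{ji} = \sqrt{|\mathcal{N}(j)|}\,\sqrt{|\mathcal{N}(i)|}
$$

With `norm='left'` the factor is 1/|N(j)| only, with `norm='right'` it is 1/|N(i)| only, and `norm='none'` drops it.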

Parameter notes

When a node's in-degree is 0, it receives no messages, so its output is only the bias, which is meaningless.

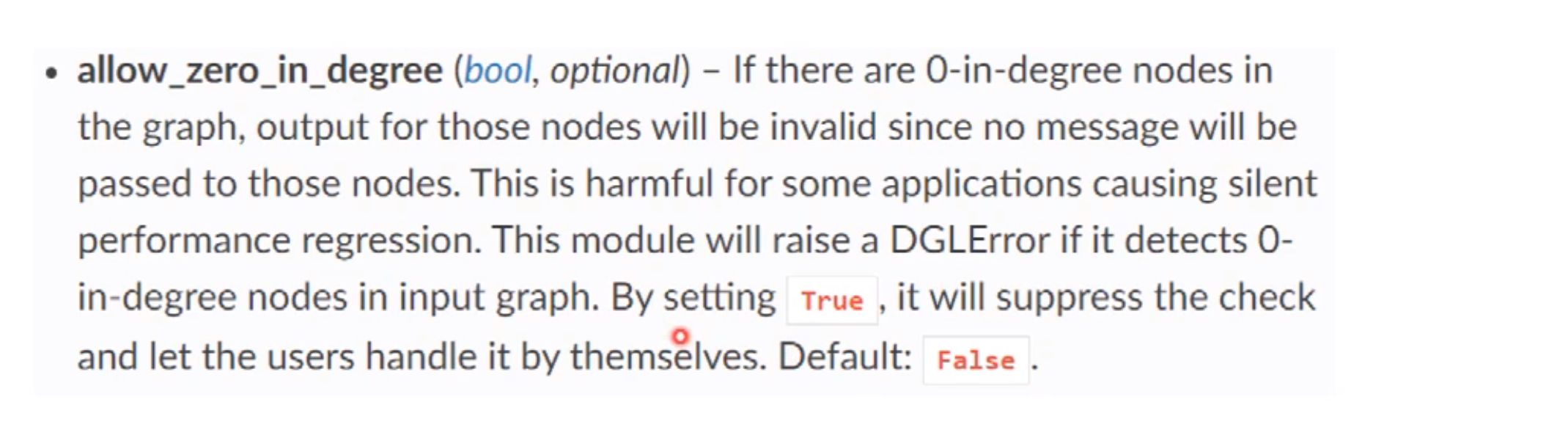

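However, the constructor parameter `allow_zero_in_degree` controls whether this is allowed. With the default `False`, `forward()` raises a `DGLError` if the graph has any 0-in-degree node; with `True`, the check is skipped.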

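(Alternatively, add a self-loop to every node when building the graph, `g = dgl.add_self_loop(g)`, so that no node has in-degree 0. This only works for homogeneous graphs.)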
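A NumPy sketch (not DGL code) of the zero-in-degree problem on the edge list from the DGL example in these notes: node 5 never appears as a destination, so its aggregated message is an empty sum and its output is exactly the bias. Appending one self-loop per node, which is conceptually what `dgl.add_self_loop` does for a homogeneous graph, removes the zero-in-degree nodes. The helpers `in_degrees` and `add_self_loops` are hypothetical names.

```python
import numpy as np

def in_degrees(dst, num_nodes):
    # Number of edges pointing into each node
    return np.bincount(np.asarray(dst), minlength=num_nodes)

def add_self_loops(src, dst, num_nodes):
    # Append one edge v -> v per node (conceptual version of dgl.add_self_loop)
    loops = list(range(num_nodes))
    return list(src) + loops, list(dst) + loops

# Same edge list as the DGL example in these notes
src, dst = [0, 1, 2, 3, 2, 5], [1, 2, 3, 4, 0, 3]
print(np.flatnonzero(in_degrees(dst, 6) == 0))  # [5]

# A 0-in-degree node aggregates an empty sum, so its output is just the bias
h = np.ones((6, 3))
agg = np.zeros_like(h)
np.add.at(agg, np.asarray(dst), h[np.asarray(src)])
W = np.random.default_rng(0).normal(size=(3, 2))
b = np.array([0.1, -0.2])
out = agg @ W + b
print(np.allclose(out[5], b))  # True

src2, dst2 = add_self_loops(src, dst, 6)
print((in_degrees(dst2, 6) == 0).any())  # False
```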

The forward() function

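First, the source node's feature `h` is copied onto each edge as the message `m` (built-in `fn.copy_src('h', 'm')`, named `fn.copy_u` in newer DGL versions).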

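If `edge_weight` is passed, the message `m` is instead `h` multiplied by that edge's weight (`fn.u_mul_e('h', '_edge_weight', 'm')`).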
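With `edge_weight`, each message is the source feature times its edge's weight, and summing those messages at each destination is the same as multiplying by a weighted adjacency matrix. A NumPy sketch of what `fn.u_mul_e` followed by `fn.sum` computes; the helpers `aggregate_u_mul_e` and `weighted_adjacency` are hypothetical, for illustration only.

```python
import numpy as np

def aggregate_u_mul_e(src, dst, h, edge_weight, num_nodes):
    """Per node v: sum of h[u] * w_e over incoming edges e = (u -> v)."""
    src, dst = np.asarray(src), np.asarray(dst)
    w = np.asarray(edge_weight, dtype=float)
    msgs = h[src] * w[:, None]                # message m_e = h_u * w_e, one per edge
    out = np.zeros((num_nodes, h.shape[1]))
    np.add.at(out, dst, msgs)                 # sum the messages at each destination
    return out

def weighted_adjacency(src, dst, edge_weight, num_nodes):
    # A[v, u] accumulates the weight of every edge u -> v
    A = np.zeros((num_nodes, num_nodes))
    np.add.at(A, (np.asarray(dst), np.asarray(src)),
              np.asarray(edge_weight, dtype=float))
    return A

src, dst = [0, 1, 2, 3, 2, 5], [1, 2, 3, 4, 0, 3]
w = [0.5, 1.0, 2.0, 1.0, 3.0, 0.25]
h = np.arange(12, dtype=float).reshape(6, 2)
A = weighted_adjacency(src, dst, w, 6)
print(np.allclose(aggregate_u_mul_e(src, dst, h, w, 6), A @ h))  # True
```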

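The order of the weight multiplication is chosen from the input and output feature sizes, whichever needs less computation: if `in_feats > out_feats`, multiply by W first and then aggregate; otherwise, aggregate first and then multiply by W.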
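The reordering is valid because matrix multiplication is associative and the degree normalizations only rescale rows, so they commute with right-multiplication by W. Message passing costs roughly (number of edges) × (feature width), so aggregating at the smaller of `in_feats` and `out_feats` is cheaper. A dense NumPy sketch, with a hypothetical helper `graph_conv_dense` and a plain matrix `A` standing in for the (normalized) aggregation:

```python
import numpy as np

def graph_conv_dense(A, X, W):
    """Compute A @ X @ W, choosing the order the way GraphConv.forward does.

    A: (n, n) aggregation matrix (row v sums over v's in-neighbours),
    X: (n, in_feats) node features, W: (in_feats, out_feats) weight.
    """
    in_feats, out_feats = W.shape
    if in_feats > out_feats:
        # Multiply by W first: aggregation then moves out_feats-wide vectors
        return A @ (X @ W)
    # Aggregate first: aggregation moves in_feats-wide vectors, then apply W
    return (A @ X) @ W

rng = np.random.default_rng(0)
A = (rng.random((5, 5)) < 0.4).astype(float)
X = rng.normal(size=(5, 64))
W = rng.normal(size=(64, 2))
# Both orders agree up to floating-point rounding
print(np.allclose(graph_conv_dense(A, X, W), (A @ X) @ W))  # True
```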

Example

```python
import dgl
import numpy as np
import torch as th
from dgl.nn import GraphConv

# Case 1: homogeneous graph
g = dgl.graph(([0, 1, 2, 3, 2, 5], [1, 2, 3, 4, 0, 3]))
g = dgl.add_self_loop(g)  # add self-loops here
feat = th.ones(6, 10)
conv = GraphConv(10, 2, norm='both', weight=True, bias=True)
res = conv(g, feat)
print(res)
# The weight is randomly initialized (xavier_uniform_), so your numbers will differ:
# tensor([[ 1.3326, -0.2797],
#         [ 1.4673, -0.3080],
#         [ 1.3326, -0.2797],
#         [ 1.6871, -0.3541],
#         [ 1.7711, -0.3717],
#         [ 1.0375, -0.2178]], grad_fn=<AddBackward0>)

# allow_zero_in_degree example: the same graph without self-loops
g = dgl.graph(([0, 1, 2, 3, 2, 5], [1, 2, 3, 4, 0, 3]))
conv = GraphConv(10, 2, norm='both', weight=True, bias=True, allow_zero_in_degree=True)
res = conv(g, feat)
print(res)
# tensor([[-0.2473, -0.4631],
#         [-0.3497, -0.6549],
#         [-0.3497, -0.6549],
#         [-0.4221, -0.7905],
#         [-0.3497, -0.6549],
#         [ 0.0000,  0.0000]], grad_fn=<AddBackward0>)
# Node 5 has in-degree 0, so its row is just the bias (initialized to zeros)
```
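Why do the printed rows repeat and scale the way they do? Every input row is all ones and all nodes share W, so with `norm='both'` row v of the output is a scalar s_v times the same vector (ones @ W), plus the bias, which starts at zero. The NumPy sketch below (hypothetical helper `both_norm_coefficients`) computes s_v from the degrees. In the run without self-loops, for instance, -0.2473 / -0.3497 ≈ 0.707 = s_0 / s_1, and node 5 gets s_5 = 0.

```python
import numpy as np

def both_norm_coefficients(src, dst, num_nodes):
    """Scalar s_v such that, for all-ones features and norm='both',
    GraphConv's output row v equals s_v * (ones @ W) + bias."""
    src, dst = np.asarray(src), np.asarray(dst)
    # Degrees clamped to >= 1, as in the DGL code (.clamp(min=1))
    out_deg = np.clip(np.bincount(src, minlength=num_nodes), 1, None).astype(float)
    in_deg = np.clip(np.bincount(dst, minlength=num_nodes), 1, None).astype(float)
    s = np.zeros(num_nodes)
    np.add.at(s, dst, out_deg[src] ** -0.5)  # source-side normalization, then sum
    return s * in_deg ** -0.5                 # destination-side normalization

# Case without self-loops
s = both_norm_coefficients([0, 1, 2, 3, 2, 5], [1, 2, 3, 4, 0, 3], 6)
print(np.round(s, 4))  # approx. 0.7071, 1, 1, 1.2071, 1, 0
```

Running the same helper on the self-looped edge list gives values proportional to the first printed tensor as well.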

Code

Implementation code with the docstring removed. Official source: https://docs.dgl.ai/_modules/dgl/nn/pytorch/conv/graphconv.html#GraphConv

```python
import torch as th
from torch import nn
from torch.nn import init

import dgl.function as fn
from dgl.base import DGLError
from dgl.utils import expand_as_pair


class GraphConv(nn.Module):
    def __init__(self,
                 in_feats,
                 out_feats,
                 norm='both',
                 weight=True,
                 bias=True,
                 activation=None,
                 allow_zero_in_degree=False):
        super(GraphConv, self).__init__()
        if norm not in ('none', 'both', 'right', 'left'):
            raise DGLError('Invalid norm value. Must be either "none", "both", "right" or "left".'
                           ' But got "{}".'.format(norm))
        self._in_feats = in_feats
        self._out_feats = out_feats
        self._norm = norm
        self._allow_zero_in_degree = allow_zero_in_degree

        # Learnable W of shape (in_feats, out_feats); can instead be passed to forward()
        if weight:
            self.weight = nn.Parameter(th.Tensor(in_feats, out_feats))
        else:
            self.register_parameter('weight', None)

        if bias:
            self.bias = nn.Parameter(th.Tensor(out_feats))
        else:
            self.register_parameter('bias', None)

        self.reset_parameters()

        self._activation = activation

    def reset_parameters(self):
        # Xavier-uniform init for W, zeros for the bias
        if self.weight is not None:
            init.xavier_uniform_(self.weight)
        if self.bias is not None:
            init.zeros_(self.bias)

    def set_allow_zero_in_degree(self, set_value):
        self._allow_zero_in_degree = set_value

    def forward(self, graph, feat, weight=None, edge_weight=None):
        with graph.local_scope():
            # Refuse graphs with 0-in-degree nodes unless explicitly allowed
            if not self._allow_zero_in_degree:
                if (graph.in_degrees() == 0).any():
                    raise DGLError('There are 0-in-degree nodes in the graph, '
                                   'output for those nodes will be invalid. '
                                   'This is harmful for some applications, '
                                   'causing silent performance regression. '
                                   'Adding self-loop on the input graph by '
                                   'calling `g = dgl.add_self_loop(g)` will resolve '
                                   'the issue. Setting ``allow_zero_in_degree`` '
                                   'to be `True` when constructing this module will '
                                   'suppress the check and let the code run.')
            # Message: copy source feature 'h' onto each edge as 'm'
            # (copy_u replaces the deprecated alias fn.copy_src)
            aggregate_fn = fn.copy_u('h', 'm')
            if edge_weight is not None:
                assert edge_weight.shape[0] == graph.num_edges()
                graph.edata['_edge_weight'] = edge_weight
                # Message: source feature times edge weight
                aggregate_fn = fn.u_mul_e('h', '_edge_weight', 'm')

            # (BarclayII) For RGCN on heterogeneous graphs we need to support GCN on bipartite.
            feat_src, feat_dst = expand_as_pair(feat, graph)
            # Source-side normalization by out-degree
            if self._norm in ['left', 'both']:
                degs = graph.out_degrees().float().clamp(min=1)
                if self._norm == 'both':
                    norm = th.pow(degs, -0.5)
                else:
                    norm = 1.0 / degs
                shp = norm.shape + (1,) * (feat_src.dim() - 1)
                norm = th.reshape(norm, shp)
                feat_src = feat_src * norm

            if weight is not None:
                if self.weight is not None:
                    raise DGLError('External weight is provided while at the same time the'
                                   ' module has defined its own weight parameter. Please'
                                   ' create the module with flag weight=False.')
            else:
                weight = self.weight

            if self._in_feats > self._out_feats:
                # mult W first to reduce the feature size for aggregation.
                if weight is not None:
                    feat_src = th.matmul(feat_src, weight)
                graph.srcdata['h'] = feat_src
                graph.update_all(aggregate_fn, fn.sum(msg='m', out='h'))
                rst = graph.dstdata['h']
            else:
                # aggregate first then mult W
                graph.srcdata['h'] = feat_src
                graph.update_all(aggregate_fn, fn.sum(msg='m', out='h'))
                rst = graph.dstdata['h']
                if weight is not None:
                    rst = th.matmul(rst, weight)

            # Destination-side normalization by in-degree
            if self._norm in ['right', 'both']:
                degs = graph.in_degrees().float().clamp(min=1)
                if self._norm == 'both':
                    norm = th.pow(degs, -0.5)
                else:
                    norm = 1.0 / degs
                shp = norm.shape + (1,) * (feat_dst.dim() - 1)
                norm = th.reshape(norm, shp)
                rst = rst * norm

            if self.bias is not None:
                rst = rst + self.bias

            if self._activation is not None:
                rst = self._activation(rst)

            return rst

    def extra_repr(self):
        summary = 'in={_in_feats}, out={_out_feats}'
        summary += ', normalization={_norm}'
        if '_activation' in self.__dict__:
            summary += ', activation={_activation}'
        return summary.format(**self.__dict__)
```
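To follow the whole forward pass without DGL, here is a dense NumPy restatement for a homogeneous graph given as edge lists. It is a sketch under simplifying assumptions: no bipartite or heterogeneous input, no external weight argument, no zero-in-degree check, and `ValueError` in place of `DGLError`. `graph_conv_forward` is a hypothetical name.

```python
import numpy as np

def graph_conv_forward(src, dst, X, W, b=None, norm='both',
                       edge_weight=None, activation=None):
    """NumPy re-statement of GraphConv.forward (sketch).

    src, dst: edge lists (edge i goes src[i] -> dst[i]); X: (n, in_feats);
    W: (in_feats, out_feats); b: (out_feats,) or None.
    """
    if norm not in ('none', 'both', 'right', 'left'):
        raise ValueError('norm must be "none", "both", "right" or "left"')
    src, dst = np.asarray(src), np.asarray(dst)
    n = X.shape[0]
    w = np.ones(len(src)) if edge_weight is None else np.asarray(edge_weight, dtype=float)
    feat = X.astype(float)
    if norm in ('left', 'both'):
        # Scale each source node by its out-degree (clamped to >= 1)
        out_deg = np.clip(np.bincount(src, minlength=n), 1, None).astype(float)
        feat = feat * (out_deg ** (-0.5 if norm == 'both' else -1.0))[:, None]
    in_feats, out_feats = W.shape
    if in_feats > out_feats:
        feat = feat @ W                           # multiply by W first
    rst = np.zeros((n, feat.shape[1]))
    np.add.at(rst, dst, feat[src] * w[:, None])   # sum of (weighted) messages
    if in_feats <= out_feats:
        rst = rst @ W                             # aggregate first, then W
    if norm in ('right', 'both'):
        # Scale each destination node by its in-degree (clamped to >= 1)
        in_deg = np.clip(np.bincount(dst, minlength=n), 1, None).astype(float)
        rst = rst * (in_deg ** (-0.5 if norm == 'both' else -1.0))[:, None]
    if b is not None:
        rst = rst + b
    if activation is not None:
        rst = activation(rst)
    return rst

src, dst = [0, 1, 2, 3, 2, 5], [1, 2, 3, 4, 0, 3]
X = np.ones((6, 10))
W = np.full((10, 2), 0.1)
print(graph_conv_forward(src, dst, X, W, norm='right')[:, 0])  # mean over in-neighbours
```

With `norm='right'` and all-ones input, every node with at least one in-neighbour gets the same value, since the aggregation becomes a mean; node 5 stays at the bias.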