Transformer Explained (Attention Is All You Need)

https://github.com/dk-liang/Awesome-Visual-Transformer

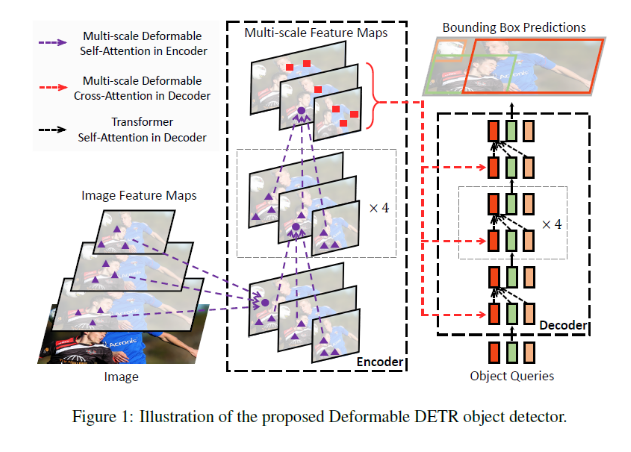

Deformable DETR: Deformable Transformers for End-to-End Object Detection

1、Compiling CUDA operators

    cd ./models/ops
    sh ./make.sh
    # unit test (should see all checking is True)
    python test.py

2、Prepare datasets and annotations

    python tools/convert_COCOText_to_coco.py
    python tools/convert_ICDAR15_to_coco.py
    python tools/convert_ICDAR13_to_coco.py
    python tools/convert_SynthText_to_coco.py
    python tools/convert_VISD_to_coco.py
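
The conversion scripts themselves are not shown here. As a rough illustration of the target format they share, the sketch below builds a minimal COCO-style detection dictionary from axis-aligned text boxes. The function name `to_coco`, the input tuple layout, and the single `text` category are assumptions made for illustration, not the repository's actual code.

```python
import json


def to_coco(samples):
    """Build a COCO-style detection dict.

    `samples` is a hypothetical input layout: a list of
    (file_name, width, height, boxes) tuples, where each box is
    (x_min, y_min, x_max, y_max) in pixels.
    """
    images, annotations = [], []
    ann_id = 1
    for image_id, (file_name, width, height, boxes) in enumerate(samples, start=1):
        images.append({"id": image_id, "file_name": file_name,
                       "width": width, "height": height})
        for x_min, y_min, x_max, y_max in boxes:
            w, h = x_max - x_min, y_max - y_min
            annotations.append({
                "id": ann_id,
                "image_id": image_id,
                "category_id": 1,
                "bbox": [x_min, y_min, w, h],  # COCO stores [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
            ann_id += 1
    categories = [{"id": 1, "name": "text"}]  # assumed: one "text" class
    return {"images": images, "annotations": annotations, "categories": categories}


if __name__ == "__main__":
    coco = to_coco([("img_1.jpg", 1280, 720, [(10, 20, 110, 60)])])
    print(json.dumps(coco, indent=2))
```

Real text datasets often annotate polygons or rotated quadrilaterals; a converter would then also need to derive an enclosing box (or fill the `segmentation` field), which this sketch leaves out.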

3、Pretrain

    # Pretrain on COCOTEXT_v2
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/Pretrain_COCOTextV2 --dataset_file coco --coco_path /share/wuweijia/Data/COCOTextV2 --batch_size 2 --with_box_refine --num_queries 500 --epochs 300 --lr_drop 150
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/Pretrain_COCOText --dataset_file cocotext --coco_path /share/wuweijia/Data/COCOTextV2 --batch_size 2 --with_box_refine --num_queries 300 --epochs 300 --lr_drop 150
    # Pretrain on COCOText
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/Pretrain_COCOText --dataset_file cocotext --coco_path /share/wuweijia/Data/COCOTextV2 --batch_size 2 --with_box_refine --num_queries 100 --epochs 300 --lr_drop 150
    # Pretrain on VISD
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/VISD --dataset_file VISD --coco_path /share/wuweijia/Data/VISD --batch_size 2 --with_box_refine --num_queries 300 --epochs 300 --lr_drop 150 --resume ./output/Pretrain_COCOTextV2/checkpoint.pth
    # Pretrain on SynthText
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/Pretrain_SynthText --dataset_file SynthText --coco_path /share/wuweijia/Data/SynthText --batch_size 2 --with_box_refine --num_queries 300 --epochs 300 --lr_drop 150
    # Pretrain on UnrealText
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/Pretrain_UnrealText --dataset_file UnrealText --coco_path /share/wuweijia/Data/UnrealText --batch_size 2 --with_box_refine --num_queries 300 --epochs 300 --lr_drop 150
    python3 tools/Pretrain_model_to_finetune.py
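
The pretraining commands differ only in a handful of flags (output directory, dataset name, data path, query count, schedule, optional checkpoint). One way to keep them consistent is to assemble them programmatically. The helper below is a hypothetical convenience wrapper, not part of the repository; it only uses flags that appear in the commands above.

```python
import shlex


def build_train_cmd(output_dir, dataset_file, coco_path, num_queries,
                    epochs, lr_drop, nproc_per_node=8, batch_size=2,
                    resume=None):
    """Assemble one launch command as an argument list (hypothetical helper)."""
    cmd = [
        "python3", "-m", "torch.distributed.launch",
        f"--nproc_per_node={nproc_per_node}", "--use_env", "main.py",
        "--output_dir", output_dir,
        "--dataset_file", dataset_file,
        "--coco_path", coco_path,
        "--batch_size", str(batch_size),
        "--with_box_refine",
        "--num_queries", str(num_queries),
        "--epochs", str(epochs),
        "--lr_drop", str(lr_drop),
    ]
    if resume is not None:
        # Only add --resume when a checkpoint path is given.
        cmd += ["--resume", resume]
    return cmd


if __name__ == "__main__":
    cmd = build_train_cmd(
        output_dir="./output/VISD",
        dataset_file="VISD",
        coco_path="/share/wuweijia/Data/VISD",
        num_queries=300,
        epochs=300,
        lr_drop=150,
        resume="./output/Pretrain_COCOTextV2/checkpoint.pth",
    )
    # Print the command for inspection; to launch it, pass `cmd` to
    # subprocess.run(cmd, check=True) from the repository root.
    print(shlex.join(cmd))
```

Building the command as a list rather than a string avoids spacing slips between adjacent flags.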

4、Train

    GPUS_PER_NODE=8 ./tools/run_dist_launch.sh 8 ./configs/r50_deformable_detr.sh
    python3 -m torch.distributed.launch --nproc_per_node=4 --use_env main.py --output_dir ./exps/r50_TotalText --dataset_file coco --coco_path /home/wuweijia/.jupyter/Data/Total-text --batch_size 1 --with_box_refine --num_queries 500 --epochs 50 --lr_drop 30
    # ICDAR15
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/ICDAR15 --dataset_file coco --coco_path /share/wuweijia/Data/ICDAR2015 --batch_size 2 --with_box_refine --num_queries 100 --epochs 500 --lr_drop 200 --resume ./output/Pretrain_COCOText/pretrain_coco.pth
    # MOVText
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/MOVText --dataset_file MOVText --coco_path /share/wuweijia/MyBenchMark/relabel/Dapan_lizhuang/final_FrameAnn/MOVText --batch_size 2 --with_box_refine --num_queries 100 --epochs 40 --lr_drop 20 --resume ./output/Pretrain_COCOText/pretrain_coco.pth
    # LSVTD
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/LSVTD --dataset_file LSVTD --coco_path /share/wuweijia/Data/VideoText/ICDAR21SVTS/SVTS --batch_size 2 --with_box_refine --num_queries 100 --epochs 40 --lr_drop 20 --resume ./output/Pretrain_COCOText/pretrain_coco.pth
    # RoadText1k
    python3 -m torch.distributed.launch --nproc_per_node=8 --use_env main.py --output_dir ./output/RoadText1k --dataset_file RoadText1k --coco_path /share/wuweijia/Data/VideoText/RoadText1k --batch_size 2 --with_box_refine --num_queries 100 --epochs 40 --lr_drop 20 --resume ./output/Pretrain_COCOText/pretrain_coco.pth
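
To make the `--epochs` / `--lr_drop` pairs easier to read: in upstream Deformable DETR, `--lr_drop` is the step size of a `torch.optim.lr_scheduler.StepLR` schedule, so the learning rate is multiplied by 0.1 every `lr_drop` epochs. The sketch below reproduces that arithmetic in plain Python. It assumes this fork keeps the upstream scheduler and the upstream default base rate of 2e-4; neither is confirmed by the commands above.

```python
def step_lr(base_lr, epoch, lr_drop, gamma=0.1):
    """Learning rate at a 0-indexed epoch under a step schedule.

    Mirrors StepLR(step_size=lr_drop, gamma=gamma) when the scheduler
    is stepped once at the end of every epoch.
    """
    return base_lr * gamma ** (epoch // lr_drop)


if __name__ == "__main__":
    base_lr = 2e-4  # assumed: upstream Deformable DETR default for --lr
    # Schedule for "--epochs 50 --lr_drop 30": the drop happens at epoch 30.
    for epoch in (0, 29, 30, 49):
        print(epoch, step_lr(base_lr, epoch, lr_drop=30))
```

Under these assumptions, a run with `--epochs 40 --lr_drop 20` spends its first half at the base rate and its second half at one tenth of it.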

5、Inference

    # ICDAR2015
    python3 -m torch.distributed.launch --nproc_per_node=1 --use_env main.py --output_dir ./output/ICDAR15 --dataset_file coco --coco_path /share/wuweijia/Data/ICDAR2015 --batch_size 1 --with_box_refine --num_queries 300 --resume ./output/ICDAR15/checkpoint.pth --eval --eval_dataset_file icdar15 --threshold 0.5 --show
    # evaluation
    cd tools/Evaluation_ICDAR15/
    python scipy.py
    # ICDAR13
    python3 -m torch.distributed.launch --nproc_per_node=1 --use_env main.py --output_dir ./output/ICDAR13 --dataset_file coco --coco_path /share/wuweijia/Data/ICDAR2013 --batch_size 1 --with_box_refine --num_queries 500 --resume ./output/Pretrain_COCOTextV2/ICDAR150.786.pth --eval --eval_dataset_file icdar13 --threshold 0.5
    # MOVText
    python3 -m torch.distributed.launch --nproc_per_node=1 --use_env main.py --output_dir ./output/MOVText --dataset_file MOVText --coco_path /share/wuweijia/MyBenchMark/relabel/Dapan_lizhuang/final_FrameAnn/MOVText --batch_size 1 --with_box_refine --num_queries 100 --resume ./output/MOVText/checkpoint.pth --eval --eval_dataset_file MOVText --threshold 0.5
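
The `--threshold 0.5` flag is specific to this fork and its implementation is not shown. A plausible reading is that it acts as a confidence cutoff on the per-query predictions before results are written out. The sketch below illustrates that idea only; the detection layout, the function name `filter_by_score`, and the choice of `>=` over `>` are all assumptions.

```python
def filter_by_score(detections, threshold=0.5):
    """Keep detections whose confidence score is at least `threshold`.

    `detections` is a hypothetical layout: a list of dicts with a
    "score" in [0, 1] and a "bbox" given as [x, y, width, height].
    """
    return [d for d in detections if d["score"] >= threshold]


if __name__ == "__main__":
    detections = [
        {"score": 0.91, "bbox": [12, 30, 80, 24]},
        {"score": 0.48, "bbox": [200, 41, 60, 20]},
        {"score": 0.50, "bbox": [15, 90, 120, 28]},
    ]
    for det in filter_by_score(detections, threshold=0.5):
        print(det["score"], det["bbox"])
```

With a fixed number of queries (100, 300, or 500 in the commands above), the model emits that many candidates per image, so some cutoff of this kind is needed before scoring against ICDAR-style ground truth.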