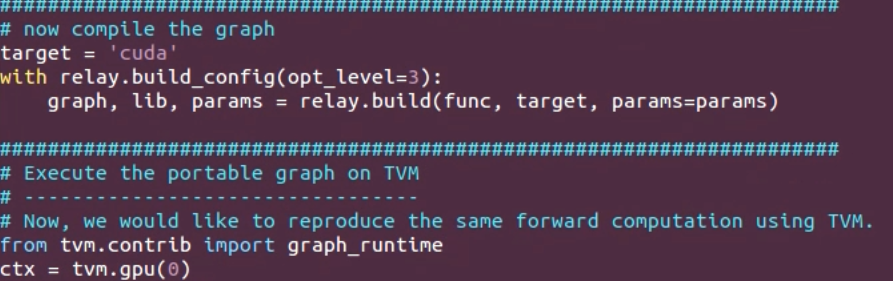

As shown in the figure above:

If a GPU is available, you can target CUDA:

    target = 'cuda'
    ctx = tvm.gpu(0)

If no GPU is available, you can run on the CPU with the `llvm` target:

    target = 'llvm'
    ctx = tvm.cpu(0)

Or you can target custom hardware:

    target = 'llvm'
    ctx = tvm.vacc(0)
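The target/context pairing above can be wrapped in a small helper so the rest of a script does not hard-code the device. This is only a sketch: `select_target` and `make_context` are hypothetical names introduced here, not part of TVM, and the helper covers only the `cuda` and `llvm` cases from the text.

```python
def select_target(has_gpu):
    """Map GPU availability to a (target string, context constructor name) pair."""
    if has_gpu:
        return "cuda", "gpu"   # pairs with ctx = tvm.gpu(0)
    return "llvm", "cpu"       # pairs with ctx = tvm.cpu(0)


def make_context(tvm_module, ctor_name, index=0):
    """Call tvm_module.<ctor_name>(index), for example tvm.gpu(0) or tvm.cpu(0)."""
    return getattr(tvm_module, ctor_name)(index)


# Hypothetical usage on a machine without a GPU.
target, ctor_name = select_target(has_gpu=False)
print(target, ctor_name)
```

With TVM installed, `ctx = make_context(tvm, ctor_name)` would then produce the context that matches the chosen target. Passing the module in as an argument keeps the helper importable even where TVM is not available.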

For details, see tutorials/relay_quick_start.py:

    import numpy as np

    from tvm import relay
    from tvm.relay import testing
    import tvm
    from tvm.contrib import graph_runtime

    ######################################################################
    # Define Neural Network in Relay
    batch_size = 1
    num_class = 1000
    image_shape = (3, 224, 224)
    data_shape = (batch_size,) + image_shape
    out_shape = (batch_size, num_class)

    mod, params = relay.testing.resnet.get_workload(
        num_layers=18, batch_size=batch_size, image_shape=image_shape)

    # set show_meta_data=True if you want to show meta data
    print(mod.astext(show_meta_data=False))

    ######################################################################
    # Compilation
    opt_level = 3
    target = tvm.target.cuda()
    with relay.build_config(opt_level=opt_level):
        graph, lib, params = relay.build_module.build(
            mod, target, params=params)

    ######################################################################
    # Run the generate library
    # create random input
    ctx = tvm.gpu()
    data = np.random.uniform(-1, 1, size=data_shape).astype("float32")
    # create module
    module = graph_runtime.create(graph, lib, ctx)
    # set input and parameters
    module.set_input("data", data)
    module.set_input(**params)
    # run
    module.run()
    # get output
    out = module.get_output(0, tvm.nd.empty(out_shape)).asnumpy()

    # Print first 10 elements of output
    print(out.flatten()[0:10])

    ######################################################################
    # Save and Load Compiled Module
    # -----------------------------
    # We can also save the graph, lib and parameters into files and load them
    # back in deploy environment.

    ####################################################
    # save the graph, lib and params into separate files
    from tvm.contrib import util

    temp = util.tempdir()
    path_lib = temp.relpath("deploy_lib.tar")
    lib.export_library(path_lib)
    with open(temp.relpath("deploy_graph.json"), "w") as fo:
        fo.write(graph)
    with open(temp.relpath("deploy_param.params"), "wb") as fo:
        fo.write(relay.save_param_dict(params))
    print(temp.listdir())

    ####################################################
    # load the module back.
    loaded_json = open(temp.relpath("deploy_graph.json")).read()
    loaded_lib = tvm.module.load(path_lib)
    loaded_params = bytearray(open(temp.relpath("deploy_param.params"), "rb").read())
    input_data = tvm.nd.array(np.random.uniform(size=data_shape).astype("float32"))

    module = graph_runtime.create(loaded_json, loaded_lib, ctx)
    module.load_params(loaded_params)
    module.run(data=input_data)
    out_deploy = module.get_output(0).asnumpy()

    # Print first 10 elements of output
    print(out_deploy.flatten()[0:10])

    # check whether the output from deployed module is consistent with original one
    tvm.testing.assert_allclose(out_deploy, out, atol=1e-3)
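The final consistency check in the script compares the two output arrays element by element within an absolute tolerance of `1e-3`. The NumPy-only sketch below illustrates what such a check accepts and rejects, assuming `tvm.testing.assert_allclose` behaves like `np.testing.assert_allclose`. The arrays are random stand-ins rather than real model outputs, and `outputs_match` is a hypothetical helper introduced here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins for two (batch_size, num_class) outputs; not real model results.
out = rng.uniform(size=(1, 1000)).astype("float32")
out_deploy = out + np.float32(5e-4)  # small hypothetical deviation

# Passes because every element differs by less than atol.
np.testing.assert_allclose(out_deploy, out, atol=1e-3)


def outputs_match(actual, desired, atol=1e-3):
    """Return True when all elements agree within the absolute tolerance."""
    return bool(np.allclose(actual, desired, rtol=0.0, atol=atol))


print(outputs_match(out_deploy, out))
print(outputs_match(out + np.float32(5e-3), out))
```

A boolean helper like this can be handy in a deployment script that should log a mismatch and continue, whereas the assertion form stops at the first failure.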