RetinaFace is a fairly robust single-stage face detector. Its most notable contribution is the addition of extra-supervised and self-supervised learning signals.

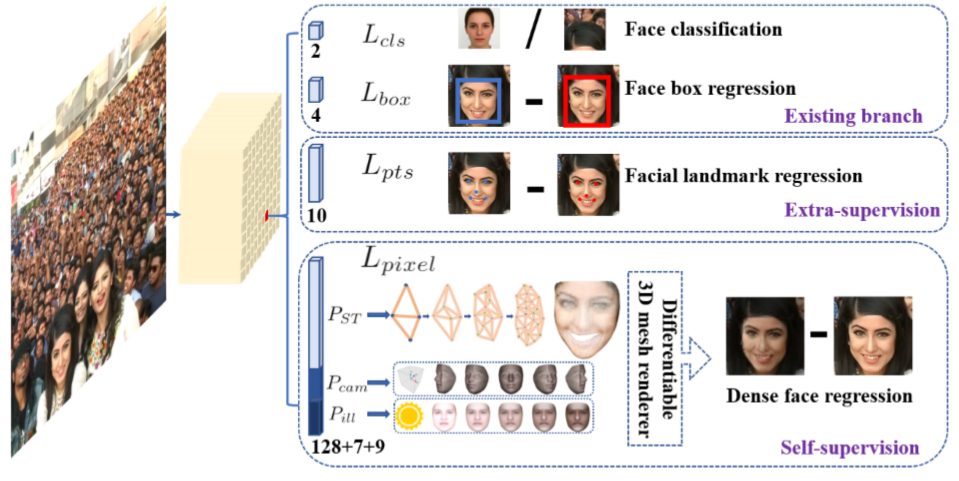

Most face detectors focus on two tasks: face classification and face box localization. RetinaFace adds facial landmark regression (five facial landmarks) and dense face regression (mainly 3D-related).

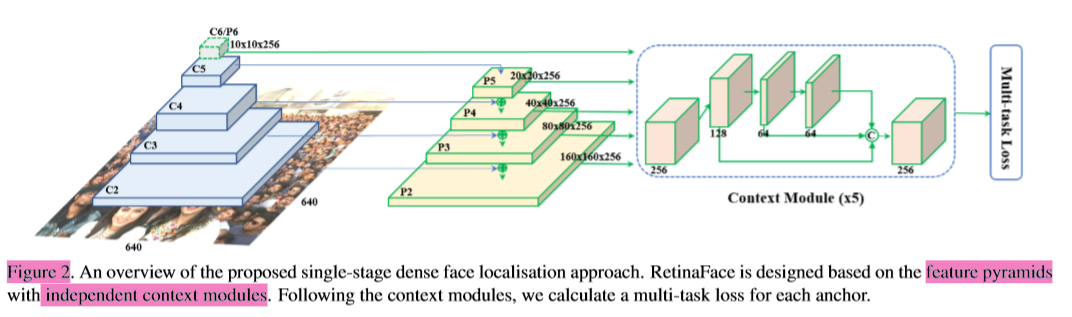

The added tasks are illustrated in the figure above.
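In practice the extra supervision shows up as extra output channels in the detection head. Below is a minimal sketch, assuming each anchor predicts 2 face/background scores, 4 box offsets and 10 landmark offsets (x and y for five points). The dense 3D branch is left out, and the helper names `head_channels` and `flatten_head` are hypothetical. The channels-last reshape mirrors the permute-then-view pattern commonly used in PyTorch detection heads:

```python
import numpy as np

# Values each anchor predicts per task (the dense 3D branch is omitted here).
OUTPUTS_PER_ANCHOR = {"class": 2, "box": 4, "landmark": 10}


def head_channels(anchors_per_location):
    """Output channels a 1x1 conv head needs for each task on one pyramid level."""
    return {task: n * anchors_per_location for task, n in OUTPUTS_PER_ANCHOR.items()}


def flatten_head(feature, values_per_anchor):
    """Reshape a head output [B, A*K, H, W] into [B, H*W*A, K], one row per anchor."""
    b = feature.shape[0]
    # Move channels last first, so the A*K values of one location stay contiguous.
    return feature.transpose(0, 2, 3, 1).reshape(b, -1, values_per_anchor)


# Example: landmark head on a 20x20 level with 2 anchors per location.
channels = head_channels(2)  # {'class': 4, 'box': 8, 'landmark': 20}
landm = np.zeros((1, channels["landmark"], 20, 20), dtype=np.float32)
print(flatten_head(landm, 10).shape)  # (1, 800, 10)
```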

RetinaFace architecture highlights

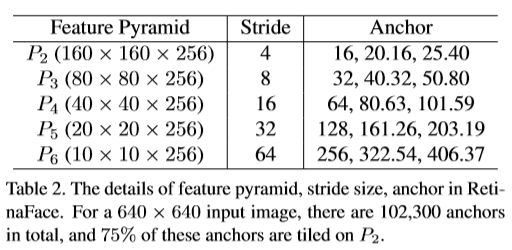

Feature pyramid: multi-scale features are extracted with a feature pyramid.

Single-stage: fast and efficient; with a MobileNet backbone it runs in real time on ARM devices.

Context modelling: context modules are applied on the pyramid levels (to increase the receptive field and enhance the rigid context modelling power).

Multi-task learning: extra supervision signals.
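Two of the points above are about receptive field. Here is a short sketch of the standard receptive-field recurrence, where each layer adds (k - 1) times the accumulated stride. The function name is hypothetical. The layer list is read off the MobileNetV1 `stage1` in the code at the end of this post, counting only the 3×3 convs, since 1×1 convs do not enlarge the field:

```python
def receptive_field(layers):
    """Receptive field (in input pixels) after each layer of a conv stack.

    layers: list of (kernel_size, stride) tuples, applied in order.
    `jump` is the product of all previous strides, i.e. the input-pixel
    distance between neighbouring outputs of the current layer.
    """
    rf, jump = 1, 1
    history = []
    for k, s in layers:
        rf += (k - 1) * jump
        jump *= s
        history.append(rf)
    return history


# MobileNetV1 stage1: conv_bn (stride 2), then the 3x3 depthwise convs of
# five conv_dw blocks with strides 1, 2, 1, 2, 1.
stage1 = [(3, 2), (3, 1), (3, 2), (3, 1), (3, 2), (3, 1)]
print(receptive_field(stage1))            # [3, 7, 11, 19, 27, 43]
print(receptive_field([(3, 1)] * 3)[-1])  # 7
```

The last line also shows why SSH-style context modules stack 3×3 convs: two of them cover a 5×5 field and three cover a 7×7 field, at lower cost than single large kernels.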

Architecture diagram

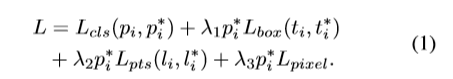

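Loss function: multi-task loss

The first term is the classification loss, the second is the face box regression loss, the third is the facial landmark regression loss, and the fourth is the dense regression loss.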
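In the paper the loss for anchor i is L = L_cls(p_i, p_i*) + λ1·p_i*·L_box(t_i, t_i*) + λ2·p_i*·L_pts(l_i, l_i*) + λ3·p_i*·L_pixel. Here p_i* is 1 for a positive anchor, so the three regression terms only apply to faces. The paper reports λ1, λ2, λ3 = 0.25, 0.1, 0.01.

Below is a minimal NumPy sketch of the first three terms. It uses a softmax cross-entropy for classification and smooth-L1 for box and landmark regression. The dense term, hard negative mining and any exact normalisation used by the paper are omitted. Averaging the regression terms over positive anchors is an assumption of this sketch, and all function names are hypothetical:

```python
import numpy as np


def smooth_l1(pred, target, beta=1.0):
    """Smooth-L1 loss per row, summed over the last axis."""
    diff = np.abs(pred - target)
    loss = np.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)
    return loss.sum(axis=-1)


def softmax_ce(logits, labels):
    """Two-class softmax cross-entropy per anchor; labels are 0 (background) or 1 (face)."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]


def multitask_loss(cls_logits, labels, box_pred, box_gt, pts_pred, pts_gt, has_pts,
                   lam_box=0.25, lam_pts=0.1):
    """Classification + box + landmark terms (the dense 3D term is left out).

    Box terms count only for positive anchors (p* = 1). Landmark terms also
    need has_pts, because not every labelled face has landmark annotations.
    """
    pos = labels == 1
    n_pos = max(int(pos.sum()), 1)
    l_cls = softmax_ce(cls_logits, labels).mean()
    l_box = (smooth_l1(box_pred, box_gt) * pos).sum() / n_pos
    pts_mask = pos & has_pts
    l_pts = (smooth_l1(pts_pred, pts_gt) * pts_mask).sum() / max(int(pts_mask.sum()), 1)
    return l_cls + lam_box * l_box + lam_pts * l_pts
```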

The implementation also involves a few details:

1. Deformable convolution replaces the 3×3 convolutions in the lateral connections and context modules (this further strengthens the non-rigid context modelling capacity);
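A deformable convolution learns an extra (dy, dx) offset for every kernel tap. Each tap therefore samples the input at a shifted, fractional position using bilinear interpolation, rather than on the fixed 3×3 grid. Below is a deliberately naive single-channel sketch (DCN v1 style, no modulation mask). The offsets are passed in directly, whereas in a real network an extra conv layer predicts them. The function names are hypothetical. For actual use, torchvision ships `torchvision.ops.DeformConv2d`.

```python
import numpy as np


def bilinear_sample(img, y, x):
    """Bilinearly sample a 2D array at fractional (y, x); out-of-bounds pixels count as 0."""
    h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(y - yy)) * (1 - abs(x - xx))
                value += weight * img[yy, xx]
    return value


def deform_conv3x3(img, weight, offsets):
    """Single-channel 3x3 deformable convolution (stride 1, padding 1, no bias).

    img: [H, W]; weight: [3, 3]; offsets: [H, W, 9, 2] holding a (dy, dx) for
    each of the 9 kernel taps at each output position.
    """
    h, w = img.shape
    out = np.zeros((h, w))
    taps = [(ky, kx) for ky in (-1, 0, 1) for kx in (-1, 0, 1)]
    for i in range(h):
        for j in range(w):
            for t, (ky, kx) in enumerate(taps):
                dy, dx = offsets[i, j, t]
                # regular grid position plus the learned offset
                out[i, j] += weight[ky + 1, kx + 1] * bilinear_sample(img, i + ky + dy, j + kx + dx)
    return out
```

With all offsets at zero this reduces to an ordinary zero-padded 3×3 convolution. The learned offsets are what let the sampling grid follow non-rigid shapes.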

2. Anchor settings: each FPN output level is assigned its own anchor sizes.

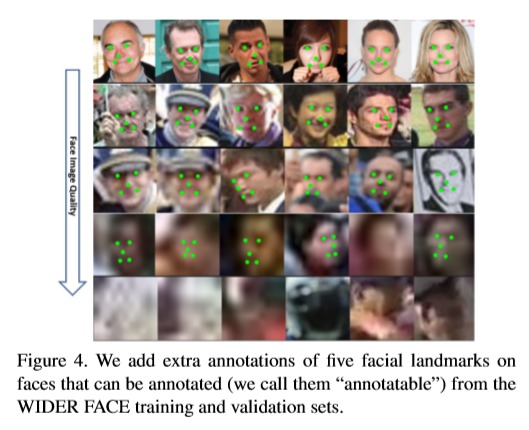
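The paper's exact anchor sizes per level are listed in the table. Below is a minimal sketch of per-level anchor generation, assuming square anchors in (cx, cy, w, h) form normalised to the image size. The default `min_sizes` and `steps` follow what I understand to be the MobileNet configuration of the linked PyTorch implementation (three levels with strides 8/16/32, two sizes each); treat them as assumptions rather than the paper's settings. The function name is hypothetical.

```python
from itertools import product
from math import ceil


def generate_anchors(image_h, image_w,
                     min_sizes=((16, 32), (64, 128), (256, 512)),
                     steps=(8, 16, 32)):
    """Square anchors (cx, cy, w, h), normalised to [0, 1], for every pyramid level.

    Level k has stride steps[k]. Each cell of its feature map gets one anchor per
    size in min_sizes[k], so the small anchors sit on the fine, high-resolution level.
    """
    anchors = []
    for sizes, step in zip(min_sizes, steps):
        fh, fw = ceil(image_h / step), ceil(image_w / step)
        for i, j in product(range(fh), range(fw)):  # row-major over cells
            cx = (j + 0.5) * step / image_w
            cy = (i + 0.5) * step / image_h
            for size in sizes:
                anchors.append((cx, cy, size / image_w, size / image_h))
    return anchors


print(len(generate_anchors(640, 640)))  # 80*80*2 + 40*40*2 + 20*20*2 = 16800
```

The ordering (cells row-major, sizes innermost) matches a head output flattened channels-last, which is what makes a one-to-one pairing of anchors and prediction rows possible.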

3. Extra annotations are added to the dataset:

3.1 Five levels of face image quality are defined, according to how clear the face is and how hard it is to detect;

3.2 Facial landmarks are annotated: five points (the two eye centres, the nose tip and the two mouth corners).
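Because only some faces can be given landmarks, a training target has to record whether landmarks exist so that the landmark loss can be masked. Below is a sketch of one possible annotation record. The class name, landmark names, the -1 placeholder and the 0/1 flag are all hypothetical choices for illustration, not the dataset's file format.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]
# Hypothetical names for the five annotated points.
LANDMARK_NAMES = ("left_eye", "right_eye", "nose_tip", "left_mouth_corner", "right_mouth_corner")


@dataclass
class FaceAnnotation:
    """One face: box (x1, y1, x2, y2) plus optional five-point landmarks."""
    box: Tuple[float, float, float, float]
    landmarks: Optional[List[Point]] = None  # None when the face could not be annotated

    def to_target(self) -> List[float]:
        """Flatten to [4 box values, 10 landmark values, has_landmarks flag].

        Missing landmarks become -1 placeholders with flag 0, so a loss
        function can skip the landmark term for this face.
        """
        if self.landmarks is None:
            return list(self.box) + [-1.0] * 10 + [0.0]
        assert len(self.landmarks) == len(LANDMARK_NAMES)
        flat = [c for point in self.landmarks for c in point]
        return list(self.box) + flat + [1.0]
```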

Results

On the WIDER FACE dataset, RetinaFace reaches an AP of 96.9% (Easy), 96.1% (Medium) and 91.8% (Hard) on the validation set, and 96.3% (Easy), 95.6% (Medium) and 91.4% (Hard) on the test set.

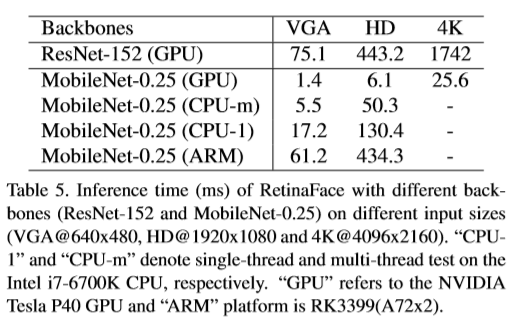

Speed

Times in the table are in milliseconds; the lightweight backbones easily reach real-time detection.
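Reproducing per-image millisecond numbers requires warm-up runs and several timed iterations. Below is a small timing sketch. The helper names and the 25 fps real-time threshold are hypothetical choices, and `fn` stands for any detector forward pass.

```python
import statistics
import time


def latency_ms(fn, *args, warmup=5, iters=20):
    """Median wall-clock time of fn(*args) in milliseconds, measured after warm-up runs."""
    for _ in range(warmup):
        fn(*args)  # the first calls often pay one-off costs (allocation, caching)
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn(*args)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def is_real_time(ms, target_fps=25.0):
    """Real-time at target_fps means one frame takes at most 1000 / target_fps ms."""
    return ms <= 1000.0 / target_fps
```

When timing on a GPU, synchronise inside the timed region (for example with `torch.cuda.synchronize()`). Otherwise only the asynchronous kernel launch is measured.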

Main network structure code:

The FPN, SSH and other building blocks it uses:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def conv_bn(inp, oup, stride=1, leaky=0):
    # 3x3 conv + BN + LeakyReLU (leaky=0 makes it a plain ReLU)
    return nn.Sequential(
        nn.Conv2d(inp, oup, 3, stride, 1, bias=False),
        nn.BatchNorm2d(oup),
        nn.LeakyReLU(negative_slope=leaky, inplace=True),
    )


def conv_bn_no_relu(inp, oup, stride):
    # 3x3 conv + BN without activation (SSH applies ReLU after concatenation)
    return nn.Sequential(
        nn.Conv2d(inp, oup, 3, stride, 1, bias=False),
        nn.BatchNorm2d(oup),
    )


def conv_bn1X1(inp, oup, stride, leaky=0):
    # 1x1 conv + BN + LeakyReLU, used for the FPN lateral connections
    return nn.Sequential(
        nn.Conv2d(inp, oup, 1, stride, padding=0, bias=False),
        nn.BatchNorm2d(oup),
        nn.LeakyReLU(negative_slope=leaky, inplace=True),
    )


def conv_dw(inp, oup, stride, leaky=0.1):
    # MobileNet depthwise-separable block: 3x3 depthwise conv, then 1x1 pointwise conv
    return nn.Sequential(
        nn.Conv2d(inp, inp, 3, stride, 1, groups=inp, bias=False),
        nn.BatchNorm2d(inp),
        nn.LeakyReLU(negative_slope=leaky, inplace=True),
        nn.Conv2d(inp, oup, 1, 1, 0, bias=False),
        nn.BatchNorm2d(oup),
        nn.LeakyReLU(negative_slope=leaky, inplace=True),
    )


class SSH(nn.Module):
    """Context module: parallel 3x3, 5x5 and 7x7 receptive fields built from stacked 3x3 convs."""

    def __init__(self, in_channel, out_channel):
        super(SSH, self).__init__()
        assert out_channel % 4 == 0
        leaky = 0
        if out_channel <= 64:
            leaky = 0.1
        self.conv3X3 = conv_bn_no_relu(in_channel, out_channel // 2, stride=1)

        self.conv5X5_1 = conv_bn(in_channel, out_channel // 4, stride=1, leaky=leaky)
        self.conv5X5_2 = conv_bn_no_relu(out_channel // 4, out_channel // 4, stride=1)

        self.conv7X7_2 = conv_bn(out_channel // 4, out_channel // 4, stride=1, leaky=leaky)
        self.conv7x7_3 = conv_bn_no_relu(out_channel // 4, out_channel // 4, stride=1)

    def forward(self, input):
        conv3X3 = self.conv3X3(input)

        # the 5x5 and 7x7 branches share their first 3x3 conv
        conv5X5_1 = self.conv5X5_1(input)
        conv5X5 = self.conv5X5_2(conv5X5_1)

        conv7X7_2 = self.conv7X7_2(conv5X5_1)
        conv7X7 = self.conv7x7_3(conv7X7_2)

        # channels: out/2 + out/4 + out/4 = out_channel
        out = torch.cat([conv3X3, conv5X5, conv7X7], dim=1)
        out = F.relu(out)
        return out


class FPN(nn.Module):
    """Top-down feature pyramid over three backbone stages."""

    def __init__(self, in_channels_list, out_channels):
        super(FPN, self).__init__()
        leaky = 0
        if out_channels <= 64:
            leaky = 0.1
        # 1x1 lateral connections bring every stage to out_channels
        self.output1 = conv_bn1X1(in_channels_list[0], out_channels, stride=1, leaky=leaky)
        self.output2 = conv_bn1X1(in_channels_list[1], out_channels, stride=1, leaky=leaky)
        self.output3 = conv_bn1X1(in_channels_list[2], out_channels, stride=1, leaky=leaky)

        # 3x3 convs that smooth the merged maps
        self.merge1 = conv_bn(out_channels, out_channels, leaky=leaky)
        self.merge2 = conv_bn(out_channels, out_channels, leaky=leaky)

    def forward(self, input):
        # input: dict of backbone stage outputs, ordered from fine (high-res) to coarse
        input = list(input.values())

        output1 = self.output1(input[0])
        output2 = self.output2(input[1])
        output3 = self.output3(input[2])

        # upsample the coarser map to the finer map's size, add, then smooth
        up3 = F.interpolate(output3, size=[output2.size(2), output2.size(3)], mode="nearest")
        output2 = output2 + up3
        output2 = self.merge2(output2)

        up2 = F.interpolate(output2, size=[output1.size(2), output1.size(3)], mode="nearest")
        output1 = output1 + up2
        output1 = self.merge1(output1)

        out = [output1, output2, output3]
        return out


class MobileNetV1(nn.Module):
    # the per-layer comments give the receptive field in input pixels
    def __init__(self):
        super(MobileNetV1, self).__init__()
        self.stage1 = nn.Sequential(
            conv_bn(3, 8, 2, leaky=0.1),  # 3
            conv_dw(8, 16, 1),    # 7
            conv_dw(16, 32, 2),   # 11
            conv_dw(32, 32, 1),   # 19
            conv_dw(32, 64, 2),   # 27
            conv_dw(64, 64, 1),   # 43
        )
        self.stage2 = nn.Sequential(
            conv_dw(64, 128, 2),   # 43 + 16 = 59
            conv_dw(128, 128, 1),  # 59 + 32 = 91
            conv_dw(128, 128, 1),  # 91 + 32 = 123
            conv_dw(128, 128, 1),  # 123 + 32 = 155
            conv_dw(128, 128, 1),  # 155 + 32 = 187
            conv_dw(128, 128, 1),  # 187 + 32 = 219
        )
        self.stage3 = nn.Sequential(
            conv_dw(128, 256, 2),  # 219 + 32 = 251
            conv_dw(256, 256, 1),  # 251 + 64 = 315
        )
        self.avg = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(256, 1000)

    def forward(self, x):
        x = self.stage1(x)
        x = self.stage2(x)
        x = self.stage3(x)
        x = self.avg(x)
        x = x.view(-1, 256)
        x = self.fc(x)
        return x
```
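The heads built on these features predict offsets relative to the anchors, not absolute coordinates. Below is a minimal decoding sketch. It assumes SSD-style encoding with variances (0.1, 0.2), which I believe the linked PyTorch implementation uses; treat that as an assumption. Landmark points are assumed to be encoded as offsets from the anchor centre. The function name `decode` is hypothetical.

```python
import numpy as np


def decode(anchors, box_deltas, landm_deltas, variances=(0.1, 0.2)):
    """Turn per-anchor regression outputs into boxes and landmarks.

    anchors: [N, 4] as (cx, cy, w, h); box_deltas: [N, 4]; landm_deltas: [N, 10].
    Returns boxes [N, 4] as (x1, y1, x2, y2) and landmarks [N, 10], in the
    same coordinate frame as the anchors (normalised if the anchors are).
    """
    centers, sizes = anchors[:, :2], anchors[:, 2:]
    cxcy = centers + box_deltas[:, :2] * variances[0] * sizes
    wh = sizes * np.exp(box_deltas[:, 2:] * variances[1])
    boxes = np.concatenate([cxcy - wh / 2, cxcy + wh / 2], axis=1)
    # each of the 5 points is an offset from the anchor centre, scaled like the box centre
    pts = landm_deltas.reshape(-1, 5, 2) * variances[0] * sizes[:, None, :] + centers[:, None, :]
    return boxes, pts.reshape(-1, 10)
```

With all-zero predictions, a box decodes to its anchor's extent and every landmark to the anchor centre. That makes a quick sanity check before adding score filtering and NMS.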

Code link: PyTorch implementation