
## Setting up canal

### Setting up the MySQL environment

For a self-hosted MySQL, first enable binlog writing and set binlog-format to ROW. Configure my.cnf as follows:

    [mysqld]
    log-bin=mysql-bin   # enable binlog
    binlog-format=ROW   # use ROW mode
    server_id=1         # required for MySQL replication; must not clash with canal's slaveId

Grant the MySQL account that canal connects with the privileges of a MySQL slave. If the account already exists, you can run the GRANT directly:

    CREATE USER canal IDENTIFIED BY 'canal';
    GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';
    -- GRANT ALL PRIVILEGES ON *.* TO 'canal'@'%' ;
    FLUSH PRIVILEGES;

搭建canal环境

下载 canal, 访问 release 页面 , 选择需要的包下载, 如以 1.0.17 版本为例

wget https://github.com/alibaba/canal/releases/download/canal-1.0.17/canal.deployer-1.0.17.tar.gz

解压缩

mkdir /tmp/canaltar zxvf canal.deployer-$version.tar.gz -C /tmp/canal

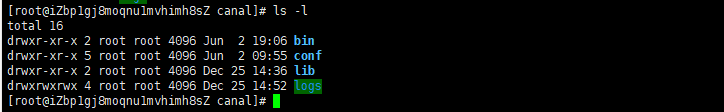

解压完成后,进入 /tmp/canal 目录,可以看到如下结构

Modify the configuration in instance.properties:

    vi conf/example/instance.properties

Sample:

    ## mysql serverId
    canal.instance.mysql.slaveId = 1234
    #position info, change to your own database
    canal.instance.master.address = 127.0.0.1:3306
    canal.instance.master.journal.name =
    canal.instance.master.position =
    canal.instance.master.timestamp =
    #canal.instance.standby.address =
    #canal.instance.standby.journal.name =
    #canal.instance.standby.position =
    #canal.instance.standby.timestamp =
    #username/password, change to your own database credentials
    canal.instance.dbUsername = canal
    canal.instance.dbPassword = canal
    canal.instance.defaultDatabaseName =
    canal.instance.connectionCharset = UTF-8
    #table regex
    canal.instance.filter.regex = .*\\..*

A real-world instance:

    #################################################
    ## mysql serverId , v1.0.26+ will autoGen
    # canal.instance.mysql.slaveId=0

    # enable gtid use true/false
    canal.instance.gtidon=false

    # position info
    canal.instance.master.address=rm-bp1r09z18z9kh8xp6.mysql.rds.aliyuncs.com:3306
    canal.instance.master.journal.name=
    canal.instance.master.position=
    canal.instance.master.timestamp=
    canal.instance.master.gtid=

    # rds oss binlog
    canal.instance.rds.accesskey=
    canal.instance.rds.secretkey=
    canal.instance.rds.instanceId=

    # table meta tsdb info
    canal.instance.tsdb.enable=true
    #canal.instance.tsdb.url=jdbc:mysql://127.0.0.1:3306/canal_tsdb
    #canal.instance.tsdb.dbUsername=canal
    #canal.instance.tsdb.dbPassword=canal

    #canal.instance.standby.address =
    #canal.instance.standby.journal.name =
    #canal.instance.standby.position =
    #canal.instance.standby.timestamp =
    #canal.instance.standby.gtid=

    # username/password
    canal.instance.dbUsername=zjt_lkyw_rds
    canal.instance.dbPassword=ZjjtGPSAL2016
    canal.instance.connectionCharset = UTF-8
    # enable druid Decrypt database password
    canal.instance.enableDruid=false
    #canal.instance.pwdPublicKey=MFwwDQYJKoZIhvcNAQEBBQADSwAwSAJBALK4BUxdDltRRE5/zXpVEVPUgunvscYFtEip3pmLlhrWpacX7y7GCMo2/JM6LeHmiiNdH1FWgGCpUfircSwlWKUCAwEAAQ==

    # table regex
    # canal.instance.filter.regex=.*\\..*
    # tables to match
    canal.instance.filter.regex=lkyw_rd.zjw_lkyw_gps_raw_1,lkyw_rd.zjw_lkyw_gps_raw_2,lkyw_rd.zjw_lkyw_gps_raw_3,lkyw_rd.zjw_lkyw_gps_raw_4,lkyw_rd.zjw_lkyw_gps_raw_5,lkyw_rd.zjw_lkyw_gps_raw_6,lkyw_rd.zjw_lkyw_gps_raw_7
    # table black regex
    canal.instance.filter.black.regex=
    # table field filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)
    #canal.instance.filter.field=test1.t_product:id/subject/keywords,test2.t_company:id/name/contact/ch
    # table field black filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)
    #canal.instance.filter.black.field=test1.t_product:subject/product_image,test2.t_company:id/name/contact/ch

    # mq config
    canal.mq.topic=example
    # dynamic topic route by schema or table regex
    #canal.mq.dynamicTopic=mytest1.user,mytest2\\..*,.*\\..*
    canal.mq.partition=0
    # hash partition config
    #canal.mq.partitionsNum=3
    #canal.mq.partitionHash=test.table:id^name,.*\\..*
    #################################################
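
To make the `canal.instance.filter.regex` values above more concrete, here is a minimal sketch of how a comma-separated list of regular expressions can be matched against `schema.table` names. It uses plain `java.util.regex`, and `FilterRegexSketch` is a hypothetical class written for this note; canal's own filter may differ in details such as case sensitivity, so treat it as an illustration of the pattern syntax only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper, not part of canal: illustrates comma-separated table regexes.
final class FilterRegexSketch {
    private final List<Pattern> patterns = new ArrayList<>();

    FilterRegexSketch(String commaSeparated) {
        for (String part : commaSeparated.split(",")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                patterns.add(Pattern.compile(trimmed));
            }
        }
    }

    // True if the full "schema.table" name matches any configured pattern.
    boolean accepts(String schemaDotTable) {
        for (Pattern p : patterns) {
            if (p.matcher(schemaDotTable).matches()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // In a .properties file the backslash is doubled (.*\\..*); the regex itself is .*\..*
        FilterRegexSketch all = new FilterRegexSketch(".*\\..*");
        FilterRegexSketch some = new FilterRegexSketch(
                "lkyw_rd.zjw_lkyw_gps_raw_1,lkyw_rd.zjw_lkyw_gps_raw_2");
        System.out.println(all.accepts("test.user"));
        System.out.println(some.accepts("lkyw_rd.zjw_lkyw_gps_raw_2"));
        System.out.println(some.accepts("lkyw_rd.zjw_lkyw_gps_raw_9"));
    }
}
```

Under regex semantics the unescaped `.` between schema and table in the explicit entries matches any single character, so escaping it would make those entries stricter.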

- canal.instance.connectionCharset is the database character encoding, expressed as the corresponding Java charset name, for example UTF-8, GBK, or ISO-8859-1.
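
A quick way to confirm that a value is a charset name Java recognizes is `java.nio.charset.Charset`. The sketch below only checks the name; `CharsetCheck` is a hypothetical class, and which charsets are available beyond the standard ones depends on the JVM.

```java
import java.nio.charset.Charset;

// Hypothetical helper: checks whether the running JVM can resolve a charset name.
final class CharsetCheck {
    static boolean isUsable(String name) {
        try {
            return Charset.isSupported(name);
        } catch (IllegalArgumentException e) {
            // Raised for syntactically illegal names (IllegalCharsetNameException).
            return false;
        }
    }

    public static void main(String[] args) {
        for (String name : new String[] {"UTF-8", "GBK", "ISO-8859-1"}) {
            System.out.println(name + " -> " + isUsable(name));
        }
    }
}
```

MySQL-side names such as `utf8mb4` are not Java charset names, so a check like this can catch a value copied from the database configuration by mistake.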

If the machine has only one CPU, set canal.instance.parser.parallel to false.

Server-wide settings such as this one live in canal.properties:

    # canal admin config
    #canal.admin.manager = 127.0.0.1:8089
    canal.admin.port = 11110
    canal.admin.user = admin
    canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441
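
The 40-character `canal.admin.passwd` value has the shape of a MySQL `PASSWORD()`-style hash, that is, SHA-1 applied twice and hex-encoded without the leading `*`. The source does not say how the value was produced, so this is an assumption. Under that assumption, a hash for a different password could be computed along these lines; `PasswdHashSketch` is a hypothetical helper, not a canal API.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper: double SHA-1, formatted as 40 upper-case hex characters.
final class PasswdHashSketch {
    static String doubleSha1Hex(String plain) {
        try {
            MessageDigest sha1 = MessageDigest.getInstance("SHA-1");
            byte[] first = sha1.digest(plain.getBytes(StandardCharsets.UTF_8));
            // digest() resets the instance, so it can be reused for the second pass.
            byte[] second = sha1.digest(first);
            StringBuilder hex = new StringBuilder(second.length * 2);
            for (byte b : second) {
                hex.append(String.format("%02X", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // SHA-1 is required on every Java platform, so this is not expected.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(doubleSha1Hex("admin"));
    }
}
```

If the assumption holds, hashing the plain-text admin password should reproduce the configured value; if it does not, the server uses a different scheme and this sketch does not apply.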

    canal.zkServers =
    # flush data to zk
    canal.zookeeper.flush.period = 1000
    canal.withoutNetty = false
    # tcp, kafka, RocketMQ
    canal.serverMode = tcp
    # flush meta cursor/parse position to file
    canal.file.data.dir = ${canal.conf.dir}
    canal.file.flush.period = 1000
    # memory store RingBuffer size, should be Math.pow(2,n)
    canal.instance.memory.buffer.size = 16384
    # memory store RingBuffer used memory unit size, default 1kb
    canal.instance.memory.buffer.memunit = 1024
    # memory store gets mode used MEMSIZE or ITEMSIZE
    canal.instance.memory.batch.mode = MEMSIZE
    canal.instance.memory.rawEntry = true
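
The ring buffer size has to be a power of two, and the buffer size and memory unit together give a rough idea of the store's memory footprint. The sketch below checks both for the values above. `RingBufferSizing` is a hypothetical helper, and treating `buffer.size * memunit` as the approximate upper bound in MEMSIZE mode is an assumption drawn from the comments, not a statement about canal's internals.

```java
// Hypothetical helper for sanity-checking the memory store settings.
final class RingBufferSizing {
    // A positive value is a power of two when exactly one bit is set.
    static boolean isPowerOfTwo(int n) {
        return n > 0 && (n & (n - 1)) == 0;
    }

    // Approximate upper bound in bytes, assuming size * memunit.
    static long approxBytes(int bufferSize, int memUnit) {
        return (long) bufferSize * memUnit;
    }

    public static void main(String[] args) {
        int bufferSize = 16384; // canal.instance.memory.buffer.size
        int memUnit = 1024;     // canal.instance.memory.buffer.memunit
        System.out.println("power of two: " + isPowerOfTwo(bufferSize));
        System.out.println(approxBytes(bufferSize, memUnit) / (1024 * 1024) + " MiB");
    }
}
```

With the defaults shown, 16384 entries of 1024 bytes come to 16 MiB.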

    # detecting config
    canal.instance.detecting.enable = false
    #canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()
    canal.instance.detecting.sql = select 1
    canal.instance.detecting.interval.time = 3
    canal.instance.detecting.retry.threshold = 3
    canal.instance.detecting.heartbeatHaEnable = false

    # support maximum transaction size, more than the size of the transaction will be cut into multiple transactions delivery
    canal.instance.transaction.size = 1024
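
The transaction size comment describes cutting an oversized transaction into several deliveries. The idea can be sketched as simple chunking; `TransactionSplitSketch` is a hypothetical class, and the sketch shows only the splitting arithmetic, not how canal actually buffers or delivers events.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: splits one logical transaction into bounded chunks.
final class TransactionSplitSketch {
    // Returns consecutive chunks of at most maxSize events, preserving order.
    static <T> List<List<T>> split(List<T> events, int maxSize) {
        if (maxSize <= 0) {
            throw new IllegalArgumentException("maxSize must be positive");
        }
        List<List<T>> chunks = new ArrayList<>();
        for (int from = 0; from < events.size(); from += maxSize) {
            int to = Math.min(from + maxSize, events.size());
            chunks.add(new ArrayList<>(events.subList(from, to)));
        }
        return chunks;
    }

    public static void main(String[] args) {
        List<Integer> events = new ArrayList<>();
        for (int i = 0; i < 2500; i++) {
            events.add(i);
        }
        // 1024 mirrors canal.instance.transaction.size
        for (List<Integer> chunk : split(events, 1024)) {
            System.out.println(chunk.size());
        }
    }
}
```

A transaction of 2500 events with a limit of 1024 would go out as three deliveries of 1024, 1024, and 452 events.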

    # mysql fallback connected to new master should fallback times
    canal.instance.fallbackIntervalInSeconds = 60

    # network config
    canal.instance.network.receiveBufferSize = 16384
    canal.instance.network.sendBufferSize = 16384
    canal.instance.network.soTimeout = 30

    # binlog filter config
    canal.instance.filter.druid.ddl = true
    canal.instance.filter.query.dcl = false
    canal.instance.filter.query.dml = false
    canal.instance.filter.query.ddl = false
    canal.instance.filter.table.error = false
    canal.instance.filter.rows = false
    canal.instance.filter.transaction.entry = false

    # binlog format/image check
    canal.instance.binlog.format = ROW,STATEMENT,MIXED
    canal.instance.binlog.image = FULL,MINIMAL,NOBLOB

    # binlog ddl isolation
    canal.instance.get.ddl.isolation = false

    # parallel parser config
    canal.instance.parser.parallel = true
    # concurrent thread number, default 60% available processors, suggest not to exceed Runtime.getRuntime().availableProcessors()
    #canal.instance.parser.parallelThreadSize = 16
    # disruptor ringbuffer size, must be power of 2
    canal.instance.parser.parallelBufferSize = 256
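
The parallel parser comments give two rules of thumb: use about 60% of the available processors, never more than the processor count, and turn parallel parsing off on a single-CPU machine. A minimal sketch of that arithmetic follows; `ParserThreadSizing` is a hypothetical helper, and rounding down with a floor of one thread is an assumption.

```java
// Hypothetical helper: derives parser settings from the processor count.
final class ParserThreadSizing {
    // About 60% of the processors, at least 1 and never more than the processor count.
    static int suggestedThreads(int processors) {
        int suggested = processors * 60 / 100; // integer arithmetic avoids rounding surprises
        return Math.max(1, Math.min(suggested, processors));
    }

    // Parallel parsing only makes sense with more than one CPU.
    static boolean parallelEnabled(int processors) {
        return processors > 1;
    }

    public static void main(String[] args) {
        int cpus = Runtime.getRuntime().availableProcessors();
        System.out.println("canal.instance.parser.parallel = " + parallelEnabled(cpus));
        System.out.println("canal.instance.parser.parallelThreadSize = " + suggestedThreads(cpus));
    }
}
```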

    # table meta tsdb info
    canal.instance.tsdb.enable = true
    canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}
    canal.instance.tsdb.url = jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;
    canal.instance.tsdb.dbUsername = canal
    canal.instance.tsdb.dbPassword = canal
    # dump snapshot interval, default 24 hour
    canal.instance.tsdb.snapshot.interval = 24
    # purge snapshot expire, default 360 hour (15 days)
    canal.instance.tsdb.snapshot.expire = 360

    # aliyun ak/sk, support rds/mq
    canal.aliyun.accessKey =
    canal.aliyun.secretKey =

    ### destinations
    canal.destinations = example
    # conf root dir
    canal.conf.dir = ../conf
    # auto scan instance dir add/remove and start/stop instance
    canal.auto.scan = true
    canal.auto.scan.interval = 5

    canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml
    #canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

    canal.instance.global.mode = spring
    canal.instance.global.lazy = false
    canal.instance.global.manager.address = ${canal.admin.manager}
    #canal.instance.global.spring.xml = classpath:spring/memory-instance.xml
    canal.instance.global.spring.xml = classpath:spring/file-instance.xml
    #canal.instance.global.spring.xml = classpath:spring/default-instance.xml

    ### MQ
    canal.mq.servers = 127.0.0.1:6667
    canal.mq.retries = 0
    canal.mq.batchSize = 16384
    canal.mq.maxRequestSize = 1048576
    canal.mq.lingerMs = 100
    canal.mq.bufferMemory = 33554432
    canal.mq.canalBatchSize = 50
    canal.mq.canalGetTimeout = 100
    canal.mq.flatMessage = true
    canal.mq.compressionType = none
    canal.mq.acks = all
    #canal.mq.properties. =
    canal.mq.producerGroup = test
    # Set this value to "cloud", if you want open message trace feature in aliyun.
    canal.mq.accessChannel = local
    # aliyun mq namespace
    #canal.mq.namespace =

    ### Kafka Kerberos Info
    canal.mq.kafka.kerberos.enable = false
    canal.mq.kafka.kerberos.krb5FilePath = "../conf/kerberos/krb5.conf"
    canal.mq.kafka.kerberos.jaasFilePath = "../conf/kerberos/jaas.conf"

<a name="Of7xL"></a>
### Startup

    sh bin/startup.sh

At this point the canal server is set up. So far, though, we have only pulled the database binlog into canal; we still have to consume the data with otter.

Check the server log:

    vi logs/canal/canal.log

```
2013-02-05 22:45:27.967 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## start the canal server.
2013-02-05 22:45:28.113 [main] INFO com.alibaba.otter.canal.deployer.CanalController - ## start the canal server[10.1.29.120:11111]
2013-02-05 22:45:28.210 [main] INFO com.alibaba.otter.canal.deployer.CanalLauncher - ## the canal server is running now ......
```

Check the instance log:

    vi logs/example/example.log

```
2013-02-05 22:50:45.636 [main] INFO c.a.o.c.i.spring.support.PropertyPlaceholderConfigurer - Loading properties file from class path resource [canal.properties]
2013-02-05 22:50:45.641 [main] INFO c.a.o.c.i.spring.support.PropertyPlaceholderConfigurer - Loading properties file from class path resource [example/instance.properties]
2013-02-05 22:50:45.803 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example
2013-02-05 22:50:45.810 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start successful....
```

## Writing a simple demo to listen for MySQL data changes

Maven dependency:

    <dependency>
        <groupId>com.alibaba.otter</groupId>
        <artifactId>canal.client</artifactId>
        <version>1.1.3</version>
    </dependency>

Test code:

    package com.hq.eos.sync.client;

    import java.net.InetSocketAddress;
    import java.util.List;

    import com.alibaba.otter.canal.client.CanalConnector;
    import com.alibaba.otter.canal.client.CanalConnectors;
    import com.alibaba.otter.canal.protocol.CanalEntry.Column;
    import com.alibaba.otter.canal.protocol.CanalEntry.Entry;
    import com.alibaba.otter.canal.protocol.CanalEntry.EntryType;
    import com.alibaba.otter.canal.protocol.CanalEntry.EventType;
    import com.alibaba.otter.canal.protocol.CanalEntry.RowChange;
    import com.alibaba.otter.canal.protocol.CanalEntry.RowData;
    import com.alibaba.otter.canal.protocol.Message;

    public class CanalTest {

        public static void main(String[] args) throws Exception {
            // Arguments: server address, destination, username, password.
            // The destination must match an instance name on the server
            // (the instance configured earlier is "example").
            CanalConnector connector = CanalConnectors.newSingleConnector(
                    new InetSocketAddress("10.0.98.186", 11111), "expert", "root", "root");
            connector.connect();
            connector.subscribe(".*\\..*");
            connector.rollback();
            while (true) {
                Message message = connector.getWithoutAck(100); // fetch up to the given number of entries
                long batchId = message.getId();
                if (batchId == -1 || message.getEntries().isEmpty()) {
                    Thread.sleep(1000);
                    continue;
                }
                // System.out.println(message.getEntries());
                printEntries(message.getEntries());
                connector.ack(batchId); // confirm successful consumption so the server can discard the batch
                // connector.rollback(batchId); // on failure, roll back so the batch is fetched again
            }
        }

        private static void printEntries(List<Entry> entries) throws Exception {
            for (Entry entry : entries) {
                // Skip transaction begin/end markers; only row data is of interest.
                if (entry.getEntryType() != EntryType.ROWDATA) {
                    continue;
                }
                RowChange rowChange = RowChange.parseFrom(entry.getStoreValue());
                EventType eventType = rowChange.getEventType();
                System.out.println(String.format(
                        "================> binlog[%s:%s] , name[%s,%s] , eventType : %s",
                        entry.getHeader().getLogfileName(), entry.getHeader().getLogfileOffset(),
                        entry.getHeader().getSchemaName(), entry.getHeader().getTableName(), eventType));
                for (RowData rowData : rowChange.getRowDatasList()) {
                    switch (eventType) {
                        case INSERT:
                            System.out.println("INSERT ");
                            printColumns(rowData.getAfterColumnsList());
                            break;
                        case UPDATE:
                            System.out.println("UPDATE ");
                            printColumns(rowData.getAfterColumnsList());
                            break;
                        case DELETE:
                            System.out.println("DELETE ");
                            printColumns(rowData.getBeforeColumnsList());
                            break;
                        default:
                            break;
                    }
                }
            }
        }

        private static void printColumns(List<Column> columns) {
            for (Column column : columns) {
                System.out.println(column.getName() + " : " + column.getValue()
                        + " update=" + column.getUpdated());
            }
        }
    }