Installing a Flink cluster that runs on YARN:

https://www.cnblogs.com/hxuhongming/p/12873010.html

https://www.cnblogs.com/hxuhongming/p/12819916.html

https://yijiyong.com/dp/flink/02-install.html

First install the ZooKeeper cluster and the Hadoop cluster:

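Download ZooKeeper, copy the archive to the other nodes, unpack it, and create zoo.cfg from the sample:

    curl -O https://archive.apache.org/dist/zookeeper/zookeeper-3.4.10/zookeeper-3.4.10.tar.gz
    scp -r zookeeper-3.4.10.tar.gz root@hadoop2:`pwd`
    scp -r zookeeper-3.4.10.tar.gz root@hadoop3:`pwd`
    tar -zxvf zookeeper-3.4.10.tar.gz
    cp zoo_sample.cfg zoo.cfg
    vim zoo.cfg

Set the following in zoo.cfg:

    # ZooKeeper must be started on all three nodes
    dataDir=/data/zookeeper
    server.1=0.0.0.0:2888:3888
    server.2=hadoop2:2888:3888
    server.3=hadoop3:2888:3888

Create the myid file in the dataDir directory on each node and edit it so that it contains that node's server number (the N of its server.N line).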
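The myid step can be sketched as a small shell helper. This is only a sketch: `write_myid` is a hypothetical function name, not part of ZooKeeper, and the demo writes to a scratch directory instead of the real `/data/zookeeper`.

```shell
#!/bin/sh
# Sketch: write the myid file that ties a node to its server.N line in zoo.cfg.
# write_myid is a hypothetical helper, not a ZooKeeper command.
write_myid() {
  zk_data_dir="$1"   # must be the same path as dataDir in zoo.cfg
  zk_server_id="$2"  # the N of this node's server.N entry
  mkdir -p "$zk_data_dir" || return 1
  printf '%s\n' "$zk_server_id" > "$zk_data_dir/myid"
}

# Demo against a scratch directory so nothing under /data is touched.
demo_dir="$(mktemp -d)"
write_myid "$demo_dir/zookeeper" 1
myid_value="$(cat "$demo_dir/zookeeper/myid")"
echo "myid=$myid_value"
```

On the real nodes this would presumably be `write_myid /data/zookeeper 1` on the first node, with 2 on hadoop2 and 3 on hadoop3, matching the server.N lines.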

    cd /export/server/zookeeper-3.4.10/bin
    ./zkServer.sh start    # start ZooKeeper
    ./zkServer.sh stop     # stop ZooKeeper
    ./zkServer.sh status   # check ZooKeeper status
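To compare the role of each node after startup, the `Mode:` line printed by `zkServer.sh status` can be pulled out with a one-line filter. A minimal sketch: `zk_mode` is a hypothetical helper, and the sample text below is an illustrative stand-in for real status output, not a captured log.

```shell
#!/bin/sh
# zk_mode: hypothetical helper. Reads `zkServer.sh status` output on stdin
# and prints only the role reported on the "Mode:" line.
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Illustrative input only; real output comes from ./zkServer.sh status.
sample_status='ZooKeeper JMX enabled by default
Using config: /export/server/zookeeper-3.4.10/bin/../conf/zoo.cfg
Mode: follower'

mode="$(printf '%s\n' "$sample_status" | zk_mode)"
echo "$mode"
```

On a node, the equivalent call would be `./zkServer.sh status 2>&1 | zk_mode`. In a healthy three-node ensemble, one node should report `leader` and the other two `follower`.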

Flink installation and configuration (flink-conf.yaml):

    jobmanager.rpc.port: 6123
    jobmanager.memory.process.size: 1600m
    taskmanager.memory.process.size: 1728m
    taskmanager.numberOfTaskSlots: 1
    parallelism.default: 1
    high-availability: zookeeper
    high-availability.storageDir: hdfs:///flink/ha/
    high-availability.zookeeper.quorum: node1:2181,node2:2181,node3:2181
    high-availability.zookeeper.path.root: /flink
    # high-availability.zookeeper.client.acl: open
    state.backend: filesystem
    # Directory for checkpoints filesystem, when using any of the default bundled
    state.checkpoints.dir: hdfs://namenode-host:port/flink-checkpoints
    state.savepoints.dir: hdfs://namenode-host:port/flink-savepoints
    jobmanager.execution.failover-strategy: region
    # Override below configuration to provide custom ZK service name if configured
    # zookeeper.sasl.service-name: zookeeper
    # The configuration below must match one of the values set in "security.kerberos.login.contexts"
    # zookeeper.sasl.login-context-name: Client
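Before starting the cluster, it can help to confirm that the high-availability keys are actually present in the config file. A rough sketch: `check_ha_keys` is a hypothetical helper, it assumes each key starts at the beginning of its line, and the demo runs against a temporary file rather than the real `flink-conf.yaml`.

```shell
#!/bin/sh
# check_ha_keys: hypothetical helper. Prints every HA key that is missing
# from the given config file and returns non-zero if any is missing.
check_ha_keys() {
  conf_file="$1"
  missing=0
  for key in high-availability \
             high-availability.storageDir \
             high-availability.zookeeper.quorum; do
    # The key must start the line and be followed directly by a colon.
    if ! grep -q "^${key}:" "$conf_file"; then
      echo "missing: $key"
      missing=1
    fi
  done
  return "$missing"
}

# Demo with a temporary file holding the HA lines from the config above.
demo_conf="$(mktemp)"
cat > "$demo_conf" <<'EOF'
high-availability: zookeeper
high-availability.storageDir: hdfs:///flink/ha/
high-availability.zookeeper.quorum: node1:2181,node2:2181,node3:2181
EOF

if check_ha_keys "$demo_conf"; then ha_ok=yes; else ha_ok=no; fi
echo "HA keys present: $ha_ok"
```

Against the real installation, the argument would be the path to `conf/flink-conf.yaml` under the Flink directory.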

Add the Flink environment variables to /etc/profile and reload it:

    vim /etc/profile

    export FLINK_HOME=/export/server/flink-1.13.5
    export PATH=$PATH:$FLINK_HOME/bin

    source /etc/profile
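If the profile is edited by script instead of by hand, the export lines should be appended only once, or repeated runs will pile up duplicates. One possible sketch: `add_line_once` is a hypothetical helper, and the demo uses a temporary file in place of `/etc/profile`.

```shell
#!/bin/sh
# add_line_once: hypothetical helper. Appends a line to a file only when
# no identical line is already present.
add_line_once() {
  target_file="$1"
  line="$2"
  grep -qxF -- "$line" "$target_file" 2>/dev/null ||
    printf '%s\n' "$line" >> "$target_file"
}

# Demo on a temporary file standing in for /etc/profile.
demo_profile="$(mktemp)"
add_line_once "$demo_profile" 'export FLINK_HOME=/export/server/flink-1.13.5'
add_line_once "$demo_profile" 'export PATH=$PATH:$FLINK_HOME/bin'
# Repeated on purpose: this call should not add a second copy.
add_line_once "$demo_profile" 'export FLINK_HOME=/export/server/flink-1.13.5'

line_count="$(grep -c '' "$demo_profile")"
echo "lines written: $line_count"
```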

Startup order: start ZooKeeper and HDFS first, then start Flink.
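The ordering can be enforced in a script by retrying a readiness check before launching Flink. A minimal sketch, assuming a hypothetical `retry` helper; the checks shown in the trailing comments are suggestions, not something these notes prescribe.

```shell
#!/bin/sh
# retry: hypothetical helper. Runs a command until it succeeds or the
# attempt limit is reached, sleeping between attempts.
#   usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  n=1
  while ! "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  return 0
}

# Demo with a trivial command that always succeeds.
if retry 3 0 true; then ready=yes; else ready=no; fi
echo "dependency ready: $ready"

# On a real cluster, something along these lines (assumed checks):
#   retry 30 2 /export/server/zookeeper-3.4.10/bin/zkServer.sh status &&
#   retry 30 2 hdfs dfs -test -d / &&
#   /export/server/flink-1.13.5/bin/start-cluster.sh
```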

    cd /export/server/flink-1.13.5/bin
    ./start-cluster.sh

View the logs:

    cd /export/server/flink-1.13.5/log
    tail -f flink-root-standalonesession-0-node1.log

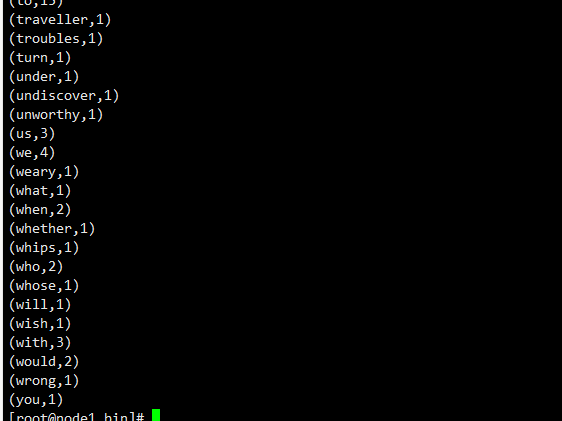

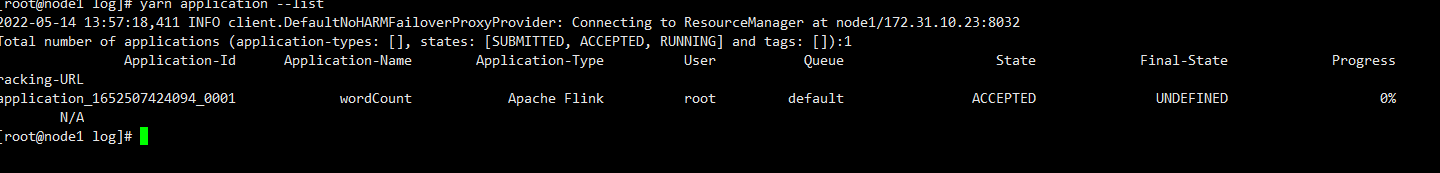

1. (1) Request resources:

        cd /export/server/flink-1.13.5/bin
        ./yarn-session.sh -nm wordCount -n 2

   (2) View the requested resources:

        yarn application --list

2. Submit the WordCount job:

        ./flink run -yid application_1652507424094_0001 ../examples/batch/WordCount.jar
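The application ID passed to `-yid` changes with every session, so it may be easier to extract it from the YARN listing than to copy it by hand. A sketch under assumptions: `extract_app_id` is a hypothetical helper, and the sample line is an illustrative stand-in for one row of the listing, reusing the ID from the command above.

```shell
#!/bin/sh
# extract_app_id: hypothetical helper. Prints the first YARN application ID
# (application_<cluster timestamp>_<sequence>) found on stdin.
extract_app_id() {
  grep -o 'application_[0-9][0-9]*_[0-9][0-9]*' | head -n 1
}

# Illustrative input only; real input would be the YARN application listing.
sample_line='application_1652507424094_0001  wordCount  Apache Flink'
app_id="$(printf '%s\n' "$sample_line" | extract_app_id)"
echo "$app_id"
```

With a single running session, the ID could then be passed on as `./flink run -yid "$app_id" ../examples/batch/WordCount.jar`. With several applications listed, the filter would need to match on the session name first.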