https://blog.csdn.net/weixin_44517301/article/details/89605496

Files: 课堂纪要.py (class notes), day01_deeplearning.py, 深度学习day01.pdf (Deep Learning day 01)

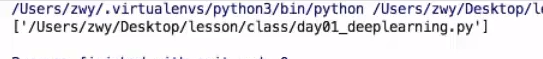

1 Machine Learning vs. Deep Learning

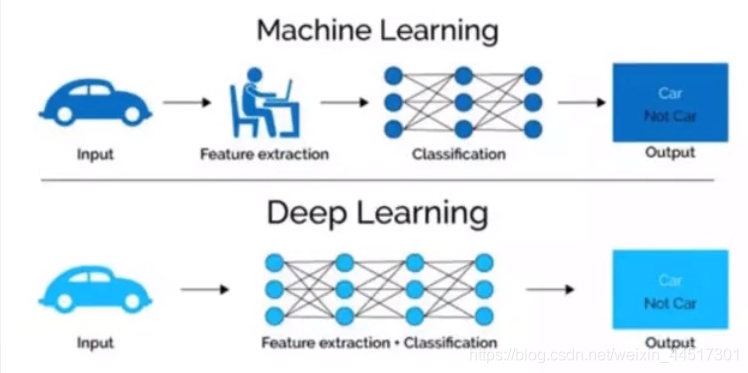

1.1.1 Feature Extraction

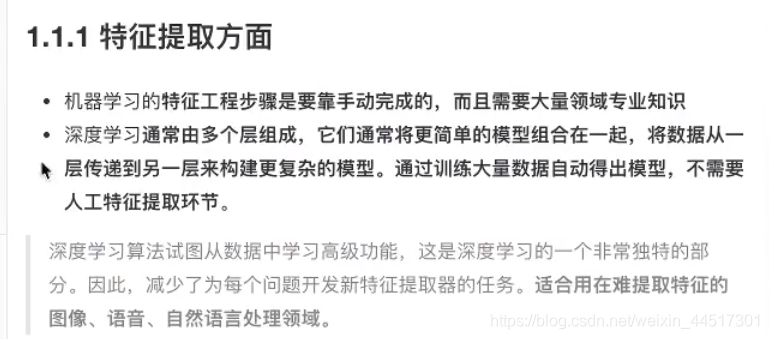

1.1.2 Data Volume and Computing Performance Requirements

1.1.3 Representative Algorithms

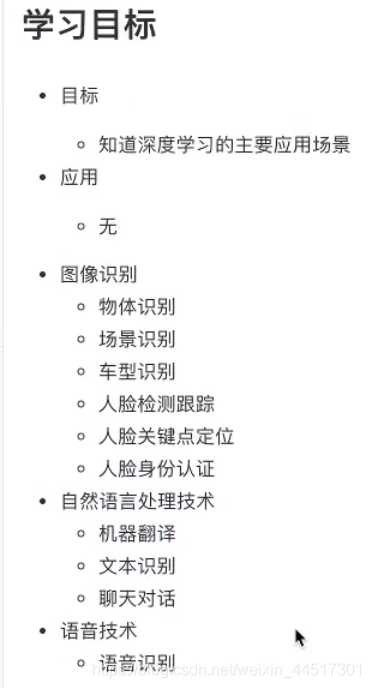

1.2 Application Scenarios

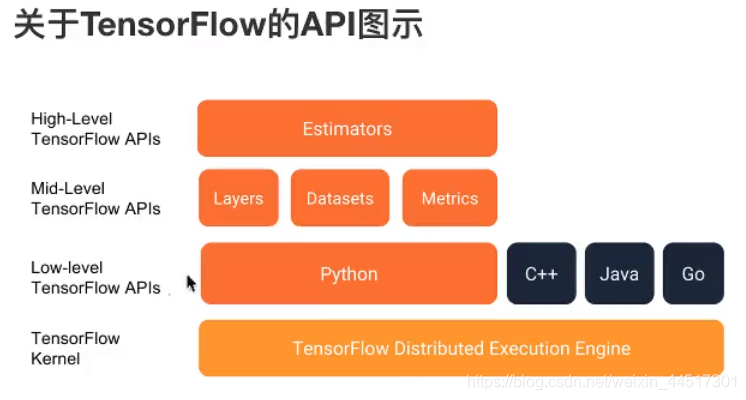

1.3 Overall TensorFlow Framework

1.3.3 Installing TensorFlow

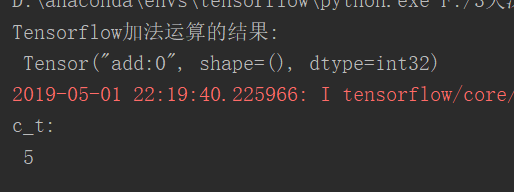

Using a TensorFlow addition to demonstrate the dataflow graph (a session must be opened to get the result):

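```python
# Written against the TensorFlow 1.x API (in 2.x, tf.Session is only available as tf.compat.v1.Session)
import tensorflow as tf


def tensorflow_demo():
    # Addition in TensorFlow: this only builds the graph
    a = tf.constant(2)
    b = tf.constant(3)
    c = a + b
    print("Result of the TensorFlow addition:\n", c)

    # Open a session to actually run the graph
    with tf.Session() as sess:
        c_t = sess.run(c)
        print("c_t:\n", c_t)


if __name__ == '__main__':
    tensorflow_demo()
```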
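To see why `print(c)` shows a tensor object rather than `5`, here is a minimal pure-Python sketch of deferred (graph) execution. It does not use TensorFlow; `Node`, `constant` and `run` are hypothetical names chosen for illustration. Writing `a + b` only records an operation, and a value is produced only when the graph is evaluated, which is the role `sess.run` plays above.

```python
class Node:
    """A node in a toy dataflow graph (illustration only, not TensorFlow)."""

    def __init__(self, op, inputs=(), value=None):
        self.op = op              # operation type: "const" or "add"
        self.inputs = list(inputs)
        self.value = value        # only used by "const" nodes

    def __add__(self, other):
        # "+" computes nothing; it just records a new "add" node
        return Node("add", [self, other])

    def __repr__(self):
        return "Node(op=%r, inputs=%d)" % (self.op, len(self.inputs))


def constant(value):
    """Create a constant node, similar in spirit to tf.constant."""
    return Node("const", value=value)


def run(node):
    """Evaluate a node by recursively evaluating its inputs (the 'session' step)."""
    if node.op == "const":
        return node.value
    if node.op == "add":
        return sum(run(child) for child in node.inputs)
    raise ValueError("unknown op: %r" % node.op)


a = constant(2)
b = constant(3)
c = a + b
print(c)       # → Node(op='add', inputs=2): a graph node, not a number
print(run(c))  # → 5: the value appears only when the graph is run
```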

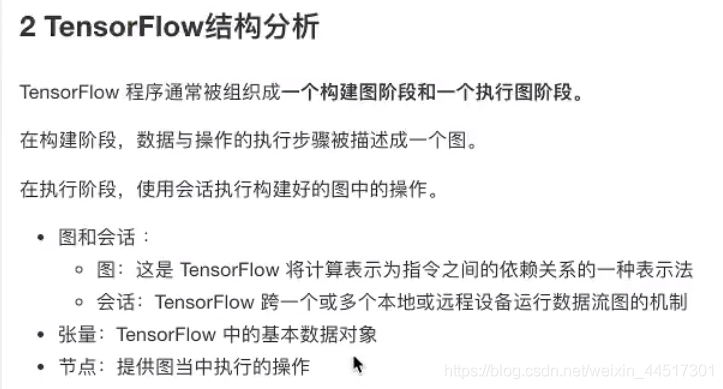

2 TensorFlow Structure Analysis

2.2 Graphs and TensorBoard

2.2.1 What Is a Graph Structure

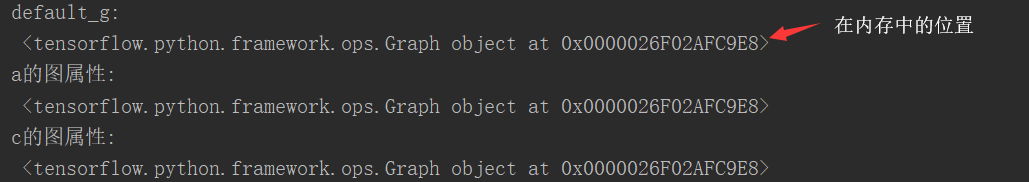

2.2.2 Graph-Related Operations

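```python
import tensorflow as tf


def tensorflow_demo():
    # Addition in TensorFlow
    a = tf.constant(2)
    b = tf.constant(3)
    # c = a + b  (using the overloaded operator directly is discouraged)
    c = tf.add(a, b)
    print("Result of the TensorFlow addition:\n", c)

    # Inspect the default graph
    # Method 1: call the function
    default_g = tf.get_default_graph()
    print("default_g:\n", default_g)
    # Method 2: read the .graph attribute
    print("Graph attribute of a:\n", a.graph)
    print("Graph attribute of c:\n", c.graph)

    # Open a session
    with tf.Session() as sess:
        c_t = sess.run(c)
        print("c_t:\n", c_t)
        print("Graph attribute of sess:\n", sess.graph)

    return None


if __name__ == '__main__':
    tensorflow_demo()
```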
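The idea behind `tf.get_default_graph()` and the `.graph` attribute can be sketched in plain Python: every operation is registered in whichever graph is currently the default, and remembers that graph. `Graph`, `get_default_graph` and `Op` below are hypothetical stand-ins, assumed only for illustration; this is not TensorFlow's implementation.

```python
class Graph:
    """A toy graph that keeps a list of the operations added to it."""

    def __init__(self):
        self.operations = []


# Created once at import time, like TensorFlow 1.x's global default graph
_default_graph = Graph()


def get_default_graph():
    return _default_graph


class Op:
    """A toy operation that registers itself in the default graph on creation."""

    def __init__(self, name, *inputs):
        self.name = name
        self.inputs = inputs
        self.graph = get_default_graph()  # analogous to tensor.graph
        self.graph.operations.append(self)


a = Op("Const")
b = Op("Const_1")
c = Op("Add", a, b)
# All three ops live in the same (default) graph
print(a.graph is c.graph is get_default_graph())        # → True
print([op.name for op in get_default_graph().operations])  # → ['Const', 'Const_1', 'Add']
```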

2.2.3 Creating a Graph

```python
import tensorflow as tf


def tensorflow_demo():
    # Create a new graph (tf.Graph(), not the existing default graph)
    new_g = tf.Graph()
    # Define operations inside the new graph
    with new_g.as_default():
        a_new = tf.constant(20)
        b_new = tf.constant(30)
        c_new = a_new + b_new
        print("c_new:\n", c_new)

    # The default session cannot run these ops,
    # so open a session bound to new_g
    with tf.Session(graph=new_g) as new_sess:
        c_new_value = new_sess.run(c_new)
        print("c_new_value:\n", c_new_value)
        print("Graph attribute of new_sess:\n", new_sess.graph)

    return None


if __name__ == '__main__':
    tensorflow_demo()
```
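`new_g.as_default()` temporarily makes the new graph the default, and a session can only run operations from the graph it is bound to. Below is a toy pure-Python model of both rules, assuming a simple stack of graphs is a fair mental model; `Graph`, `Op` and `Session` here are hypothetical classes, not TensorFlow's, and each `Op` simply stores a precomputed value. TensorFlow 1.x also reports an error when asked to run a tensor from a different graph, though its message differs.

```python
import contextlib


class Graph:
    """A toy graph holding the operations created while it is the default."""

    def __init__(self):
        self.operations = []

    @contextlib.contextmanager
    def as_default(self):
        # Push this graph as the default and restore the previous one afterwards
        _graph_stack.append(self)
        try:
            yield self
        finally:
            _graph_stack.pop()


_graph_stack = [Graph()]  # the bottom entry plays the role of the global default graph


def get_default_graph():
    return _graph_stack[-1]


class Op:
    """A toy op holding a precomputed value and the graph it was created in."""

    def __init__(self, name, value):
        self.name = name
        self.value = value
        self.graph = get_default_graph()
        self.graph.operations.append(self)


class Session:
    """A toy session bound to exactly one graph."""

    def __init__(self, graph=None):
        self.graph = graph if graph is not None else get_default_graph()

    def run(self, op):
        if op.graph is not self.graph:
            raise ValueError("%s is not an element of this session's graph" % op.name)
        return op.value


new_g = Graph()
with new_g.as_default():
    c_new = Op("c_new", 50)

print(Session(graph=new_g).run(c_new))  # works: same graph
try:
    Session().run(c_new)  # a default session is bound to the default graph
except ValueError as err:
    print("error:", err)
```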

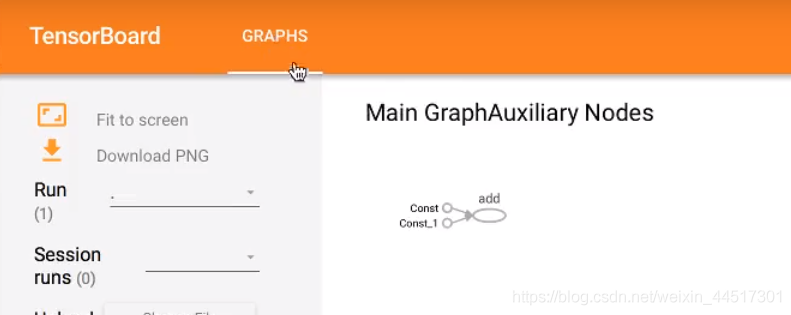

2.2.4 TensorBoard: Visualization

OP

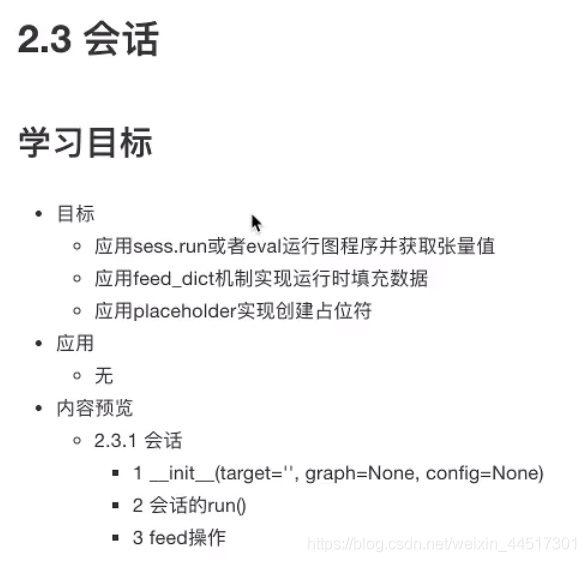

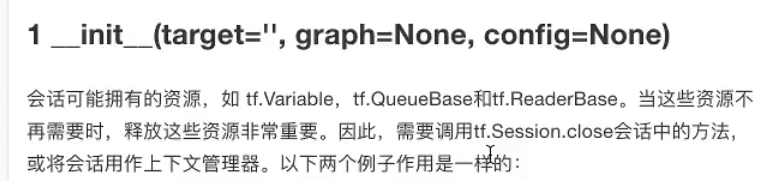

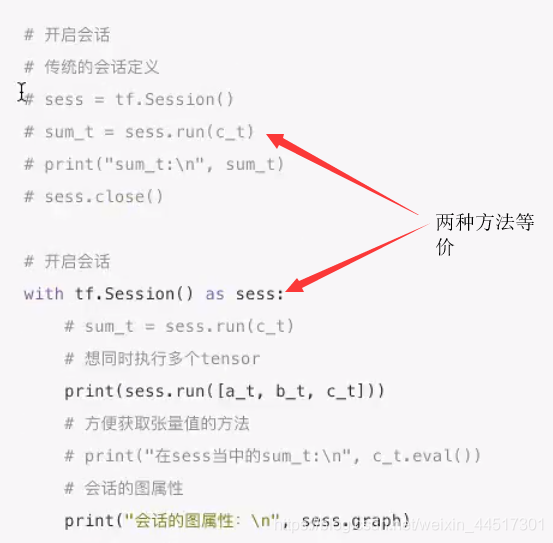

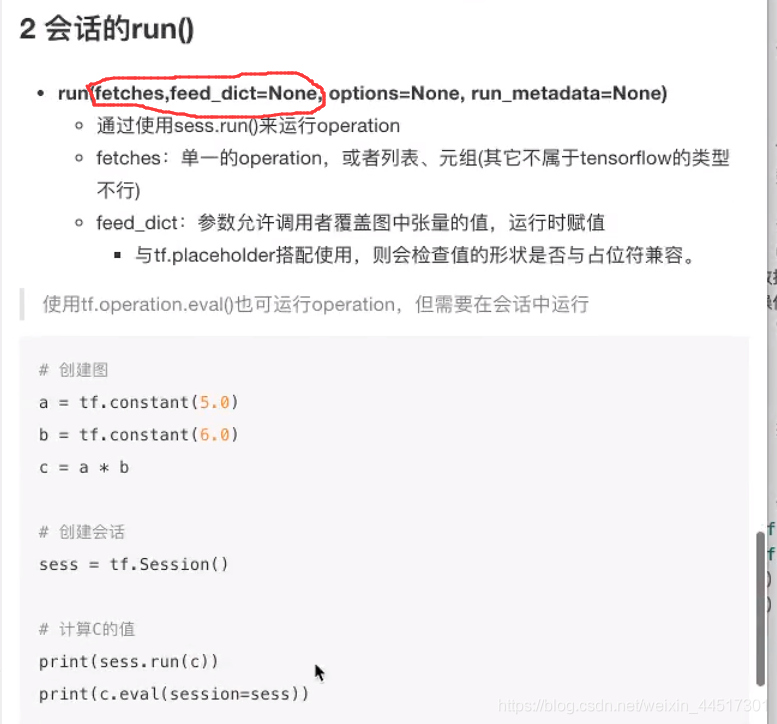

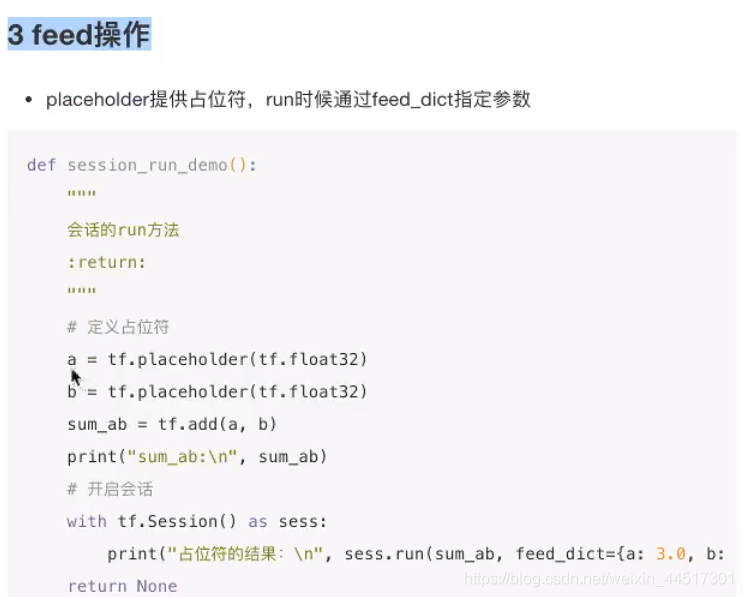

2.3 Session

2.3.1 Session

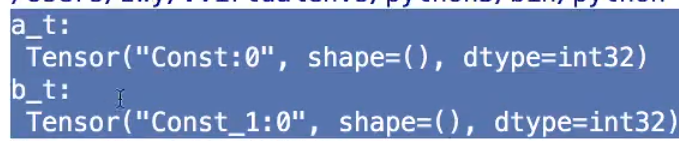

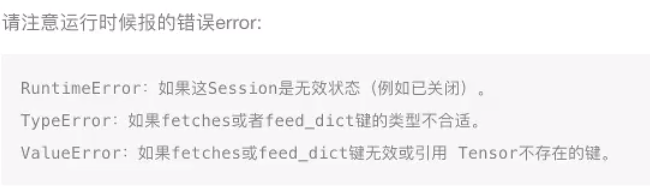

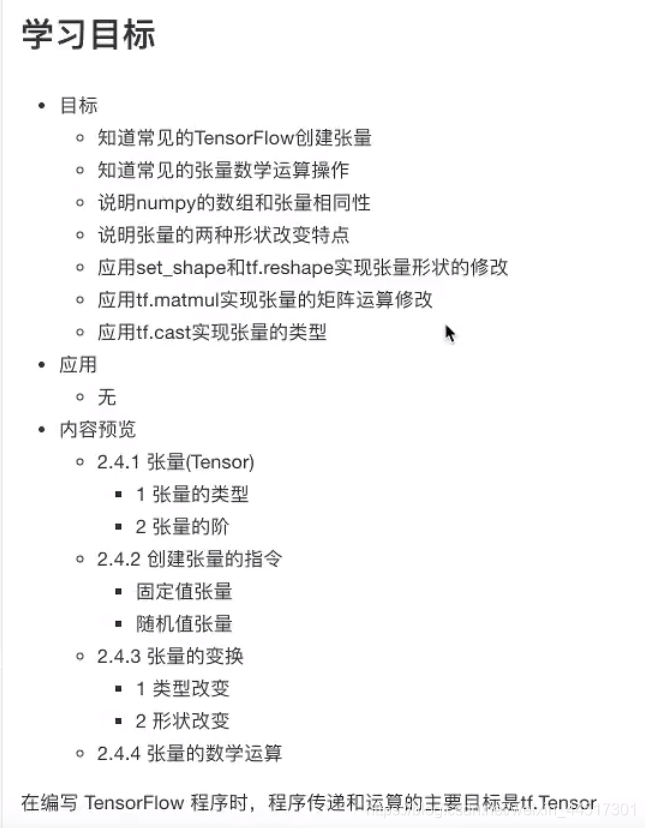

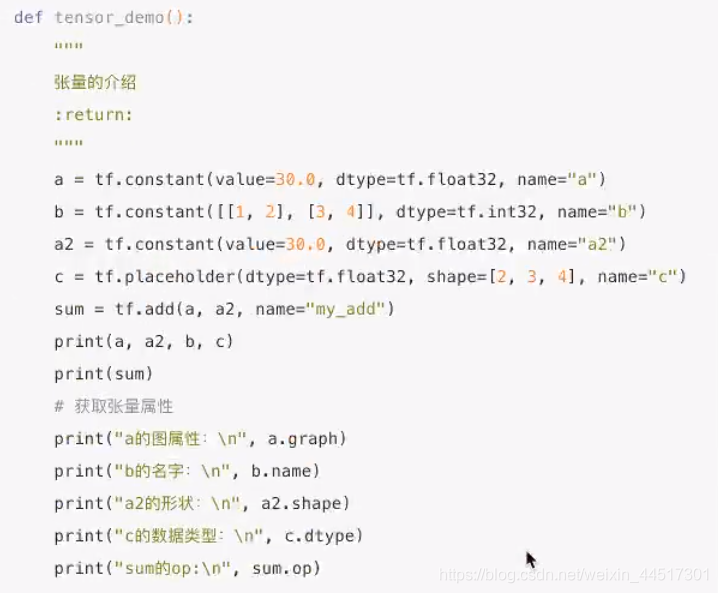

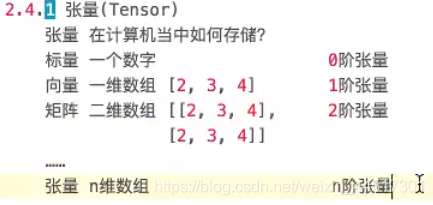

2.4 Tensors

2.4.1 Tensor

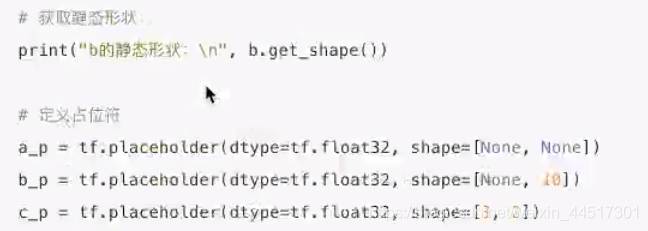

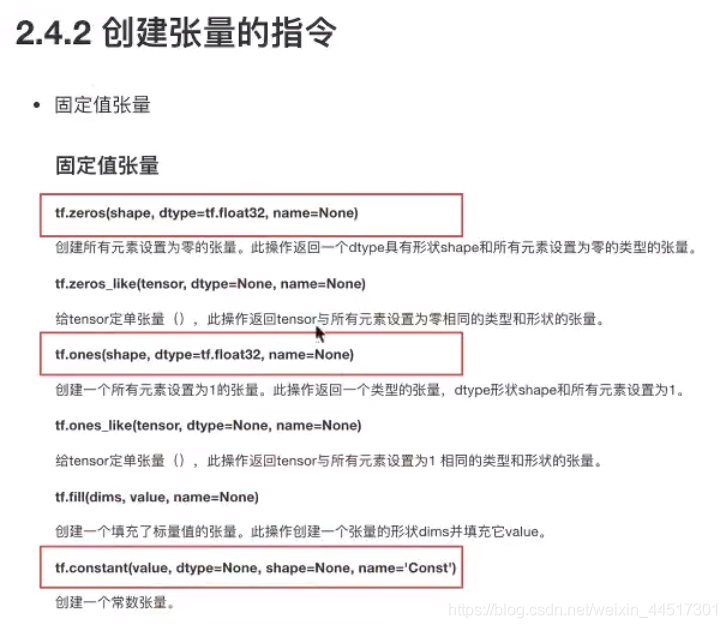

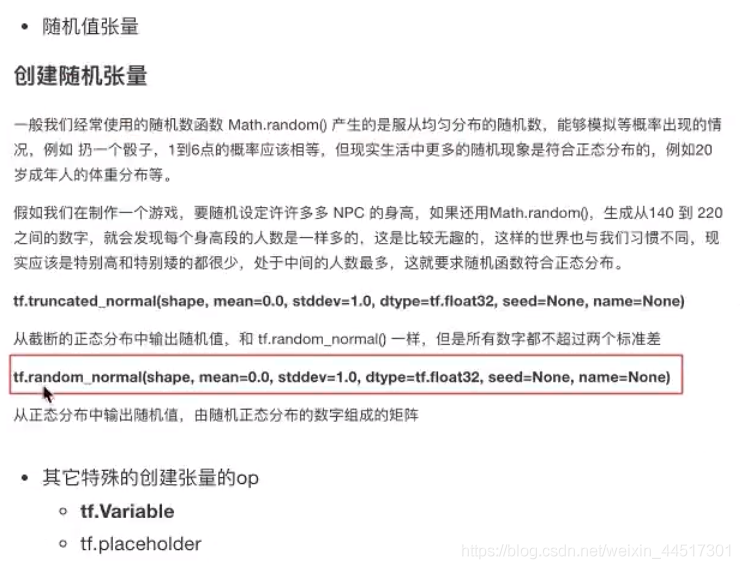

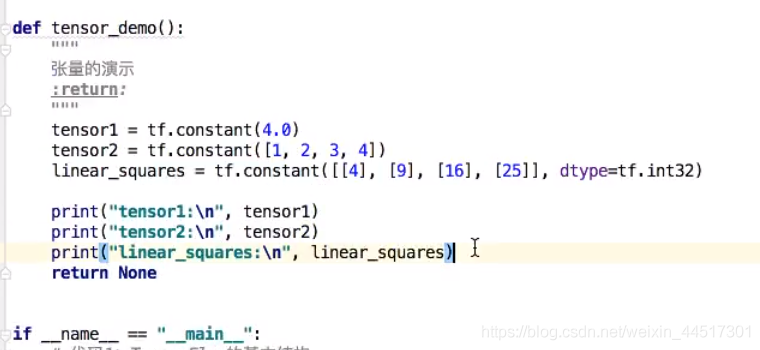

2.4.2 Creating Tensors

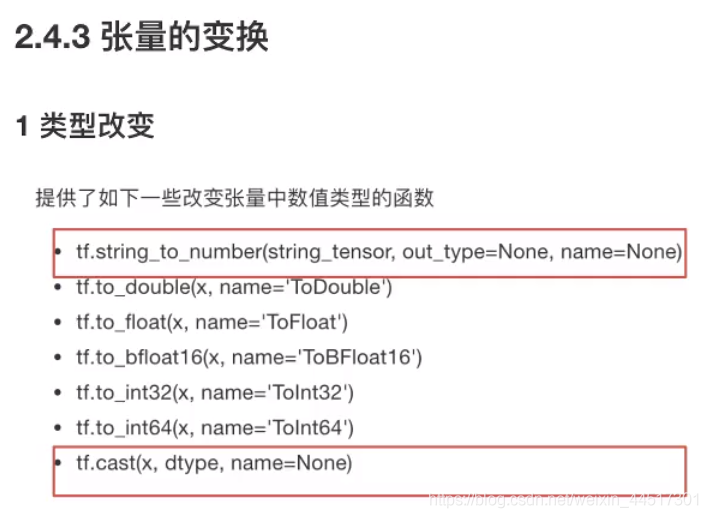

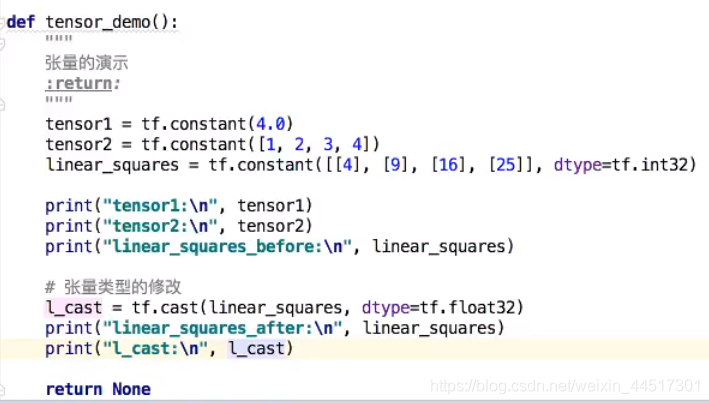

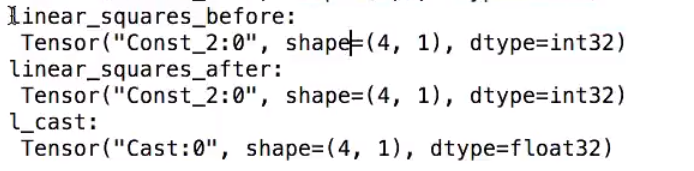

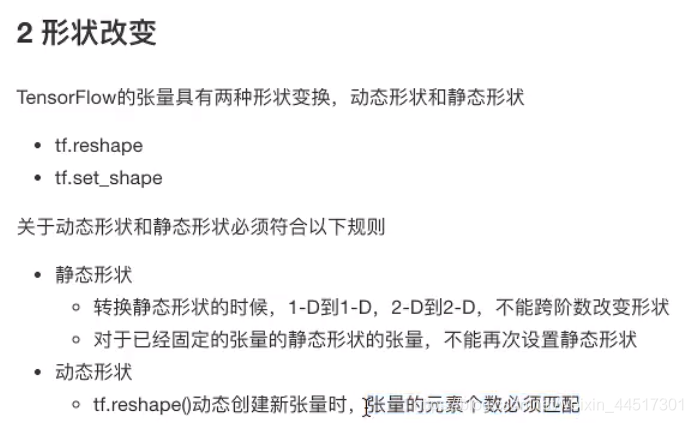

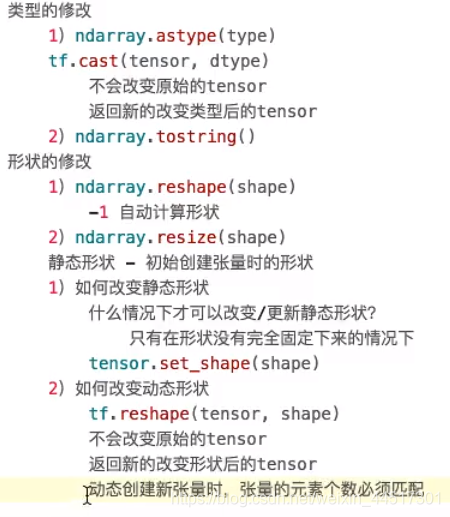

2.4.3 Tensor Transformations
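One common tensor transformation is changing the shape: a reshape keeps the elements in row-major order and only regroups them, so the total element count must stay the same, and TensorFlow's `tf.reshape` lets one dimension be `-1` so it is inferred. The plain-Python `reshape` below is a hypothetical sketch of that rule on nested lists, not TensorFlow code.

```python
from functools import reduce
from operator import mul


def flatten(nested):
    """Flatten nested lists in row-major (C) order."""
    if not isinstance(nested, list):
        return [nested]
    return [x for item in nested for x in flatten(item)]


def reshape(nested, shape):
    """Regroup the elements of `nested` into `shape`; at most one entry may be -1."""
    flat = flatten(nested)
    shape = list(shape)
    if not shape or any(d == 0 or d < -1 for d in shape):
        raise ValueError("shape entries must be positive integers or -1")
    if shape.count(-1) > 1:
        raise ValueError("only one dimension can be -1")
    if -1 in shape:
        # Infer the missing dimension from the total element count
        known = reduce(mul, (d for d in shape if d != -1), 1)
        if len(flat) % known != 0:
            raise ValueError("cannot infer the -1 dimension")
        shape[shape.index(-1)] = len(flat) // known
    if reduce(mul, shape, 1) != len(flat):
        raise ValueError("cannot reshape %d elements into %s" % (len(flat), shape))

    def build(items, dims):
        # Split the flat list into dims[0] blocks of equal size, then recurse
        if len(dims) == 1:
            return items
        step = reduce(mul, dims[1:], 1)  # elements per sub-block
        return [build(items[i * step:(i + 1) * step], dims[1:]) for i in range(dims[0])]

    return build(flat, shape)


print(reshape([[1, 2, 3], [4, 5, 6]], [3, 2]))  # → [[1, 2], [3, 4], [5, 6]]
print(reshape([[1, 2, 3], [4, 5, 6]], [-1]))    # → [1, 2, 3, 4, 5, 6]
```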

2.4.4 Mathematical Operations on Tensors

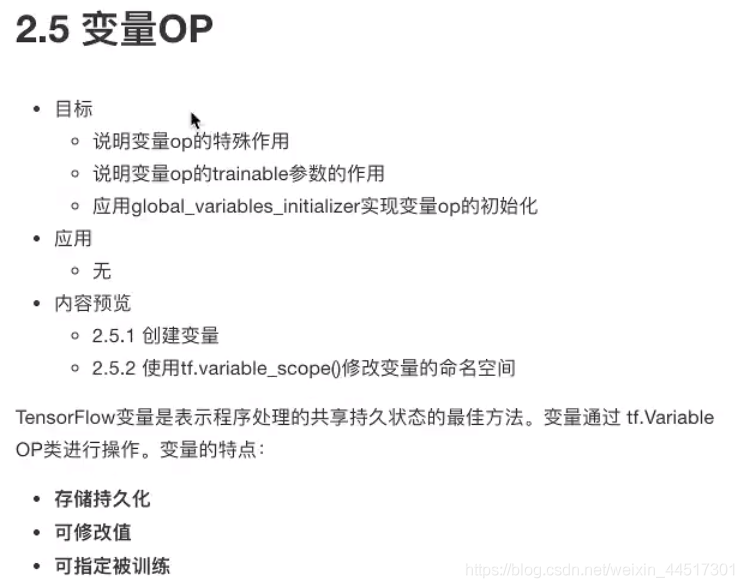

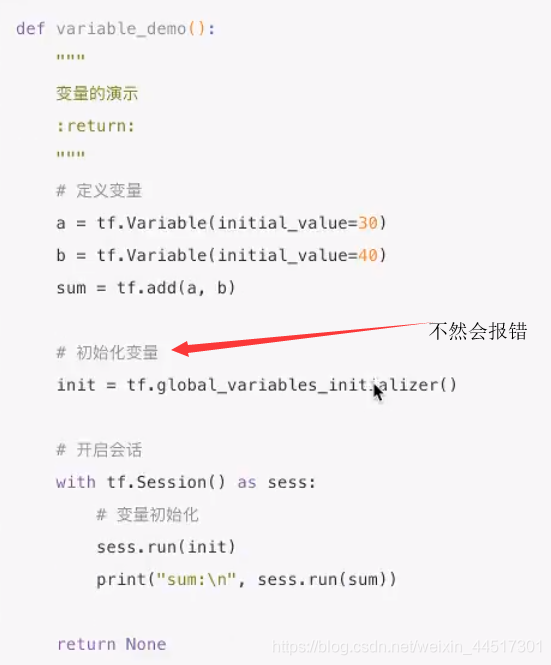

2.5 Variables

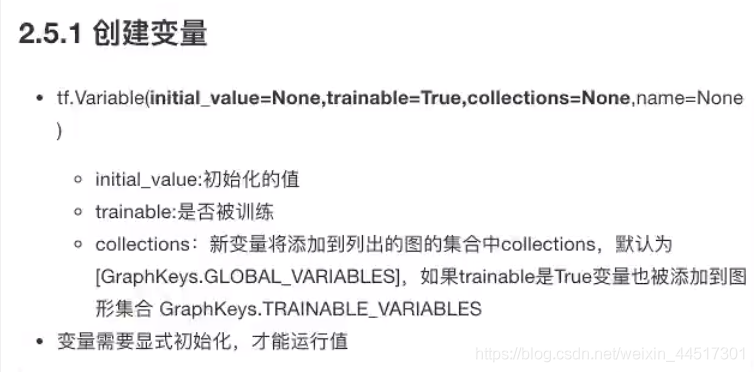

2.5.1 Creating Variables

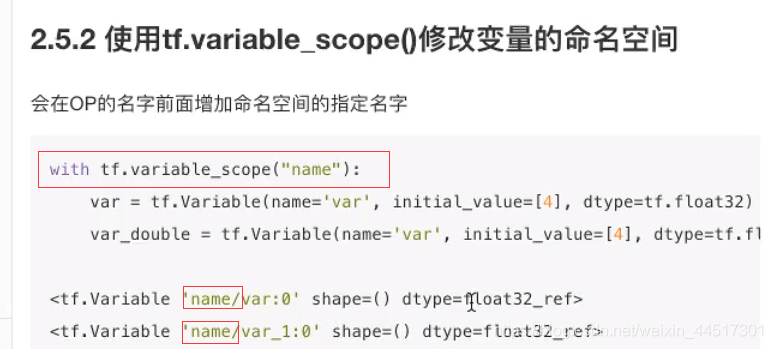

2.5.2 tf.variable_scope: Changing a Variable's Namespace

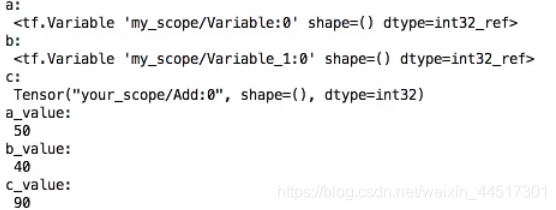

Code for creating variables, initializing them, and changing the namespace:

```python
import os

# Suppress TensorFlow's INFO and WARNING logs; set this before importing TensorFlow
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def variable_demo():
    """
    Variable demo
    :return:
    """
    # Create variables inside a variable scope
    with tf.variable_scope("my_scope"):
        a = tf.Variable(initial_value=50)
        b = tf.Variable(initial_value=40)
    with tf.variable_scope("your_scope"):
        c = tf.add(a, b)
    print("a:\n", a)
    print("b:\n", b)
    print("c:\n", c)

    # Variables must be explicitly initialized
    init = tf.global_variables_initializer()

    # Open a session
    with tf.Session() as sess:
        # Run the initializer
        sess.run(init)
        a_value, b_value, c_value = sess.run([a, b, c])
        print("a_value:\n", a_value)
        print("b_value:\n", b_value)
        print("c_value:\n", c_value)

    return None


if __name__ == '__main__':
    variable_demo()
```
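Inside `tf.variable_scope("my_scope")`, TensorFlow 1.x prefixes the names of the ops it creates, so `a` and `b` above print with names along the lines of `my_scope/Variable:0` and `my_scope/Variable_1:0` (the `:0` marks the op's first output). The plain-Python class below is a hypothetical, simplified model of that naming rule (scope prefix, then `_1`, `_2`, ... for repeated names), not TensorFlow's actual implementation; the `:0` suffix is omitted.

```python
from collections import defaultdict
from contextlib import contextmanager


class NameRegistry:
    """Hypothetical model of scoped, de-duplicated op names (illustration only)."""

    def __init__(self):
        self._scopes = []
        self._counts = defaultdict(int)

    @contextmanager
    def scope(self, name):
        # Names created inside this block are prefixed with "name/"
        self._scopes.append(name)
        try:
            yield
        finally:
            self._scopes.pop()

    def unique_name(self, base):
        full = "/".join(self._scopes + [base])
        count = self._counts[full]
        self._counts[full] += 1
        # The first use keeps the plain name; repeats get _1, _2, ...
        return full if count == 0 else "%s_%d" % (full, count)


names = NameRegistry()
with names.scope("my_scope"):
    a_name = names.unique_name("Variable")
    b_name = names.unique_name("Variable")
with names.scope("your_scope"):
    c_name = names.unique_name("Add")
print(a_name, b_name, c_name)  # → my_scope/Variable my_scope/Variable_1 your_scope/Add
```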

2.6 High-Level APIs

2.6.1 Other Basic APIs

2.6.2 High-Level APIs

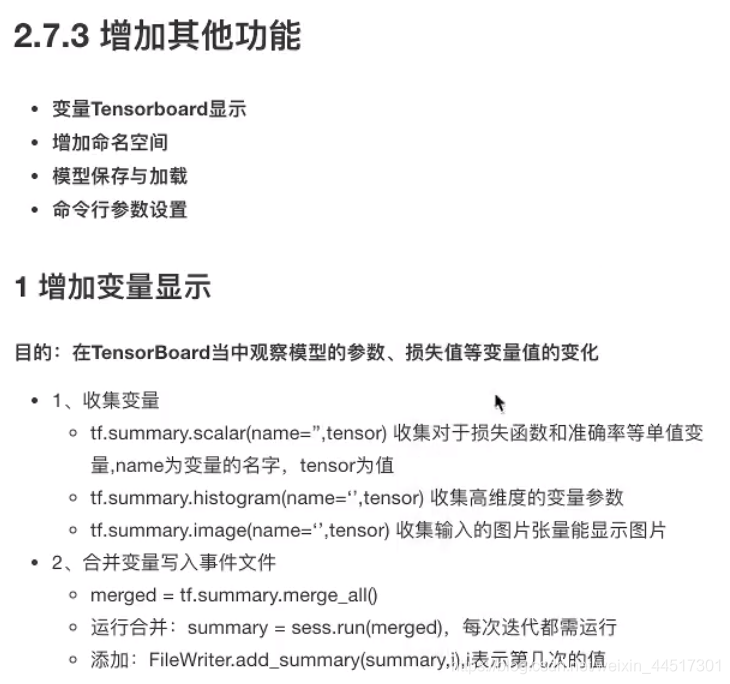

2.7 Implementing Linear Regression

2.7.1 Review of Linear Regression Principles

Three steps:

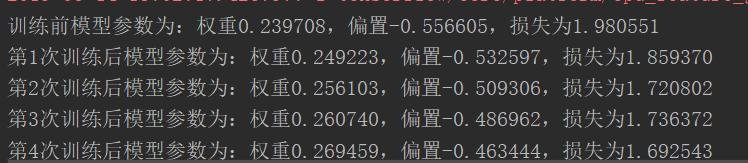
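For a single feature, which is the case in the code that follows (the true relationship there is y = 0.8x + 0.7), the three steps can be written out as below. This is the standard formulation matching `tf.reduce_mean(tf.square(y_predict - y_true))` and plain gradient descent with learning rate α (0.01 in the code):

$$
\begin{aligned}
&\text{1) Model:} && \hat{y}_i = w x_i + b \\
&\text{2) Loss (mean squared error):} && L(w, b) = \frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2 \\
&\text{3) Gradient descent step:} && w \leftarrow w - \alpha\,\frac{2}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)x_i, \qquad b \leftarrow b - \alpha\,\frac{2}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)
\end{aligned}
$$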

```python
import tensorflow as tf


def linear_regression():
    """
    A self-implemented linear regression
    :return:
    """
    # 1) Prepare the data
    X = tf.random_normal(shape=[100, 1])
    y_true = tf.matmul(X, [[0.8]]) + 0.7

    # 2) Build the model
    # Model parameters are defined as variables
    weights = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]))
    bias = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]))
    y_predict = tf.matmul(X, weights) + bias

    # 3) Build the loss function (mean squared error)
    error = tf.reduce_mean(tf.square(y_predict - y_true))

    # 4) Optimize the loss with gradient descent
    optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # Explicitly initialize the variables
    init = tf.global_variables_initializer()

    # Open a session
    with tf.Session() as sess:
        # Initialize the variables
        sess.run(init)
        # Inspect the model parameters right after initialization
        print("Before training: weight %f, bias %f, loss %f" % (weights.eval(), bias.eval(), error.eval()))
        # Start training
        for i in range(100):
            sess.run(optimizer)
            print("After step %d: weight %f, bias %f, loss %f" % (i + 1, weights.eval(), bias.eval(), error.eval()))

    return None


if __name__ == '__main__':
    linear_regression()
```
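For comparison, the same four steps can be written without TensorFlow by computing the mean-squared-error gradients by hand. This is a minimal pure-Python sketch, not the TensorFlow code above: `linear_regression_gd` is a hypothetical name, and unlike `tf.random_normal`, which draws a fresh batch of X on every `sess.run`, it samples one fixed dataset up front.

```python
import random


def linear_regression_gd(n_samples=100, steps=100, learning_rate=0.01, seed=0):
    """Fit y = w*x + b by full-batch gradient descent on the MSE loss."""
    rng = random.Random(seed)
    # 1) Prepare the data: x ~ N(0, 1), y = 0.8 * x + 0.7 (same targets as the TF demo)
    xs = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    ys = [0.8 * x + 0.7 for x in xs]

    # 2) Model parameters, randomly initialized like tf.random_normal
    w = rng.gauss(0.0, 1.0)
    b = rng.gauss(0.0, 1.0)

    history = []
    for _ in range(steps):
        # 3) Loss: mean squared error between predictions and targets
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / n_samples
        history.append(loss)
        # 4) Gradients of the MSE with respect to w and b, then one descent step
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n_samples
        grad_b = 2.0 * sum(errors) / n_samples
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history


if __name__ == "__main__":
    w, b, history = linear_regression_gd(steps=1000, learning_rate=0.1)
    print("weight %f, bias %f, final loss %f" % (w, b, history[-1]))
```

With the TF demo's settings (`learning_rate=0.01`, 100 steps) the loss falls steadily, but the parameters are usually still some way from 0.8 and 0.7; more steps or a larger rate bring them much closer.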

```python
import os

# Suppress TensorFlow's INFO and WARNING logs; set this before importing TensorFlow
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf


def linear_regression():
    """
    A self-implemented linear regression, with TensorBoard summaries and checkpoints
    :return:
    """
    with tf.variable_scope("prepare_data"):
        # 1) Prepare the data
        X = tf.random_normal(shape=[100, 1], name="feature")
        y_true = tf.matmul(X, [[0.8]]) + 0.7

    with tf.variable_scope("create_model"):
        # 2) Build the model; the parameters are variables
        weights = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]), name="Weights")
        bias = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]), name="Bias")
        y_predict = tf.matmul(X, weights) + bias

    with tf.variable_scope("loss_function"):
        # 3) Build the loss function (mean squared error)
        error = tf.reduce_mean(tf.square(y_predict - y_true))

    with tf.variable_scope("optimizer"):
        # 4) Optimize the loss with gradient descent
        optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # (2) Collect the values to show in TensorBoard
    tf.summary.scalar("error", error)
    tf.summary.histogram("weights", weights)
    tf.summary.histogram("bias", bias)

    # (3) Merge all summaries into a single op
    merged = tf.summary.merge_all()

    # Create a Saver object for saving and restoring the model
    saver = tf.train.Saver()

    # Explicitly initialize the variables
    init = tf.global_variables_initializer()

    # Open a session
    with tf.Session() as sess:
        # Initialize the variables
        sess.run(init)

        # (1) Create the event file
        file_writer = tf.summary.FileWriter("./tmp/linear", graph=sess.graph)

        # Inspect the model parameters right after initialization
        print("Before training: weight %f, bias %f, loss %f" % (weights.eval(), bias.eval(), error.eval()))

        # # Start training
        # for i in range(100):
        #     sess.run(optimizer)
        #     print("After step %d: weight %f, bias %f, loss %f" % (i + 1, weights.eval(), bias.eval(), error.eval()))
        #
        #     # Run the merged summary op
        #     summary = sess.run(merged)
        #     # Write this iteration's summaries to the event file
        #     file_writer.add_summary(summary, i)
        #
        #     # Save the model every 10 steps
        #     if i % 10 == 0:
        #         saver.save(sess, "./tmp/model/my_linear.ckpt")

        # Load the model if a checkpoint exists
        if os.path.exists("./tmp/model/checkpoint"):
            saver.restore(sess, "./tmp/model/my_linear.ckpt")
            print("After training: weight %f, bias %f, loss %f" % (weights.eval(), bias.eval(), error.eval()))

    return None


if __name__ == '__main__':
    linear_regression()
```
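The commented-out loop and the restore step above follow a common pattern: save the parameters periodically while training, and on the next run restore them if a checkpoint index file exists. Here is a minimal plain-Python sketch of that pattern, assuming JSON files as storage; the file name `params.json` and the functions `save_checkpoint`, `restore_checkpoint` and `train` are hypothetical, and this is not the format `tf.train.Saver` writes.

```python
import json
import os
import tempfile


def save_checkpoint(params, model_dir):
    """Write params to model_dir/params.json and record it in an index file."""
    os.makedirs(model_dir, exist_ok=True)  # make sure the directory exists
    path = os.path.join(model_dir, "params.json")
    with open(path, "w") as f:
        json.dump(params, f)
    # Index file playing the role of TensorFlow's "checkpoint" file
    with open(os.path.join(model_dir, "checkpoint"), "w") as f:
        f.write(path)
    return path


def restore_checkpoint(model_dir):
    """Return the saved params, or None when no checkpoint exists yet."""
    index = os.path.join(model_dir, "checkpoint")
    if not os.path.exists(index):
        return None
    with open(index) as f:
        path = f.read().strip()
    with open(path) as f:
        return json.load(f)


def train(model_dir, steps, save_every=10):
    """Resume from the latest checkpoint if present, then run `steps` more steps."""
    params = restore_checkpoint(model_dir) or {"step": 0, "weight": 0.0}
    for _ in range(steps):
        params["step"] += 1
        # Stand-in for one optimizer update: move the weight toward 0.8
        params["weight"] += 0.1 * (0.8 - params["weight"])
        if params["step"] % save_every == 0:
            save_checkpoint(params, model_dir)
    return params


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as model_dir:
        print(train(model_dir, 25)["step"])               # trained to step 25, saved at 10 and 20
        print(restore_checkpoint(model_dir)["step"])      # the latest checkpoint is step 20
        print(train(model_dir, 5)["step"])                # resumes from 20 and ends at 25
```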

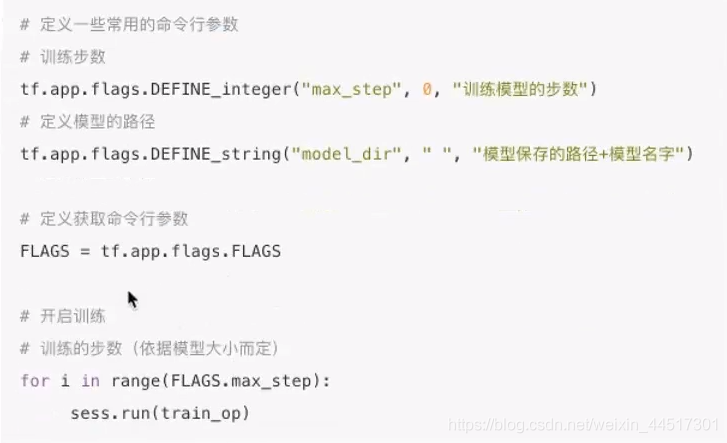

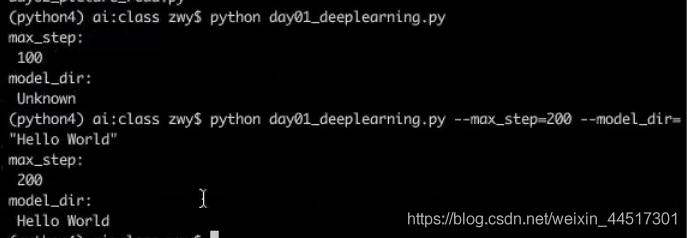

```python
import tensorflow as tf

# 1) Define command-line flags
tf.app.flags.DEFINE_integer("max_step", 100, "Number of training steps")
tf.app.flags.DEFINE_string("model_dir", "Unknown", "Model save path + model name")

# 2) Shorter alias for the flags object
FLAGS = tf.app.flags.FLAGS


def command_demo():
    """
    Command-line flags demo
    :return:
    """
    print("max_step:\n", FLAGS.max_step)
    print("model_dir:\n", FLAGS.model_dir)
    return None


if __name__ == '__main__':
    command_demo()
```
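`tf.app.flags` belongs to the TensorFlow 1.x API (in later 1.x releases it is a thin wrapper around the absl flags library). For comparison, here is a sketch of the same two flags with Python's standard `argparse` module; the flag names and defaults mirror the code above, while `parse_flags` is a hypothetical helper name.

```python
import argparse


def parse_flags(argv=None):
    """Parse --max_step and --model_dir; argv=None means use sys.argv[1:]."""
    parser = argparse.ArgumentParser(description="Command-line flags demo")
    parser.add_argument("--max_step", type=int, default=100,
                        help="number of training steps")
    parser.add_argument("--model_dir", type=str, default="Unknown",
                        help="model save path + model name")
    return parser.parse_args(argv)


def command_demo(flags):
    print("max_step:\n", flags.max_step)
    print("model_dir:\n", flags.model_dir)


if __name__ == "__main__":
    command_demo(parse_flags())
```

For example, running the script with `--max_step=200 --model_dir=./tmp/model/my_linear.ckpt` overrides both defaults.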

Call it directly in the main function:

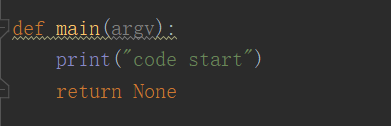

This calls main(argv), which here displays the path.