原理

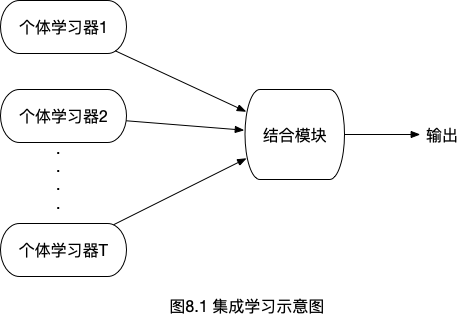

集成学习通过构建多个学习器,然后综合考虑多个学习器的结果来完成任务,任务本身可以是分类的,也可以是回归的。

多个学习器可以是弱学习器,也可以是强学习器,PS:弱学习器是指正确率在0.5左右的学习器,也就是略优于随机猜测(对于2分类问题,不会差于随机猜测,因为如果正确率=0.4,我只要取相反的类别,就可以保证正确率是0.6了),而强学习器是指正确率较高的学习器。

集成学习的核心问题是如何产生并结合”好而不同”的个体学习器?

分类

按照个体学习器是否相同分为:

1. 同质集成(homogeneous)

同质集成的意思是个体学习器都是同一个类型的,比如都是决策树,都是神经网络。这种情况的个体学习器,也称为基学习器,相应的算法称为基学习算法。

2. 异质集成(heterogeneous)

对应于上面的同质集成,我们就可以很容易理解异质集成,就是个体学习器不是同一个算法,比如某些是决策树,某些是神经网络。这种情况,一般不称为基学习器,而是叫做组件学习器。

按照个体学习器的生成方式分为:

1. boosting

个体学习器间存在强依赖关系,必须串行的生成,就是说只能先生成第一个学习器,再生成第二个学习器,以此类推…,最后将所有的学习器组合起来。

2. bagging 和 random forest

个体学习器间不存在依赖关系,可以并行生成,也就是可以同时生成N个学习器,然后再组合起来。

boosting

boosting是一族学习算法,也就是说是一类算法的统称,基本遵循以下学习过程:首先从初始训练集训练出一个基学习器,再根据基学习器的表现对训练样本的分布进行调整,使得先前训练错误的样本可以在下一轮迭代时更受关注,然后基于调整后的样本分布来训练下一个基学习器,如此反复,直到训练T个基学习器为止,(T是人工指定的一个数),最终将这个T个基学习器进行加权结合。

boosting可以将弱学习器提升为强学习器,最着名的代表就是Adaboost.

AdaBoost

Adaptive Boosting

Adaboost有很多种推导方式,这里讲解最易于理解的加法模型,即最终的结合模块采用加权T个基分类器的方式输出。

AdaBoost只能用于2分类任务,用于多分类和回归需要对算法进行修改

%3D%5Csum%7Bt%3D1%7D%5E%7BT%7D%20%5Calpha(t)h_t(x)%0A#card=math&code=H%28x%29%3D%5Csum%7Bt%3D1%7D%5E%7BT%7D%20%5Calpha%28t%29h_t%28x%29%0A&height=53&width=155)

其中,#card=math&code=H%28x%29&height=20&width=37)表示最终的预测结果,

#card=math&code=%5Calpha%28t%29&height=20&width=29)表示每个基分类器的权重,

#card=math&code=h_t%28x%29&height=20&width=38)表示基分类器的预测结果。

算法过程

输入: 训练集D={(x1,y1), (x2,y2),…,(xm,ym)};基学习器:G;训练轮数T。

过程:

- 初始化时,每个样本的权重一致,因为样本总数为m个,所以,每个样本的权重是

。

%3D%5Cfrac%7B1%7D%7Bm%7D%0A#card=math&code=D_1%28x%29%3D%5Cfrac%7B1%7D%7Bm%7D%0A&height=37&width=87)

- for t = 1,2,…,T do ,总共T轮迭代,也就是依次训练T个基分类器,每个训练过程如下:

- 基于分布

,从训练数据集D中训练出分类器

。

- 基于分布

%0A#card=math&code=h_t%3DG%28D%2CD_t%29%0A&height=20&width=105)

- 计算

的误差,

%5Cneq%20f(x))%0A#card=math&code=et%3DP%7Bx%20%5Csim%20D_t%7D%28h_t%28x%29%5Cneq%20f%28x%29%29%0A&height=20&width=185)

- 如果误差

,那么就进行下一轮迭代

- 确定第t个基分类器

的权重,可以看到,基分类器的误差

越大,那么权重就越小

%3D%5Cfrac%7B1%7D%7B2%7D%20ln(%5Cfrac%7B1-e_t%7D%7Be_t%7D)%0A#card=math&code=%5Calpha%28t%29%3D%5Cfrac%7B1%7D%7B2%7D%20ln%28%5Cfrac%7B1-e_t%7D%7Be_t%7D%29%0A&height=40&width=144)

- 更新样本分布,

是规范化因子

%26%3D%5Cfrac%7BDt(x)%7D%7BZ_t%7D%20%5Ctimes%20%0A%5Cbegin%7Bcases%7D%0A%20%20%20%20e%5E%7B-%5Calpha(t)%7D%20%26%5Ctext%7Bif%20%7D%20h(x)%3Df(x)%20%5C%5C%0A%20%20%20%20e%5E%7B%5Calpha(t)%7D%20%26%5Ctext%7Bif%20%7D%20h(x)%20%5Cneq%20f(x)%0A%5Cend%7Bcases%7D%20%5C%5C%0A%0A%26%3D%20%5Cfrac%7BD_t(x)e%5E%7B-%5Calpha%20f(x)h_t(x)%7D%7D%7BZ_t%7D%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0AD%7Bt%2B1%7D%28x%29%26%3D%5Cfrac%7BD_t%28x%29%7D%7BZ_t%7D%20%5Ctimes%20%0A%5Cbegin%7Bcases%7D%0A%20%20%20%20e%5E%7B-%5Calpha%28t%29%7D%20%26%5Ctext%7Bif%20%7D%20h%28x%29%3Df%28x%29%20%5C%5C%0A%20%20%20%20e%5E%7B%5Calpha%28t%29%7D%20%26%5Ctext%7Bif%20%7D%20h%28x%29%20%5Cneq%20f%28x%29%0A%5Cend%7Bcases%7D%20%5C%5C%0A%0A%26%3D%20%5Cfrac%7BD_t%28x%29e%5E%7B-%5Calpha%20f%28x%29h_t%28x%29%7D%7D%7BZ_t%7D%0A%5Cend%7Baligned%7D%0A&height=93&width=330)

输出:

%3Dsign%20%5Cbigg(%5Csum%7Bt%3D1%7D%5E%7BT%7D%20%5Calpha_t%20h_t(x)%20%5Cbigg)%0A#card=math&code=H%28X%29%3Dsign%20%5Cbigg%28%5Csum%7Bt%3D1%7D%5E%7BT%7D%20%5Calpha_t%20h_t%28x%29%20%5Cbigg%29%0A&height=53&width=206)

AdaBoost算法的理解

其实这里有个问题没懂,为什么我们要推导最小化损失函数,并证明后面的两个公式?也就是为什么我们的每一步的目的都是为了最小化损失函数就是对的了?

最小化损失函数的推导

先来看指数损失函数,意思是关于D()的一个期望值,至于为什么要采用这个指数损失函数,算是个问题:

%3DEx%20%5Csim%20D%5Be%5E%7B-f(x)H(x)%7D%5D%0A#card=math&code=l%7Bexp%7D%28H%7CD%29%3DE_x%20%5Csim%20D%5Be%5E%7B-f%28x%29H%28x%29%7D%5D%0A&height=25&width=228)

因为AdaBoost的输出空间为{-1, +1},因此,上式就可以写为:

%7D%26%3DP(f(x)%3D1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7B-(f(x)%3D1)H(x)%7D%20%2B%20P(f(x%3D-1)%20%5Cmid%20x)%20%5Ccdot%20e%5E%7B-(f(x)%3D-1)H(x)%7D%20%5C%5C%0A%26%3DP(f(x)%3D1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7B-1%20%5Ccdot%20H(x)%7D%20%2B%20P(f(x)%3D-1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7B-(-1)%20%5Ccdot%20H(x)%7D%20%5C%5C%0A%26%3DP(f(x)%3D1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7B-H(x)%7D%20%2B%20P(f(x)%3D-1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7BH(x)%7D%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0Al_%7Bexp%7D%7B%28H%20%5Cmid%20D%29%7D%26%3DP%28f%28x%29%3D1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7B-%28f%28x%29%3D1%29H%28x%29%7D%20%2B%20P%28f%28x%3D-1%29%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7B-%28f%28x%29%3D-1%29H%28x%29%7D%20%5C%5C%0A%26%3DP%28f%28x%29%3D1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7B-1%20%5Ccdot%20H%28x%29%7D%20%2B%20P%28f%28x%29%3D-1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7B-%28-1%29%20%5Ccdot%20H%28x%29%7D%20%5C%5C%0A%26%3DP%28f%28x%29%3D1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7B-H%28x%29%7D%20%2B%20P%28f%28x%29%3D-1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7BH%28x%29%7D%0A%5Cend%7Baligned%7D%0A&height=74&width=585)

如果H(x)能令%7D#card=math&code=l_%7Bexp%7D%7B%28H%20%5Cmid%20D%29%7D&height=21&width=81)最小化,则考虑对上式求偏导:

怎么理解求最小值就是偏导=0?

%7D%7D%7D%7B%5Cpartial%20H(x)%7D%26%3D%5Cfrac%7B%5Cpartial%20(%7BP(f(x)%3D1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7B-H(x)%7D%20%2B%20P(f(x)%3D-1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7BH(x)%7D%7D)%7D%20%7B%5Cpartial%20H(x)%7D%20%5C%5C%0A%26%3D-P(f(x)%3D1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7B-H(x)%7D%20%2B%20P(f(x)%3D-1%20%5Cmid%20x)%20%5Ccdot%20e%5E%7BH(x)%7D%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0A%5Cfrac%7B%5Cpartial%7Bl_%7Bexp%7D%7B%28H%20%5Cmid%20D%29%7D%7D%7D%7B%5Cpartial%20H%28x%29%7D%26%3D%5Cfrac%7B%5Cpartial%20%28%7BP%28f%28x%29%3D1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7B-H%28x%29%7D%20%2B%20P%28f%28x%29%3D-1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7BH%28x%29%7D%7D%29%7D%20%7B%5Cpartial%20H%28x%29%7D%20%5C%5C%0A%26%3D-P%28f%28x%29%3D1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7B-H%28x%29%7D%20%2B%20P%28f%28x%29%3D-1%20%5Cmid%20x%29%20%5Ccdot%20e%5E%7BH%28x%29%7D%0A%5Cend%7Baligned%7D%0A&height=74&width=517)

令上式=0,也就是%7D#card=math&code=l_%7Bexp%7D%7B%28H%20%5Cmid%20D%29%7D&height=21&width=81)最小化,可以解得:

%20%3D%20%7B1%20%5Cover%202%7D%20ln%5Cfrac%7BP(f(x)%3D1%20%5Cmid%20x)%7D%7BP(f(x)%3D-1%20%5Cmid%20x)%7D%20%0A#card=math&code=H%28x%29%20%3D%20%7B1%20%5Cover%202%7D%20ln%5Cfrac%7BP%28f%28x%29%3D1%20%5Cmid%20x%29%7D%7BP%28f%28x%29%3D-1%20%5Cmid%20x%29%7D%20%0A&height=47&width=221)

因此有(这里忽略了等于的情况):

)%26%3Dsign%5Cbigg(%20%7B1%20%5Cover%202%7D%20ln%5Cfrac%7BP(f(x)%3D1%20%5Cmid%20x)%7D%7BP(f(x)%3D-1%20%5Cmid%20x)%7D%20%20%20%5Cbigg)%20%5C%5C%0A%26%3D%5Cbegin%7Bcases%7D%0A%20%20%201%20%26%5Ctext%7Bif%20%7D%20P(f(x)%3D1%20%5Cmid%20x)%20%3E%20P(f(x)%3D-1%20%5Cmid%20x)%20%5C%5C%0A%20%20%20-1%20%26%5Ctext%7Bif%20%7D%20P(f(x)%3D1%20%5Cmid%20x)%20%3C%20P(f(x)%3D-1%20%5Cmid%20x)%0A%5Cend%7Bcases%7D%20%5C%5C%0A%26%3D%20%5Carg%5Cmax%7By%20%5Cin%20%7B%5C%7B1%2C%20-1%5C%7D%7D%7D%20%7BP(f(x)%3Dy%20%5Cmid%20x)%7D%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0Asign%28H%28x%29%29%26%3Dsign%5Cbigg%28%20%7B1%20%5Cover%202%7D%20ln%5Cfrac%7BP%28f%28x%29%3D1%20%5Cmid%20x%29%7D%7BP%28f%28x%29%3D-1%20%5Cmid%20x%29%7D%20%20%20%5Cbigg%29%20%5C%5C%0A%26%3D%5Cbegin%7Bcases%7D%0A%20%20%201%20%26%5Ctext%7Bif%20%7D%20P%28f%28x%29%3D1%20%5Cmid%20x%29%20%3E%20P%28f%28x%29%3D-1%20%5Cmid%20x%29%20%5C%5C%0A%20%20%20-1%20%26%5Ctext%7Bif%20%7D%20P%28f%28x%29%3D1%20%5Cmid%20x%29%20%3C%20P%28f%28x%29%3D-1%20%5Cmid%20x%29%0A%5Cend%7Bcases%7D%20%5C%5C%0A%26%3D%20%5Carg%5Cmax%7By%20%5Cin%20%7B%5C%7B1%2C%20-1%5C%7D%7D%7D%20%7BP%28f%28x%29%3Dy%20%5Cmid%20x%29%7D%0A%5Cend%7Baligned%7D%0A&height=127&width=440)

这意味着sign(H(x))达到了贝叶斯最优错误率,换言之,若指数函数损失最小化,即%20%3D%20%7B1%20%5Cover%202%7D%20ln%5Cfrac%7BP(f(x)%3D1%20%5Cmid%20x)%7D%7BP(f(x)%3D-1%20%5Cmid%20x)%7D#card=math&code=H%28x%29%20%3D%20%7B1%20%5Cover%202%7D%20ln%5Cfrac%7BP%28f%28x%29%3D1%20%5Cmid%20x%29%7D%7BP%28f%28x%29%3D-1%20%5Cmid%20x%29%7D&height=47&width=221),此时sign(H(x))分类是一定正确的,也就是错误率为0。

AdaBoost算法中的第4步,为什么要更新的权重为

%3D%5Cfrac%7B1%7D%7B2%7D%20ln(%5Cfrac%7B1-e_t%7D%7Be_t%7D)#card=math&code=%5Calpha%28t%29%3D%5Cfrac%7B1%7D%7B2%7D%20ln%28%5Cfrac%7B1-e_t%7D%7Be_t%7D%29&height=40&width=144)?

在AdaBoost算法中,第一个基分类器是通过直接将基学习算法用于初始数据分布而得,此后迭代地生成

和

,当基分类器

基于分布

产生后,该基分类器的权重

应当使

最小化指数损失函数:

%26%3DE%7Bx%20%5Csim%20D_t%7D%5Be%7B-f(x)%20%5Calpha_t%20h_t(x)%7D%5D%20%5C%5C%0A%26%3DE%7Bx%20%5Csim%20Dt%7D%5Be%5E%7B-%5Calpha_t%7DI(f(x)%3Dh_t(x))%20%2B%20e%5E%7B%5Calpha_t%7DI(f(x)%20%5Cneq%20h_t(x))%5D%20%5C%5C%0A%26%3De%5E%7B-%5Calpha_t%7DP%7Bx%20%5Csim%20Dt%7D(f(x)%3Dh_t(x))%20%2B%20e%5E%7B%5Calpha_t%7DP%7Bx%20%5Csim%20Dt%7D(f(x)%20%5Cneq%20h_t(x))%20%5C%5C%0A%26%3D%20e%5E%7B-%5Calpha_t%7D(1-%5Cepsilon_t)%2Be%5E%7B-%5Calpha_t%7D%5Cepsilon_t%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0Al%7Bexp%7D%28%5Calphat%20h_t%20%5Cmid%20D_t%29%26%3DE%7Bx%20%5Csim%20Dt%7D%5Be%7B-f%28x%29%20%5Calpha_t%20h_t%28x%29%7D%5D%20%5C%5C%0A%26%3DE%7Bx%20%5Csim%20Dt%7D%5Be%5E%7B-%5Calpha_t%7DI%28f%28x%29%3Dh_t%28x%29%29%20%2B%20e%5E%7B%5Calpha_t%7DI%28f%28x%29%20%5Cneq%20h_t%28x%29%29%5D%20%5C%5C%0A%26%3De%5E%7B-%5Calpha_t%7DP%7Bx%20%5Csim%20Dt%7D%28f%28x%29%3Dh_t%28x%29%29%20%2B%20e%5E%7B%5Calpha_t%7DP%7Bx%20%5Csim%20D_t%7D%28f%28x%29%20%5Cneq%20h_t%28x%29%29%20%5C%5C%0A%26%3D%20e%5E%7B-%5Calpha_t%7D%281-%5Cepsilon_t%29%2Be%5E%7B-%5Calpha_t%7D%5Cepsilon_t%0A%5Cend%7Baligned%7D%0A&height=93&width=503)

其中%20%5Cneq%20ht(x))#card=math&code=%5Cepsilon_t%3DP%7Bx%20%5Csim%20D_t%7D%28f%28x%29%20%5Cneq%20h_t%28x%29%29&height=20&width=184)。考虑损失函数的导数:

%7D%7D%7B%5Cpartial%7B%5Calphat%7D%7D%3D-e%5E%7B-%5Calpha_t%7D(1-%5Cepsilon_t)%2Be%5E%7B%5Calpha_t%7D%5Cepsilon_t%0A#card=math&code=%5Cfrac%7B%5Cpartial%7Bl%7Bexp%7D%28%5Calpha_t%20h_t%20%5Cmid%20D_t%29%7D%7D%7B%5Cpartial%7B%5Calpha_t%7D%7D%3D-e%5E%7B-%5Calpha_t%7D%281-%5Cepsilon_t%29%2Be%5E%7B%5Calpha_t%7D%5Cepsilon_t%0A&height=45&width=296)

令上式为0,得:

%0A#card=math&code=%5Calpha_t%20%3D%20%7B1%20%5Cover%202%7Dln%5Cbigg%28%20%5Cfrac%7B1-%5Cepsilon_t%7D%7B%5Cepsilon_t%7D%20%5Cbigg%29%0A&height=45&width=141)

由此,得到AdaBoost算法的第4步的权重,也就是说,按照这个权重来更新当前的基分类器,是可以使得损失函数最小化的。

AdaBoost算法中的第5步,为什么会按照那个公式来更新数据的分布呢?

AdaBoost算法在获得之后,样本分布将进行调整,使下一轮的基学习器

能纠正

的一些错误。理想的

应该能纠正

的所有错误,即最小化:

%26%3DE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f(x)(H%7Bt-1%7D(x)%2Bht(x))%7D%5D%20%5C%5C%0A%26%3DE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%20%5Ccdot%20e%5E%7B-f(x)h_t(x)%7D%5D%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0Al%7Bexp%7D%28H%7Bt-1%7D%20%2B%20h_t%20%5Cmid%20D_t%29%26%3DE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f%28x%29%28H%7Bt-1%7D%28x%29%2Bh_t%28x%29%29%7D%5D%20%5C%5C%0A%26%3DE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f%28x%29H_%7Bt-1%7D%28x%29%7D%20%5Ccdot%20e%5E%7B-f%28x%29h_t%28x%29%7D%5D%0A%5Cend%7Baligned%7D%0A&height=49&width=386)

注意到%3Dh_t%5E2(x)%3D1#card=math&code=f%5E2%28x%29%3Dh_t%5E2%28x%29%3D1&height=24&width=133),上式可以用

h_t(x)%7D#card=math&code=e%5E%7B-f%28x%29h_t%28x%29%7D&height=20&width=69)的泰勒展开式近似为:

%26%5Cbacksimeq%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%20%5Cbigg(%201-f(x)ht(x)%2B%5Cfrac%7Bf%5E2(x)h_t%5E2(x)%7D%7B2%7D%20%5Cbigg)%20%5Cbigg%5D%20%5C%5C%0A%26%3DE%7Bx%20%5Csim%20D%7D%20%5Cbigg%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%20%5Cbigg(%201-f(x)h_t(x)%2B%5Cfrac%7B1%7D%7B2%7D%20%5Cbigg)%20%5Cbigg%5D%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0Al%7Bexp%7D%28H%7Bt-1%7D%20%2B%20h_t%20%5Cmid%20D_t%29%26%5Cbacksimeq%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5Be%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%20%5Cbigg%28%201-f%28x%29h_t%28x%29%2B%5Cfrac%7Bf%5E2%28x%29h_t%5E2%28x%29%7D%7B2%7D%20%5Cbigg%29%20%5Cbigg%5D%20%5C%5C%0A%26%3DE%7Bx%20%5Csim%20D%7D%20%5Cbigg%5Be%5E%7B-f%28x%29H_%7Bt-1%7D%28x%29%7D%20%5Cbigg%28%201-f%28x%29h_t%28x%29%2B%5Cfrac%7B1%7D%7B2%7D%20%5Cbigg%29%20%5Cbigg%5D%0A%5Cend%7Baligned%7D%0A&height=93&width=544)

于是,理想的基学习器:

%26%3D%5Carg%5Cminh%20l%7Bexp%7D(H%7Bt-1%7D%2Bh%20%5Cmid%20D)%20%5C%5C%0A%26%3D%5Carg%5Cmin_h%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%20%5Cbigg(%201-f(x)h_t(x)%2B%5Cfrac%7B1%7D%7B2%7D%20%5Cbigg)%20%5Cbigg%5D%20%5C%5C%0A%26%3D%5Carg%5Cmin_h%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%20f(x)h_t(x)%20%20%5Cbigg%5D%20%5C%5C%0A%26%3D%5Carg%5Cmin_h%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5B%5Cfrac%7Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%7D%7BE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%5D%7D%20f(x)h_t(x)%20%20%5Cbigg%5D%20%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0Ah_t%28x%29%26%3D%5Carg%5Cmin_h%20l%7Bexp%7D%28H%7Bt-1%7D%2Bh%20%5Cmid%20D%29%20%5C%5C%0A%26%3D%5Carg%5Cmin_h%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5Be%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%20%5Cbigg%28%201-f%28x%29h_t%28x%29%2B%5Cfrac%7B1%7D%7B2%7D%20%5Cbigg%29%20%5Cbigg%5D%20%5C%5C%0A%26%3D%5Carg%5Cmin_h%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5Be%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%20f%28x%29h_t%28x%29%20%20%5Cbigg%5D%20%5C%5C%0A%26%3D%5Carg%5Cmin_h%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5B%5Cfrac%7Be%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%7D%7BE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f%28x%29H_%7Bt-1%7D%28x%29%7D%5D%7D%20f%28x%29h_t%28x%29%20%20%5Cbigg%5D%20%0A%5Cend%7Baligned%7D%0A&height=170&width=424)

注意到H%7Bt-1%7D(x)%7D%5D#card=math&code=E%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f%28x%29H_%7Bt-1%7D%28x%29%7D%5D&height=24&width=133)是一个常数。令

表示一个分布:

%3D%5Cfrac%7BD(x)e%5E%7B-f(x)H%7Bt-1%7D(x)%7D%7D%7BE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%5D%7D%0A#card=math&code=D_t%28x%29%3D%5Cfrac%7BD%28x%29e%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%7D%7BE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%5D%7D%0A&height=50&width=205)

根据数学期望的定义,这等价于令:

%26%3D%5Carg%5Cmaxh%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5B%20%5Cfrac%7Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%7D%7BE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%5D%7D%20f(x)h(x)%20%5Cbigg%5D%20%5C%5C%0A%26%3D%5Carg%5Cmax_h%20E%7Bx%20%5Csim%20D%7D%20%5Bf(x)h(x)%5D%0A%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0Aht%28x%29%26%3D%5Carg%5Cmax_h%20E%7Bx%20%5Csim%20D%7D%20%5Cbigg%5B%20%5Cfrac%7Be%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%7D%7BE%7Bx%20%5Csim%20D%7D%5Be%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%5D%7D%20f%28x%29h%28x%29%20%5Cbigg%5D%20%5C%5C%0A%26%3D%5Carg%5Cmax_h%20E%7Bx%20%5Csim%20D%7D%20%5Bf%28x%29h%28x%29%5D%0A%0A%5Cend%7Baligned%7D%0A&height=81&width=388)

由%2Ch(x)%20%5Cin%20%7B-1%2C%20%2B1%7D#card=math&code=f%28x%29%2Ch%28x%29%20%5Cin%20%7B-1%2C%20%2B1%7D&height=20&width=143),有:

h(x)%3D1-2I(f(x)%20%5Cneq%20h(x))%0A#card=math&code=f%28x%29h%28x%29%3D1-2I%28f%28x%29%20%5Cneq%20h%28x%29%29%0A&height=20&width=232)

则理想的基学习器:

%3D%5Carg%5Cminh%20E%7Bx%20%5Csim%20D%7D%20%5BI(f(x)%20%5Cneq%20h(x))%5D%0A#card=math&code=h%28x%29%3D%5Carg%5Cminh%20E%7Bx%20%5Csim%20D%7D%20%5BI%28f%28x%29%20%5Cneq%20h%28x%29%29%5D%0A&height=28&width=269)

由此可见,理想的将在分布

下最下化分类误差。因此,弱分类器将基于分布

来训练,且针对

的分类误差应小于0.5。这在一定程度上类似”残差逼近“的思想。不太明白这哪里类似残差逼近的思想了?

考虑到和

的关系,有:

%26%3D%5Cfrac%7B%20D(x)e%5E%7B-f(x)Ht(x)%7D%7D%20%7BE%7Bx%20%5Csim%20D%7D%20%5Be%5E%7B-f(x)Ht(x)%7D%5D%7D%20%5C%5C%0A%5C%5C%0A%26%3D%5Cfrac%7B%20D(x)e%5E%7B-f(x)H%7Bt-1%7D(x)%7D%20e%5E%7B-f(x)%20%5Calphat%20h_t(x)%7D%7D%20%7BE%7Bx%20%5Csim%20D%7D%20%5Be%5E%7B-f(x)Ht(x)%7D%5D%7D%20%5C%5C%0A%0A%26%3D%20D_t(x)%20%5Ccdot%20e%5E%7B-f(x)%20%5Calpha_t%20h_t(x)%7D%20%5Cfrac%7B%7BE%7Bx%20%5Csim%20D%7D%20%5Be%5E%7B-f(x)H%7Bt-1%7D(x)%7D%5D%7D%7D%20%7B%7BE%7Bx%20%5Csim%20D%7D%20%5Be%5E%7B-f(x)Ht(x)%7D%5D%7D%7D%0A%5Cend%7Baligned%7D%0A#card=math&code=%5Cbegin%7Baligned%7D%0AD%7Bt%2B1%7D%28x%29%26%3D%5Cfrac%7B%20D%28x%29e%5E%7B-f%28x%29Ht%28x%29%7D%7D%20%7BE%7Bx%20%5Csim%20D%7D%20%5Be%5E%7B-f%28x%29Ht%28x%29%7D%5D%7D%20%5C%5C%0A%5C%5C%0A%26%3D%5Cfrac%7B%20D%28x%29e%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%20e%5E%7B-f%28x%29%20%5Calphat%20h_t%28x%29%7D%7D%20%7BE%7Bx%20%5Csim%20D%7D%20%5Be%5E%7B-f%28x%29Ht%28x%29%7D%5D%7D%20%5C%5C%0A%0A%26%3D%20D_t%28x%29%20%5Ccdot%20e%5E%7B-f%28x%29%20%5Calpha_t%20h_t%28x%29%7D%20%5Cfrac%7B%7BE%7Bx%20%5Csim%20D%7D%20%5Be%5E%7B-f%28x%29H%7Bt-1%7D%28x%29%7D%5D%7D%7D%20%7B%7BE%7Bx%20%5Csim%20D%7D%20%5Be%5E%7B-f%28x%29H_t%28x%29%7D%5D%7D%7D%0A%5Cend%7Baligned%7D%0A&height=175&width=362)

这恰是AdaBoost算法中第5步的公式。

样本处理

Boosting算法对样本的处理有两种常用方法:

- 重赋值法

这个就是上面AdaBoost采用的方法,每轮迭代对样本赋以不同的权重。 - 重采样法

对于无法接受带权样本的算法,可以采用重采样的方法,也就是每轮迭代中对训练样本进行重新采样,用重采样得到的样本作为训练集来训练基学习器。

一般而言,两种做法没有显着的优劣差别而言。

GBDT

Gradient Boosting Decision Tree

上面讲的AdaBoost的推导源于统计视角,此派认为AdaBoost实质上是基于加性模型,以类似牛顿迭代法来优化指数损失函数,受此启发,将优化过程替换为其他优化方法,产生了GBDT。

XgBOOST

Bagging - Boostrap AGGregating

Bagging是并行式学习最着名的代表。

为了得到泛化性能较强的集成,个体学习器应该尽可能的独立,现实中,独立做不到,通常考虑自助采样法(bootstrap sampling),对m个数据集进行有放回的采样m个,这样,有63.2%的样本会被选中。通常不会直接将数据集划分为T个不同的数据集,这样的话,每个基学习器都只用到了一小部分样本集,有可能欠拟合。并且自助采样过程剩下的36.8%的数据可以用作验证集,用来给决策树剪枝或者神经网络的泛化性评估等。

所以通常来说Bagging的方法是对T个已经抽样好的训练集同时训练,然后通过集成方法,将他们组合在一起,对回归问题,会取平均值;对分类问题,会取多数

Bagging主要关注降低方差,而boosting主要关注降低偏差。

相比于标准的AdaBoost只能用于二分类,Bagging可以用于多分类,二分类以及回归任务。

RF

Radom Forest,Bagging的一个扩展变体

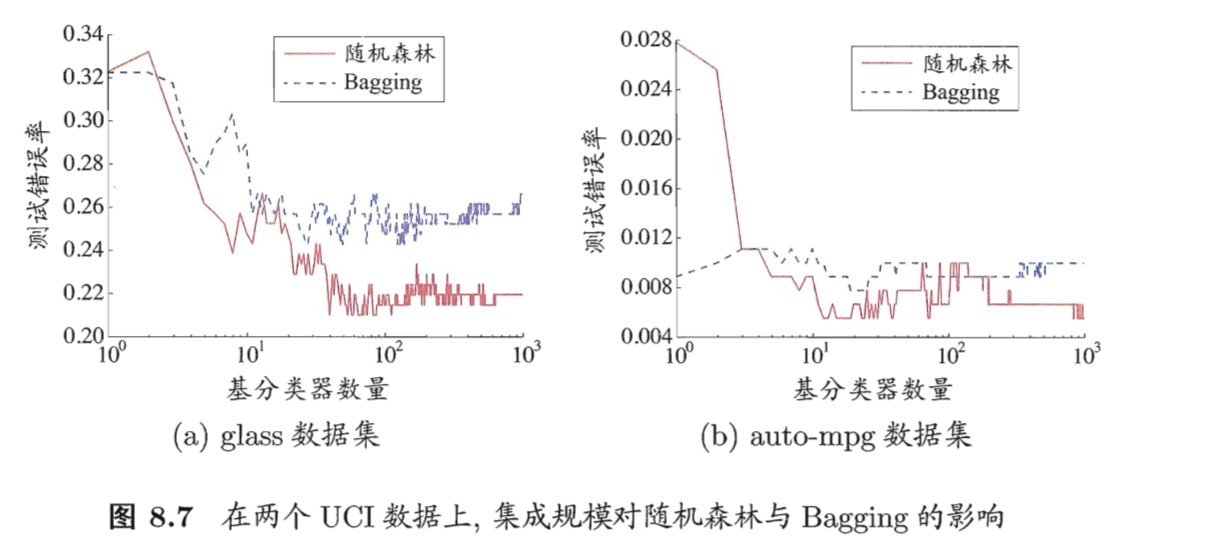

RF在决策树为基学习器构建Bagging集成的基础上,进一步在决策树的训练过程中,引入随机属性选择。也即是说,在基学习器训练时,传统决策树是在当前结点的属性集合(假设为d个)中选择一个最优属性,而RF是先从d个属性中,选择k个属性,然后在这k个属性中,计算最优属性,进行拆分。通常。

实践中的RF在决策树的数量较少时性能往往较差,因为属性的随机选择,所以效果肯定会差一些,但是如果决策树的数量较多时,RF基本是最优的集成算法之一,效果非常强,这主要也是因为随机属性,导致了不同决策树之间的差异较大。并且因为没有采用所有的属性,所以,通常效率也高于常规的Bagging。

结合策略

平均法

简单平均

%3D%5Cfrac%7B1%7D%7BT%7D%20%5Csum%7Bi%3D1%7D%5ETH_i%20(x)%0A#card=math&code=H%28x%29%3D%5Cfrac%7B1%7D%7BT%7D%20%5Csum%7Bi%3D1%7D%5ETH_i%20%28x%29%0A&height=53&width=150)

加权平均

%3D%5Csum%7Bi%3D1%7D%5ET%20%5Comega_i%20H_i(x)%0A#card=math&code=H%28x%29%3D%5Csum%7Bi%3D1%7D%5ET%20%5Comega_i%20H_i%28x%29%0A&height=53&width=145)

其中wi是个体学习器的权重,通常要求wi >= 0,。

投票法

绝对多数投票法

某类投票过半,选择该类作为预测类,不过半,则作废。

相对多数投票法

选择类最多的,作为预测类。

加权投票法

每个个体学习器的权重不同。