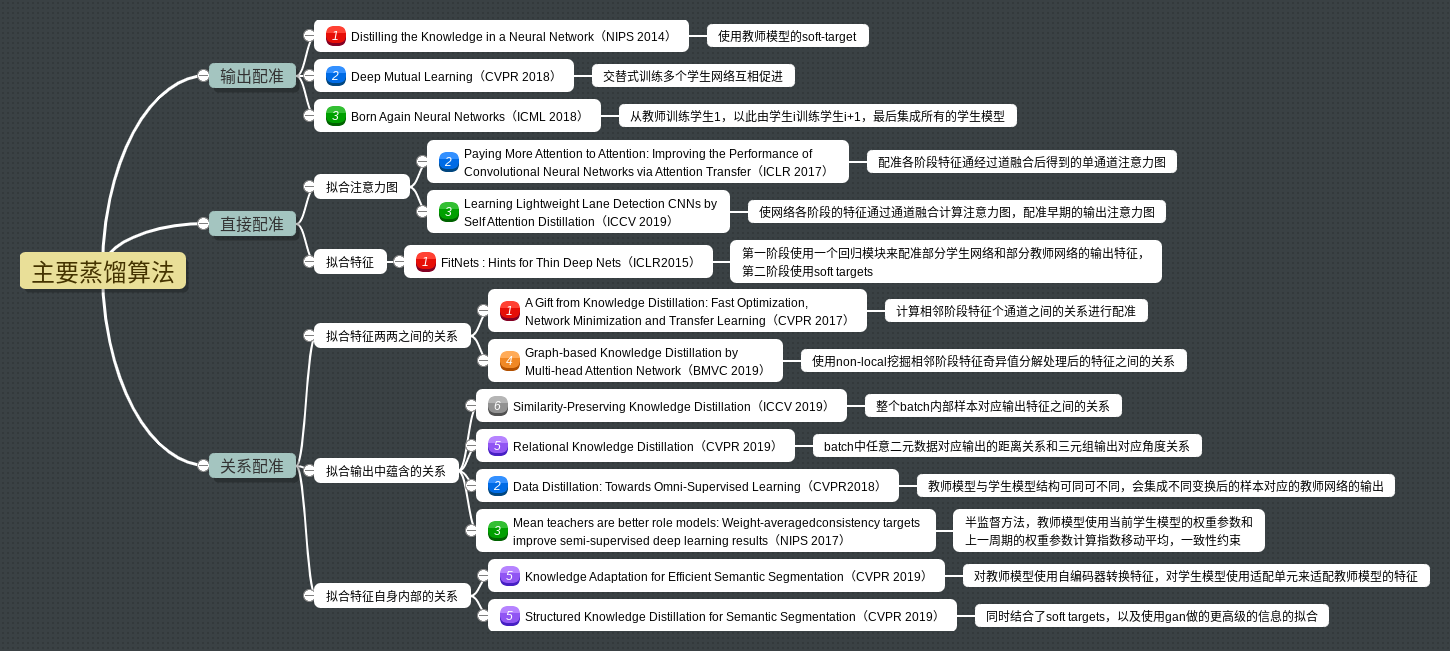

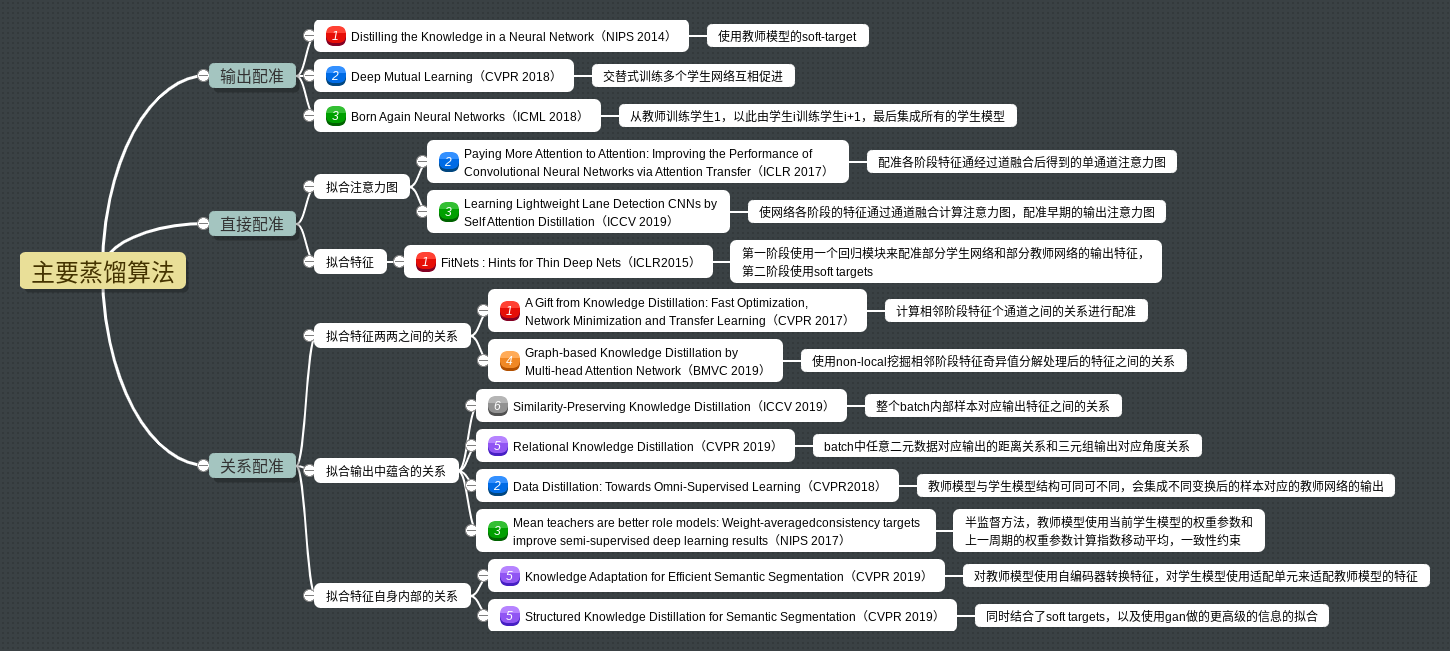

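I recently read a number of articles on distillation, and this is a brief summary of them:

Original mind-map document: http://naotu.baidu.com/file/f60fea22a9ed0ea7236ca9a70ff1b667?token=dab31b70fffa034a(kdxj)

Below are the slides I made, converted to PDF here.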
