## (0) Loading the model


Most of spaCy's core functionality is accessed through methods on the `Doc`, `Span`, and `Token` objects.
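Here is a minimal sketch of how the three objects relate. It assumes spaCy v3 and uses `spacy.blank("en")`, a tokenizer-only English pipeline that does not need a downloaded model. The variable names are arbitrary. Indexing a `Doc` gives a `Token`, and slicing it gives a `Span`, which is a view over the same `Doc`.

```python
import spacy
from spacy.tokens import Doc, Span, Token

# Assumption: spaCy v3; spacy.blank("en") builds a tokenizer-only pipeline (no model download)
blank_nlp = spacy.blank("en")
example_doc = blank_nlp("This is a sentence from England.")

tok = example_doc[3]     # indexing a Doc gives a Token
span = example_doc[3:6]  # slicing a Doc gives a Span (a view, not a copy)

print(type(example_doc).__name__, type(tok).__name__, type(span).__name__)
print(tok.text, tok.i)                  # token text and its position in the Doc
print(span.text, span.start, span.end)  # span text and its token boundaries
print([t.text for t in span])           # a Span is itself iterable over Tokens
```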

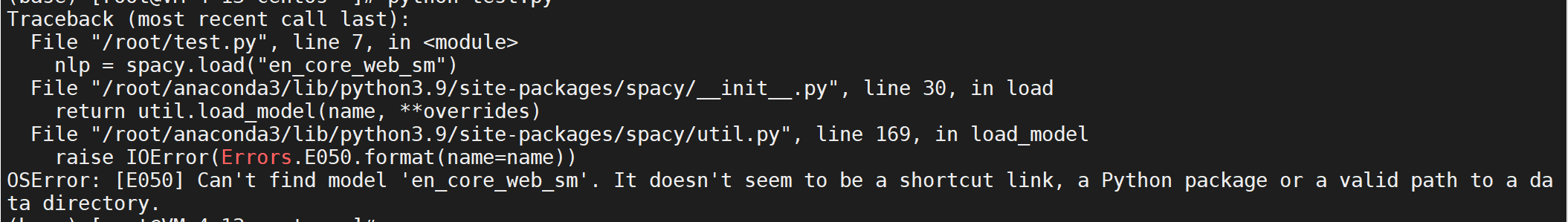

Before you call `spacy.load("en_core_web_sm")`, download the model with `python -m spacy download en_core_web_sm`. Otherwise loading fails with an error.
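If you are not sure whether the package is installed, you can check before loading. The sketch below assumes spaCy v3. `load_if_installed` is a hypothetical helper. `spacy.util.is_package` is spaCy's own check for whether a Python package with that name is installed.

```python
import spacy
from spacy.util import is_package


def load_if_installed(name):
    """Hypothetical helper: load a pipeline package, or print a hint and return None."""
    if not is_package(name):
        print(f"'{name}' is not installed; run: python -m spacy download {name}")
        return None
    return spacy.load(name)


loaded = load_if_installed("en_core_web_sm")  # None if the model was never downloaded
```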


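All of the later sections rely on the code below:

```python
import spacy
# collections.Iterable was removed in Python 3.10; import it from collections.abc instead
from collections.abc import Iterable
import en_core_web_sm

# jy: Option 1:
# jy: English model: older versions use "en", newer versions use "en_core_web_sm".
# This calls the load function in /spacy/__init__.py: "Load a spaCy model from an installed
# package or a local path." (Download the model first: python -m spacy download en_core_web_sm)
# The argument is a model name or the path to a model. The resulting nlp is an instance of the
# English class in /spacy/lang/en/__init__.py, which inherits from the Language class in
# /spacy/language.py (its __init__ method is also defined there).
# nlp = spacy.load('en')  # old shortcut name; spaCy v3 no longer accepts it
nlp = spacy.load("en_core_web_sm")

# jy: Option 2:
# This calls the load function in /en_core_web_sm/__init__.py, which in turn calls
# load_model_from_init_py in /spacy/util.py. It passes /path/to/en_core_web_sm/__init__.py
# plus any extra arguments, e.g. the disable list: "Names of pipeline components to
# disable. Disabled pipes will be loaded but they won't be run unless you
# explicitly enable them by calling nlp.enable_pipe."
nlp = en_core_web_sm.load()
nlp = en_core_web_sm.load(disable=["parser", "ner", "textcat"])
nlp.enable_pipe("ner")
nlp.enable_pipe("parser")
```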
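You can see how `disable` and `enable_pipe` behave without downloading a model. The sketch below assumes spaCy v3. It uses a blank English pipeline with the rule-based `sentencizer` as a stand-in for the trained `parser`/`ner` components; this substitution is only for illustration. `pipe_names` lists only the enabled components, while `component_names` lists all of them. `select_pipes` disables components only inside a `with` block.

```python
import spacy

# Assumption: spaCy v3; "sentencizer" stands in for trained components such as parser/ner
toy_nlp = spacy.blank("en")
toy_nlp.add_pipe("sentencizer")

toy_nlp.disable_pipe("sentencizer")  # still loaded, but not run
print(toy_nlp.pipe_names)            # enabled components only -> []
print(toy_nlp.component_names)       # all components -> ['sentencizer']
print(toy_nlp.disabled)              # -> ['sentencizer']

toy_nlp.enable_pipe("sentencizer")   # same call as nlp.enable_pipe("ner") above
print(toy_nlp.pipe_names)            # -> ['sentencizer']

# Temporarily disable components; they are restored when the block exits
with toy_nlp.select_pipes(disable=["sentencizer"]):
    print(toy_nlp.pipe_names)        # -> []
print(toy_nlp.pipe_names)            # -> ['sentencizer']
```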

```python
# jy: Passing a sentence to nlp (an English instance whose parent class, Language in
# /spacy/language.py, defines __call__) runs Language.__call__. This returns an initialized
# Doc (defined in /spacy/tokens/doc.cpython-36m-x86_64-linux-gnu.so).
doc = nlp("This is a sentence from England.")

# jy: True; the resulting doc is iterable.
print(isinstance(doc, Iterable))
```
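Roughly speaking, `Language.__call__` tokenizes the text into a `Doc` and then passes that `Doc` through each enabled component in order. The sketch below writes that loop out as a hypothetical helper, `run_pipeline`. It is a simplification: the real method also handles error handlers and per-component settings. It assumes spaCy v3 and a blank pipeline with a `sentencizer`.

```python
import spacy


def run_pipeline(pipeline_nlp, text):
    """Hypothetical, simplified version of what pipeline_nlp(text) does."""
    doc = pipeline_nlp.make_doc(text)           # tokenizer only -> Doc
    for name, component in pipeline_nlp.pipeline:  # enabled (name, component) pairs, in order
        doc = component(doc)                    # each component takes and returns a Doc
    return doc


toy_nlp = spacy.blank("en")
toy_nlp.add_pipe("sentencizer")

text = "This is a sentence from England. Here is another one."
manual_doc = run_pipeline(toy_nlp, text)
builtin_doc = toy_nlp(text)
print([s.text for s in manual_doc.sents])
print([s.text for s in builtin_doc.sents])
```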

## (1) Tokenize

```python
print("1) tokenize ==============================================")
for token in doc:
    # jy: Each element of doc is an initialized Token (defined in
    # /spacy/tokens/token.cpython-36m-x86_64-linux-gnu.so). It has many attributes and prints as its text.
    #print(type(token))
    # jy: token.orth_ gives the token's text as a string; token.is_punct is True for punctuation.
    #print(type(token.orth_), token.is_punct)
    print(token, token.is_punct)
"""
This False
is False
a False
sentence False
from False
England False
. True
"""
```
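spaCy's tokenization is non-destructive. Each token records its trailing whitespace, so you can rebuild the original text exactly. The sketch below assumes spaCy v3 and a tokenizer-only blank English pipeline, so only tokenizer-level attributes such as `is_punct` are available.

```python
import spacy

# Assumption: spaCy v3; tokenizer-only pipeline, no model download needed
blank_nlp = spacy.blank("en")
tok_doc = blank_nlp("This is a sentence from England.")

for token in tok_doc:
    # token.i: index in the Doc; token.idx: character offset in the input text;
    # token.whitespace_: the trailing whitespace ("" or " ")
    print(token.i, token.idx, repr(token.text), repr(token.whitespace_), token.is_punct)

# Text plus trailing whitespace of every token rebuilds the input exactly
rebuilt = "".join(token.text_with_ws for token in tok_doc)
print(rebuilt == tok_doc.text)  # True
```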

## (2) Lemmatize

```python
print("2) Lemmatize =====================================")
for token in doc:
    # jy: A token's lemma_ attribute is the word's base form (its lemma, e.g. running -> run);
    # token.lemma is the integer hash of that string.
    print(token, token.lemma_, token.lemma)
"""
This this 1995909169258310477
is be 10382539506755952630
a a 11901859001352538922
sentence sentence 18108853898452662235
from from 7831658034963690409
England England 10941252157987694380
. . 12646065887601541794
"""
```
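The large integers in the output are hash IDs. spaCy stores each string once in `nlp.vocab.strings` (a `StringStore`). Integer attributes such as `lemma` hold the hash, and the underscore variants such as `lemma_` return the string. The sketch below assumes spaCy v3. A blank pipeline has no lemmatizer, so the lemma is set by hand purely to show the mapping.

```python
import spacy

# Assumption: spaCy v3; blank pipeline, so the lemma is assigned manually for illustration
blank_nlp = spacy.blank("en")
lemma_doc = blank_nlp("running")
token = lemma_doc[0]

token.lemma_ = "run"            # setting the string also stores it in the StringStore

lemma_hash = token.lemma        # integer ID, like the numbers printed above
print(token.text, token.lemma_, lemma_hash)
print(blank_nlp.vocab.strings[lemma_hash])            # hash -> string: run
print(blank_nlp.vocab.strings["run"] == lemma_hash)  # string -> hash: True
```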

## (3) POS Tagging

```python
print("3) POS tagging =================================")
for token in doc:
    # jy: A token's pos_ (coarse-grained) or tag_ (fine-grained) attribute gives its part of speech.
    print(token, token.pos_, token.pos, token.tag_, token.tag)
"""
This PRON 95 DT 15267657372422890137
is AUX 87 VBZ 13927759927860985106
a DET 90 DT 15267657372422890137
sentence NOUN 92 NN 15308085513773655218
from ADP 85 IN 1292078113972184607
England PROPN 96 NNP 15794550382381185553
. PUNCT 97 . 12646065887601541794
"""
```
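To decode the labels above, `spacy.explain` returns a short description of a tag or label. The integer `pos` values are symbol IDs defined in `spacy.symbols`. The sketch below assumes spaCy v3. The blank pipeline at the end only shows that setting `pos_` also sets `pos`.

```python
import spacy
from spacy.symbols import ADP, AUX, DET, NOUN, PRON, PROPN, PUNCT

# Coarse-grained (pos_) and fine-grained (tag_) labels that appear in the output above
for label in ["PRON", "AUX", "DET", "NOUN", "ADP", "PROPN", "PUNCT",
              "DT", "VBZ", "NN", "IN", "NNP"]:
    print(label, "->", spacy.explain(label))

# token.pos stores a symbol ID from spacy.symbols
pos_ids = {"PRON": PRON, "AUX": AUX, "DET": DET, "NOUN": NOUN,
           "ADP": ADP, "PROPN": PROPN, "PUNCT": PUNCT}
print(pos_ids)

# Setting the string attribute updates the integer attribute as well
blank_nlp = spacy.blank("en")
pos_doc = blank_nlp("England")
pos_doc[0].pos_ = "PROPN"
print(pos_doc[0].pos_, pos_doc[0].pos)
```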

## (4) NER

```python
print("4) Named entity recognition (NER) =====================================")
# jy: <class 'tuple'>
#print(type(doc.ents))
for entity in doc.ents:
    # jy: True; each entity is a Span (defined in /spacy/tokens/span.cpython-36m-x86_64-linux-gnu.so).
    # A Span is iterable and prints as its text.
    #print(isinstance(entity, Iterable))
    # jy: The label_ attribute holds the entity's label.
    print(entity, entity.label_, entity.label)
"""
England GPE 384
"""
```
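Entities are ordinary `Span` objects that carry a label. The sketch below assumes spaCy v3. It sets the same `GPE` entity by hand on a blank pipeline's `Doc`; this is a manual annotation for illustration, not a model prediction. It then reads the entity's token and character offsets.

```python
import spacy
from spacy.tokens import Span

# Assumption: spaCy v3; the entity below is annotated manually, not predicted
blank_nlp = spacy.blank("en")
ent_doc = blank_nlp("This is a sentence from England.")

# Tokens 5..6 ("England") labelled GPE, matching the NER output above
ent_doc.ents = [Span(ent_doc, 5, 6, label="GPE")]

print(type(ent_doc.ents))  # <class 'tuple'>
for ent in ent_doc.ents:
    # start/end are token offsets; start_char/end_char are character offsets
    print(ent.text, ent.label_, ent.start, ent.end, ent.start_char, ent.end_char)
print(spacy.explain("GPE"))
```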

## (5) Noun chunk extraction

```python
print("5) Noun chunk extraction ==========================================")
# jy: <class 'generator'>
#print(type(doc.noun_chunks))
for nounc in doc.noun_chunks:
    # jy: Each nounc is also a Span (defined in /spacy/tokens/span.cpython-36m-x86_64-linux-gnu.so).
    # A Span is iterable and prints as its text.
    print(nounc)
"""
This
a sentence
England
"""
```
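Noun chunks are derived from the dependency parse and part-of-speech tags, which is why they need the `parser` component enabled. The sketch below assumes spaCy v3. It builds a `Doc` by hand with an assumed parse of the example sentence: heads are absolute token indices, and the `ROOT` token points to itself. spaCy's English noun-chunk rules then extract the chunks from that annotation.

```python
import spacy
from spacy.tokens import Doc

# Assumption: spaCy v3; the parse below is written by hand for illustration
blank_nlp = spacy.blank("en")
words = ["This", "is", "a", "sentence", "from", "England", "."]
spaces = [True, True, True, True, True, False, False]
heads = [1, 1, 3, 1, 3, 4, 1]  # absolute index of each token's head
deps = ["nsubj", "ROOT", "det", "attr", "prep", "pobj", "punct"]
pos = ["PRON", "AUX", "DET", "NOUN", "ADP", "PROPN", "PUNCT"]

parsed_doc = Doc(blank_nlp.vocab, words=words, spaces=spaces,
                 heads=heads, deps=deps, pos=pos)

# The English noun-chunk iterator uses the dep labels and POS tags set above
chunks = [chunk.text for chunk in parsed_doc.noun_chunks]
print(chunks)
```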

## (6) Sentence segmentation

```python
print("6) Sentence segmentation ============================================")
wiki_obama = """Barack Obama is an American politician who served as the 44th President of the United States from 2009 to 2017. He is the first African American to have served as president, as well as the first born outside the contiguous United States."""
doc_obama = nlp(wiki_obama)
# jy: <generator object at 0x7f79d7e74c28>
print(doc_obama.sents)
for ix, sent in enumerate(doc_obama.sents, 1):
    print("Sentence number {}: {}".format(ix, sent))
"""
Sentence number 1: Barack Obama is an American politician who served as the 44th President of the United States from 2009 to 2017.
Sentence number 2: He is the first African American to have served as president, as well as the first born outside the contiguous United States.
"""
```
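When no trained model is available, the rule-based `sentencizer` is an alternative. It splits on punctuation such as `.`, `!` and `?`, whereas `en_core_web_sm` by default takes sentence boundaries from its dependency parser. The sketch below assumes spaCy v3.

```python
import spacy

# Assumption: spaCy v3; the rule-based sentencizer needs no trained model
nlp_rules = spacy.blank("en")
nlp_rules.add_pipe("sentencizer")

wiki_obama = ("Barack Obama is an American politician who served as the 44th President "
              "of the United States from 2009 to 2017. He is the first African American "
              "to have served as president, as well as the first born outside the "
              "contiguous United States.")
doc_rules = nlp_rules(wiki_obama)

sents = [sent.text for sent in doc_rules.sents]
for ix, sent in enumerate(sents, 1):
    print("Sentence number {}: {}".format(ix, sent))
```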