- 1. Download the code and install dependencies

- 2. Evaluation

- 3. Train

- 4. Notes on the training process

# 1. Download the code and install dependencies

```shell
git clone https://github.com/princeton-nlp/SimCSE.git
cd SimCSE/
# torch 1.7.1 must be installed first
pip install -r requirements.txt
```

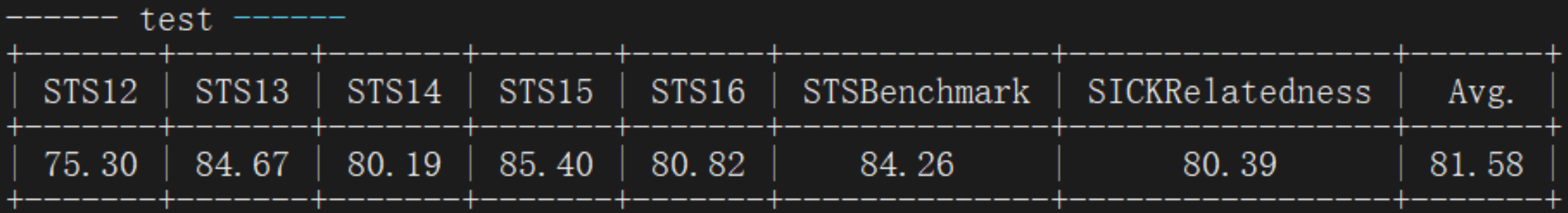

# 2. Evaluation

## (1) Run the command

```shell
cd /path/to/SimCSE

# jy: download the datasets
cd SentEval/data/downstream/
bash download_dataset.sh
cd ../../../

# This evaluation code can evaluate any transformers-based pre-trained model.
python evaluation.py \
    --model_name_or_path princeton-nlp/sup-simcse-bert-base-uncased \
    --pooler cls \
    --task_set sts \
    --mode test
```

The test results are as follows:

## (2) Parameter descriptions

`--model_name_or_path`: The name or path of a transformers-based pre-trained checkpoint.

`--pooler`: Pooling method. The following are supported:

- `cls` (default): Use the representation of the `[CLS]` token. A linear+activation layer is applied after the representation (it's in the standard BERT implementation). If you use supervised SimCSE, you should use this option.
- `cls_before_pooler`: Use the representation of the `[CLS]` token without the extra linear+activation. If you use unsupervised SimCSE, you should take this option.
- `avg`: Average embeddings of the last layer. If you use checkpoints of SBERT/SRoBERTa, you should use this option.
  - SBERT/SRoBERTa paper: [2019-11-03] Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks
- `avg_top2`: Average embeddings of the last two layers.
- `avg_first_last`: Average embeddings of the first and last layers. If you use vanilla BERT or RoBERTa, this works the best.
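The pooling options above can be illustrated with a small, dependency-free sketch. It is not the repository's implementation: the names `pool`, `masked_mean`, and `average_layers` are hypothetical, hidden states are plain nested lists, and which layer counts as the "first" one (embedding output or first transformer layer) is left to the caller as an assumption. The `cls` option is omitted because it needs the trained linear+activation layer.

```python
from typing import List

Layer = List[List[float]]  # one layer: [seq_len][hidden_dim]


def masked_mean(layer: Layer, mask: List[int]) -> List[float]:
    """Average the token vectors whose attention-mask entry is 1."""
    kept = [vec for vec, m in zip(layer, mask) if m]
    return [sum(col) / len(kept) for col in zip(*kept)]


def average_layers(a: Layer, b: Layer) -> Layer:
    """Element-wise average of two layers of identical shape."""
    return [[(x + y) / 2.0 for x, y in zip(va, vb)] for va, vb in zip(a, b)]


def pool(layers: List[Layer], mask: List[int], pooler: str) -> List[float]:
    """Turn per-layer token vectors (ordered first to last) into one sentence vector."""
    last = layers[-1]
    if pooler == "cls_before_pooler":
        # The [CLS] token is assumed to sit at position 0.
        return list(last[0])
    if pooler == "avg":
        return masked_mean(last, mask)
    if pooler == "avg_top2":
        return masked_mean(average_layers(layers[-2], last), mask)
    if pooler == "avg_first_last":
        return masked_mean(average_layers(layers[0], last), mask)
    raise ValueError(f"unsupported pooler in this sketch: {pooler}")
```

For example, `pool(layers, mask, "avg")` averages only the unmasked tokens of the last layer, so padding positions do not affect the sentence vector.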

`--mode`: Evaluation mode:

- `test` (default): To faithfully reproduce our results, you should use this option.
- `dev`: Report the development set results. Note that in STS tasks, only `STS-B` and `SICK-R` have development sets, so we only report their numbers. It also takes a fast mode for transfer tasks, so the running time is much shorter than the `test` mode (though numbers are slightly lower).
- `fasttest`: The same as `test`, but with a fast mode: the running time is much shorter, though the reported numbers may be lower (only for transfer tasks).

`--task_set`: What set of tasks to evaluate on (if set, it will override `--tasks`):

- `sts` (default): Evaluate on STS tasks, including `STS 12~16`, `STS-B` and `SICK-R`. This is the most commonly-used set of tasks to evaluate the quality of sentence embeddings.
- `transfer`: Evaluate on transfer tasks.
- `full`: Evaluate on both STS and transfer tasks.
- `na`: Manually set tasks by `--tasks`.
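The override rule between `--task_set` and `--tasks` can be sketched as follows. This is only an illustration: `resolve_tasks` is a hypothetical helper, and the task identifiers in the two lists are assumptions standing in for whatever names the evaluation code actually uses, so check the code for the real list.

```python
from typing import List, Optional

# Assumed task identifiers, used here only as placeholders.
STS_TASKS = ["STS12", "STS13", "STS14", "STS15", "STS16", "STSBenchmark", "SICKRelatedness"]
TRANSFER_TASKS = ["MR", "CR", "MPQA", "SUBJ", "SST2", "TREC", "MRPC"]


def resolve_tasks(task_set: str = "sts", tasks: Optional[List[str]] = None) -> List[str]:
    """Return the tasks to evaluate; any task_set other than 'na' overrides `tasks`."""
    if task_set == "sts":
        return list(STS_TASKS)
    if task_set == "transfer":
        return list(TRANSFER_TASKS)
    if task_set == "full":
        return STS_TASKS + TRANSFER_TASKS
    if task_set == "na":
        # Only in this case is the manually supplied list used.
        return list(tasks or [])
    raise ValueError(f"unknown task_set: {task_set}")
```

With this rule, passing a manual task list together with `task_set="sts"` has no effect; the manual list only matters when `task_set` is `na`.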

`--tasks`: Specify which dataset(s) to evaluate on. Will be overridden if `--task_set` is not `na`. See the code for a full list of tasks.

# 3. Train

## (1) Run the command

```shell
cd /path/to/SimCSE

# Download the training data
cd data
# For supervised SimCSE: SNLI and MNLI datasets
bash download_nli.sh
# For unsupervised SimCSE: 1 million sentences from English Wikipedia
bash download_wiki.sh
cd ../

# jy: training simply means running the train.py script with arguments

# unsupervised: a single-GPU (or CPU) example for the unsupervised version
# Note: without a GPU environment, the --fp16 argument passed to train.py must be commented out
bash run_unsup_example.sh

# supervised SimCSE: a multiple-GPU example for the supervised version
bash run_sup_example.sh
```

<a name="CrxrJ"></a>

## (2) Parameter descriptions (the arguments passed to train.py)

- `--train_file`: Training file path. You can use our provided Wikipedia or NLI data, or you can use your own data with the same format. Supported formats:
  - "txt" files: one line for one sentence
  - "csv" files:
    - 2-column: pair data with no hard negative
    - 3-column: pair data with one corresponding hard negative instance.
- `--model_name_or_path`: Pre-trained checkpoints to start with. Supported:
  - BERT-based models: `bert-base-uncased`, `bert-large-uncased`, etc.
  - RoBERTa-based models: `roberta-base`, `roberta-large`, etc.
- `--temp`: Temperature for the contrastive loss (default: 0.05).
- `--pooler_type`: Pooling method. It's the same as the `--pooler` in the evaluation part.
- `--mlp_only_train`: We have found that for unsupervised SimCSE, it works better to train the model with MLP layer but test the model without it. You should use this argument when training unsupervised SimCSE models.
- `--hard_negative_weight`: If using hard negatives (i.e., there are 3 columns in the training file), this is the logarithm of the weight. For example, if the weight is 1, then this argument should be set as 0 (default value).
- `--do_mlm`: Whether to use the MLM auxiliary objective. If True:
  - `--mlm_weight`: Weight for the MLM objective.
  - `--mlm_probability`: Masking rate for the MLM objective.
- All the other arguments are standard Huggingface's transformers training arguments. Some of the often-used arguments are: `--output_dir`, `--learning_rate`, `--per_device_train_batch_size`.
- In our example scripts, we also set to evaluate the model on the STS-B development set (need to download the dataset following the evaluation section) and save the best checkpoint.

<a name="eQ8pf"></a>

## (3) Summary of training arguments

```shell
usage: train.py --xxx xxx_val [...]

optional arguments:
  -h, --help
        show this help message and exit
  --model_name_or_path MODEL_NAME_OR_PATH
        The model checkpoint for weights initialization. Don't set if you want to train a model from scratch.
  --model_type MODEL_TYPE
        If training from scratch, pass a model type from the list: layoutlm, distilbert, albert, bart, camembert,
        xlm-roberta, longformer, roberta, squeezebert, bert, mobilebert, flaubert, xlm, electra, reformer, funnel,
        mpnet, tapas
  --config_name CONFIG_NAME
        Pretrained config name or path if not the same as model_name
  --tokenizer_name TOKENIZER_NAME
        Pretrained tokenizer name or path if not the same as model_name
  --cache_dir CACHE_DIR
        Where do you want to store the pretrained models downloaded from huggingface.co
  --no_use_fast_tokenizer
        Whether to use one of the fast tokenizer (backed by the tokenizers library) or not.
  --model_revision MODEL_REVISION
        The specific model version to use (can be a branch name, tag name or commit id).
  --use_auth_token
        Will use the token generated when running `transformers-cli login` (necessary to use this script with
        private models).
  --temp TEMP
        Temperature for softmax.
  --pooler_type POOLER_TYPE
        What kind of pooler to use (cls, cls_before_pooler, avg, avg_top2, avg_first_last).
  --hard_negative_weight HARD_NEGATIVE_WEIGHT
        The **logit** of weight for hard negatives (only effective if hard negatives are used).
  --do_mlm
        Whether to use MLM auxiliary objective.
  --mlm_weight MLM_WEIGHT
        Weight for MLM auxiliary objective (only effective if --do_mlm).
  --mlp_only_train
        Use MLP only during training
  --dataset_name DATASET_NAME
        The name of the dataset to use (via the datasets library).
  --dataset_config_name DATASET_CONFIG_NAME
        The configuration name of the dataset to use (via the datasets library).
  --overwrite_cache
        Overwrite the cached training and evaluation sets
  --validation_split_percentage VALIDATION_SPLIT_PERCENTAGE
        The percentage of the train set used as validation set in case there's no validation split
  --preprocessing_num_workers PREPROCESSING_NUM_WORKERS
        The number of processes to use for the preprocessing.
  --train_file TRAIN_FILE
        The training data file (.txt or .csv).
  --max_seq_length MAX_SEQ_LENGTH
        The maximum total input sequence length after tokenization. Sequences longer than this will be truncated.
  --pad_to_max_length
        Whether to pad all samples to `max_seq_length`. If False, will pad the samples dynamically when batching
        to the maximum length in the batch.
  --mlm_probability MLM_PROBABILITY
        Ratio of tokens to mask for MLM (only effective if --do_mlm)
  --output_dir OUTPUT_DIR
        The output directory where the model predictions and checkpoints will be written.
  --overwrite_output_dir
        Overwrite the content of the output directory. Use this to continue training if output_dir points to a
        checkpoint directory.
  --do_train
        Whether to run training.
  --do_eval
        Whether to run eval on the dev set.
  --do_predict
        Whether to run predictions on the test set.
  --evaluation_strategy {EvaluationStrategy.NO,EvaluationStrategy.STEPS,EvaluationStrategy.EPOCH}
        The evaluation strategy to use.
  --prediction_loss_only
        When performing evaluation and predictions, only returns the loss.
  --per_device_train_batch_size PER_DEVICE_TRAIN_BATCH_SIZE
        Batch size per GPU/TPU core/CPU for training.
  --per_device_eval_batch_size PER_DEVICE_EVAL_BATCH_SIZE
        Batch size per GPU/TPU core/CPU for evaluation.
  --per_gpu_train_batch_size PER_GPU_TRAIN_BATCH_SIZE
        Deprecated, the use of `--per_device_train_batch_size` is preferred. Batch size per GPU/TPU core/CPU for
        training.
  --per_gpu_eval_batch_size PER_GPU_EVAL_BATCH_SIZE
        Deprecated, the use of `--per_device_eval_batch_size` is preferred. Batch size per GPU/TPU core/CPU for
        evaluation.
  --gradient_accumulation_steps GRADIENT_ACCUMULATION_STEPS
        Number of updates steps to accumulate before performing a backward/update pass.
  --eval_accumulation_steps EVAL_ACCUMULATION_STEPS
        Number of predictions steps to accumulate before moving the tensors to the CPU.
  --learning_rate LEARNING_RATE
        The initial learning rate for Adam.
  --weight_decay WEIGHT_DECAY
        Weight decay if we apply some.
  --adam_beta1 ADAM_BETA1
        Beta1 for Adam optimizer
  --adam_beta2 ADAM_BETA2
        Beta2 for Adam optimizer
  --adam_epsilon ADAM_EPSILON
        Epsilon for Adam optimizer.
  --max_grad_norm MAX_GRAD_NORM
        Max gradient norm.
  --num_train_epochs NUM_TRAIN_EPOCHS
        Total number of training epochs to perform.
  --max_steps MAX_STEPS
        If > 0: set total number of training steps to perform. Override num_train_epochs.
  --lr_scheduler_type {SchedulerType.LINEAR,SchedulerType.COSINE,SchedulerType.COSINE_WITH_RESTARTS,SchedulerType.POLYNOMIAL,SchedulerType.CONSTANT,SchedulerType.CONSTANT_WITH_WARMUP}
        The scheduler type to use.
  --warmup_steps WARMUP_STEPS
        Linear warmup over warmup_steps.
  --logging_dir LOGGING_DIR
        Tensorboard log dir.
  --logging_first_step
        Log the first global_step
  --logging_steps LOGGING_STEPS
        Log every X updates steps.
  --save_steps SAVE_STEPS
        Save checkpoint every X updates steps.
  --save_total_limit SAVE_TOTAL_LIMIT
        Limit the total amount of checkpoints. Deletes the older checkpoints in the output_dir. Default is
        unlimited checkpoints
  --no_cuda
        Do not use CUDA even when it is available
  --seed SEED
        random seed for initialization
  --fp16
        Whether to use 16-bit (mixed) precision (through NVIDIA Apex) instead of 32-bit
  --fp16_opt_level FP16_OPT_LEVEL
        For fp16: Apex AMP optimization level selected in ['O0', 'O1', 'O2', and 'O3']. See details at
        https://nvidia.github.io/apex/amp.html
  --fp16_backend {auto,amp,apex}
        The backend to be used for mixed precision.
  --local_rank LOCAL_RANK
        For distributed training: local_rank
  --tpu_num_cores TPU_NUM_CORES
        TPU: Number of TPU cores (automatically passed by launcher script)
  --tpu_metrics_debug
        Deprecated, the use of `--debug` is preferred. TPU: Whether to print debug metrics
  --debug
        Whether to print debug metrics on TPU
  --dataloader_drop_last
        Drop the last incomplete batch if it is not divisible by the batch size.
  --eval_steps EVAL_STEPS
        Run an evaluation every X steps.
  --dataloader_num_workers DATALOADER_NUM_WORKERS
        Number of subprocesses to use for data loading (PyTorch only). 0 means that the data will be loaded in
        the main process.
  --past_index PAST_INDEX
        If >=0, uses the corresponding part of the output as the past state for next step.
  --run_name RUN_NAME
        An optional descriptor for the run. Notably used for wandb logging.
  --disable_tqdm DISABLE_TQDM
        Whether or not to disable the tqdm progress bars.
  --no_remove_unused_columns
        Remove columns not required by the model when using an nlp.Dataset.
  --label_names LABEL_NAMES [LABEL_NAMES ...]
        The list of keys in your dictionary of inputs that correspond to the labels.
  --load_best_model_at_end
        Whether or not to load the best model found during training at the end of training.
  --metric_for_best_model METRIC_FOR_BEST_MODEL
        The metric to use to compare two different models.
  --greater_is_better GREATER_IS_BETTER
        Whether the `metric_for_best_model` should be maximized or not.
  --ignore_data_skip
        When resuming training, whether or not to skip the first epochs and batches to get to the same training
        data.
  --sharded_ddp
        Whether or not to use sharded DDP training (in distributed training only).
  --deepspeed DEEPSPEED
        Enable deepspeed and pass the path to deepspeed json config file (e.g. ds_config.json)
  --label_smoothing_factor LABEL_SMOOTHING_FACTOR
        The label smoothing epsilon to apply (zero means no label smoothing).
  --adafactor
        Whether or not to replace Adam by Adafactor.
  --eval_transfer
        Evaluate transfer task dev sets (in validation).
```
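To make the roles of `--temp` and `--hard_negative_weight` more concrete, here is a minimal, dependency-free sketch of an in-batch contrastive loss. It is an assumption-laden illustration, not the code in train.py: the function names are hypothetical, embeddings are plain lists, and the weight is added to the logit of each anchor's own hard negative, which is why the argument is a log-weight (adding 0 to a logit is the same as multiplying its exponential by 1).

```python
import math
from typing import List, Optional

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity with a small floor on the norm to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / max(norm, 1e-8)


def contrastive_loss(
    anchors: List[Vector],
    positives: List[Vector],
    hard_negatives: Optional[List[Vector]] = None,
    temp: float = 0.05,
    hard_negative_weight: float = 0.0,
) -> float:
    """Mean cross-entropy where row i must pick positive i among all candidates."""
    losses = []
    for i, anchor in enumerate(anchors):
        # In-batch candidates: every positive in the batch, scaled by the temperature.
        logits = [cosine(anchor, p) / temp for p in positives]
        if hard_negatives is not None:
            for j, neg in enumerate(hard_negatives):
                # Assumption: only the anchor's own hard negative gets the log-weight.
                bonus = hard_negative_weight if j == i else 0.0
                logits.append(cosine(anchor, neg) / temp + bonus)
        # Numerically stable log-sum-exp.
        peak = max(logits)
        log_sum = peak + math.log(sum(math.exp(x - peak) for x in logits))
        losses.append(log_sum - logits[i])
    return sum(losses) / len(losses)
```

A smaller `temp` sharpens the softmax, so well-separated pairs drive the loss close to zero, while a positive `hard_negative_weight` makes each hard negative count more heavily in the denominator.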

# 4. Notes on the training process

## (1) Error reported after training finishes

```shell
    return comm.gather(inputs, ctx.dim, ctx.target_device)
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/site-packages/torch/nn/parallel/comm.py", line 230, in gather
    return torch._C._gather(tensors, dim, destination)
RuntimeError: Input tensor at index 1 has invalid shape [62, 62], but expected [62, 63]
```

- Cause analysis: https://github.com/princeton-nlp/SimCSE/issues/147

It seems to be a GPU communication-related error. Maybe try limiting the number of GPUs to 1 and try again.
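One way to try the suggestion above is to expose only a single GPU to the training process through the standard `CUDA_VISIBLE_DEVICES` environment variable. The sketch below is an assumption about how one might do that from Python; `single_gpu_env` and `run_training_on_one_gpu` are hypothetical helper names, and the script name is taken from the training section.

```python
import os
import subprocess
from typing import Dict, Optional


def single_gpu_env(gpu_id: str = "0", base_env: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Copy the environment and make only one GPU visible to CUDA programs."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = gpu_id
    return env


def run_training_on_one_gpu(script: str = "run_unsup_example.sh") -> None:
    """Launch the example script with a single visible GPU (run from the SimCSE directory)."""
    subprocess.run(["bash", script], env=single_gpu_env("0"), check=True)
```

Calling `run_training_on_one_gpu()` starts the example script so that the process sees only GPU 0; whether this removes the error in a given setup still has to be verified.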

## (2) Error while loading the dataset during training

```shell
Traceback (most recent call last):
  File "train.py", line 585, in <module>
    main()
  File "train.py", line 310, in main
    datasets = load_dataset(extension, data_files=data_files, cache_dir="./data/")
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/site-packages/datasets/load.py", line 591, in load_dataset
    path, script_version=script_version, download_config=download_config, download_mode=download_mode, dataset=True
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/site-packages/datasets/load.py", line 267, in prepare_module
    local_path = cached_path(file_path, download_config=download_config)
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/site-packages/datasets/utils/file_utils.py", line 343, in cached_path
    max_retries=download_config.max_retries,
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/site-packages/datasets/utils/file_utils.py", line 617, in get_from_cache
    raise ConnectionError("Couldn't reach {}".format(url))
ConnectionError: Couldn't reach https://raw.githubusercontent.com/huggingface/datasets/1.2.1/datasets/text/text.py
Traceback (most recent call last):
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/runpy.py", line 193, in _run_module_as_main
    "__main__", mod_spec)
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/site-packages/torch/distributed/launch.py", line 260, in <module>
    main()
  File "/home/huangjiayue/anaconda3/envs/jy-pharm_paper_search/lib/python3.6/site-packages/torch/distributed/launch.py", line 256, in main
    cmd=cmd)
```

Solution: create `text/text.py` under the SimCSE project directory, containing the loader script that could not be downloaded:

```python
"""
jy: this file was downloaded from:
    https://raw.githubusercontent.com/huggingface/datasets/1.2.1/datasets/text/text.py
"""
import logging
from dataclasses import dataclass

import pyarrow as pa

import datasets


logger = logging.getLogger(__name__)

FEATURES = datasets.Features(
    {
        "text": datasets.Value("string"),
    }
)


@dataclass
class TextConfig(datasets.BuilderConfig):
    """BuilderConfig for text files."""

    encoding: str = "utf-8"
    chunksize: int = 10 << 20  # 10MB


class Text(datasets.ArrowBasedBuilder):
    BUILDER_CONFIG_CLASS = TextConfig

    def _info(self):
        return datasets.DatasetInfo(features=FEATURES)

    def _split_generators(self, dl_manager):
        """The `data_files` kwarg in load_dataset() can be a str, List[str], Dict[str,str], or Dict[str,List[str]].

        If str or List[str], then the dataset returns only the 'train' split.
        If dict, then keys should be from the `datasets.Split` enum.
        """
        if not self.config.data_files:
            raise ValueError(f"At least one data file must be specified, but got data_files={self.config.data_files}")
        data_files = dl_manager.download_and_extract(self.config.data_files)
        if isinstance(data_files, (str, list, tuple)):
            files = data_files
            if isinstance(files, str):
                files = [files]
            return [datasets.SplitGenerator(name=datasets.Split.TRAIN, gen_kwargs={"files": files})]
        splits = []
        for split_name, files in data_files.items():
            if isinstance(files, str):
                files = [files]
            splits.append(datasets.SplitGenerator(name=split_name, gen_kwargs={"files": files}))
        return splits

    def _generate_tables(self, files):
        for file_idx, file in enumerate(files):
            batch_idx = 0
            with open(file, "r", encoding=self.config.encoding) as f:
                while True:
                    batch = f.read(self.config.chunksize)
                    if not batch:
                        break
                    batch += f.readline()  # finish current line
                    batch = batch.splitlines()
                    pa_table = pa.Table.from_arrays([pa.array(batch)], schema=pa.schema({"text": pa.string()}))
                    # Uncomment for debugging (will print the Arrow table size and elements)
                    # logger.warning(f"pa_table: {pa_table} num rows: {pa_table.num_rows}")
                    # logger.warning('\n'.join(str(pa_table.slice(i, 1).to_pydict()) for i in range(pa_table.num_rows)))
                    yield (file_idx, batch_idx), pa_table
                    batch_idx += 1
```
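The chunked reading pattern used in `_generate_tables` (read a fixed-size chunk, then finish the current line so that no sentence is split across batches) can be tried in isolation with the standard library only. The sketch below is a simplified illustration; `read_line_batches` is a hypothetical name, and it yields plain lists of lines instead of Arrow tables.

```python
from typing import Iterator, List


def read_line_batches(path: str, chunksize: int = 10 << 20, encoding: str = "utf-8") -> Iterator[List[str]]:
    """Yield batches of complete lines, reading roughly `chunksize` characters at a time."""
    with open(path, "r", encoding=encoding) as f:
        while True:
            batch = f.read(chunksize)
            if not batch:
                break
            # The chunk may stop in the middle of a line: read up to the next newline.
            batch += f.readline()
            yield batch.splitlines()
```

Because every batch ends on a line boundary, concatenating the batches gives back exactly the lines of the file, whatever chunk size is chosen.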

<a name="PpFvC"></a>

# 5. Walkthrough of the training process

<a name="qrkUa"></a>

## (1) unsupervised

```shell
# The original command is kept below inside a no-op here-document (":<<!" ... "!"), i.e. commented out.
:<<!
python train.py \
    --model_name_or_path bert-base-uncased \
    --train_file data/wiki1m_for_simcse.txt \
    --output_dir result/my-unsup-simcse-bert-base-uncased \
    --num_train_epochs 1 \
    --per_device_train_batch_size 64 \
    --learning_rate 3e-5 \
    --max_seq_length 32 \
    --evaluation_strategy steps \
    --metric_for_best_model stsb_spearman \
    --load_best_model_at_end \
    --eval_steps 125 \
    --pooler_type cls \
    --mlp_only_train \
    --overwrite_output_dir \
    --temp 0.05 \
    --do_train \
    --do_eval \
    --fp16 \
    "$@"
!

# jy: without a GPU environment, the --fp16 argument passed to train.py must be commented out
python train.py \
    --model_name_or_path bert-base-uncased \
    --train_file data/wiki1m_for_simcse-20w.txt \
    --output_dir result/my-unsup-simcse-bert-base-uncased \
    --num_train_epochs 1 \
    --per_device_train_batch_size 64 \
    --learning_rate 3e-5 \
    --max_seq_length 32 \
    --evaluation_strategy steps \
    --metric_for_best_model stsb_spearman \
    --load_best_model_at_end \
    --eval_steps 125 \
    --pooler_type cls \
    --mlp_only_train \
    --overwrite_output_dir \
    --temp 0.05 \
    --do_train \
    --do_eval \
    "$@"